CSC/ECE 506 Spring 2013/10a os: Difference between revisions


[https://docs.google.com/a/ncsu.edu/document/d/18ap34rWJImZjMAtGbANK9lZMon4NgwqDb5UY9Z8ksLM Link to write up]

'''Prefetching and Memory Consistency Models'''

== '''Previous articles''' ==

# [http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_dr Article 1]
# [http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_jp Article 2]

== '''Cache Misses'''==

Cache misses can be classified into four categories: conflict, compulsory, capacity [3], and coherence. <b>Conflict misses</b> are misses that would not occur if the cache were fully associative with LRU replacement. <b>Compulsory misses</b> are misses required in any cache organization because they are the first references to an instruction or piece of data. <b>Capacity misses</b> occur when the cache is too small to hold data between references. <b>Coherence misses</b> are misses that occur as a result of invalidations issued to preserve multiprocessor cache consistency. The number of compulsory and capacity misses can be reduced by prefetching techniques, such as increasing the size of the cache lines or prefetching blocks ahead of time.

== '''Prefetching'''==

Prefetching is a common technique to reduce the read miss penalty. It relies on predicting which blocks currently missing from the cache will be read in the future, and on bringing these blocks into the cache before the reference that would otherwise trigger the miss.

Prefetching can be achieved in two ways:

* Software Prefetching

* Hardware Prefetching

===Software and Hardware Prefetching<ref>http://www.roguewave.com/portals/0/products/threadspotter/docs/2011.2/manual_html_linux/manual_html/ch_intro_prefetch.html</ref>===

With <b>software prefetching</b> the programmer or compiler inserts prefetch instructions into the program. These are instructions that initiate a load of a cache line into the cache, but do not stall waiting for the data to arrive. A critical property of a prefetch instruction is the time from when the prefetch is executed to when the data is used.

If the prefetch is too close to the instruction using the prefetched data, the cache line will not have had time to arrive from main memory or the next cache level, and the instruction will stall. This reduces the effectiveness of the prefetch.

If the prefetch is too far ahead of the instruction using the prefetched data, the prefetched cache line may already have been evicted before the data is actually used. The instruction using the data will then cause another fetch of the cache line and have to stall. This not only eliminates the benefit of the prefetch instruction, but also introduces additional cost, since the cache line is now fetched twice from main memory or the next cache level. This increases the memory bandwidth requirement of the program.
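The timing trade-off described above can be sketched in C. This is a minimal illustration, not code from the cited manual: it assumes a GCC- or Clang-compatible compiler that provides the <code>__builtin_prefetch</code> builtin, and the name <code>PREFETCH_DISTANCE</code> and its value of 16 elements are hypothetical choices that would have to be tuned per machine.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical tuning knob: how many elements ahead of the current
   access the prefetch is issued. Too small and the line arrives late;
   too large and the line may be evicted before it is used. */
#define PREFETCH_DISTANCE 16

/* Sum an array while issuing a non-blocking prefetch a fixed distance
   ahead of the element being read. */
long sum_with_prefetch(const long *data, size_t n)
{
    assert(data != NULL || n == 0);
    long total = 0;
    for (size_t i = 0; i < n; i++) {
        if (i + PREFETCH_DISTANCE < n) {
            /* Arguments: address, 0 = prefetch for reading,
               3 = high temporal locality (keep in all cache levels). */
            __builtin_prefetch(&data[i + PREFETCH_DISTANCE], 0, 3);
        }
        total += data[i];
    }
    return total;
}
```

The prefetch never changes the result of the loop; it only hints that a line will be needed soon, which is why the distance can be tuned freely without affecting correctness.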

Many modern processors implement [http://suif.stanford.edu/papers/mowry92/subsection3_5_2.html hardware prefetching]. This means that the processor monitors the memory access pattern of the running program, tries to predict what data the program will access next, and prefetches that data. There are a few different variants of how this can be done.

1. A <i>stream</i> prefetcher looks for streams where a sequence of consecutive cache lines is accessed by the program. When such a stream is found, the processor starts prefetching the cache lines ahead of the program's accesses.

2. A [http://www.bergs.com/stefan/general/general_slides/sld011.htm stride] prefetcher looks for instructions that make accesses with regular strides, which do not necessarily have to be to consecutive cache lines. When such an instruction is detected, the processor tries to prefetch the cache lines it will access ahead of it.

3. An [http://www.techarp.com/showfreebog.aspx?lang=0&bogno=282 adjacent cache line prefetcher] automatically fetches the cache lines adjacent to the ones being accessed by the program. This can be used to mimic the behaviour of a larger cache line size in a cache level without actually having to increase the line size.

====Software vs. Hardware Prefetching<ref>http://software.intel.com/en-us/articles/how-to-choose-between-hardware-and-software-prefetch-on-32-bit-intel-architecture</ref>====

Software prefetching has the following characteristics:

* Can handle irregular access patterns, which do not trigger the hardware prefetcher.

* Can use less bus bandwidth than hardware prefetching.

* Software prefetches must be added to new code, and they do not benefit existing applications.

Hardware prefetching has the following characteristics:

* Works with existing applications.

* Requires regular access patterns.

* Incurs a start-up penalty before the hardware prefetcher triggers, and issues extra fetches after the array finishes.

* Will not prefetch across a 4K page boundary (i.e., the program would have to initiate demand loads for the new page before the hardware prefetcher will start prefetching from the new page).

===Hardware-based Prefetching Techniques<ref>http://suif.stanford.edu/papers/mowry92/subsection3_5_2.html</ref><ref>http://static.usenix.org/event/fast07/tech/full_papers/gill/gill_html/node4.html</ref>===

Hardware-based techniques use previous memory accesses to dynamically predict which memory addresses to prefetch. These techniques can be classified into two main classes:

* On-chip Schemes: Based on the addresses required by the processor in all data references.

* Off-chip Schemes: Based on the addresses that result in L1 cache misses.

Some of the most commonly used prefetching techniques are discussed below.

The most common prefetching approach is to perform sequential readahead. The simplest form is One Block Lookahead (OBL), where we prefetch one block beyond the requested block. OBL can be of three types:

1).''always prefetch'' - prefetch the next block on each reference

2).''prefetch on miss'' - prefetch the next block only on a miss

3).''tagged prefetch'' - prefetch the next block only if the referenced block is accessed for the first time.
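The difference between the three OBL policies can be illustrated with a small C simulation. This is a sketch under simplifying assumptions that are not in the cited sources: the cache never evicts anything, prefetched blocks arrive instantly, and the names <code>count_misses</code>, <code>obl_policy</code>, and <code>NUM_BLOCKS</code> are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NUM_BLOCKS 64   /* hypothetical size of the block address space */

enum obl_policy { ALWAYS_PREFETCH, PREFETCH_ON_MISS, TAGGED_PREFETCH };

/* Count demand misses for a trace of block numbers under one OBL policy.
   Assumes an idealized cache that is large enough to never evict. */
int count_misses(enum obl_policy policy, const int *trace, int len)
{
    bool present[NUM_BLOCKS + 1]; /* +1 so block b+1 is always in range */
    bool tagged[NUM_BLOCKS + 1];  /* prefetched but not yet referenced */
    memset(present, 0, sizeof present);
    memset(tagged, 0, sizeof tagged);

    int misses = 0;
    for (int i = 0; i < len; i++) {
        int b = trace[i];
        assert(b >= 0 && b < NUM_BLOCKS);

        bool miss = !present[b];
        /* First access: either a demand miss or the first touch of a
           block that was brought in by a prefetch. */
        bool first_use = miss || tagged[b];
        if (miss) {
            misses++;
            present[b] = true;
        }
        tagged[b] = false;

        bool do_prefetch = (policy == ALWAYS_PREFETCH)
                        || (policy == PREFETCH_ON_MISS && miss)
                        || (policy == TAGGED_PREFETCH && first_use);
        if (do_prefetch && !present[b + 1]) {
            present[b + 1] = true;
            tagged[b + 1] = true;
        }
    }
    return misses;
}
```

On a purely sequential trace of blocks 0 to 7, this model reports 1 miss for always prefetch, 4 for prefetch on miss (every other block), and 1 for tagged prefetch. Tagged prefetch matches always prefetch on this trace while issuing a prefetch only on the first touch of each block.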

P-Block Lookahead extends the idea of OBL by prefetching P blocks instead of one, where P is also referred to as the degree of prefetch. A variation of the P-Block Lookahead algorithm dynamically adapts the degree of prefetch to the workload.

A per-stream scheme selects the appropriate degree of prefetch on each miss based on a prefetch degree selector (PDS) table. For the case where cache is abundant, Infinite-Block Lookahead has also been studied.

Stride-based prefetching has also been studied, mainly for processor caches, where strides are detected based on information provided by the application, a lookahead into the instruction stream, or a reference prediction table indexed by the program counter. Sequential prefetching can be considered a better choice because most strides lie within the block size and it can also exploit locality.
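A reference prediction table indexed by the program counter can be sketched as follows. This is a simplified illustration rather than the design from the cited papers: the table size, the direct-mapped indexing, the rule that a stride must be seen twice in a row before it is trusted, and all identifiers (<code>rpt_entry</code>, <code>rpt_access</code>, <code>RPT_ENTRIES</code>) are assumptions made for the example.

```c
#include <stdint.h>

#define RPT_ENTRIES 64  /* hypothetical table size */

struct rpt_entry {
    uintptr_t pc;        /* address of the load/store instruction */
    uintptr_t last_addr; /* last data address that instruction touched */
    intptr_t  stride;    /* most recently observed stride */
    int       confirmed; /* 1 once the same stride was seen twice in a row */
    int       valid;
};

static struct rpt_entry rpt[RPT_ENTRIES];

/* Record one memory access made by the instruction at `pc`.
   Returns the address to prefetch, or 0 if there is no confident
   prediction yet. */
uintptr_t rpt_access(uintptr_t pc, uintptr_t addr)
{
    struct rpt_entry *e = &rpt[pc % RPT_ENTRIES];

    if (!e->valid || e->pc != pc) {
        /* First time this instruction is seen: allocate the entry. */
        e->pc = pc;
        e->last_addr = addr;
        e->stride = 0;
        e->confirmed = 0;
        e->valid = 1;
        return 0;
    }

    intptr_t stride = (intptr_t)(addr - e->last_addr);
    e->confirmed = (stride != 0 && stride == e->stride);
    e->stride = stride;
    e->last_addr = addr;

    return e->confirmed ? addr + (uintptr_t)stride : 0;
}
```

For an instruction that touches addresses 1000, 1032, and 1064, the sketch stays silent for the first two accesses and predicts 1096 on the third; an access that breaks the pattern resets the confidence.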

History-based prefetching has been proposed in various forms. A history-based table can be used to predict the next pages to prefetch. In a variant of history-based prefetching, multiple memory predictions are prefetched at the same time. Data compression techniques have also been applied to predict future access patterns.

Most commercial data storage systems use very simple prefetching schemes such as sequential prefetching. This is because only sequential prefetching can achieve a high long-term predictive accuracy in data servers. Strides that cross page or track boundaries are uncommon in workloads, so stride detection is not worth implementing there. History-based prefetching suffers from low predictive accuracy and from the cost of the extra reads it places on an already bottlenecked I/O system. The data storage system cannot use most hardware-initiated or software-initiated prefetching techniques, as the applications typically run on external hardware.

=='''Prefetching Improvements'''==

Fortunately, a number of techniques have evolved to address many of the concerns about prefetching, thus making it significantly more effective.

==='''Cache Sizing'''===

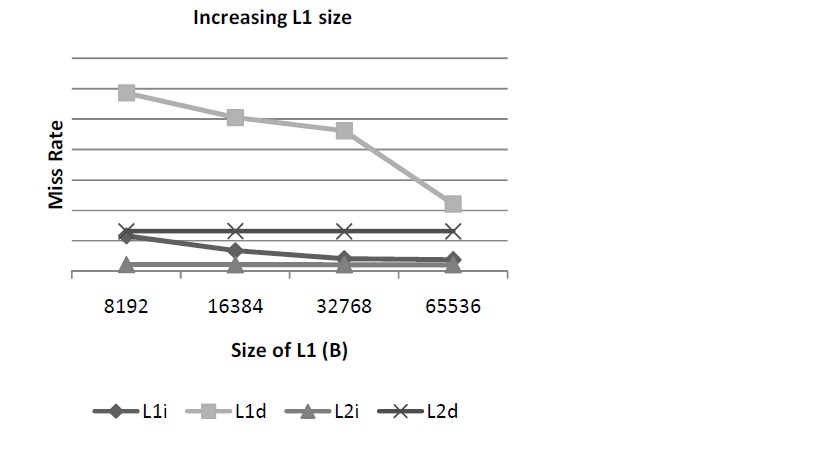

Increasing the dimensions of the cache greatly improves the performance of prefetching. This can be done either by increasing the cache size itself or by increasing set associativity. A small cache is a significant problem for prefetching because conflict misses increase dramatically. Any benefit derived from having fewer cache lookup misses is negated by this higher incidence of conflict misses. One study assembled benchmarks for a SPARC processor that used prefetching and measured cache misses for various cache sizes. With 16K and 32K caches, prefetching reduces cache misses to fairly close to 0, while the number of misses without prefetching is rather high.

For example, the following study of a bioinformatics application showed the effect of prefetching on cache misses as a function of the size of the L1 cache. As can be seen, cache size has a major impact on the miss rate and, subsequently, on the performance of the system.<ref>http://www.nikolaylaptev.com/master/classes/cs254.pdf</ref>

[[File:Cachesize.jpg|819px|thumb|center|Figure 3.1-Effect of prefetching cache misses]]

==='''Improved Prefetch Timing'''===

Improving prefetch timing algorithms can also significantly improve performance and prevent data from being prefetched too late or too early to be useful. One possibility is to implement a technique called Aggressive Prefetching<ref>http://static.usenix.org/events/hotos05/prelim_papers/papathanasiou/papathanasiou_html/paper.html</ref>. Traditionally, prefetch algorithms have only brought in incremental amounts of data for which confidence is high. The more aggressive approach, however, searches more deeply in the reference stream to bring in a wider array of data that may be used in the near future. This does, however, require a more resource-rich machine to avoid negatively impacting performance.

Greater amounts of processing power and storage have made this more aggressive approach possible. While older architectures with slower processors and less memory might have had issues with larger volumes of data being prefetched, this is much less of a concern now. Varying performance across devices also changes the need to be conservative in prefetching. Aggressive prefetching can take advantage of some latencies being higher than others, whereas older, more conservative architectures had more consistent levels of bandwidth throughout.

==='''Memory Pattern Recognition'''===

Effective memory pattern recognition algorithms can go a long way towards avoiding unnecessary prefetches (i.e., of values that will not be used within a useful timeframe). One example is the stride technique. For example, memory addresses that are sequential in nature, like those of an array, would all be brought in together as a unit, since most or all of these elements are likely to be used. This is an improvement over a more conservative prefetcher that may just assume memory located together will be used. The more conservative version has been known to have a much higher incidence of bringing in unnecessary blocks of data.

Another variation of this is the linked memory reference pattern. Linked lists and tree structures are most often associated with this type. Generally, occurrences of code similar to ptr = ptr->next are considered as one structure and subsequently brought into memory together.<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref>

For example, instead of:

 while (p) {
     work(p);
     p = next(p);
 }

the linked memory reference pattern may work in the following way:

 while (p) {
     prefetch(next(p));
     work(p);
     p = next(p);
 }

Here, the node after p is requested ahead of time, so it is likely to be closer to the processor when the next iteration uses it. This can be more efficient than the initial loop.
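A concrete version of the second loop might look like the sketch below. It is only an illustration: the <code>node</code> layout and the function name <code>sum_list</code> are hypothetical, the summation stands in for <code>work(p)</code>, and <code>__builtin_prefetch</code> assumes a GCC- or Clang-compatible compiler.

```c
#include <stddef.h>

struct node {
    int value;
    struct node *next;
};

/* Walk a singly linked list, requesting the next node before
   processing the current one so the fetch overlaps with the work. */
long sum_list(const struct node *p)
{
    long total = 0;
    while (p) {
        if (p->next) {
            /* Non-blocking hint: read access, high temporal locality. */
            __builtin_prefetch(p->next, 0, 3);
        }
        total += p->value;   /* stands in for work(p) */
        p = p->next;
    }
    return total;
}
```

The benefit depends on how much work is done per node. If the per-node work is very short, the prefetch is issued too close to the use to hide much of the miss latency.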

==='''Markov Prefetcher'''===

This particular prefetch technique<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref> is built around the idea of remembering past sequences of cache misses and using this memory to bring in the subsequent misses of a sequence ahead of time. Generally, a rather large table containing previous miss addresses is used. This table is maintained in a similar manner to a cache. The sequences stored in the table are then used to predict future miss sequences, which become prefetch candidates.
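The table-based idea can be sketched in C as follows. This is a deliberately reduced model and not the design from the cited paper: each entry remembers only one successor miss address, the table is direct-mapped, and the identifiers (<code>markov_entry</code>, <code>markov_on_miss</code>, <code>MARKOV_ENTRIES</code>) are hypothetical.

```c
#include <stdint.h>

#define MARKOV_ENTRIES 256  /* hypothetical table size */

struct markov_entry {
    uint64_t miss_addr;  /* tag: the miss address this entry describes */
    uint64_t next_addr;  /* miss address that followed it last time */
    int      valid;
};

static struct markov_entry markov_table[MARKOV_ENTRIES];
static uint64_t prev_miss;
static int have_prev;

/* Call on every cache miss with the missing block address.
   Returns the predicted next miss address (a prefetch candidate),
   or 0 if the table holds no prediction for this address. */
uint64_t markov_on_miss(uint64_t addr)
{
    /* Learn: the previous miss was followed by this one. */
    if (have_prev) {
        struct markov_entry *p = &markov_table[prev_miss % MARKOV_ENTRIES];
        p->miss_addr = prev_miss;
        p->next_addr = addr;
        p->valid = 1;
    }
    prev_miss = addr;
    have_prev = 1;

    /* Predict: what followed this address the last time it missed? */
    const struct markov_entry *e = &markov_table[addr % MARKOV_ENTRIES];
    return (e->valid && e->miss_addr == addr) ? e->next_addr : 0;
}
```

After the miss sequence 10, 50, 30 has been observed once, a second miss on 10 yields the prediction 50, and a miss on 50 yields 30. Keeping several successors per entry would let the prefetcher issue multiple candidates at once.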

==='''Stealth Prefetching'''===

A more modern and innovative technique, stealth prefetching<ref>http://arnetminer.org/publication/stealth-prefetching-53889.html</ref>, attempts to deal with issues surrounding the increase in interconnect bandwidth demand and increased memory latency. It also helps to avoid the problem of using bandwidth to prematurely access shared data that will later result in state downgrades. Basically, this technique tracks regions of memory at a coarse granularity to identify which regions are heavily used by multiple processors and which are not. Lines that are not being shared by multiple processors are more aggressively brought nearer to the local processor, improving performance by as much as 20%. With the increased use of fast multiprocessors, this alleviates some of the bandwidth bottlenecks.

=='''Memory Consistency models'''<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_dr</ref><ref>http://parasol.tamu.edu/~rwerger/Courses/654/consistency1.pdf</ref>==

A basic requirement for the implementation of a shared memory multiprocessor system is that the reads and writes to any memory location are correctly ordered as seen by every processor that accesses it. This requirement is called the memory consistency requirement, and memory consistency models specify how it is met. The models apply both at the machine code interface and at the high-level language interface, and they must be respected when high-level code is translated to the machine code that is directly executed. In this way, consistency models make memory operations predictable.

==='''An overview of memory consistency models'''===

We can classify consistency models into those that conform to the programmer’s intuition and those that do not. The programmer’s implicit expectation about the ordering of memory accesses is that they follow the order given in the program and that they occur instantaneously, i.e. atomically. These expectations are completely captured by the [http://en.wikipedia.org/wiki/Sequential_consistency ''Sequential Consistency''] model, which was defined by [http://en.wikipedia.org/wiki/Leslie_Lamport Leslie Lamport] as follows:

“''A multiprocessor is sequentially consistent if the result of any execution is the same as if the operations of all processors (cores) were executed in some sequential order, and the operations of each individual processor (core) appear in this sequence in the order specified by its program.''”

[[Image:3.1.jpg|645px|thumb|right| Figure 4.1 An overview of the memory consistency models]]

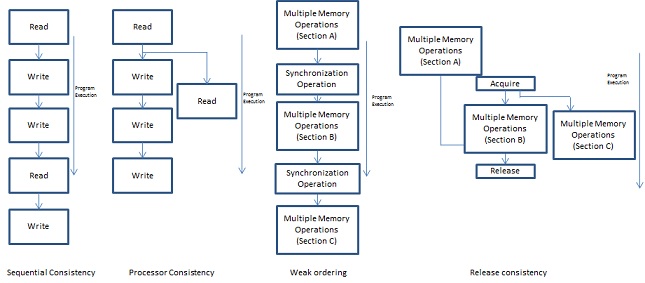

This model restricts the reordering of any read or store instructions and thus restricts compiler optimization techniques. Models that allow operations to be reordered are called relaxed consistency models. Because reads and writes can be reordered, these models deviate from the programmer’s expectation. Some of the relaxed consistency models include processor consistency, weak ordering, and release consistency.

''Processor consistency model (PC)'' - The processor consistency model relaxes the requirement that all older stores complete before a load takes place, i.e. a younger load can complete before the store preceding it has completed.

''Weak ordering (WO)'' - Weak ordering groups read and write instructions into critical sections. Reordering the critical sections themselves is not allowed by this model, but reordering of instructions within a section is allowed. The critical sections are separated by synchronization events, which can be implemented in the form of locks, barriers, or post-wait pairs. The requirements for the weak ordering model to work are therefore:

* The programmer has clearly defined the critical sections and has implemented the synchronization of the critical sections explicitly.

* All read and write operations before a synchronization event have been completed.

* Loads and stores that follow a critical section cannot be performed before the section completes.

''Release consistency model'' - This model classifies synchronization events as acquire and release operations. An acquire operation allows a thread to enter a critical section, while a thread leaves the critical section after a release operation. Hence an acquire operation is not concerned with older reads and writes, as that requirement is taken care of by the release operation; similarly, a release operation is not concerned with younger reads and writes, as those are handled by the acquire operation. We can therefore rewrite the requirements for weak ordering to follow the release consistency model as follows:

* The programmer has clearly defined the critical sections and has implemented the synchronization of the critical sections explicitly.

* All reads and writes before a release operation should be completed.

* An acquire operation related to a critical section should be completed before any younger read or write is handled.

* The acquire and release operations should be atomic with respect to each other.

A core difference between weak ordering and release consistency is that in release consistency overlapping of critical sections is possible.
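The acquire and release roles can be illustrated with C11 atomics. C11 is not discussed in the article; it is used here only because its <code>memory_order_release</code> and <code>memory_order_acquire</code> orderings follow the same idea. The variable and function names are hypothetical, and the sketch shows a simple flag hand-off rather than a full lock.

```c
#include <stdatomic.h>

static int shared_data;   /* ordinary data guarded by the flag */
static atomic_int ready;  /* synchronization variable, initially 0 */

/* Release side: every older write must be visible before the flag is. */
void producer(int value)
{
    shared_data = value;
    atomic_store_explicit(&ready, 1, memory_order_release);
}

/* Acquire side: younger reads may not be performed before the acquire.
   Returns 1 and stores the data in *out if the flag was observed,
   otherwise returns 0 and leaves *out untouched. */
int consumer_try(int *out)
{
    if (atomic_load_explicit(&ready, memory_order_acquire)) {
        *out = shared_data;
        return 1;
    }
    return 0;
}
```

Note the asymmetry: the release constrains only the accesses before it, and the acquire constrains only the accesses after it. Accesses outside that window remain free to be reordered, which is what permits more overlap than weak ordering.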

Figure 4.1 shows how a sequence of instructions will be executed under a particular consistency model. Compared to sequential consistency, relaxed consistency models provide faster execution. If we can also prefetch while conforming to the consistency model, the result is an even higher speedup.

=='''Prefetching under consistency models'''<ref>http://parasol.tamu.edu/~rwerger/Courses/654/pref1.pdf</ref>==

Prefetching can also be classified as binding or non-binding. In binding prefetching, the value of the prefetched data is bound at the instant it is prefetched and is released only when the processor reads it later via a regular read. Other processors cannot modify the data during this period. In non-binding prefetching, other processors can modify the data until the actual regular read is done. An advantage of non-binding prefetching is that it does not affect the correctness of any consistency model.

Let’s see the improvement in execution time from prefetching by considering the sets of instructions given in examples 5.1 and 5.2. For both sets, the cache hit latency is 1 cycle and the cache miss latency is 100 cycles. An invalidation-based cache coherence scheme is used in both examples.

Example 5.1:

 lock L     (miss)
 write A    (miss)
 write B    (miss)
 unlock L   (hit)

Example 5.2:

 lock L     (miss)
 read C     (miss)
 read D     (hit)
 read E[D]  (miss)
 unlock L   (hit)

In sequential consistency (SC), reordering of operations is not allowed. Hence for example 5.1, the three cache misses and one cache hit take a total of 301 cycles to execute. Under relaxed consistency (RC), the writes can be pipelined within the critical region bounded by the lock, so the latency of the second write overlaps with that of the first and the total execution time is 202 cycles. Prefetching improves the performance of systems following either SC or RC. With prefetching, we assume that lock acquisition will succeed and prefetch the data for both writes. When lock acquisition actually succeeds, the data for both writes is already in the cache. Hence both writes hit in the cache, and the total number of cycles required to execute the set is reduced to 103 for both consistency models.

A case where prefetching is less effective is given in example 5.2. The read instructions contain a data dependency: the third read, E[D], depends on the value returned by the second read, D. Under SC, the instructions take 302 cycles to perform. Under RC, they take 203 cycles, because the miss on E[D] is pipelined with the preceding miss within the critical section. With prefetching, the instructions take 203 cycles under SC and 202 cycles under RC. The smaller improvement is attributed to the data dependency between D and E (under SC) and between lock acquisition and D (under RC). Hence prefetching yields little or no improvement in execution time in such cases, most visibly under RC.
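The cycle counts quoted above can be reproduced with simple sums, using 100 cycles for a miss and 1 cycle for a hit or for an access whose latency is hidden. The totals come from the text; the term-by-term breakdown is one reading that is consistent with those totals and should be treated as an assumption, particularly for the relaxed consistency cases.

```latex
\begin{align*}
\text{Example 5.1:}\quad
T_{SC}            &= 100 + 100 + 100 + 1 = 301 \\
T_{RC}            &= 100 + 100 + 1 + 1 = 202 \\
T_{SC/RC,\,\text{prefetch}} &= 100 + 1 + 1 + 1 = 103 \\[6pt]
\text{Example 5.2:}\quad
T_{SC}            &= 100 + 100 + 1 + 100 + 1 = 302 \\
T_{RC}            &= 100 + 100 + 1 + 1 + 1 = 203 \\
T_{SC,\,\text{prefetch}} &= 100 + 1 + 1 + 100 + 1 = 203 \\
T_{RC,\,\text{prefetch}} &= 100 + 1 + 100 + 1 = 202
\end{align*}
```

In example 5.2 the 100-cycle term for E[D] survives prefetching because its address is not known until D has been read, which is why the gain there is much smaller than in example 5.1.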

=='''Disadvantages of Prefetching'''<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_dr</ref>==

* Increased complexity and overhead of handling the prefetching algorithms. Performance must improve significantly to counterbalance this overhead and complexity, or the effort is not worthwhile.

* With multiple cores, prefetch requests can originate from a variety of different cores. This puts additional stress on memory, which must handle not only regular requests but also prefetches from different sources, greatly increasing the overhead and complexity of the logic.

* If prefetched data is stored in the data cache, then cache conflicts, or cache pollution, can become a significant burden.

=='''Conclusion'''==

This article dealt with the concept of prefetching and its hardware and software implementations. It gave a brief overview of a few of the memory consistency models implemented to date and of how prefetching can affect execution time across different consistency models. Overall, prefetching can genuinely help lower execution time if out-of-order execution does not take place. However, it cannot help in overlapping memory accesses; the processor will still perform the loads in the order determined by the program. The only difference is that it will get the data from the cache rather than from main memory, because the data has been prefetched.

=='''Quiz'''==

1. In example 5.1, if we reorder the writes within the critical section so that write B occurs before write A, will this result in lower execution time under either sequential consistency (SC) or relaxed consistency (RC)?

# Yes in both models
# Yes in SC, No in RC
# No in SC, Yes in RC
# No in both SC and RC.

2. ___________ model categorizes the synchronization operation into Acquire and Release.

# Sequential Consistency
# Release Consistency
# Weak Ordering
# Processor Consistency.

3. Which of these is <i>not</i> a type of hardware implementation of a prefetcher?

# Predication
# Stride
# Stream
# Adjacent Cache line prefetcher

=References=

<references/>

Latest revision as of 23:01, 3 April 2013

Prefetching and Memory Consistency Models

Previous articles

Cache Misses

Cache misses can be classified into four categories: conflict, compulsory, capacity [3], and coherence. Conflict misses are misses that would not occur if the cache was fully-associative and had LRU replacement. Compulsory misses are misses required in any cache organization because they are the first references to an instruction or piece of data. Capacity misses occur when the cache size is not sufficient to hold data between references. Coherence misses are misses that occur as a result of invalidation to preserve multiprocessor cache consistency.The number of compulsory and capacity misses can be reduced by employing prefetching techniques such as by increasing the size of the cache lines or by prefetching blocks ahead of time.

Prefetching

Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss.

Prefetching can be achieved in two ways:

- Software Prefetching

- Hardware Prefetching

Software and Hardware Prefetching<ref>http://www.roguewave.com/portals/0/products/threadspotter/docs/2011.2/manual_html_linux/manual_html/ch_intro_prefetch.html</ref>

With software prefetching the programmer or compiler inserts prefetch instructions into the program. These are instructions that initiate a load of a cache line into the cache, but do not stall waiting for the data to arrive.A critical property of prefetch instructions is the time from when the prefetch is executed to when the data is used. If the prefetch is too close to the instruction using the prefetched data, the cache line will not have had time to arrive from main memory or the next cache level and the instruction will stall. This reduces the effectiveness of the prefetch.If the prefetch is too far ahead of the instruction using the prefetched data, the prefetched cache line will instead already have been evicted again before the data is actually used. The instruction using the data will then cause another fetch of the cache line and have to stall. This not only eliminates the benefit of the prefetch instruction, but introduces additional costs since the cache line is now fetched twice from main memory or the next cache level. This increases the memory bandwidth requirement of the program.

Many modern processors implement hardware prefetching. This means that the processor monitors the memory access pattern of the running program and tries to predict what data the program will access next and prefetches that data. There are few different variants of how this can be done.

1. A stream prefetcher looks for streams where a sequence of consecutive cache lines are accessed by the program. When such a stream is found the processor starts prefetching the cache lines ahead of the program's accesses.

2. A stride prefetcher looks for instructions that make accesses with regular strides, that do not necessarily have to be to consecutive cache lines. When such an instruction is detected the processor tries to prefetch the cache lines it will access ahead of it.

3. An adjacent cache line prefetcher automatically fetches adjacent cache lines to ones being accessed by the program. This can be used to mimic behaviour of a larger cache line size in a cache level without actually having to increase the line size.

Software vs. Hardware Prefetching<ref>http://software.intel.com/en-us/articles/how-to-choose-between-hardware-and-software-prefetch-on-32-bit-intel-architecture</ref>

Software prefetching has the following characteristics:

- Can handle irregular access patterns, which do not trigger the hardware prefetcher.

- Can use less bus bandwidth than hardware prefetching.

- Software prefetches must be added to new code, and they do not benefit existing applications.

The characteristics of the hardware prefetching are as follows :

- Works with existing applications

- Requires regular access patterns

- Start-up penalty before hardware prefetcher triggers and extra fetches after array finishes.

- Will not prefetch across a 4K page boundary (i.e., the program would have to initiate demand loads for the new page before the hardware prefetcher will start prefetching from the new page).

Hardware-based Prefetching Techniques<ref>http://suif.stanford.edu/papers/mowry92/subsection3_5_2.html</ref><ref>http://static.usenix.org/event/fast07/tech/full_papers/gill/gill_html/node4.html</ref>

Based on the previous memory access, the hardware based techniques can be employed to dynamically predict the memory address to prefetch. These techniques can be classified into two main classes:

- On-chip Schemes: Based on the addresses required by the processor in all data references.

- Off-chip Schemes: Based on the addresses that result in L1 cahce misses

Some of the most commonly used Prefetching techniques are discussed below. The most common prefetching approach is to perform sequential readahead. The simplest form is One Block Lookahead (OBL), where we prefetch one block beyond the requested block. OBL can be of three types:

1).always prefetch - prefetch the next block on each reference

2).prefetch on miss - prefetch the next block only on a miss

3).tagged prefetch - prefetch the next block only if the referenced block is accessed for the first time.

P-Block Lookahead extends the idea of OBL by prefetching P blocks instead of one, where is also referred to as the degree of prefetch. A variation of the P-Block Lookahead algorithm which dynamically adapts the degree of prefetch for the workload.

A per stream scheme selects the appropriate degree of prefetch on each miss based on a prefetch degree selector (PDS) table. For the case where cache is abundant, Infinite-Block Lookahead has also been studied.

Stride-based prefetching has also been studied mainly for processor caches where strides are detected based on information provided by the application, a lookahead into the instruction stream, or a reference prediction table indexed by the program counter. Sequential prefetching can be consider as a better choice because most strides lie within the block size and it can also exploit locality. History-based prefetching has been proposed in various forms. A history-based table can be used to predict the next pages to prefetch. In a variant of history based prefetching, multiple memory predictions are prefetched at the same time. Data compression techniques have also been applied to predict future access patterns.

Most commercial data storage systems use very simple prefetching schemes like sequential prefetching. This is because only sequential prefetching can achieve a high long-term predictive accuracy in data servers. Strides that cross page or track boundaries are uncommon in workloads and therefore not worth implementing. History-based prefetching suffers from low predictive accuracy and the associated cost of the extra reads on an already bottlenecked I/O system. The data storage system cannot use most hardware-initiated or software-initiated prefetching techniques as the applications typically run on external hardware.

Prefetching Improvements

Fortunately a number of techniques have evolved to address many of the concerns about prefetching, thus making it significantly more effective.

Cache Sizing

Increasing the dimensions of the cache greatly improves the performance of prefetching. This can be done either through increasing the cache size itself or increasing set associativity. A small cache size is a significant problem with prefetching because conflict misses increase dramatically. Any benefit you might derive from having fewer cache lookup misses is negated by this higher incidence of conflict miss. The following study assembled some benchmarks for a given SPARC processor that utilized prefetching. The following graph shows various levels of cache misses based on various cache sizes. With 16K and 32K cache sizes, prefetching reduces cache misses to fairly close to 0, which the number of misses without prefetching is rather high.

For example, the following study of a BioInformatics application showed the effects of prefetching cache misses based on the size of the L1 cache. As can be seen, cache size has a major impact on the miss rate and, subsequently, performance in the system.<ref>http://www.nikolaylaptev.com/master/classes/cs254.pdf</ref>

Improved Prefetch Timing

Improving prefetch timing algorithms can also significantly improve performance and prevent data from being prefetched too late or too early to be useful. One possibility in this case is to implement a technique called Aggressive Prefetching<ref>http://static.usenix.org/events/hotos05/prelim_papers/papathanasiou/papathanasiou_html/paper.html</ref>. Traditionally, prefetch algorithms have brought in only incremental amounts of data, chosen with a high level of confidence. The more aggressive approach, however, searches more deeply in the reference stream to bring in a wider array of data that may be used in the near future. This does, however, require a more resource-intensive machine to avoid negatively impacting performance.

Greater amounts of processing power and storage have made this more aggressive approach possible. While older architectures with slower processors and less memory might have had issues with larger volumes of data being prefetched, this is much less of a concern now. Varying performance across a variety of devices also reduces the need to be conservative in prefetching. Aggressive prefetching can take advantage of some latencies being higher than others, whereas older, more conservative architectures had more consistent levels of bandwidth throughout.

Memory Pattern Recognition

Effective memory pattern recognition algorithms can go a long way towards resolving issues with prefetching values unnecessarily (i.e., ones that might not be used in a sufficient timeframe). One example is the stride technique. For example, memory addresses that are sequential in nature, like the elements of an array, would all be brought in together as a unit, since more than likely most or all of these elements will be used. This is an improvement over a more conservative prefetcher that may just assume memory located together will be used. The more conservative version has been known to have a much higher incidence of bringing in unnecessary blocks of data.
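To make the stride idea concrete, here is a minimal sketch of a reference prediction table indexed by the program counter, as mentioned earlier in this article. The class name, the method name, and the rule of predicting only after the same non-zero stride has been seen twice in a row are assumptions made for illustration; real hardware tables are bounded in size and usually track a confidence state per entry.

```python
class StridePrefetcher:
    """Toy reference prediction table: one entry per load instruction (pc)."""

    def __init__(self):
        # pc -> (last address seen, last stride observed); unbounded for simplicity
        self.table = {}

    def access(self, pc, addr):
        """Record an access and return an address to prefetch, or None."""
        prediction = None
        if pc in self.table:
            last_addr, last_stride = self.table[pc]
            stride = addr - last_addr
            # Predict only when the same non-zero stride repeats.
            if stride != 0 and stride == last_stride:
                prediction = addr + stride
            self.table[pc] = (addr, stride)
        else:
            self.table[pc] = (addr, 0)
        return prediction


# One load instruction walking an array of 8-byte elements.
prefetcher = StridePrefetcher()
predictions = [prefetcher.access(pc=0x40, addr=a) for a in (1000, 1008, 1016, 1024)]
```

In this trace the first two accesses only train the table; from the third access onward the sketch proposes the next element of the array.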

Another variation of this is the linked memory reference pattern. Linked lists and tree structures are most often associated with this type. Generally, occurrences of code similar to ptr = ptr->next are considered as one structure and subsequently brought into memory together.<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref>

For example, instead of:

while (p) {

work(p)

p = next(p)

}

the linked memory reference pattern may work in the following way:

while (p) {

prefetch(next(p))

work(p)

p = next(p)

}

Here, the node that follows p is brought in ahead of time, so it is already closer to the processor when the loop advances to it. This is more efficient than the initial loop.
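The effect of the second loop can be illustrated with a toy simulation. This is only a sketch under strong assumptions: the "cache" is a plain set, it never evicts, and every prefetch is assumed to complete before the node is used. The `Node` class and `traverse` function are hypothetical names introduced for the example.

```python
class Node:
    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node


def traverse(head, use_prefetch):
    """Walk the list and count demand misses in a toy cache model."""
    cache = set()            # ids of nodes assumed to be resident in the cache
    demand_misses = 0
    p = head
    while p is not None:
        if use_prefetch and p.next is not None:
            cache.add(id(p.next))        # models prefetch(next(p))
        if id(p) not in cache:           # models work(p) touching the node
            demand_misses += 1
            cache.add(id(p))
        p = p.next
    return demand_misses


# Build an 8-node list: 0 -> 1 -> ... -> 7
head = None
for v in reversed(range(8)):
    head = Node(v, head)

misses_plain = traverse(head, use_prefetch=False)
misses_prefetch = traverse(head, use_prefetch=True)
```

Under these assumptions only the first node incurs a demand miss when prefetching is enabled. On real hardware the benefit depends on whether work(p) takes long enough to hide the latency of fetching the next node.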

Markov Prefetcher

This particular prefetch technique<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref> is built around the idea of remembering past sequences of cache misses and using this memory to bring in subsequent misses in the sequence. Generally, a rather large table containing previous miss addresses is used. This table is maintained in a manner similar to a cache. Sequences stored in this table are then used to predict future sequences that may need to be prefetched.
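A minimal sketch of this idea follows. It assumes an unbounded table that counts, for each miss address, which addresses missed immediately afterwards, and it prefetches the most frequent successors. The class name, the `width` parameter, and the use of simple counts are illustrative assumptions; a hardware table would be finite and managed like a cache, as described above.

```python
from collections import Counter, defaultdict


class MarkovPrefetcher:
    """Toy Markov prefetcher: predicts the misses that followed this miss before."""

    def __init__(self, width=2):
        self.successors = defaultdict(Counter)  # miss addr -> counts of next misses
        self.prev_miss = None
        self.width = width                      # max addresses to prefetch per miss

    def on_miss(self, addr):
        """Record the miss and return a list of addresses to prefetch."""
        if self.prev_miss is not None:
            self.successors[self.prev_miss][addr] += 1
        self.prev_miss = addr
        if addr not in self.successors:         # no history for this address yet
            return []
        return [a for a, _ in self.successors[addr].most_common(self.width)]


# Miss stream in which 0x100 is repeatedly followed by 0x200.
A, B, C, D = 0x100, 0x200, 0x300, 0x400
markov = MarkovPrefetcher()
history = [markov.on_miss(a) for a in (A, B, C, A, B, D, A)]
```

After the first pass through the stream, a miss on `A` yields a prefetch of `B`, and a miss on `B` yields a prefetch of `C`.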

Stealth Prefetching

A more modern and innovative technique, stealth prefetching<ref>http://arnetminer.org/publication/stealth-prefetching-53889.html</ref>, attempts to deal with issues surrounding the increase of interconnection bandwidth and increased memory latency. It also helps avoid the problem of using bandwidth to prematurely access shared data that will later result in state downgrades. Basically, this technique tracks regions of memory at a coarse granularity to identify which regions are heavily used by multiple processors and which ones are not. Lines that are not being shared by multiple processors are more aggressively brought nearer to the local processor, improving performance by as much as 20%. With the increased use of fast multiprocessors, this alleviates some of the bandwidth bottlenecks.

Memory Consistency models<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_dr</ref><ref>http://parasol.tamu.edu/~rwerger/Courses/654/consistency1.pdf</ref>

A basic requirement for the implementation of a shared-memory multiprocessor system is to correctly order the reads and writes to any memory location as seen by any processor attempting to access it. This requirement is called the memory consistency requirement. Memory consistency models adhere to this requirement. The models are implemented in a system at the machine code interface as well as at the high-level language interface. They are applied while translating high-level code to the machine-level code that is directly executed. The consistency models therefore make memory operations predictable.

An overview of memory consistency models

We can classify consistency models as those conforming to the programmer’s intuition and those which do not. The programmer’s implicit expectation in the ordering of memory accesses is that the memory accesses should follow the order given in the program and they should occur instantaneously, i.e. atomically. The expectations are completely captured by the Sequential Consistency model, which was defined by Leslie Lamport as follows:

“A multiprocessor is sequentially consistent if the result of any execution is the same as if the operations of all processors (cores) were executed in some sequential order, and the operations of each individual processor (core) appear in this sequence in the order specified by its program.”

This model restricts the reordering of any load or store instructions and thus restricts compiler optimization techniques. A memory consistency model that allows the reordering of operations is called a relaxed consistency model. Because reads and writes can be reordered, such models deviate from the programmer’s expectation. Some of the relaxed consistency models include the processor consistency, weak ordering, and release consistency models.
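Lamport's definition can be checked mechanically on a small example. The sketch below enumerates every interleaving of two short programs that preserves each processor's program order, and collects the possible results. The two programs (each processor stores to one variable and then loads the other) are a standard litmus test chosen here for illustration; they do not come from the sources cited in this article.

```python
def interleavings(a, b):
    """Yield every merge of sequences a and b that keeps each one's order."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


# Each operation is (kind, memory location, value to store or register to load into).
P0 = [("store", "x", 1), ("load", "y", "r0")]
P1 = [("store", "y", 1), ("load", "x", "r1")]


def run(schedule):
    """Execute one global order against a single shared memory."""
    memory = {"x": 0, "y": 0}
    registers = {}
    for kind, location, arg in schedule:
        if kind == "store":
            memory[location] = arg
        else:
            registers[arg] = memory[location]
    return registers["r0"], registers["r1"]


schedules = list(interleavings(P0, P1))
outcomes = {run(s) for s in schedules}
```

Under sequential consistency the result `(r0, r1) = (0, 0)` never appears, because some store must come first in any global order. A relaxed model that lets a load complete before an older store could produce it.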

Processor consistency model (PC) - The processor consistency model relaxes the requirement that all older stores complete before a load may take place, i.e., a younger load can complete before an older store that precedes it in program order has completed.

Weak ordering (WO) – Weak ordering separates all read and write instructions into critical sections. Reordering the critical sections themselves is not allowed by this model, but reordering of instructions within a section is allowed. These critical sections are separated by synchronization events. The synchronization events can be implemented in the form of locks, barriers, or post-wait pairs. The requirements for the weak ordering model to work are therefore:

- The programmer has clearly defined the critical sections and has implemented the synchronization of the critical section explicitly.

- All read and write operations before a synchronization event have been completed.

- All loads and stores following a critical section cannot precede the section.

Release consistency model - This model classifies synchronization events as acquire and release operations. An acquire operation allows a thread to enter a critical section, while a thread leaves the critical section after a release operation. Hence an acquire operation is not concerned with older reads and writes, since that requirement is taken care of by the release operation, and similarly a release operation is not concerned with younger reads and writes, since those are handled by the acquire operation. We can therefore rewrite the requirements for weak ordering to follow the release consistency model as follows:

- The programmer has clearly defined the critical sections and has implemented the synchronization of the critical section explicitly.

- All reads and writes before a release operation should be completed.

- All acquire operations related to a critical section should be completed before handling a younger read or write.

- The acquire and release operations should be atomic with respect to each other.

A core difference between weak ordering and release consistency is that in release consistency overlapping of critical sections is possible. Figure 3.1 shows how a sequence of instructions will be executed following a particular consistency model. As compared to sequential consistency, relaxed consistency models provide faster execution. Now if we can prefetch instructions while conforming to the consistency model, then that will result in an even higher speed up.

Prefetching under consistency models<ref>http://parasol.tamu.edu/~rwerger/Courses/654/pref1.pdf</ref>

Prefetching can also be classified as binding or non-binding. In binding prefetching, the value of the prefetched data is locked the instant it is prefetched and will be released only when the process reads it later via a regular read. Other processes cannot modify the data during this period. In non-binding prefetching, other processors can modify the data until the actual regular read is done. An advantage of non-binding prefetching is that it does not affect the correctness of any consistency model. Let’s see the improvement in execution time using prefetching by considering the sets of instructions given in examples 5.1 and 5.2. For both sets, the cache hit latency is 1 cycle while the cache miss latency is 100 cycles. An invalidation-based cache coherence scheme is used in both examples.

Example 5.1:

lock L (miss) write A (miss) write B (miss) unlock L (hit)

Example 5.2:

lock L (miss) read C (miss) read D (hit) read E[D] (miss) unlock L (hit)

In sequential consistency (SC), reordering of operations is not allowed. Hence for example 5.1, 3 cache misses and a cache hit take a total of 301 cycles to execute. Under relaxed consistency (RC), the writes can be pipelined within the critical region bounded by the lock. Hence under RC, the total execution time is 202 cycles, because the second write overlaps with the first and adds only one cycle. Prefetching improves the performance of systems following either SC or RC. Using prefetching, we assume that lock acquisition is bound to succeed and hence prefetch the data for both writes. When lock acquisition actually succeeds, the data for both writes is already in our cache. Hence all writes incur a cache hit, and the total number of cycles required to execute the set is reduced to 103 for both consistency models.
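The cycle counts for example 5.1 can be reproduced with a small timing model. This is a sketch under stated assumptions, not the model used in the cited paper: under SC the operations run strictly one after another; under RC the operations between lock and unlock issue one per cycle once the lock is held, and the unlock waits for all of them; with prefetching, every access after the lock is assumed to have been fetched while the lock miss was outstanding. All function names are hypothetical.

```python
HIT, MISS = 1, 100  # latencies in cycles, as given in the text


def serial_cycles(latencies):
    """SC without overlap: each access waits for the previous one."""
    return sum(latencies)


def pipelined_cycles(latencies):
    """RC sketch: lock first, body issued one access per cycle, unlock last."""
    lock, *body, unlock = latencies
    issue_time = lock        # the body may start once the lock is acquired
    done_time = lock
    for latency in body:
        done_time = max(done_time, issue_time + latency)
        issue_time += 1      # next access issues one cycle later
    return done_time + unlock


def with_prefetch(latencies):
    """Assume everything after the lock was prefetched and now hits."""
    first, *rest = latencies
    return [first] + [HIT] * len(rest)


# Example 5.1: lock L (miss), write A (miss), write B (miss), unlock L (hit)
example_5_1 = [MISS, MISS, MISS, HIT]

sc = serial_cycles(example_5_1)
rc = pipelined_cycles(example_5_1)
sc_prefetch = serial_cycles(with_prefetch(example_5_1))
rc_prefetch = pipelined_cycles(with_prefetch(example_5_1))
```

With these assumptions the model gives 301 cycles for SC, 202 for RC, and 103 for both once prefetching is applied, matching the figures in the text.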

A case where prefetching is much less effective is given in example 5.2. The read instructions have a data dependency between the third and second reads. Under SC, the instructions take 302 cycles to perform. Under RC, they take 203 cycles because the reads can be pipelined within the critical section. With prefetching, the instructions take 203 cycles under SC and 202 cycles under RC. This smaller improvement in execution time is attributed to the data dependency between D and E (under SC) and between lock acquisition and D (under RC). Hence prefetching yields little or no improvement in execution time in such cases.
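The SC figures for example 5.2 follow from one observation: an access can only be prefetched if its address is known in advance, and the address of E[D] is not known until D has been read. The sketch below encodes that as a flag on each operation. The tuple layout and function name are assumptions for illustration, and the sketch covers only the SC case.

```python
HIT, MISS = 1, 100  # latencies in cycles, as given in the text

# (operation, latency without prefetching, address known before the lock is held?)
example_5_2 = [
    ("lock L", MISS, True),
    ("read C", MISS, True),
    ("read D", HIT, True),
    ("read E[D]", MISS, False),  # address depends on the value of D
    ("unlock L", HIT, True),
]


def sc_cycles(ops, prefetch):
    """Serial execution; prefetchable accesses after the first become hits."""
    (_, first_latency, _), *rest = ops
    total = first_latency            # the lock miss itself is always paid
    for _, latency, address_known in rest:
        if prefetch and address_known:
            total += HIT             # fetched while the lock miss was outstanding
        else:
            total += latency
    return total


sc_plain = sc_cycles(example_5_2, prefetch=False)
sc_with_prefetch = sc_cycles(example_5_2, prefetch=True)
```

Only read C benefits from prefetching here. The dependent read E[D] still pays the full miss latency, which is why the SC total drops from 302 to 203 cycles rather than close to 100.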

Disadvantages of Prefetching<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2012/10a_dr</ref>

- Increased complexity and overhead of handling the prefetching algorithms: performance must improve significantly to counterbalance this overhead and complexity, or the effort is not worthwhile.

- With multiple cores, prefetching requests can originate from a variety of different cores. This puts additional stress on memory to not only deal with regular prefetch requests but also to handle prefetch from different sources, thus greatly increasing the overhead and complexity of logic.

- If prefetched data is stored in the data cache, then cache conflicts, or cache pollution, can become a significant burden.

Conclusion

The article dealt with the concept of prefetching and its hardware and software implementations. It gave a brief overview of a few of the memory consistency models implemented to date and of how prefetching can affect execution time across different consistency models. Overall, prefetching can genuinely help in lowering execution time if out-of-order execution does not take place. However, it cannot help in overlapping memory accesses; the processor will still perform the loads in the order determined by the program. The only difference is that it will get the data from the cache rather than from main memory, because the data has been prefetched.

Quiz

1. In example 5.1, if we reorder the writes within the critical section and as a result write B occurs before write A, will this result in lower execution time in either Sequential consistency (SC) or Relaxed consistency (RC)?

- Yes in both models

- Yes in SC, No in RC

- No in SC, Yes in RC

- No in both SC and RC.

2. ___________ model categorizes the synchronization operation into Acquire and Release.

- Sequential Consistency

- Release Consistency

- Weak Ordering

- Processor Consistency.

3. Which of these is not a type of hardware implementation of a prefetcher?

- Predication

- Stride

- Stream

- Adjacent Cache line prefetcher

References

<references/>