CSC/ECE 506 Spring 2012/ch9a cm: Difference between revisions

Revision as of 07:42, 5 April 2012

Introduction

Various schemes have been explored in the past to provide synchronization in a multiprocessor system. This article discusses the overheads associated with traditional locking mechanisms, using Java Monitors as an example. It also discusses some novel methods to and Various techniques have been studied that exploit the

Thick locks/Lock overhead

Java is an explicitly multi-threaded language, so its classes are designed with synchronization in mind, using monitors. In Java, monitor operations are specified by declaring a method or a block as synchronized (refer to the code below). If the specified method is an instance method, its receiver object, this, is used as the monitor. To realize this monitor behavior, the JVM uses a lock mechanism, the Java lock, associated with each object. The lock is acquired by a thread when it enters the object's monitor, held while the thread is in the monitor executing the synchronized method, and released when the thread exits the monitor. If a thread attempts to acquire a lock held by another thread, the second thread is blocked until the first thread releases the lock.

When these classes are used in single-threaded programs, there is a large performance overhead, even in the absence of true concurrency. The current JDK design favors space efficiency over time efficiency: the concurrency primitives are kept outside the objects to avoid a per-object space cost and are looked up through a monitor cache. Unfortunately, this does not scale well, since the monitor cache itself needs to be locked during look-ups. In addition, if a large number of synchronized objects are created, the space overhead may be too much.

```java
// Simple counter
class MyCounter {
    int value = 0;

    // Guarded by the Java lock of the receiver object (this)
    synchronized int add(int i) {
        value = value + i;
        return value;
    }
}
```
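As a rough illustration of the monitor behavior described above, the sketch below drives a counter like `MyCounter` from two threads. The names `SyncCounterDemo`, `Counter`, `addWithBlock`, and `runTwoThreads` are assumptions made for this example and do not appear in the original article. `addWithBlock` uses the block form of `synchronized`, which locks the same `this` monitor as the method form, so the two threads exclude each other.

```java
// Illustrative sketch only; SyncCounterDemo and its members are hypothetical names.
class SyncCounterDemo {
    // Same shape as MyCounter in the text, repeated so the sketch is self-contained.
    static class Counter {
        private int value = 0;

        // Method form: the receiver object (this) is used as the monitor.
        synchronized int add(int i) {
            value = value + i;
            return value;
        }

        // Block form: explicitly locks the same monitor (this).
        int addWithBlock(int i) {
            synchronized (this) {
                value = value + i;
                return value;
            }
        }
    }

    // Two threads each add 1 to a shared counter perThread times.
    static int runTwoThreads(int perThread) {
        Counter c = new Counter();
        Thread t1 = new Thread(() -> {
            for (int k = 0; k < perThread; k++) c.add(1);
        });
        Thread t2 = new Thread(() -> {
            for (int k = 0; k < perThread; k++) c.addWithBlock(1);
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return c.add(0); // read the final value while holding the lock
    }

    public static void main(String[] args) {
        System.out.println(runTwoThreads(100_000)); // prints 200000
    }
}
```

Because both forms acquire the lock of the same object, no increments are lost. Removing `synchronized` from either method would allow lost updates.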

Additionally, monitors make use of atomic operations (e.g., compare-and-swap, CAS) to provide mutual exclusion. Note that a CAS by itself incurs local latency and does not really impact scalability. On typical SMP systems, reads are very efficient, since all the requesting processors can have the cache line replicated in their caches. But if even one processor is writing to a shared cache line, those writes generate considerable cache coherence traffic. Assuming a write-invalidate cache coherence policy, the readers continually reload the cache line only to have it subsequently invalidated by the writer. Coherence bandwidth is a limited, global resource, so in addition to local latency, excessive sharing traffic impacts overall scalability and impedes the progress of threads running on other processors. A so-called coherency miss (for example, a load on processor P1 while processor P2 holds the cache line in M-state) is typically much slower than a normal miss. So if threads on processors P1 and P2 are iterating, trying to acquire the same lock, lock acquisition itself generates coherency traffic and results in cache "sloshing" of the line(s) holding the lock metadata.
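To make the role of CAS concrete, here is a minimal sketch of a spin lock built on `AtomicBoolean.compareAndSet` from `java.util.concurrent.atomic`. This is not the JVM's monitor implementation; the class `CasSpinLock` and the helper `runTwoThreads` are assumed names for illustration. The lock spins on a plain read first and attempts the CAS only when the lock looks free, which is one common way to limit the invalidation traffic described above.

```java
import java.util.concurrent.atomic.AtomicBoolean;

// Illustrative sketch only; CasSpinLock is a hypothetical name, not JVM monitor code.
class CasSpinLock {
    private final AtomicBoolean held = new AtomicBoolean(false);

    void lock() {
        while (true) {
            // Spin on a read: this can be served from the locally cached copy
            // of the line until the holder writes to it.
            while (held.get()) {
                Thread.yield();
            }
            // The CAS is a write attempt and invalidates other cached copies,
            // so it is tried only when the lock appears to be free.
            if (held.compareAndSet(false, true)) {
                return;
            }
        }
    }

    void unlock() {
        held.set(false); // volatile write: publishes the critical section's updates
    }

    // Two threads increment a shared cell perThread times each under the lock.
    static int runTwoThreads(int perThread) {
        CasSpinLock lock = new CasSpinLock();
        int[] shared = {0};
        Runnable work = () -> {
            for (int k = 0; k < perThread; k++) {
                lock.lock();
                try {
                    shared[0]++;
                } finally {
                    lock.unlock();
                }
            }
        };
        Thread t1 = new Thread(work);
        Thread t2 = new Thread(work);
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared[0];
    }
}
```

With two threads contending, every successful `compareAndSet` and every `unlock` moves the cache line holding `held` between processors, which is the "sloshing" effect the paragraph above describes.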

Reducing the overhead - solutions

Thick locks

Thin locks

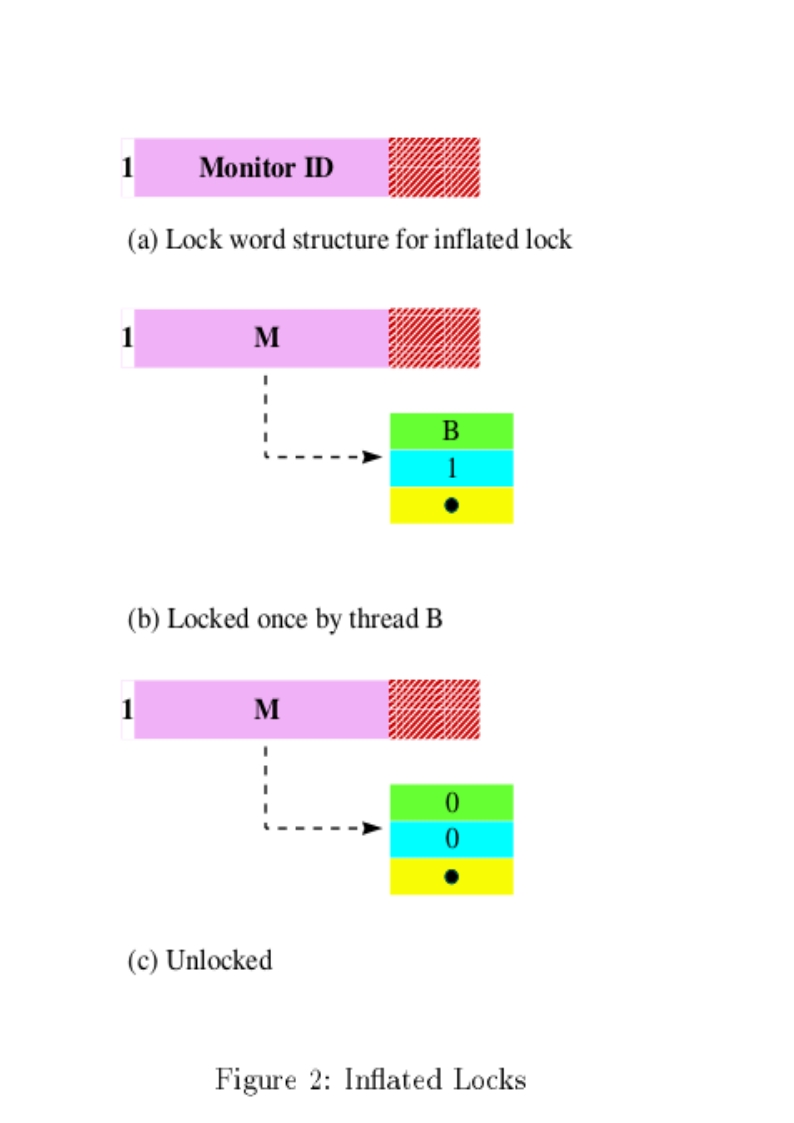

Language-supported synchronization leads to inefficiency because the thread-safe Java libraries synchronize even when it is not needed. The necessity of locking introduces heavyweight objects. In Java, synchronization is performed even when there is no requirement for it, which causes slowdowns of up to a factor of 2. In the Sun JDK implementation, the monitors are placed outside the objects and are looked up from monitor caches. This is highly inefficient and requires locking the monitor caches to avoid race conditions. It also leads to a considerable increase in space overhead due to the size of the monitor structures. Thin locks address this problem with a new algorithm that requires fewer machine instructions and fewer bits per lock.
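The cost of an out-of-object monitor table can be sketched as follows. This is a simplified model under stated assumptions, not the Sun JDK code: `MonitorCacheSketch`, `Monitor`, `monitorFor`, `lookupCount`, and `size` are hypothetical names, and the table is a plain `IdentityHashMap` guarded by a single lock.

```java
import java.util.IdentityHashMap;
import java.util.Map;

// Illustrative sketch only; this does not reproduce the Sun JDK monitor cache.
class MonitorCacheSketch {
    // Stand-in for the heavyweight monitor structure kept outside the object.
    static class Monitor {
        int entryCount;
    }

    // Maps an object (by identity) to its monitor.
    private final Map<Object, Monitor> cache = new IdentityHashMap<>();
    private int lookups = 0;

    // Every lookup takes the single cache-wide lock, so threads locking
    // unrelated objects still serialize on this method.
    synchronized Monitor monitorFor(Object o) {
        lookups++;
        return cache.computeIfAbsent(o, k -> new Monitor());
    }

    synchronized int lookupCount() {
        return lookups;
    }

    synchronized int size() {
        return cache.size();
    }
}
```

In this model each synchronized object costs one `Monitor` plus a table entry, and every lock operation pays for a locked table lookup before the object's own lock is even touched.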

Thin locks are an implementation of monitors in IBM's version of the JDK 1.1.2 for AIX. They have the following desirable characteristics compared with thick locks.

- In the absence of contention, initial and nested locking performance is much better. There is a significant improvement in performance even in the presence of contention, as compared to the JDK implementation.

- Only 24 bits in an object are used for locking. These 24 bits are obtained using encoding techniques on the other values stored in the header.

- The structure of the 24-bit lock has been engineered so that the most common locking and unlocking operations execute with minimal machine instructions.

- The 24 bits of the thin lock are divided as follows:

  - The first bit is the monitor shape bit, which indicates whether the lock is a thin lock or a thick lock.

  - For a thick lock, the remaining 23 bits hold a reference to the thick lock.

  - For a thin lock, the remaining 23 bits hold the thread identifier (15 bits) and the lock count (8 bits).
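The 24-bit layout above can be sketched with a few bit masks. The field widths (1 + 15 + 8 bits, or 1 + 23 bits) come from the text; everything else is an assumption made for illustration: the class name `ThinLockWord`, placing the shape bit in the top position, using 0 for thin and 1 for thick, and ordering the thread identifier above the count.

```java
// Illustrative sketch only; bit positions and the 0 = thin / 1 = thick
// convention are assumptions, not taken from the article.
final class ThinLockWord {
    static final int SHAPE_BIT = 1 << 23;              // top bit of the 24-bit field
    static final int COUNT_BITS = 8;
    static final int COUNT_MASK = (1 << COUNT_BITS) - 1; // low 8 bits
    static final int THREAD_MASK = (1 << 15) - 1;        // 15-bit thread identifier
    static final int THICK_REF_MASK = (1 << 23) - 1;     // 23-bit thick lock reference

    // Thin shape: shape bit clear, then thread id (15 bits), then count (8 bits).
    static int thin(int threadId, int count) {
        return ((threadId & THREAD_MASK) << COUNT_BITS) | (count & COUNT_MASK);
    }

    // Thick shape: shape bit set, remaining 23 bits refer to the thick lock.
    static int thick(int monitorRef) {
        return SHAPE_BIT | (monitorRef & THICK_REF_MASK);
    }

    static boolean isThick(int word) {
        return (word & SHAPE_BIT) != 0;
    }

    static int threadId(int word) {
        return (word >>> COUNT_BITS) & THREAD_MASK;
    }

    static int count(int word) {
        return word & COUNT_MASK;
    }

    static int thickRef(int word) {
        return word & THICK_REF_MASK;
    }
}
```

For example, `ThinLockWord.thin(0x1234, 3)` yields `0x123403`, and `ThinLockWord.thick(42)` yields `0x80002A`. Packing the owner and count into one word is what lets the common lock and unlock paths work with a single load, compare, and store on that word.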