CSC/ECE 506 Spring 2012/ch9a cm: Difference between revisions

| (31 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== Introduction == | == Introduction == | ||

Various schemes have been explored in the past to provide synchronization in a multiprocessor system. These solutions have been known to be associated with either software or hardware overhead or both. Software overhead is because of system calls and other book keeping the Operating system has to | Various schemes have been explored in the past to provide synchronization in a multiprocessor system. These solutions have been known to be associated with either software overhead or hardware overhead or both. Software overhead is because of system calls and other book keeping the Operating system has to perform. Hardware overhead is due to the bus transactions and the [http://en.wikipedia.org/wiki/Cache_coherence Cache coherence protocols]. This article discusses the overheads associated with traditional locking mechanisms, taking Java Virtual Machine<ref name=JVM>http://en.wikipedia.org/wiki/Java_virtual_machine</ref> as an example. It also discusses some novel approaches to counter these overheads and provide efficient synchronization mechanisms. | ||

== Java Synchronization == | == Java Synchronization<ref name=kawachiya>http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf</ref> == | ||

Java is an explicitly multi-threaded language, hence each of the classes | Java is an explicitly multi-threaded language, hence each of the classes that it provides has been designed with implicit synchronization. Java provides a mutual exclusion mechanism based on the concept of [http://en.wikipedia.org/wiki/Monitor_%28synchronization%29 Monitors]. At most only one thread can enter an object’s monitor simultaneously. When another thread is in the monitor, a thread trying to enter is forced to wait by the JVM until the first thread exits from the monitor. | ||

In Java, the monitor operations are specified by declaring a method or a block as ''synchronized'' (refer to the code below). If the specified method is an instance method, its receiver object, ''this'', is used as the owner of the lock. To realize this Monitor behavior, the JVM uses a lock mechanism, "Java lock", associated with each object. The lock is acquired by a thread when it enters the object’s monitor, held while the thread is in the monitor and executing the synchronized method, and released when the thread exits from the monitor. If a thread attempts to acquire a lock held by another thread, the second thread is blocked until the first thread releases the lock. | In Java, the monitor operations are specified by declaring a method or a block as ''synchronized'' (refer to the code below). If the specified method is an instance method, its receiver object, ''this'', is used as the owner of the lock. To realize this Monitor behavior, the JVM uses a lock mechanism, "Java lock", associated with each object. The lock is acquired by a thread when it enters the object’s monitor, held while the thread is in the monitor and executing the synchronized method, and released when the thread exits from the monitor. If a thread attempts to acquire a lock held by another thread, the second thread is blocked until the first thread releases the lock. | ||

| Line 17: | Line 17: | ||

</pre> | </pre> | ||

A very simple implementation of | A very simple but naive implementation of this could be to embed the OS provided monitor object in each of the Objects that is created. Access to each object is synchronized using this Monitor object. Following code illustrates this idea: | ||

<pre> | <pre> | ||

| Line 39: | Line 39: | ||

</pre> | </pre> | ||

When these classes are used in single threaded programs, there's a huge performance overhead, even in the absence of true concurrency. Also, it incurs space overhead due to the presence of monitor code in each of the objects, even if it may not need any synchronization. Currently in JDK design the concurrency primitives are kept outside of the objects to avoid space cost and looked up through a ''Monitor Cache''. Unfortunately this does not scale well since monitor cache itself needs to be locked when making the look-ups. | When these classes are used in single threaded programs, there's a huge performance overhead, even in the absence of true concurrency. Also, it incurs space overhead due to the presence of monitor code in each of the objects, even if it may not need any synchronization. Currently in JDK design the concurrency primitives are kept outside of the objects to avoid space cost and looked up through a ''Monitor Cache''<ref name=kawachiya> </ref>. Unfortunately this does not scale well since monitor cache itself needs to be locked when making the look-ups. | ||

Additionally, monitors make use of atomic operations | Additionally, monitors make use of [http://en.wikipedia.org/wiki/Linearizability atomic operations] to provide mutual exclusion. On typical SMP systems, reads are very efficient, since all the requesting processors can have the cache line replicated in their caches.But if even one processor is writing to a shared cache line, those writes will generate considerable cache coherence traffic. Assuming a write-invalidate cache coherence policy, the readers will continually re-load the cache line just to have it subsequently invalidated by the writer. Coherency bandwidth is a limited and global resource, so in addition to local latency, excessive sharing traffic will impact overall scalability and impede the progress of threads running on other processors. A so-called coherency miss, assuming [http://en.wikipedia.org/wiki/MESI_protocol MESI protocol] -- for example a load on processor P1 where processor P2 has the cache line in M-state -- is typically much slower than a normal miss. So if you have threads on processors P1 and P2 iterating trying to acquire the same lock, then lock acquisition itself will generate coherency traffic and result in the cache "sloshing" of the line(s) holding the metadata <ref name=BiasedLockBlog> https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot </ref>. | ||

== Solutions for Reducing the Overhead == | == Solutions for Reducing the Overhead == | ||

| Line 49: | Line 49: | ||

=== Thin locks === | === Thin locks <ref name=ThinLocks> http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf </ref>=== | ||

Language supported synchronization leads to inefficiency due to the useless synchronization requirements due to the thread safe nature of the Java Libraries. The necessity of locking introduces the use of heavy weight objects. In Java, synchronization is introduced even when there is no requirementfor synchronization. This causes slowdowns of upto a factor of 2.In Sun JDK implementation, the monitors are placed outside the objects and are looked up from monitor caches.This is highly inefficient and requires locking of the monitor caches to avoid race conditions. It also leads to considerable increase in space overhead due to the size of the monitor structures. This problem is solved by using a new algorithm that requires fewer machine instructions and lesser number of bits per lock. | Language supported synchronization leads to inefficiency due to the useless synchronization requirements due to the thread safe nature of the Java Libraries. The necessity of locking introduces the use of heavy weight objects. In Java, synchronization is introduced even when there is no requirementfor synchronization. This causes slowdowns of upto a factor of 2.In Sun JDK implementation, the monitors are placed outside the objects and are looked up from monitor caches.This is highly inefficient and requires locking of the monitor caches to avoid race conditions. It also leads to considerable increase in space overhead due to the size of the monitor structures. This problem is solved by using a new algorithm that requires fewer machine instructions and lesser number of bits per lock. | ||

| Line 60: | Line 60: | ||

- The 24 bits of the thin lock are divided as follows: | - The 24 bits of the thin lock are divided as follows: | ||

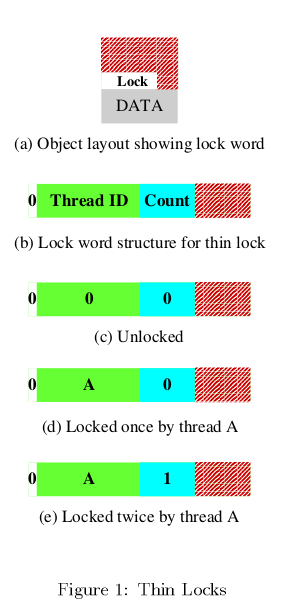

[[File: | [[File:Thinlocks1.png]] | ||

- The first bit consists of the Monitor shape bit which represents if the lock is a thin(flat) lock and thick(inflated) lock. This bimodal feature is the most | - The first bit consists of the Monitor shape bit which represents if the lock is a thin(flat) lock and thick(inflated) lock. This bimodal feature is the most | ||

| Line 76: | Line 76: | ||

Nested unlocking is analogous to nested locking, and is performed by decrementing the lock count. | Nested unlocking is analogous to nested locking, and is performed by decrementing the lock count. | ||

A pseudo C code depicting the Thin Lock | [[File:Thinlocks3.png]] | ||

A pseudo C code depicting the Thin Lock acquisition is given as follows: | |||

| Line 82: | Line 84: | ||

// Object header contains a word for lock | // Object header contains a word for lock | ||

typedef struct object { | typedef struct object { | ||

volatile unsigned int lockword; | volatile unsigned int lockword; | ||

} Object; | } Object; | ||

| Line 88: | Line 90: | ||

int Java_lock_acquire(Object *obj) { | int Java_lock_acquire(Object *obj) { | ||

// flat lock path | // flat lock path | ||

if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS) | if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS) | ||

return SUCCESS; | return SUCCESS; | ||

// inflation loop | |||

while ((obj->lockword & SHAPE_BIT) == 0) { | // inflation loop | ||

if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS) { | while ((obj->lockword & SHAPE_BIT) == 0) { | ||

inflate(obj); return SUCCESS; | if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS) { | ||

} | inflate(obj); | ||

thread_yield(); | return SUCCESS; | ||

} | } | ||

// inflated lock path | thread_yield(); | ||

monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT); | } | ||

return monitor_enter(mon); | // inflated lock path | ||

monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT); | |||

return monitor_enter(mon); | |||

} | } | ||

| Line 106: | Line 110: | ||

void inflate(Object *obj) | void inflate(Object *obj) | ||

{ | { | ||

monitor_t *mon = Create_a_monitor(); // assume that the MSB is 0 | monitor_t *mon = Create_a_monitor(); // assume that the MSB is 0 | ||

monitor_enter(mon); | monitor_enter(mon); | ||

obj->lockword = SHAPE_BIT | (unsigned int)mon; // set the shape bit | obj->lockword = SHAPE_BIT | (unsigned int)mon; // set the shape bit | ||

} | } | ||

int Java_lock_release(Object *obj) { | int Java_lock_release(Object *obj) { | ||

unsigned int lw = obj->lockword; | unsigned int lw = obj->lockword; | ||

if ((lw & SHAPE_BIT) == 0) { // flat lock path | if ((lw & SHAPE_BIT) == 0) { // flat lock path | ||

if (lw != thread_id()) return ILLEGAL_STATE; | if (lw != thread_id()) | ||

obj->lockword = 0; return SUCCESS; | return ILLEGAL_STATE; | ||

} | obj->lockword = 0; return SUCCESS; | ||

// inflated lock path | } | ||

monitor_t *mon = (monitor_t *)(lw & ~SHAPE_BIT); | // inflated lock path | ||

return monitor_exit(mon); | monitor_t *mon = (monitor_t *)(lw & ~SHAPE_BIT); | ||

return monitor_exit(mon); | |||

} | } | ||

| Line 126: | Line 131: | ||

int Java_lock_wait(Object *obj) | int Java_lock_wait(Object *obj) | ||

{ | { | ||

unsigned int lw = obj->lockword; | unsigned int lw = obj->lockword; | ||

if ((lw & SHAPE_BIT) == 0) { // flat mode | if ((lw & SHAPE_BIT) == 0) { // flat mode | ||

if (lw != thread_id()) return ILLEGAL_STATE; | if (lw != thread_id()) | ||

inflate(obj); // force the inflation | return ILLEGAL_STATE; | ||

inflate(obj); // force the inflation | |||

} | } | ||

// execute the wait using the monitor structure | // execute the wait using the monitor structure | ||

monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT); | monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT); | ||

return monitor_wait(mon); | return monitor_wait(mon); | ||

} | } | ||

// Java_lock_notify()/notify_all() are implemented in the same manner | // Java_lock_notify()/notify_all() are implemented in the same manner | ||

| Line 142: | Line 147: | ||

[[File: | [[File:Thinlocks2.png]] | ||

=== Biased locks === | === Biased locks <ref name=BiasedLocks> http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf</ref> === | ||

By using the thin lock, the cost of lock acquisition has been reduced to one compare and swap operation. However, such an atomic operation is usually very costly compared to simple memory access operations. If we can acquire a lock without any atomic operations for common cases, the cost of locks is further reduced. | By using the thin lock, the cost of lock acquisition has been reduced to one compare and swap operation. However, such an atomic operation is usually very costly compared to simple memory access operations. If we can acquire a lock without any atomic operations for common cases, the cost of locks is further reduced. | ||

In addition, the thin lock implementation uses a busy-wait loop for inflating an object’s lock. It should also be checked that this will not cause performance degradation. When we explore the area of non-contended lock | In addition, the thin lock implementation uses a busy-wait loop for inflating an object’s lock. It should also be checked that this will not cause performance degradation. When we explore the area of non-contended lock acquisition, we arrive at an alternative that exploits these characteristics - Biased locks. | ||

Java Virtual Machines is the case wherein a single process repeatedly acquires and releases a lock, but other processes rarely if ever access this lock (First characteristic above). The locks that can be optimized for this case may be referred to as Quickly Re-acquirable Locks (QRLs). We shall also refer to the optimized code path in which a process reacquires and subsequently releases a lock that it has previously held, and that no other process has ever held, as an ''ultra fast path''. The idea is to try to reduce the use of atomic instructions (e.g CAS,Barriers,etc) in the ultra fast path, because these instructions are typically much more expensive than other instructions. | Java Virtual Machines is the case wherein a single process repeatedly acquires and releases a lock, but other processes rarely if ever access this lock (First characteristic above). The locks that can be optimized for this case may be referred to as Quickly Re-acquirable Locks (QRLs)<ref name=BiasedLocks></ref>. We shall also refer to the optimized code path in which a process reacquires and subsequently releases a lock that it has previously held, and that no other process has ever held, as an ''ultra fast path''. The idea is to try to reduce the use of atomic instructions (e.g CAS,Barriers,etc) in the ultra fast path, because these instructions are typically much more expensive than other instructions. | ||

For QRLs, our target is to avoid all such instructions in both the acquire and release portions of the ultra fast code path. | For QRLs, our target is to avoid all such instructions in both the acquire and release portions of the ultra fast code path. | ||

| Line 161: | Line 166: | ||

Switching a lock from NEUTRAL to being biased to a particular process can be done for the one-time cost of a single CAS operation. The main difficulty in constructing an atomic-free QRL lies in coordinating the revocation of a lock between a revoking process and the bias holder process. Race conditions that must typically be addressed include a revocation that occurs simultaneous to a biased acquire as well as a revocation that occurs simultaneous to a biased release. | Switching a lock from NEUTRAL to being biased to a particular process can be done for the one-time cost of a single CAS operation. The main difficulty in constructing an atomic-free QRL lies in coordinating the revocation of a lock between a revoking process and the bias holder process. Race conditions that must typically be addressed include a revocation that occurs simultaneous to a biased acquire as well as a revocation that occurs simultaneous to a biased release. | ||

In a system that assures Sequential consistency, it is easy to design a technique in which a bias holder reacquires the lock by first writing its quicklock bit and then verifying that the lock has not been revoked; and revoking processes first indicate revocation and then check whether the bias holder has acquired | In a system that assures [http://en.wikipedia.org/wiki/Sequential_consistency Sequential consistency], it is easy to design a technique in which a bias holder reacquires the lock by first writing its quicklock bit and then verifying that the lock has not been revoked; and revoking processes first indicate revocation and then check whether the bias holder has acquired | ||

the Quicklock. However, for weaker consistency models, we may be required to use barriers/fences to ensure correctness. But, in this case, it is sufficient to preclude a single read (of the lock status) from being reordered before a single write (of the Quicklock). Hence, if we can introduce an artificial dependency between the write and read instructions mentioned above, we can ensure that they are not adversely reordered. | the Quicklock. However, for [http://en.wikipedia.org/wiki/Weak_consistency weaker consistency models], we may be required to use barriers/fences to ensure correctness. But, in this case, it is sufficient to preclude a single read (of the lock status) from being reordered before a single write (of the Quicklock). Hence, if we can introduce an artificial dependency between the write and read instructions mentioned above, we can ensure that they are not adversely reordered. | ||

One way of introducing this dependency | One way of introducing this dependency is to '''collocate''' the status field and the quicklock field into the two halves of a 32-bit word. Then, perform the following steps in order to reacquire the lock on the ultra fast path: | ||

* perform a half-word store on the quicklock field | * perform a half-word store on the quicklock field | ||

* load the entire 32 bit word | * load the entire 32 bit word | ||

| Line 170: | Line 175: | ||

Now, because the data read in step 2 includes the data that was written in step 1, the read must be ordered after the write. This approach provides portability to any memory model in which apparent program order is guaranteed for instructions executed on the same processor. | Now, because the data read in step 2 includes the data that was written in step 1, the read must be ordered after the write. This approach provides portability to any memory model in which apparent program order is guaranteed for instructions executed on the same processor. | ||

Because they can be generalized to use any mutual exclusion algorithm with a standard interface, as well as many algorithms that do not use a standard interface, QRL locks can obtain the benefits of any properties of such locks for the uncontended case at the expense of a mere handful of non-atomic instructions in their critical path. | |||

QRL locks are optimized for a single-process repeated-acquisition data access pattern; however, rebiasable QRLs<ref name=BiasedLocks></ref> that can be used with migratory data access patterns, also provide major performance enhancements. | |||

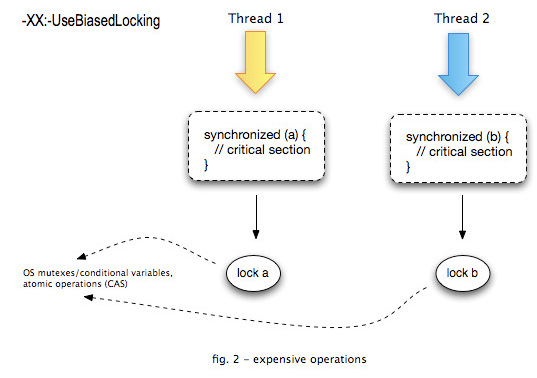

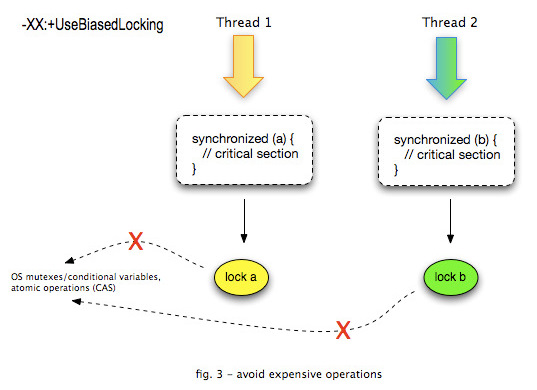

Without Biased Locking: | |||

[[File:biasedlocking1.jpg]] | |||

With Biased Locking: | |||

[[File:biasedlocking.jpg]] | |||

== Conclusion == | |||

By exploiting various characteristics of the programs, we can curtail the synchronization overhead to a major extent. Compared to the "naive" implementation of Java locks, thin locks provide a speed ups of up to a factor of 5. And from the above discussion it is evident that biased locks (QRL) provide the best performance for a common data access pattern in which a single process repeatedly and solely acquires a lock. Coupled with the Collocation technique, this technique can obviate the need for Barriers and Atomic instructions completely, even in case of relaxed memory consistency models. Further research <ref name=RogerLock> http://www.cs.man.ac.uk/~irogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf </ref> is being pursued in trying to reduce the overhead during revocation of Biased locks. Kawachiya <ref name=kawachiya> </ref> provides an original discussion about a related concept called Reservation locks -- also based on thread locality. | |||

== References == | |||

<references /> | |||

Latest revision as of 23:37, 14 April 2012

Introduction

Various schemes have been explored in the past to provide synchronization in a multiprocessor system. These solutions have been known to be associated with either software overhead or hardware overhead or both. Software overhead is because of system calls and other book keeping the Operating system has to perform. Hardware overhead is due to the bus transactions and the Cache coherence protocols. This article discusses the overheads associated with traditional locking mechanisms, taking Java Virtual Machine<ref name=JVM>http://en.wikipedia.org/wiki/Java_virtual_machine</ref> as an example. It also discusses some novel approaches to counter these overheads and provide efficient synchronization mechanisms.

Java Synchronization<ref name=kawachiya>http://www.research.ibm.com/trl/people/kawatiya/Kawachiya05phd.pdf</ref>

Java is an explicitly multi-threaded language, hence each of the classes that it provides has been designed with implicit synchronization. Java provides a mutual exclusion mechanism based on the concept of Monitors. At most only one thread can enter an object’s monitor simultaneously. When another thread is in the monitor, a thread trying to enter is forced to wait by the JVM until the first thread exits from the monitor.

In Java, the monitor operations are specified by declaring a method or a block as synchronized (refer to the code below). If the specified method is an instance method, its receiver object, this, is used as the owner of the lock. To realize this Monitor behavior, the JVM uses a lock mechanism, "Java lock", associated with each object. The lock is acquired by a thread when it enters the object’s monitor, held while the thread is in the monitor and executing the synchronized method, and released when the thread exits from the monitor. If a thread attempts to acquire a lock held by another thread, the second thread is blocked until the first thread releases the lock.

// Simple counter

class MyCounter {

int value = 0;

synchronized int add(int i) { // Guarded by Java locks

value = value + i;

return value;

}

A very simple but naive implementation of this could be to embed the OS provided monitor object in each of the Objects that is created. Access to each object is synchronized using this Monitor object. Following code illustrates this idea:

// Object header contains the OS-provided monitor structure

typedef struct object {

//

monitor_t mon; // initialized when the object is created

//

} Object;

// Current thread acquires the object’s lock

int Java_lock_acquire(Object *obj) {

return monitor_enter(&obj->mon);

}

// Current thread releases the object’s lock

int Java_lock_release(Object *obj) {

return monitor_exit(&obj->mon);

}

When these classes are used in single threaded programs, there's a huge performance overhead, even in the absence of true concurrency. Also, it incurs space overhead due to the presence of monitor code in each of the objects, even if it may not need any synchronization. Currently in JDK design the concurrency primitives are kept outside of the objects to avoid space cost and looked up through a Monitor Cache<ref name=kawachiya> </ref>. Unfortunately this does not scale well since monitor cache itself needs to be locked when making the look-ups.

Additionally, monitors make use of atomic operations to provide mutual exclusion. On typical SMP systems, reads are very efficient, since all the requesting processors can have the cache line replicated in their caches.But if even one processor is writing to a shared cache line, those writes will generate considerable cache coherence traffic. Assuming a write-invalidate cache coherence policy, the readers will continually re-load the cache line just to have it subsequently invalidated by the writer. Coherency bandwidth is a limited and global resource, so in addition to local latency, excessive sharing traffic will impact overall scalability and impede the progress of threads running on other processors. A so-called coherency miss, assuming MESI protocol -- for example a load on processor P1 where processor P2 has the cache line in M-state -- is typically much slower than a normal miss. So if you have threads on processors P1 and P2 iterating trying to acquire the same lock, then lock acquisition itself will generate coherency traffic and result in the cache "sloshing" of the line(s) holding the metadata <ref name=BiasedLockBlog> https://blogs.oracle.com/dave/entry/biased_locking_in_hotspot </ref>.

Solutions for Reducing the Overhead

Most solutions that deal with minimizing Synchronization overhead exploit certain characteristics of programs that need synchronization. In case of Java locks, the first characteristic is that only a few objects are actually used for locks, although any objects can theoretically be used. The monitor table method utilizes this characteristic to reduce the memory consumption. In this method, monitor structures are not prepared for objects that are not used for locks, which is the most common case. When an object is first used for lock, a monitor structure is prepared and associated with the object.

The second characteristic is that contention between threads never occurs for most of the objects used for locks. This fact implies that the heavyweight monitor structure which supports thread suspension is unnecessary for the most common case. The thin lock explained in the next section exploits this characteristic to improve performance.

Thin locks <ref name=ThinLocks> http://www.research.ibm.com/people/d/dfb/papers/Bacon98Thin.pdf </ref>

Language supported synchronization leads to inefficiency due to the useless synchronization requirements due to the thread safe nature of the Java Libraries. The necessity of locking introduces the use of heavy weight objects. In Java, synchronization is introduced even when there is no requirementfor synchronization. This causes slowdowns of upto a factor of 2.In Sun JDK implementation, the monitors are placed outside the objects and are looked up from monitor caches.This is highly inefficient and requires locking of the monitor caches to avoid race conditions. It also leads to considerable increase in space overhead due to the size of the monitor structures. This problem is solved by using a new algorithm that requires fewer machine instructions and lesser number of bits per lock.

Thin Locks are an implementation of monitors in IBM's version of the JDK 1.1.2 for AIX. In the absence of contention, initial and nested locking performance is much better. There is a significant improvement in performance even in th presence of contention, as compared to the JDK implementation.

- Only 24 bits(lockword) in an object are used for locking.These 24 bits are obtained using encoding techniques on the other values stored in the header.

- The structure of the 24 bit lock has been engineered to make the most common locking and unlocking functionalities to be executed with minimal machine instructions.

- The 24 bits of the thin lock are divided as follows:

- The first bit consists of the Monitor shape bit which represents if the lock is a thin(flat) lock and thick(inflated) lock. This bimodal feature is the most

important feature of the thin lock.

- The next 23 bits point to the thick lock reference in case a thick lock is implemented.

- The next 23 bits in case of a thin lock represents the thread identifier(15 bits) and the lock count(8 bits).

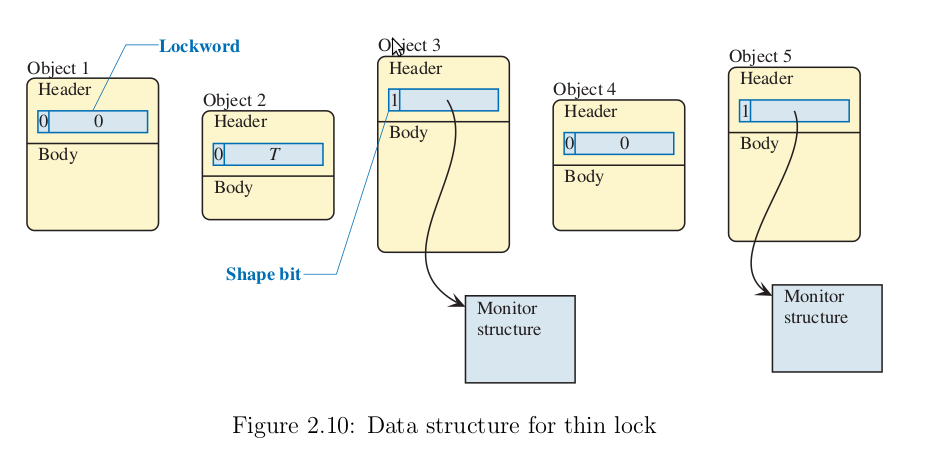

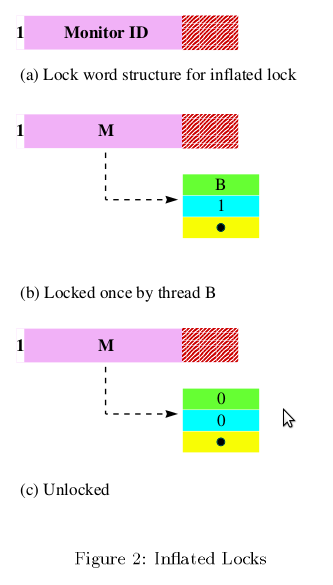

As long as contention does not occur, the lockword is in the flat(thin) mode. The threads can acquire the lock by simply changing the thread id to its own using the compare and swap operation. When a thread tries to acquire the lock which is held by another thread, the lockword is changed to the inflated mode and the shape bit is set to 1. At this time the monitor structure is prepared and the direction to the monitor is stored in the remaining 23 bits of the lockword. The lockword is inflated by acquiring the flat mode lock in a busy-wait loop and then storing the address of the monitor in the lockword while setting the shape bit.

Unlocking an object without contention only involves just checking the lockword for the old value and then replacing it with the new value. This does not require an atomic compare and swap operation due to the property of our locks that, once a lock has been acquired , no other thread can modify it.

Nested Locking of an object is when the same thread locks the object multiple times. The thread tries to lock the object by performing a compare and swap operation which fails. The locking routine then checks for the next most likely condition - locking by the owning thread. The locking routine will check that the monitor shape bit is 0. It then checks if the thread Id is that of the owning thread, and if the lock count is less than 255. If the check succeeds, then the thread locks the object by incrementing the lock count by 1. If the lock count exceeds 255, then lock inflation is done.

Nested unlocking is analogous to nested locking, and is performed by decrementing the lock count.

A pseudo C code depicting the Thin Lock acquisition is given as follows:

// Object header contains a word for lock

typedef struct object {

volatile unsigned int lockword;

} Object;

#define SHAPE_BIT 0x80000000

int Java_lock_acquire(Object *obj) {

// flat lock path

if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS)

return SUCCESS;

// inflation loop

while ((obj->lockword & SHAPE_BIT) == 0) {

if (compare_and_swap(&obj->lockword, 0, thread_id()) == SUCCESS) {

inflate(obj);

return SUCCESS;

}

thread_yield();

}

// inflated lock path

monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT);

return monitor_enter(mon);

}

// Inflate the object’s lockword, which is held by current thread

void inflate(Object *obj)

{

monitor_t *mon = Create_a_monitor(); // assume that the MSB is 0

monitor_enter(mon);

obj->lockword = SHAPE_BIT | (unsigned int)mon; // set the shape bit

}

int Java_lock_release(Object *obj) {

unsigned int lw = obj->lockword;

if ((lw & SHAPE_BIT) == 0) { // flat lock path

if (lw != thread_id())

return ILLEGAL_STATE;

obj->lockword = 0; return SUCCESS;

}

// inflated lock path

monitor_t *mon = (monitor_t *)(lw & ~SHAPE_BIT);

return monitor_exit(mon);

}

int Java_lock_wait(Object *obj)

{

unsigned int lw = obj->lockword;

if ((lw & SHAPE_BIT) == 0) { // flat mode

if (lw != thread_id())

return ILLEGAL_STATE;

inflate(obj); // force the inflation

}

// execute the wait using the monitor structure

monitor_t *mon = (monitor_t *)(obj->lockword & ~SHAPE_BIT);

return monitor_wait(mon);

}

// Java_lock_notify()/notify_all() are implemented in the same manner

Biased locks <ref name=BiasedLocks> http://home.comcast.net/~pjbishop/Dave/QRL-OpLocks-BiasedLocking.pdf</ref>

By using the thin lock, the cost of lock acquisition has been reduced to one compare and swap operation. However, such an atomic operation is usually very costly compared to simple memory access operations. If we can acquire a lock without any atomic operations for common cases, the cost of locks is further reduced. In addition, the thin lock implementation uses a busy-wait loop for inflating an object’s lock. It should also be checked that this will not cause performance degradation. When we explore the area of non-contended lock acquisition, we arrive at an alternative that exploits these characteristics - Biased locks.

Java Virtual Machines is the case wherein a single process repeatedly acquires and releases a lock, but other processes rarely if ever access this lock (First characteristic above). The locks that can be optimized for this case may be referred to as Quickly Re-acquirable Locks (QRLs)<ref name=BiasedLocks></ref>. We shall also refer to the optimized code path in which a process reacquires and subsequently releases a lock that it has previously held, and that no other process has ever held, as an ultra fast path. The idea is to try to reduce the use of atomic instructions (e.g CAS,Barriers,etc) in the ultra fast path, because these instructions are typically much more expensive than other instructions. For QRLs, our target is to avoid all such instructions in both the acquire and release portions of the ultra fast code path.

In order to support this, two additional fields are added to the default lock object. The first field is a status word that takes on one of the values:

- NEUTRAL

- BIASED

- REVOKING

- DEFAULT.

Initially the lock is NEUTRAL and the first time the lock is acquired, the process acquiring the lock changes the status to BIASED. If a second process attempts to acquire the lock, it eventually changes the lock status to DEFAULT, but may set the status to REVOKING as an intermediary state in the revocation protocol.When the lock state is BIASED, this field additionally contains a process identifier for the current bias owner. The second field is a Boolean bit, that may be called a Quick lock bit -- that indicates whether the bias holder currently holds the lock. Hence, when the lock has been acquired, and not subsequently revoked, the bias holder can acquire or release the lock by simply toggling this bit.

Switching a lock from NEUTRAL to being biased to a particular process can be done for the one-time cost of a single CAS operation. The main difficulty in constructing an atomic-free QRL lies in coordinating the revocation of a lock between a revoking process and the bias holder process. Race conditions that must typically be addressed include a revocation that occurs simultaneous to a biased acquire as well as a revocation that occurs simultaneous to a biased release.

In a system that assures Sequential consistency, it is easy to design a technique in which a bias holder reacquires the lock by first writing its quicklock bit and then verifying that the lock has not been revoked; and revoking processes first indicate revocation and then check whether the bias holder has acquired the Quicklock. However, for weaker consistency models, we may be required to use barriers/fences to ensure correctness. But, in this case, it is sufficient to preclude a single read (of the lock status) from being reordered before a single write (of the Quicklock). Hence, if we can introduce an artificial dependency between the write and read instructions mentioned above, we can ensure that they are not adversely reordered.

One way of introducing this dependency is to collocate the status field and the quicklock field into the two halves of a 32-bit word. Then, perform the following steps in order to reacquire the lock on the ultra fast path:

- perform a half-word store on the quicklock field

- load the entire 32 bit word

- shift out the status field from what we read

Now, because the data read in step 2 includes the data that was written in step 1, the read must be ordered after the write. This approach provides portability to any memory model in which apparent program order is guaranteed for instructions executed on the same processor.

Because they can be generalized to use any mutual exclusion algorithm with a standard interface, as well as many algorithms that do not use a standard interface, QRL locks can obtain the benefits of any properties of such locks for the uncontended case at the expense of a mere handful of non-atomic instructions in their critical path.

QRL locks are optimized for a single-process repeated-acquisition data access pattern; however, rebiasable QRLs<ref name=BiasedLocks></ref> that can be used with migratory data access patterns, also provide major performance enhancements.

Without Biased Locking:

With Biased Locking:

Conclusion

By exploiting various characteristics of the programs, we can curtail the synchronization overhead to a major extent. Compared to the "naive" implementation of Java locks, thin locks provide a speed ups of up to a factor of 5. And from the above discussion it is evident that biased locks (QRL) provide the best performance for a common data access pattern in which a single process repeatedly and solely acquires a lock. Coupled with the Collocation technique, this technique can obviate the need for Barriers and Atomic instructions completely, even in case of relaxed memory consistency models. Further research <ref name=RogerLock> http://www.cs.man.ac.uk/~irogers/Reducing_Biased_Lock_Revocation_By_Learning.pdf </ref> is being pursued in trying to reduce the overhead during revocation of Biased locks. Kawachiya <ref name=kawachiya> </ref> provides an original discussion about a related concept called Reservation locks -- also based on thread locality.

References

<references />