CSC/ECE 506 Spring 2012/10a dr: Difference between revisions


Revision as of 19:28, 3 April 2012

Prefetching and consistency models

Introduction

In

Prefetching

Sequential prefetching is a simple hardware-controlled prefetching technique that relies on automatically prefetching the consecutive blocks following the block that misses in the cache, thus exploiting spatial locality. In its simplest form, the number of prefetched blocks on each miss is fixed throughout the execution<ref>http://129.16.20.23/~pers/pub/j5.pdf</ref>.

Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based.

Software-controlled prefetching schemes rely on the programmer/compiler to insert prefetch instructions prior to the instructions that trigger a miss. In addition, both the processor and the memory system must be able to support prefetch instructions, which can potentially increase the code size and the run-time overhead. By contrast, hardware-controlled prefetching relieves the programmer/compiler of the burden of deciding what and when to prefetch. Usually, these schemes take advantage of the regularity of data access in scientific computations by dynamically detecting access strides.

There are two basic types of sequential prefetching techniques:

1. Fixed sequential prefetching

2. Adaptive sequential prefetching

Both are discussed in more detail in the following sections.

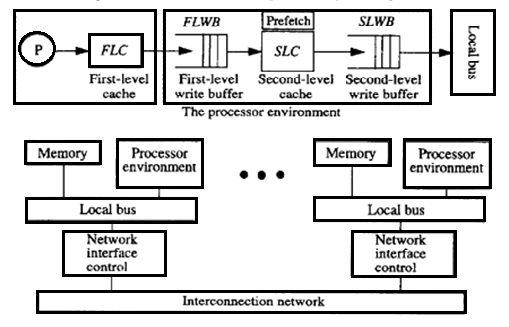

Simulated processing Node Architecture

According to Fig. 1, the processing node consists of a processor, a first-level cache (FLC), a second-level cache (SLC), a first- and second-level write buffer (FLWB and SLWB), a local bus, a network interface controller, and a memory module. The FLC is a direct-mapped write-through cache with no allocation of blocks on write misses and is blocking on read misses. Writes and read miss requests are buffered in the FLWB. The second-level cache (SLC) is a direct-mapped write-back cache. For both prefetching techniques, we only prefetch into the SLC. In addition, a second-level write buffer (SLWB) keeps track of outstanding requests (SLC read miss, prefetch, and write requests). No more than one request to the same block is allowed to be issued to the system; others are just kept in the SLWB while waiting for the pending request to that block to complete. Moreover, a read miss request may bypass write requests if they are for different blocks.

Fixed Sequential Prefetching – A simple Hardware Prefetching scheme

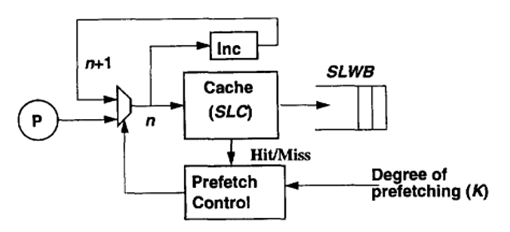

By fixed sequential prefetching we mean that K consecutive blocks are prefetched into the SLC on a reference to a block, i.e., blocks n + 1 ... n + K are prefetched upon a reference to block n, if they are not present in the cache. Sequential prefetching has been extensively studied in the context of uniprocessors [25], [26] but, to our knowledge, has never been considered for general applications on multiprocessors. Although many sequential strategies have been proposed for uniprocessors, we have restricted ourselves to prefetching on a miss in the SLC. When a reference misses in the SLC, the miss request is sent to memory, and the cache is searched for the K consecutive blocks directly following the missing block in the address space. The blocks among the K consecutive blocks that are not present in the SLC and have no pending requests in the SLWB are prefetched. We refer to K as the degree of prefetching.

Fig. 2 shows the mechanism of the fixed sequential prefetching scheme. As a cache lookup is made for block address n, the next block address (n + 1) is calculated. On a read miss, a read request is issued to the memory system and is kept in the SLWB. In the next cache cycle, the calculated address (n + 1) is directed to the cache, and a cache lookup is made. If the block is not present in the cache, a prefetch request is issued and is kept in the SLWB. During that time, the subsequent block address (n + 2) is calculated. The number of iterations is determined by the degree of prefetching. The processor is blocked only during the time it takes to handle the first read miss. Since the prefetch requests are issued one at a time and are pipelined in the memory system, they can be overlapped with the original read request. Besides the simple extensions in the SLC to incorporate fixed sequential prefetching, the memory system must be able to handle three new network commands: a prefetch request and two reply messages denoted PreData and PreNeg. Whereas PreData carries the prefetched block, PreNeg tells the cache that the prefetch request cannot be satisfied because the memory copy is in a transient state, that is, some other cache is reading or writing to it.
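The selection of blocks to prefetch can be sketched in a few lines of Python. This is only an illustrative model, not the hardware described in the paper: the function name `fixed_sequential_prefetch` and the use of plain sets for the SLC contents and the SLWB are assumptions made for the example, and timing, pipelining, and the PreData/PreNeg replies are not modelled.

```python
def fixed_sequential_prefetch(miss_block, degree, slc, slwb):
    """Return the requests issued for a read miss on `miss_block`.

    slc    -- set of block numbers currently present in the SLC (hypothetical model)
    slwb   -- set of block numbers with a pending request in the SLWB
    degree -- K, the degree of prefetching
    """
    # The demand miss itself is sent to memory and tracked in the SLWB.
    requests = [("read", miss_block)]
    slwb.add(miss_block)

    # Examine the K blocks that directly follow the missing block.
    for k in range(1, degree + 1):
        candidate = miss_block + k
        # Prefetch only blocks that are neither cached nor already pending.
        if candidate not in slc and candidate not in slwb:
            requests.append(("prefetch", candidate))
            slwb.add(candidate)
    return requests


slc = {11}    # block 11 is already cached
slwb = {13}   # block 13 already has a pending request
issued = fixed_sequential_prefetch(10, 4, slc, slwb)
print(issued)  # [('read', 10), ('prefetch', 12), ('prefetch', 14)]
```

With K = 4 and a miss on block 10, blocks 11 and 13 are skipped because one is present in the cache and the other already has an outstanding request.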

Adaptive Sequential Prefetching – Further Exploiting the Spatial Locality of Reads

The mechanism behind the adaptive scheme is basically the same as that of fixed sequential prefetching. For example, prefetching is activated by a read miss and blocks are prefetched into the SLC. In contrast to fixed sequential prefetching, however, the degree of prefetching is not fixed; rather, it is controlled by a register, the LookaheadCounter. The adaptive sequential prefetching scheme relies on adjusting the degree of prefetching (the value of the LookaheadCounter) dynamically by counting the useful prefetches, i.e., prefetched blocks that are actually referenced during their lifetime in the cache. To explain how this is achieved, we will first focus on how the algorithm measures the prefetch efficiency and then on how the LookaheadCounter is adjusted to a certain prefetch efficiency. The mechanisms needed to achieve these tasks (two bits per cache line and three counters per cache) appear in Table 1.

| Mechanism | Purpose |
|---|---|
| PrefetchBit (per cache line) | Used to detect useful prefetches (needed when prefetching is turned on) |
| ZeroBit (per cache line) | Used to detect when a prefetch would have been useful (needed when prefetching is turned off) |
| LookaheadCounter (per cache) | The current degree of prefetching |
| PrefetchCounter (per cache) | Counts the number of prefetches that have been returned after each read miss |
| UsefulCounter (per cache) | Counts the number of useful prefetches |

Conceptually, the algorithm measures the prefetch efficiency by counting the fraction of prefetched blocks that are referenced by the processors. If this fraction exceeds a preset threshold, the degree of prefetching is increased and, if it is below another preset threshold, the degree of prefetching is decreased.
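The threshold rule can be illustrated with a small Python sketch. The threshold values (`high`, `low`), the step size of one, the upper bound `max_degree`, and the function name are all assumptions chosen for the example; the text above does not specify them, and the ZeroBit mechanism that re-enables prefetching when the degree is zero is not modelled.

```python
def adjust_lookahead(lookahead, prefetch_counter, useful_counter,
                     high=0.75, low=0.40, max_degree=8):
    """Return the new degree of prefetching (LookaheadCounter value).

    prefetch_counter -- number of prefetched blocks returned (PrefetchCounter)
    useful_counter   -- number of those blocks later referenced (UsefulCounter)
    high, low, max_degree are hypothetical tuning values, not taken from the source.
    """
    if prefetch_counter == 0:
        return lookahead  # nothing measured yet, keep the current degree

    # Prefetch efficiency: fraction of prefetched blocks that were actually used.
    efficiency = useful_counter / prefetch_counter

    if efficiency > high:
        return min(lookahead + 1, max_degree)  # prefetching pays off: look further ahead
    if efficiency < low:
        return max(lookahead - 1, 0)           # mostly wasted traffic: back off
    return lookahead                           # between the thresholds: leave unchanged


print(adjust_lookahead(2, 16, 14))  # efficiency 0.875 -> 3
print(adjust_lookahead(2, 16, 4))   # efficiency 0.25  -> 1
print(adjust_lookahead(2, 16, 8))   # efficiency 0.5   -> 2
```

Using two separate thresholds leaves a band in which the degree is stable, which avoids oscillating between two values when the efficiency hovers around a single cut-off.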

Consistency models <ref>http://titanium.cs.berkeley.edu/papers/kamil-su-yelick-sc05.pdf</ref> <ref>http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>

The interface for memory in a shared memory multiprocessor is called a memory consistency model. As the interface between the programmer and the system, the effect of the memory consistency model is pervasive in a shared memory system. The model affects programmability because programmers must use it to reason about the correctness of their programs. The model affects the performance of the system because it determines the types of optimizations that may be exploited by the hardware and the system software. Finally, due to a lack of consensus on a single model, portability can be affected when moving software across systems supporting different models.

A memory consistency model specification is required for every level at which an interface is defined between the programmer and the system. At the machine code interface, the memory model specification affects the designer of the machine hardware and the programmer who writes or reasons about machine code. At the high level language interface, the specification affects the programmers who use the high level language and the designers of both the software that converts high-level language code into machine code and the hardware that executes this code. Therefore, the programmability, performance, and portability concerns may be present at several different levels.

In summary, the memory model influences the writing of parallel programs from the programmer’s perspective, and virtually all aspects of designing a parallel system (including the processor, memory system, interconnection network, compiler, and programming languages) from a system designer’s perspective.

The memory consistency model in shared memory parallel programming controls the order in which memory operations performed by one thread may be observed by another. Consistency models are used in distributed systems such as distributed shared memory systems or distributed data stores (for example filesystems, databases, optimistic replication systems, or Web caches). The system supports a given model if operations on memory follow specific rules. The data consistency model specifies a contract between programmer and system, wherein the system guarantees that if the programmer follows the rules, memory will be consistent and the results of memory operations will be predictable.

Sequential Consistency Model (SC) <ref>A Primer on Memory Consistency and Cache Coherence. - Daniel J. Sorin, Mark D. Hill, and David A. Wood</ref>

Arguably the most intuitive memory consistency model is sequential consistency (SC). Sequential consistency was first formalized by Lamport. Lamport first called a single processor (core) sequential if “the result of an execution is the same as if the operations had been executed in the order specified by the program.” He then called a multiprocessor sequentially consistent if “the result of any execution is the same as if the operations of all processors (cores) were executed in some sequential order, and the operations of each individual processor (core) appear in this sequence in the order specified by its program.” This total order of operations is called memory order. In SC, memory order respects each core’s program order, but other consistency models may permit memory orders that do not always respect the program orders.

An easy way to enforce sequential consistency is to insert memory fences after each shared memory access. This forbids all reordering of shared memory operations, which prevents optimizations such as prefetching and code motion, resulting in an unacceptable performance penalty. Various techniques have been proposed to minimize the number of fences, or delay set, required to enforce sequential consistency.

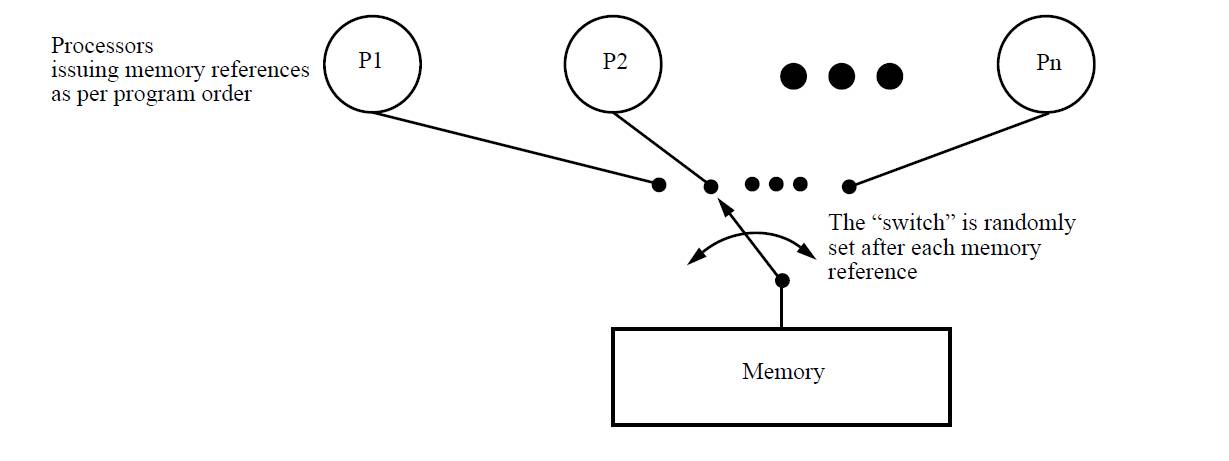

Figure 3.2 depicts the abstraction of memory provided to programmers by a sequentially consistent system. Multiple processes appear to share a single logical memory, even though in the real machine main memory may be distributed across multiple processors, each with their private caches and write buffers.

Every processor appears to issue and complete memory operations one at a time and atomically in program order - that is, a memory operation does not appear to be issued until the previous one has completed - and the common memory appears to service these requests one at a time in an interleaved manner according to an arbitrary (but hopefully fair) schedule. Memory operations appear atomic in this interleaved order; that is, it should appear globally (to all processors) as if one operation in the consistent interleaved order executes and completes before the next one begins.

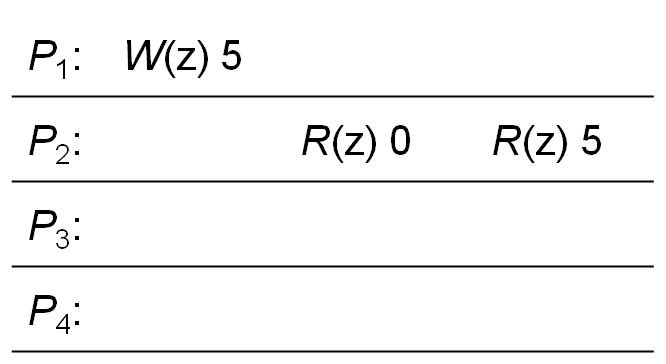

Sequential Consistency Example:

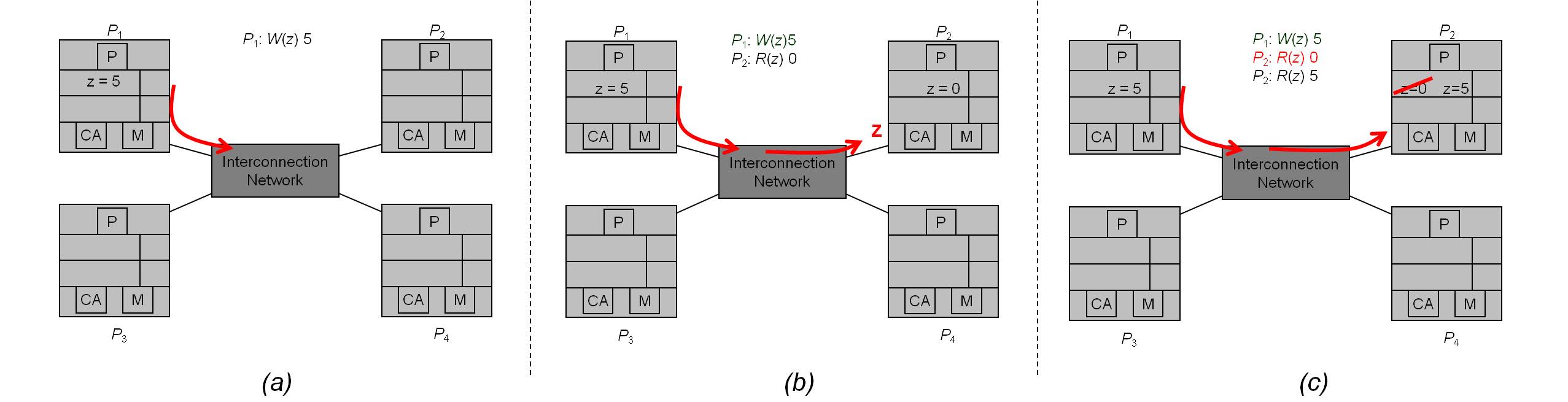

From figure 3.1, P2 does not see P1’s write of z = 5 before its first read of z, so it happens to have an out-of-date value.

However, the write propagates to P2 before its second read of z.

This is legal under SC because:

- Processors do not always have to see up-to-date values.

- Processors just need to see writes in the order they happen.

Note: it would also have been legal under SC for W(z) 1 to propagate to P2 before its first read of z or after its second read of z.

Pictorial representation in figure 3.2

Sufficient Conditions for Preserving Sequential Consistency <ref>Parallel Computer Architecture: A Hardware/Software Approach. - David E. Culler, University of California, Berkeley; Jaswinder Pal Singh, Princeton University</ref>

It is possible to define a set of sufficient conditions that the system should obey that will guarantee sequential consistency in a multiprocessor, whether bus-based or distributed, cache-coherent or not. The following set, adapted from its original form, is commonly used because it is relatively simple without being overly restrictive:

- Every process issues memory requests in the order specified by the program.

- After a write operation is issued, the issuing process waits for the write to complete before issuing its next operation.

- After a read operation is issued, the issuing process waits for the read to complete, and for the write whose value is being returned by the read to complete, before issuing its next operation. That is, if the write whose value is being returned has performed with respect to this processor (as it must have if its value is being returned) then the processor should wait until the write has performed with respect to all processors.

Importance of Write Atomicity <ref>Parallel Computer Architecture: A Hardware/Software Approach. - David E. Culler, University of California, Berkeley; Jaswinder Pal Singh, Princeton University</ref>

Write atomicity, included in the definition of SC above, implies that the position in the total order at which a write appears to perform should be the same with respect to all processors. It ensures that nothing a processor does after it has seen the new value produced by a write becomes visible to other processes before they too have seen the new value for that write. In effect, while coherence (write serialization) says that writes to the same location should appear to all processors to have occurred in the same order, sequential consistency says that all writes (to any location) should appear to all processors to have occurred in the same order. The following example shows why write atomicity is important.

Example:

Consider the three processes in Figure 3.4, which show how not preserving write atomicity violates sequential consistency. Since P2 waits until X becomes 3 and then sets Y to 80, and since P3 waits until Y becomes 80 and only then reads the value of X, from transitivity we would infer that P3 should find the value of X to be 3. If P2 is allowed to go on past the read of X and write Y before it is guaranteed that P3 has seen the new value of X, then P3 may read the new value of Y but the old value of X from its cache, violating our sequentially consistent intuition.
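The scenario can be replayed with a toy Python model in which each processor holds its own copy of X and Y and a write is delivered to each processor separately. The `caches` dictionary, the `deliver` helper, the initial values of 0, and the particular delivery order are assumptions made for illustration; they are not taken from Figure 3.4.

```python
# Toy model: every processor has its own copy of X and Y, and a write
# is delivered to each processor separately (so it is not atomic).
# Initial values of 0 are an assumption; the source does not state them.
caches = {p: {"X": 0, "Y": 0} for p in ("P1", "P2", "P3")}


def deliver(loc, value, target):
    """Make one write visible at one processor only."""
    caches[target][loc] = value


# P1 writes X = 3. The update reaches P2 quickly but is delayed on its way to P3.
deliver("X", 3, "P1")
deliver("X", 3, "P2")

# P2's wait on X ends, so it writes Y = 80; this update reaches P3 promptly.
p2_saw_x = caches["P2"]["X"]
deliver("Y", 80, "P2")
deliver("Y", 80, "P3")

# P3's wait on Y ends, so it reads X from its own copy, which is still stale.
y_seen_by_p3 = caches["P3"]["Y"]
x_seen_by_p3 = caches["P3"]["X"]

# Only now does P1's write of X arrive at P3, too late for the read above.
deliver("X", 3, "P3")

print(p2_saw_x, y_seen_by_p3, x_seen_by_p3)  # prints: 3 80 0
```

P3 observes the new Y together with the old X, which is exactly the outcome that write atomicity is meant to rule out.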

Each process’s program order imposes a partial order on the set of all operations; that is, it imposes an ordering on the subset of the operations that are issued by that process. An interleaving (in the above sense) of the operations from different processes defines a total order on the set of all operations. Since the exact interleaving is not defined by SC, interleaving the partial (program) orders for different processes may yield a large number of possible total orders.

The following definitions apply:

- Sequentially Consistent Execution - An execution of a program is said to be sequentially consistent if the results it produces are the same as those produced by any one of these possible total orders (interleavings as defined earlier). That is, there should exist a total order or interleaving of program orders from processes that yields the same result as that actual execution.

- Sequentially Consistent System - A system is sequentially consistent if any possible execution on that system corresponds to (produces the same results as) some possible total order as defined above.

Of course, an implicit assumption throughout is that a read returns the last value that was written to that same location (by any process) in the interleaved total order.
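These definitions can be checked mechanically for small programs. The sketch below enumerates every interleaving that preserves each process's program order, executes it against a single memory in which a read returns the last value written, and collects the possible results. The operation encoding, the register names `r1` to `r3`, the initial value 0, and the straight-line simplification of the three-process example (each wait replaced by a single read) are assumptions made for the illustration.

```python
def interleavings(programs):
    """Yield every total order that preserves each process's program order."""
    if all(len(p) == 0 for p in programs):
        yield []
        return
    for i, prog in enumerate(programs):
        if prog:
            # Take the next operation of process i, then interleave the rest.
            rest = programs[:i] + [prog[1:]] + programs[i + 1:]
            for tail in interleavings(rest):
                yield [prog[0]] + tail


def run(order):
    """Execute one total order; a read returns the last value written."""
    memory, regs = {}, {}
    for op, loc, arg in order:
        if op == "W":
            memory[loc] = arg                # arg is the value written
        else:
            regs[arg] = memory.get(loc, 0)   # arg is the destination register
    return tuple(sorted(regs.items()))


def is_sequentially_consistent(observed, programs):
    """True if some interleaving produces exactly the observed register values."""
    outcomes = {run(order) for order in interleavings(programs)}
    return tuple(sorted(observed.items())) in outcomes


# Straight-line version of the three-process example (waits become single reads).
p1 = [("W", "X", 3)]
p2 = [("R", "X", "r1"), ("W", "Y", 80)]
p3 = [("R", "Y", "r2"), ("R", "X", "r3")]
programs = [p1, p2, p3]

print(len(list(interleavings(programs))))  # 30 possible total orders
print(is_sequentially_consistent({"r1": 3, "r2": 80, "r3": 3}, programs))  # True
print(is_sequentially_consistent({"r1": 3, "r2": 80, "r3": 0}, programs))  # False
```

The second result shows that no interleaving lets P3 see Y = 80 and the old X once P2 has seen X = 3, so an execution producing that result is not sequentially consistent. Brute-force enumeration is only practical for tiny programs, since the number of interleavings grows very quickly.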

Relaxed Consistency Models

So

Conclusion

H

Using

I

Even

External links

1. Sequential hardware prefetching in shared-memory multiprocessors

2. Making Sequential Consistency Practical in Titanium

References

<references/>