CSC/ECE 506 Fall 2007/wiki3 1 satkar

Introduction

Cache organization plays a key role in modern computers, especially in multiprocessors. As the number of processors scales up, the cache miss rate tends to increase as well. A high cache miss rate is a cause for concern, since it can significantly limit the performance of a multiprocessor. Cache misses are broadly categorized into the “Three Cs”: compulsory misses, capacity misses, and conflict misses. Cache-coherent multiprocessors introduce a fourth category, called coherence misses. These occur when blocks of data are shared among multiple caches, and are of two types:

• True sharing: When a data word produced by one processor is used by another processor, it is called true sharing. True sharing is intrinsic to the memory reference stream of a program and does not depend on the block size.

• False sharing: When independent data words used by different processors are placed in the same block, it is called false sharing.

Increasing the cache line size helps reduce the miss rate, since each line holds more words and a single fetch brings in neighboring data. However, long cache lines may cause false sharing when different processors access different words in the same cache line. In essence, the processors share the line without truly sharing the data they access.
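The distinction between the two kinds of sharing can be illustrated with a minimal sketch. The helper names `block_of` and `classify_sharing`, the use of byte addresses, and the 4-byte word size are assumptions made for illustration only. The sketch classifies a pair of accesses made by two different processors, at least one of which is a write.

```python
def block_of(addr: int, block_size: int) -> int:
    """Return the index of the cache block that holds byte address addr."""
    return addr // block_size


def classify_sharing(addr_a: int, addr_b: int, block_size: int,
                     word_size: int = 4) -> str:
    """Classify two accesses made by different processors.

    Assumes at least one of the two accesses is a write; word_size is an
    assumed word width in bytes.
    """
    if addr_a // word_size == addr_b // word_size:
        return "true sharing"     # both processors touch the same data word
    if block_of(addr_a, block_size) == block_of(addr_b, block_size):
        return "false sharing"    # different words that live in one block
    return "no sharing"           # the words fall in different blocks


if __name__ == "__main__":
    # The same pair of addresses changes category as the block grows.
    for size in (16, 32, 64):
        print(size, classify_sharing(0, 40, size))
```

In this sketch the result for two different words depends on the block size, while the result for a single shared word does not, which mirrors the observation that true sharing is independent of block size.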

Problem with False Sharing

In multiprocessors, accessing a memory location causes a slice of memory (a cache line) containing the requested location to be copied into the cache. Subsequent references to the same location, or to locations near it, can usually be satisfied from the cache until the system determines that it must maintain coherency between cache and memory and writes the cache line back to memory.

However, when multiple processors update different elements in the same cache line, the entire line is invalidated even though the updates are independent of each other. Each update to an individual element marks the copies of the line held in other caches as invalid. Other processors that access a different element in the same line therefore find it invalid and are forced to fetch a fresh copy of the line from memory, even though the element they access has not been modified. This is because cache coherency is maintained per cache line, not per element. The result is increased interconnect traffic and overhead. In addition, while the cache-line update is in progress, access to the elements in the line is inhibited.

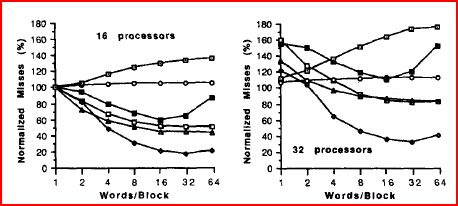

This situation is called false sharing, and it can become a bottleneck for performance and scalability. A very important parameter that affects false sharing is the cache block size. In uniprocessors, a larger block size exploits more spatial locality, which tends to decrease the miss rate. In multiprocessors, however, a larger block size not only exploits more spatial locality but also increases the probability of false sharing. Thus the miss rate can go either up or down as the block size grows. The accompanying graph shows the miss rate as a function of block size for 16 and 32 processors. It depicts a significant increase in the miss rate as the block size increases, due to false sharing.
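The effect of block size on coherence misses can be reproduced with a toy simulation. This is only a sketch under stated assumptions: caches are infinite, the protocol is a simplified write-invalidate scheme, and the function names `count_misses` and `private_counter_trace` are hypothetical. It does not reproduce the measurements behind the graph. Each processor writes only its own 4-byte word, so the trace contains no true sharing and no spatial locality to exploit.

```python
from collections import defaultdict


def count_misses(trace, block_size):
    """Count misses for a trace of (processor, byte_address, is_write) tuples.

    Assumes infinite caches and a simple write-invalidate protocol, so every
    miss is either a cold miss or a coherence miss.
    """
    sharers = defaultdict(set)   # block index -> processors with a valid copy
    misses = 0
    for proc, addr, is_write in trace:
        block = addr // block_size
        if proc not in sharers[block]:
            misses += 1                  # no valid copy: fetch the block
            sharers[block].add(proc)
        if is_write:
            sharers[block] = {proc}      # invalidate every other copy
    return misses


def private_counter_trace(num_procs, iterations, word_size=4):
    """Each processor repeatedly writes its own word, interleaved round-robin."""
    return [(p, p * word_size, True)
            for _ in range(iterations)
            for p in range(num_procs)]


if __name__ == "__main__":
    trace = private_counter_trace(num_procs=4, iterations=100)
    for block_size in (4, 8, 16, 32):
        print(block_size, count_misses(trace, block_size))
```

Under these assumptions, a 4-byte block yields only the 4 cold misses, whereas any larger block places words of different processors in one block and makes every one of the 400 writes miss. A trace with spatial locality would pull the other way, which is why the net effect of a larger block can go in either direction.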

Strategies to combat “False Sharing”

Proper Block Sizing

An algorithm can be designed to select block sizes that minimize bus traffic through the use of variable (static) size blocks; that is, the block size varies over the memory space of the program, but any given word is assigned to one fixed block size for the entire program execution. Starting with each used word in the memory space as its own block, neighboring blocks are combined if the combined block produces less bus traffic than the separate blocks. When neighboring words have similar access patterns, and it is useful to prefetch one while demand-fetching the other, grouping the words (or blocks) into a single unit reduces traffic because fewer addresses are transmitted over the bus. When false sharing generates excessive traffic, the problem blocks are isolated by not combining them into larger units.
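The combining step described above can be sketched as a greedy merge. This is a minimal illustration, not the published algorithm: the traffic model (one address plus the block's words per miss, infinite caches, write-invalidate, no charge for invalidation messages), the constant `ADDRESS_COST`, and the function names `bus_traffic` and `choose_blocks` are all assumptions. Blocks are written as `(first_word, size_in_words)` pairs.

```python
ADDRESS_COST = 1   # assumed bus cost of sending one address, in word units


def bus_traffic(trace, blocks):
    """Bus traffic for a trace of (processor, word, is_write) tuples.

    blocks is a list of (first_word, size) pairs covering every word used.
    Simplification: only block fetches are charged, not invalidations.
    """
    word_to_block = {}
    for start, size in blocks:
        for word in range(start, start + size):
            word_to_block[word] = (start, size)
    sharers = {}                 # block -> processors holding a valid copy
    traffic = 0
    for proc, word, is_write in trace:
        block = word_to_block[word]
        holders = sharers.setdefault(block, set())
        if proc not in holders:
            traffic += ADDRESS_COST + block[1]   # address + words fetched
            holders.add(proc)
        if is_write:
            sharers[block] = {proc}              # invalidate other copies
    return traffic


def choose_blocks(trace, num_words):
    """Start with one-word blocks; merge neighbors while traffic decreases."""
    blocks = [(w, 1) for w in range(num_words)]
    improved = True
    while improved:
        improved = False
        for i in range(len(blocks) - 1):
            start, size = blocks[i]
            next_size = blocks[i + 1][1]
            merged = blocks[:i] + [(start, size + next_size)] + blocks[i + 2:]
            if bus_traffic(trace, merged) < bus_traffic(trace, blocks):
                blocks = merged
                improved = True
                break            # rescan from the start after each merge
    return blocks


if __name__ == "__main__":
    # Processor 0 reads words 0 and 1 and writes word 2;
    # processor 1 writes word 3, interleaved with processor 0's writes.
    trace = [(0, 0, False), (0, 1, False)] + [(0, 2, True), (1, 3, True)] * 10
    print(choose_blocks(trace, 4))
```

For this example trace the sketch groups words 0 to 2, which only processor 0 touches, into one block and leaves word 3 on its own, since merging it would introduce false sharing between the two writers.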

Data placement optimizations

(a) SplitScalar: Place scalar variables that cause false sharing in different blocks.

(b) Heap Allocate: Allocate shared space from different heap regions according to which processor requests the space. It is common for a slave process to access the shared space that it requested itself. If no action is taken, space allocated by different processes may share the same cache block and lead to false sharing.

(c) Expand Record: Expand records in an array (padding with dummy words) to reduce the sharing of a cache block by different records. While successful prefetching may occur within a record or across records, false sharing usually occurs across records, when more than one of them share the same cache block.

(d) Align Record: Choose a layout for arrays of records that minimizes the number of blocks the average record spans. This optimization maximizes prefetching of the rest of the record when one word of a record is accessed, and may also reduce false sharing.

(e) LockScalar: Place active scalars that are protected by a lock in the same block as the lock variable. As a result, the scalar is prefetched when the lock is accessed.

False sharing is caused by a mismatch between the memory layout of the write-shared data and the cross-processor memory reference pattern to it. Manually changing the placement of this data to better conform to the memory reference pattern can reduce false sharing by up to 75%.
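The Expand Record idea can be illustrated by comparing the layout of an array of per-processor records with and without padding. This is a sketch that uses Python's `ctypes` only to compute C-style struct sizes. The 64-byte block size, the record types `Counter` and `PaddedCounter`, and the helper `blocks_touched` are assumptions for illustration, and the array is assumed to start on a block boundary.

```python
import ctypes

CACHE_LINE = 64   # assumed cache block size in bytes


class Counter(ctypes.Structure):
    """Unpadded record: one 8-byte counter per processor."""
    _fields_ = [("value", ctypes.c_int64)]


class PaddedCounter(ctypes.Structure):
    """Expanded record: dummy bytes pad the record to a full cache block."""
    _fields_ = [
        ("value", ctypes.c_int64),
        ("_pad", ctypes.c_char * (CACHE_LINE - ctypes.sizeof(ctypes.c_int64))),
    ]


def blocks_touched(record_type, count):
    """Block index of each record in an array, relative to the array start.

    Assumes the array itself begins on a cache block boundary.
    """
    size = ctypes.sizeof(record_type)
    return [(i * size) // CACHE_LINE for i in range(count)]


if __name__ == "__main__":
    print(blocks_touched(Counter, 4))         # all records share one block
    print(blocks_touched(PaddedCounter, 4))   # one record per block
```

The padded layout trades memory for isolation: each record occupies its own block, so a write by one processor no longer invalidates the records of the others.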

References

[1] Josep Torrellas, Monica S. Lam, and John L. Hennessy, “False Sharing and Spatial Locality in Multiprocessor Caches.”

[2] Jeffrey B. Rothman and Alan Jay Smith, “Analysis of Shared Memory Misses and Reference Patterns.”