CSC/ECE 506 Spring 2013/12a cm

Interconnection Network Architecture

In a multiprocessor system, processors need to communicate with each other and access each other's resources. To route data and messages between processors, an interconnection architecture is needed.

Typically, in a multiprocessor system, messages passed between processors are frequent and short[1]. The interconnection network must therefore deliver each message quickly (low latency) and carry many messages at the same time (high bandwidth).

In a network, a processor along with its cache and memory is considered a node. The physical wires that connect nodes are called links. The device that routes messages between nodes is called a router. The shape of the network, meaning the number of links and routers and how they are connected, is called the network topology.
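As a rough illustration of these terms, the sketch below models a topology as a set of links between numbered nodes and measures two properties: the link count and the diameter (the largest number of hops between any two nodes). The function names `ring`, `mesh_2d`, and `diameter` are hypothetical, and the sketch assumes a direct network in which every node has its own router, so routers are not modeled separately.

```python
from collections import deque


def ring(n):
    """Links of an n-node ring, each stored as a (low, high) pair."""
    return {tuple(sorted((i, (i + 1) % n))) for i in range(n)}


def mesh_2d(rows, cols):
    """Links of a rows x cols 2-D mesh; node id = row * cols + col."""
    links = set()
    for r in range(rows):
        for c in range(cols):
            node = r * cols + c
            if c + 1 < cols:          # link to the right-hand neighbor
                links.add((node, node + 1))
            if r + 1 < rows:          # link to the neighbor below
                links.add((node, node + cols))
    return links


def diameter(num_nodes, links):
    """Largest shortest-path hop count, found by BFS from every node."""
    adj = {n: set() for n in range(num_nodes)}
    for a, b in links:
        adj[a].add(b)
        adj[b].add(a)
    worst = 0
    for start in range(num_nodes):
        dist = {start: 0}
        queue = deque([start])
        while queue:
            cur = queue.popleft()
            for nxt in adj[cur]:
                if nxt not in dist:
                    dist[nxt] = dist[cur] + 1
                    queue.append(nxt)
        worst = max(worst, max(dist.values()))
    return worst


if __name__ == "__main__":
    for name, links in (("ring", ring(16)), ("4x4 mesh", mesh_2d(4, 4))):
        print(name, "links:", len(links), "diameter:", diameter(16, links))
```

For 16 nodes, the mesh uses more links than the ring but has a smaller diameter, which is the kind of cost-versus-latency trade-off that distinguishes one topology from another.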

History of Network Topologies

Hypercube topologies were invented in the 1980s and had desirable characteristics when the number of nodes was small (about 1000 at most, often fewer than 100) and every processor had to stop working to receive and forward a message [20]. The low-radix era began in 1985 and was defined by routers with between 4 and 8 ports, using toroidal, mesh, or fat-tree topologies and wormhole routing. This era lasted about 20 years, until it was determined that routers with dozens of ports offered superior performance. Two topologies, the flattened butterfly and the dragonfly, were developed to take advantage of the new high-radix routers. Both fall somewhere between two extremes: a mesh in which each point is a router (or a virtual router, in the case of dragonfly) with dozens or hundreds of nodes attached, and a fat tree with arity high enough to need only two levels.
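To make the hypercube concrete, here is a minimal sketch assuming the usual binary labeling, in which two nodes are linked exactly when their labels differ in one bit. The function names are hypothetical, and the dimension-order route shown is one common routing choice used for illustration, not something the text above prescribes.

```python
def hypercube_neighbors(node, dims):
    """Neighbors of `node` in a dims-dimensional hypercube (flip one bit each)."""
    return [node ^ (1 << d) for d in range(dims)]


def hop_count(src, dst):
    """Minimum hops between two nodes: the number of differing label bits."""
    return bin(src ^ dst).count("1")


def dimension_order_route(src, dst, dims):
    """One shortest path, correcting differing bits from lowest to highest."""
    path = [src]
    cur = src
    for d in range(dims):
        if (cur ^ dst) & (1 << d):    # labels still differ in dimension d
            cur ^= 1 << d             # cross the link along that dimension
            path.append(cur)
    return path


if __name__ == "__main__":
    print(hypercube_neighbors(0b000, 3))
    print(hop_count(0b000, 0b111))
    print(dimension_order_route(0b000, 0b101, 3))
```

Under this labeling, a hypercube with 2^d nodes needs d links per node, so a 1024-node machine needs 10 ports per router, and every intermediate node on a path has to forward the message.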

Interconnection evolution in the Top500 List

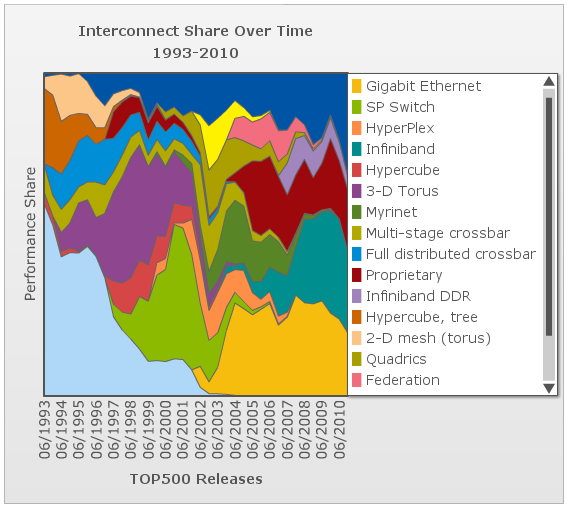

This chart shows how the dominance of different interconnect topologies in the Top500 list of supercomputers has evolved over time [7]. As one can see, many technologies came into vogue briefly before losing performance share and disappearing. In the early days of the list, most computers report the interconnect type as not applicable. However, the trailing end of the hypercube phase is clear in burnt orange. The dark blue at the top is "other" and the dark red in the middle is "proprietary", so we can only speculate about which topologies those systems employ. The toroidal mesh appears briefly at the start in a cream color and slightly outlasts the hypercube. The two crossbar technologies (blue and olive) followed the toroidal mesh. The fully distributed crossbar died out quickly; the multi-stage crossbar lasted longer but was never dominant. The 3-D torus (purple) dominates much of the 1990s, with hypercube topologies (dark pink) enjoying a short comeback in the later part of the decade. SP Switch (light olive), an IBM interconnect technology that uses a multi-stage crossbar switch, replaced the 3-D torus [8]. Myrinet, Quadrics, and Federation shared the spotlight in the mid-2000s; each used a similar fat-tree topology [9][10].

The current class of supercomputers is dominated by nodes connected with either InfiniBand or Gigabit Ethernet. Both can be connected in either a fat-tree or a 2-D mesh topology. The primary difference between them is speed: InfiniBand is considerably faster per link and allows links to be ganged into groups of 4 or 12. Gigabit Ethernet is vastly less expensive, however, and some supercomputer designers have apparently chosen to save money on the interconnect in order to afford faster nodes [11][12].

References

1. http://www.real-knowledge.com/memory.htm

2. Computer Design & Technology, lecture slides by Prof. Eric Rotenberg

3. Fundamentals of Parallel Computer Architecture by Prof. Yan Solihin

4. “Cache write policies and performance,” Norman Jouppi, Proc. 20th International Symposium on Computer Architecture (ACM Computer Architecture News 21:2), May 1993, pp. 191–201.

5. Architecture of Parallel Computers, Lecture slides by Prof. Edward Gehringer

6. “Parallel computer architecture: a hardware/software approach” by David E. Culler, Jaswinder Pal Singh, Anoop Gupta (p. 887)

7. “Evaluating Stream Buffers as a Secondary Cache Replacement” by Subbarao Palacharla and R. E. Kessler. (ref#2)

8. http://en.wikipedia.org/wiki/Instruction_prefetch

9. http://www.real-knowledge.com/memory.htm

10. Yan Solihin, Fundamentals of Parallel Computer Architecture (Solihin Publishing and Consulting, LLC, 2008-2009), p. 151

11. "Intel® 64 and IA-32 Architectures Optimization Reference Manual", Intel Corporation, 1997-2011. http://www.intel.com/Assets/PDF/manual/248966.pdf

12. "Software Optimization Guide for AMD Family 10h and 12h Processors", Advanced Micro Devices, Inc., 2006–2010. http://support.amd.com/us/Processor_TechDocs/40546.pdf

13. http://en.wikipedia.org/wiki/SPARC

14. http://en.wikipedia.org/wiki/Athlon_II

15. http://en.wikipedia.org/wiki/Phenom_II

16. http://en.wikipedia.org/wiki/List_of_Intel_Core_i5_microprocessors

17. http://en.wikipedia.org/wiki/List_of_Intel_microprocessors

18. http://www.cpu-world.com/

19. http://en.wikipedia.org/wiki/CPU_cache#History

20. http://www.multicoreinfo.com/prefetching-multicore-processors/