CSC/ECE 506 Spring 2012/7b yw

Introduction

In this chapter we first introduce the concept of virtual memory and, more precisely, virtually addressed caches, and discuss their pros and cons. We then discuss the need for a translation lookaside buffer (TLB), a cache-like structure that translates virtual addresses to physical addresses. Finally, we raise the issue of TLB coherence.

Virtually addressed caches

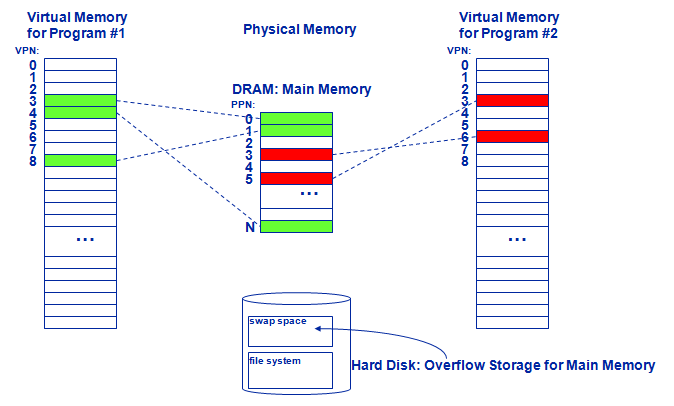

Virtual memory is a memory management technique developed for multitasking operating systems. It virtualizes a computer architecture's various forms of data storage (such as random-access memory and disk storage). This allows a program to be designed as though there were only one kind of memory, "virtual" memory, which behaves like directly addressable read/write memory (RAM).

This technique greatly simplifies the programmer's job, because a modern operating system runs each process in its own dedicated virtual address space. Each program therefore runs as if it had sole access to memory, and programmers do not have to worry about how the operating system switches between processes or how other processes operate. Virtual memory also makes application programming easier in three ways. It hides fragmentation of physical memory. It delegates to the kernel the burden of managing the memory hierarchy, which eliminates the need for the program to handle overlays explicitly. And when each process runs in its own dedicated address space, it removes the need to relocate program code or to access memory with relative addressing.

The introduction of virtual memory raises the problem of translating virtual addresses to physical addresses, since the physical address is needed to access the actual contents stored in memory (or in a physically addressed cache). This is where the translation lookaside buffer (TLB) comes into use.
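To make the translation problem concrete, here is a minimal Python sketch. It assumes 4 KB pages and a single-level page table modeled as a dictionary from virtual page number (VPN) to physical frame number (PFN). The names `translate`, `page_table_a`, and `page_table_b` are hypothetical. The sketch shows two processes that use the same virtual address but reach different physical locations, because each process has its own page table.

```python
PAGE_SIZE = 4096                          # assumed page size: 4 KB
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 12 offset bits for 4 KB pages


def translate(page_table: dict[int, int], vaddr: int) -> int:
    """Translate a virtual address using a single-level page table.

    page_table maps virtual page number (VPN) -> physical frame number (PFN).
    A missing VPN raises KeyError, standing in for a page fault.
    """
    vpn = vaddr >> OFFSET_BITS          # upper bits select the page
    offset = vaddr & (PAGE_SIZE - 1)    # lower bits are unchanged by translation
    pfn = page_table[vpn]
    return (pfn << OFFSET_BITS) | offset


# Hypothetical per-process page tables: both processes map VPN 0x2.
page_table_a = {0x2: 0x51}
page_table_b = {0x2: 0x9C}

vaddr = 0x2ABC
print(hex(translate(page_table_a, vaddr)))  # 0x51abc
print(hex(translate(page_table_b, vaddr)))  # 0x9cabc
```

Doing this lookup in the in-memory page table on every access would be slow. Caching recent translations is exactly the role of the TLB.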

What is a TLB

A TLB has a fixed number of slots containing page table entries, which map virtual addresses to physical addresses. Virtual memory is the address space seen by a process, and this space is divided into pages of a fixed size. The page table (generally kept in main memory) records where each virtual page is loaded in physical memory. The TLB is a cache of the page table; that is, only a subset of the page table's contents is stored in it. The TLB references physical memory addresses in its table.

The TLB may reside between the CPU and the CPU cache, between the CPU cache and main memory, or between levels of a multi-level cache. Its placement determines whether the cache uses physical or virtual addressing. If the cache is virtually addressed, requests are sent directly from the CPU to the cache, and the TLB is accessed only on a cache miss. If the cache is physically addressed, the CPU performs a TLB lookup on every memory operation, and the resulting physical address is sent to the cache. Both implementations have pros and cons. A virtually addressed cache uses part of the virtual address as its key, optionally together with an "address space identifier" (ASID). Caches without ASIDs must be flushed on every context switch in a multiprocessing environment, because the same virtual address can refer to different physical data in different processes. In a Harvard architecture or a hybrid thereof, instructions and data may have separate virtual address spaces or separate memory access hardware. This can lead to a distinct TLB for each access type.

A common optimization for physically addressed caches is to perform the TLB lookup in parallel with the cache access. The low-order bits of any virtual address (e.g., the lower 12 bits in a virtual memory system with 4 KB pages) represent the offset of the desired address within the page, so they do not change during virtual-to-physical translation.
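The ASID trade-off described above can be sketched as a simplified Python model. This is not a hardware description: entries live in an unbounded dictionary, and the class name `SimpleTLB` and its methods are hypothetical. With ASIDs, the key is the pair (ASID, VPN), so entries from different processes can coexist. Without ASIDs, the key is the VPN alone, and the structure is emptied on every context switch so that one process never uses another process's translation.

```python
class SimpleTLB:
    """Toy TLB: maps (asid, vpn) or vpn -> pfn. Capacity limits are ignored."""

    def __init__(self, use_asid: bool):
        self.use_asid = use_asid
        self.entries: dict = {}
        self.current_asid = 0

    def _key(self, vpn: int):
        # With ASIDs, the tag includes the address space identifier.
        return (self.current_asid, vpn) if self.use_asid else vpn

    def lookup(self, vpn: int):
        """Return the cached PFN, or None on a TLB miss."""
        return self.entries.get(self._key(vpn))

    def fill(self, vpn: int, pfn: int) -> None:
        self.entries[self._key(vpn)] = pfn

    def context_switch(self, new_asid: int) -> None:
        if not self.use_asid:
            # Without ASIDs, the remaining translations belong to the old
            # process, so the whole structure must be flushed.
            self.entries.clear()
        self.current_asid = new_asid


for use_asid in (True, False):
    tlb = SimpleTLB(use_asid)
    tlb.context_switch(1)
    tlb.fill(0x2, 0x51)      # process 1 caches VPN 0x2 -> PFN 0x51
    tlb.context_switch(2)
    tlb.fill(0x2, 0x9C)      # process 2 caches its own VPN 0x2
    tlb.context_switch(1)    # switch back to process 1
    print(use_asid, tlb.lookup(0x2))
# prints "True 81": process 1's entry (0x51) survived both switches
# prints "False None": the flushes discarded it, so it must be refilled
```

The ASID version avoids refilling after every switch, at the cost of storing a wider tag in each entry.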
During a cache access, two steps are performed: an index is used to find an entry in the cache's data store, and then the tags of the cache line found are compared. If the cache is structured so that it can be indexed using only the bits that do not change in translation, the cache can perform its index operation while the TLB translates the upper bits of the address. The translated address from the TLB is then passed to the cache, which performs a tag comparison to determine whether the access was a hit or a miss. It is also possible to perform the TLB lookup in parallel with the cache access even if the cache must be indexed using some bits that may change during address translation.
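The condition above, that the cache index must come only from bits unchanged by translation, reduces to simple arithmetic. The set-index bits plus the block-offset bits must fit within the page-offset bits, which is equivalent to requiring cache size / associativity ≤ page size. The helper below is a hypothetical sketch of that check, assuming all sizes are powers of two.

```python
def index_fits_in_page_offset(cache_bytes: int, ways: int,
                              block_bytes: int, page_bytes: int) -> bool:
    """Return True if a set-associative cache can be indexed using only
    page-offset bits, so indexing can overlap with the TLB lookup.

    All sizes are assumed to be powers of two.
    """
    num_sets = cache_bytes // (ways * block_bytes)
    index_bits = num_sets.bit_length() - 1           # log2(number of sets)
    block_offset_bits = block_bytes.bit_length() - 1  # log2(block size)
    page_offset_bits = page_bytes.bit_length() - 1    # log2(page size)
    return index_bits + block_offset_bits <= page_offset_bits


# 32 KB, 8-way, 64-byte blocks, 4 KB pages: 64 sets -> 6 + 6 = 12 bits, fits.
print(index_fits_in_page_offset(32 * 1024, 8, 64, 4096))   # True
# 64 KB, 4-way, 64-byte blocks, 4 KB pages: 256 sets -> 8 + 6 = 14 bits, does not fit.
print(index_fits_in_page_offset(64 * 1024, 4, 64, 4096))   # False
```

As the second case suggests, a larger cache can keep its index within the page offset only by raising associativity. Otherwise it must use some index bits that change during translation.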

![TLB](tlb.PNG)

The figure below shows how a typical TLB generally operates. Here X is the virtual page number to be translated into a physical page number, X' is the physical page number corresponding to X, and V is the virtual page number of a victim page (that is, the page whose entry is evicted from the TLB to make room).
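The flow in the figure can be sketched as a minimal Python model. It assumes a fully associative TLB with a small fixed number of slots and least-recently-used (LRU) replacement. The figure does not specify a replacement policy, so LRU is an assumption here, and the names `TLB`, `translate`, and `page_table` are hypothetical. On a hit, the TLB returns X' directly. On a miss, it reads X' from the page table, and if every slot is occupied, it first evicts a victim entry V to make room.

```python
from collections import OrderedDict


class TLB:
    """Fully associative TLB with LRU replacement (a simplified model)."""

    def __init__(self, num_slots: int, page_table: dict[int, int]):
        self.num_slots = num_slots
        self.page_table = page_table  # VPN -> PFN, the backing page table
        self.slots = OrderedDict()    # cached VPN -> PFN, oldest use first

    def translate(self, x: int):
        """Translate virtual page number x.

        Returns (x_prime, victim): x_prime is the physical page number and
        victim is the evicted virtual page number V, or None if no eviction.
        A VPN missing from the page table raises KeyError (a page fault).
        """
        if x in self.slots:                      # TLB hit
            self.slots.move_to_end(x)            # mark as most recently used
            return self.slots[x], None
        x_prime = self.page_table[x]             # TLB miss: walk the page table
        victim = None
        if len(self.slots) >= self.num_slots:    # all slots full: evict V
            victim, _ = self.slots.popitem(last=False)  # least recently used
        self.slots[x] = x_prime
        return x_prime, victim


page_table = {0: 7, 1: 3, 2: 9}
tlb = TLB(num_slots=2, page_table=page_table)
print(tlb.translate(0))  # (7, None)  miss, free slot available
print(tlb.translate(1))  # (3, None)  miss, free slot available
print(tlb.translate(0))  # (7, None)  hit; VPN 0 becomes most recently used
print(tlb.translate(2))  # (9, 1)     miss; VPN 1 is the LRU victim V
```

Real TLBs often use cheaper approximations of LRU (or random replacement). The exact policy only changes which entry becomes V.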

![TLB operation](tlbOP.PNG)

Next in this chapter, we discuss the problems that arise when this TLB structure is extended to multiprocessor systems. Solutions to these problems are presented in the next chapter.