CSC/ECE 506 Spring 2011/ch11 sw

This article discusses the design of scalable shared memory multiprocessors. Bus-based multiprocessors have the disadvantage of not scaling well. Distributed Shared Memory (DSM) was introduced to address this; in a DSM, accessing different parts of memory takes different amounts of time. A DSM is therefore more scalable than a bus-based multiprocessor, but as the size of the DSM increases, so does the cost of the hardware support it needs.

This wiki chapter introduces the approaches used to scale multiprocessors, describes the cache coherence protocols for a basic DSM, and explains how race conditions are handled.

Approaches to Large-Scale Multiprocessors

The two main factors limiting the scalability of a bus-based multiprocessor are:

- Physical scalability

- Protocol scalability

As mentioned in Section 11.1 of Solihin's book, a directory protocol using a point-to-point interconnection is the best option. In a directory-based protocol, the directory is a structure that holds information about which caches have a copy of each block. A requester must therefore contact the directory to get the list of caches that hold a copy of the block, and only those caches are sent a request, which avoids broadcasting. Compared with broadcast, the directory-based protocol saves traffic in cases where data sharing occurs for read-only data.
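The difference between consulting a directory and broadcasting can be sketched as follows. This is a minimal illustration, not a real protocol: the `Directory` class, its method names, and the 16-cache system size are all hypothetical, and protocol states and messages are omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Directory:
    """Toy directory: maps a block address to the set of caches holding a copy."""
    sharers: dict = field(default_factory=dict)

    def record_read(self, block: int, cache_id: int) -> None:
        # Remember that cache_id now holds a copy of the block.
        self.sharers.setdefault(block, set()).add(cache_id)

    def handle_write(self, block: int, writer: int) -> set:
        """Return the caches to invalidate, then make the writer the sole holder."""
        to_invalidate = self.sharers.get(block, set()) - {writer}
        self.sharers[block] = {writer}
        return to_invalidate


def broadcast_targets(num_caches: int, writer: int) -> set:
    # A broadcast protocol would have to contact every other cache.
    return set(range(num_caches)) - {writer}


directory = Directory()
directory.record_read(block=0x40, cache_id=1)
directory.record_read(block=0x40, cache_id=5)

targeted = directory.handle_write(block=0x40, writer=1)
print("contacted via directory:", sorted(targeted))
print("contacted via broadcast:", len(broadcast_targets(num_caches=16, writer=1)))
```

With two sharers in a hypothetical 16-cache system, the directory lets the writer contact one cache instead of fifteen.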

Design considerations

The following design decisions are chosen to implement a DSM:

- A straightforward physical-address-to-memory mapping function is used.

- Memory is considered to be linear and interleaving is avoided.

- Page allocation policies such as Least Recently Used, Round Robin, etc. can be used.
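As a rough illustration of the first two decisions, a linear, non-interleaved mapping can locate the home node of a physical address with a single integer division. The function name `home_node`, the 1 GiB per-node memory size, and the four-node system are assumptions made for this sketch only.

```python
def home_node(phys_addr: int, mem_per_node: int, num_nodes: int) -> int:
    """Return the node whose local memory holds phys_addr.

    Assumes a linear layout: node 0 owns the first mem_per_node bytes,
    node 1 the next mem_per_node bytes, and so on (no interleaving).
    """
    if not 0 <= phys_addr < mem_per_node * num_nodes:
        raise ValueError("physical address out of range")
    return phys_addr // mem_per_node


MEM_PER_NODE = 1 << 30  # hypothetical: 1 GiB of memory per node
NUM_NODES = 4           # hypothetical node count

print(home_node(0x0000_1000, MEM_PER_NODE, NUM_NODES))
print(home_node(0x4000_0000, MEM_PER_NODE, NUM_NODES))
```

Because consecutive addresses stay on the same node until a node's memory is exhausted, the home of any address depends only on its high-order bits.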

Also, to implement the directory structure, we have the following options:

- Cache-based or memory-based directory.

- Centralized or distributed directory.

A distributed directory approach is more scalable. To implement such a protocol, the directory can keep track of which caches hold a copy of a block using one of the following formats [Solihin, 11.2.1]:

- Full-bit vector format

- Coarse-bit vector format

- Limited pointer format

- Sparse directory format
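The storage cost of the first three formats can be compared with a short calculation. The sketch below counts only the sharer-tracking bits per block and ignores state bits; the function names, the 1024-cache system, the group size of 4, and the 4 pointers are illustrative assumptions. The sparse directory format is not modeled because it reduces the number of directory entries rather than the bits per entry.

```python
import math


def full_bit_vector_bits(num_caches: int) -> int:
    # One presence bit per cache.
    return num_caches


def coarse_bit_vector_bits(num_caches: int, group_size: int) -> int:
    # One presence bit per group of group_size caches.
    return math.ceil(num_caches / group_size)


def limited_pointer_bits(num_caches: int, num_pointers: int) -> int:
    # Each pointer stores a cache ID, which needs ceil(log2(num_caches)) bits.
    bits_per_pointer = (num_caches - 1).bit_length()
    return num_pointers * bits_per_pointer


def overhead_ratio(bits_per_block: int, block_bytes: int = 64) -> float:
    # Directory bits as a fraction of the data bits in one block.
    return bits_per_block / (block_bytes * 8)


P = 1024  # hypothetical number of caches
for name, bits in [
    ("full-bit vector", full_bit_vector_bits(P)),
    ("coarse-bit vector (groups of 4)", coarse_bit_vector_bits(P, 4)),
    ("limited pointer (4 pointers)", limited_pointer_bits(P, 4)),
]:
    print(f"{name}: {bits} bits per block, overhead {overhead_ratio(bits):.1%}")
```

Under these assumptions the full-bit vector needs more directory bits than a 64-byte block has data bits, which is why the more compact formats matter for large systems.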

These formats can be used either exclusively or in combination. The last design issue is where the directory information is physically located. The following choices exist:

- Allocating a part of main memory

- Allocating separate memory

- On the same chip as the processor

Either SRAM or DRAM can be used for storage of the directory information. Based on these design options, the next section discusses the DSM cache coherence protocols.

DSM Cache Coherence Protocol

There are variants of three major cache coherence protocols that have been implemented in commercial DSM machines, and other protocols have been proposed in the research community. The protocols differ in terms of directory organization (and therefore directory memory overhead), the number and types of messages exchanged between nodes, the direct protocol processing overhead, and inherent scalability features.

This section discusses the Scalable Coherent Interface (SCI) protocol for maintaining cache coherence. A distributed shared-memory multiprocessor provides shared memory at the software level, while the actual hardware implementation is a distributed message-passing system. The IEEE Standard for Scalable Coherent Interface (SCI) includes a protocol for maintaining cache coherence among the distributed components in such a distributed shared-memory multiprocessor.[1]

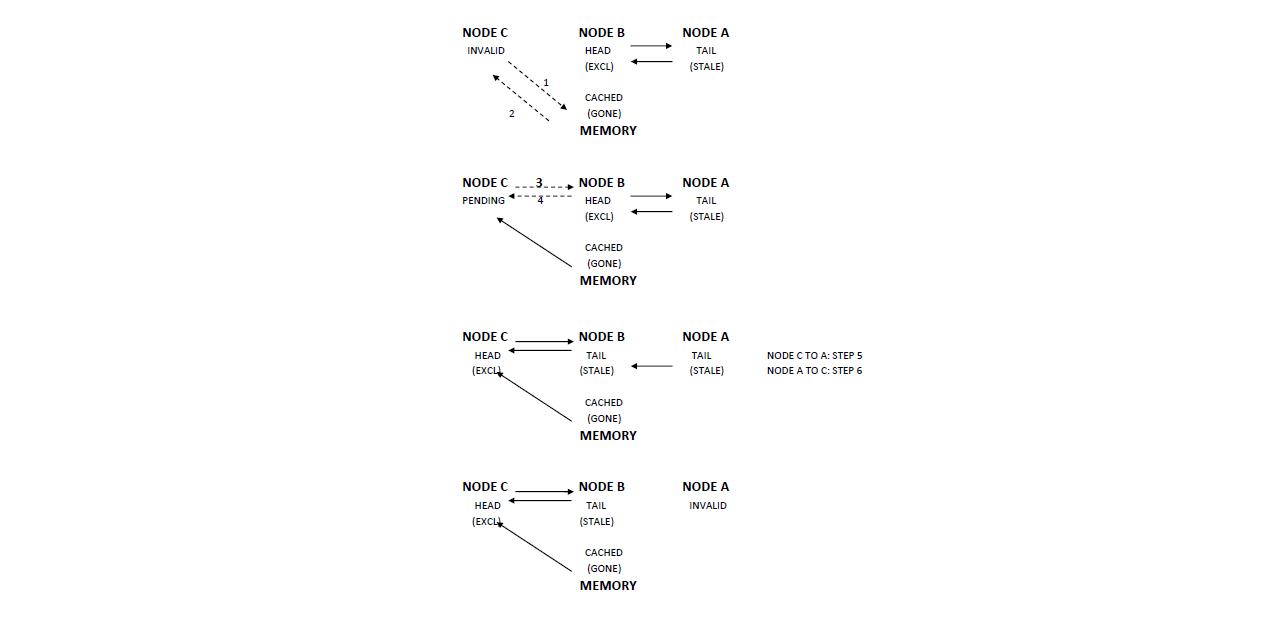

SCI defines a chained-directory-based protocol. Memory that can be coherently cached is divided into 64-byte lines. For each line, a distributed directory records the nodes whose caches contain a copy of the line. A doubly-linked list called the sharing list is used to implement the directory. Each memory line is associated with state information and a pointer to the head of the sharing list. Each cache line has a forward and a backward pointer. Cache lines also contain information about the cached data and the line's position (head, mid, tail) in the sharing list. The SCI cache coherence protocol is based on write invalidation. A head line can become exclusive by invalidating the rest of the sharing list.
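The sharing list described above can be sketched as an ordinary doubly-linked list. This is a simplified model and not the IEEE 1596 state machine: the class and method names are hypothetical, new sharers are assumed to be prepended at the head, and all messages and intermediate states are omitted.

```python
from typing import Optional


class CacheLine:
    def __init__(self, node_id: int) -> None:
        self.node_id = node_id
        self.valid = True
        self.forward: Optional["CacheLine"] = None   # toward the tail
        self.backward: Optional["CacheLine"] = None  # toward the head


class MemoryLine:
    def __init__(self) -> None:
        # Memory keeps only a pointer to the head of the sharing list.
        self.head: Optional[CacheLine] = None

    def attach(self, line: CacheLine) -> None:
        """Prepend a new sharer; it becomes the head of the sharing list."""
        line.forward = self.head
        line.backward = None
        if self.head is not None:
            self.head.backward = line
        self.head = line

    def sharers(self) -> list:
        """Walk the forward pointers and return node IDs from head to tail."""
        ids, cur = [], self.head
        while cur is not None:
            ids.append(cur.node_id)
            cur = cur.forward
        return ids

    def make_head_exclusive(self) -> list:
        """Invalidate every entry behind the head; return the invalidated node IDs."""
        invalidated = []
        if self.head is None:
            return invalidated
        cur = self.head.forward
        while cur is not None:
            nxt = cur.forward
            cur.valid = False
            cur.forward = cur.backward = None
            invalidated.append(cur.node_id)
            cur = nxt
        self.head.forward = None
        return invalidated


memory = MemoryLine()
for node in (3, 7, 9):
    memory.attach(CacheLine(node))

print("sharing list (head to tail):", memory.sharers())
print("invalidated by the head:", memory.make_head_exclusive())
print("sharing list afterwards:", memory.sharers())
```

Because the directory state is spread across the caches themselves, memory stores one pointer per line regardless of how many nodes share it; the cost is that invalidation walks the list one entry at a time.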

The following figure shows the steps involved in acquiring exclusive access to a line, specifically a line previously in exclusive mode:

References

[1] Ulrich Stern and David L. Dill. Automatic Verification of the SCI Cache Coherence Protocol. Department of Computer Science, Stanford University, Stanford.

See Also

[1] http://ntrg.cs.tcd.ie/undergrad/4ba2.05/group12/index.html

[2] http://www.csl.cornell.edu/~heinrich/dissertation/ChapterTwo.pdf

[3] Simoni, Richard. Implementing a Directory-Based Cache Consistency Protocol. Technical Report: CSL-TR-90-423 March 1990.

[4] D. B. Gustavson. The Scalable Coherent Interface and related standards projects. IEEE Micro, 12(1):10-22, 1992.

[5] IEEE Std 1596-1992, IEEE Standard for Scalable Coherent Interface (SCI).

[6] D. V. James, A. T. Laundrie, S. Gjessing, and G. S. Sohi. Distributed-directory scheme: Scalable Coherent Interface. Computer, 23(6):74-77, 1990.