CSC/ECE 506 Spring 2010/ch 12 PP

Interconnection Networks

Choosing the “Best” Network

No single network works best for all applications; the right choice depends on the given application and the parallel system at hand. The following are some of the factors that affect the choice of interconnection network.

1. Performance Requirements: Minimize message latency, keep the network from saturating (becoming unable to deliver the messages injected by nodes), and increase the throughput of the network.

2. Scalability: As more processors are added, I/O bandwidth, memory bandwidth, and network bandwidth should increase proportionally.

3. Incremental expandability: The network should allow a small number of nodes to be added while minimizing resource wastage. For example, a network that requires the number of processors to be a power of 4 is difficult to expand.

4. Partitionability: May be required for security reasons. If the network can be partitioned into smaller systems, traffic produced by one user will not affect the others.

5. Simplicity: Simple designs lead to higher clock frequencies and better performance.

6. Distance Span: Maximum distance between nodes should be small.

7. Physical Constraints: The complexity of the connection is limited by the maximum wire density possible and by the maximum pin count. Factors like packaging, wiring, and operating temperature should be taken into account, since they impose many limitations on designs.

8. Reliability and Reparability: The network should allow easy upgrades and repairs, and should minimize faults or detect and correct them.

9. Expected Workloads: If the kind of application is known in advance, the network can be optimized for it. If not, the network should be robust: design parameters should be selected to perform well over a wide range of traffic conditions.

10. Cost Constraints: The “best” network might be too expensive. Alternative design considerations are important to meet cost constraints.

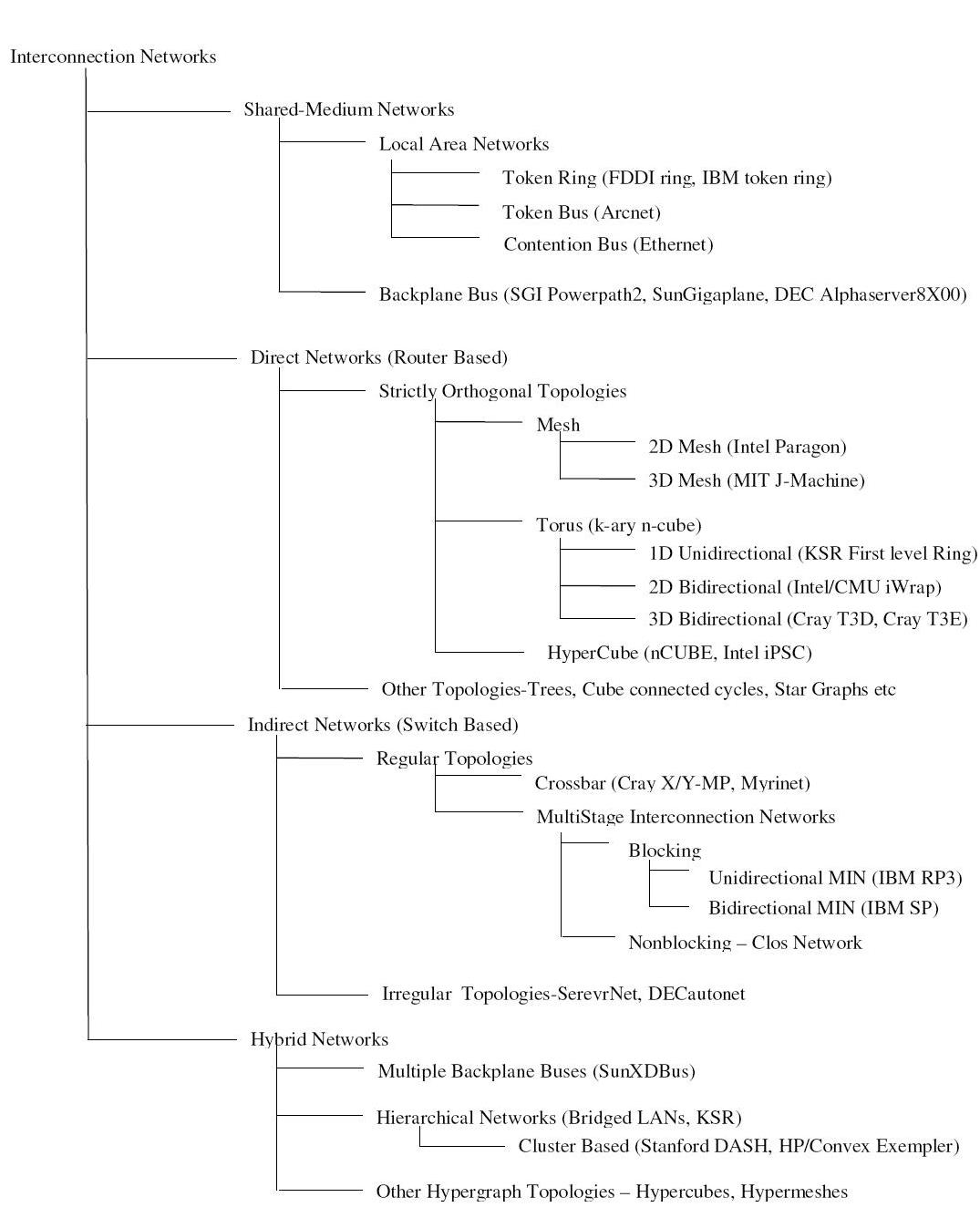

Classification of Interconnection networks

Figure 1 classifies interconnection networks based on network topology. For each class, the figure shows a hierarchy of subclasses, with some real implementations in parentheses. In shared-medium networks, the transmission medium is shared by all devices. Direct networks have point-to-point links directly connecting each communicating device to a subset of the other devices in the network. Indirect networks connect devices using one or more switches. Hybrid networks combine the above approaches.

Token Ring

Implementation examples:

- IBM token ring: Supports bandwidth of 4 or 16 Mbps based on coaxial cable

- FDDI: Bandwidth of 100 Mbps using fiber optics

Token Bus

Nodes are physically connected as a bus but logically form a ring. A token is passed around the nodes to determine whose turn it is to send, and only the node possessing the token may transmit. If a node has nothing to send, it passes the token on to the next node on the virtual ring.
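The token-passing rule described above can be sketched as a small simulation. This is only an illustration: the function name `token_bus_rounds` and the "one frame per token hold" policy are assumptions made for the example, not details of ARCNET or any other real protocol.

```python
from collections import deque

def token_bus_rounds(queues, start=0, rounds=1):
    """Simulate token passing on a logical ring (hypothetical sketch).

    queues: one deque of pending frames per node.
    Returns the (node, frame) pairs in the order they were transmitted.
    """
    sent = []
    n = len(queues)
    holder = start
    for _ in range(rounds * n):
        # Only the token holder may transmit; assumed limit of one frame per hold.
        if queues[holder]:
            sent.append((holder, queues[holder].popleft()))
        # Pass the token to the next node on the virtual ring.
        holder = (holder + 1) % n
    return sent

queues = [deque(["a1", "a2"]), deque(), deque(["c1"])]
order = token_bus_rounds(queues, rounds=2)
print(order)  # [(0, 'a1'), (2, 'c1'), (0, 'a2')]
```

Node 1 has nothing queued, so it simply forwards the token, and node 0 has to wait for the token to come around again before sending its second frame.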

Implementation examples:

- ARCNET: Attached Resource Computer NETwork

Backplane Bus

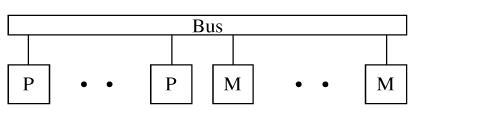

The backplane bus is the simplest interconnection structure for bus-based parallel computers. It is commonly used to connect processors and memory modules to form a UMA architecture. Information can be transferred using several techniques, such as synchronous, asynchronous, multiplexed, and non-multiplexed.

Implementation examples:

- Gigaplane used in Sun Ultra Enterprise X000 Server (ca. 1996): 2.68 Gbyte/s peak, 256 bits data, 42 bits address, split transaction protocol, 83.8 MHz clock

- DEC AlphaServer 8X00, i.e. 8200 & 8400 (ca. 1995): 2.1 Gbyte/s, 256 bits data, 40 bits address, split transaction protocol, 100 MHz clock (1 foot length)

- SGI PowerPath-2 (ca. 1993): 1.2 Gbyte/s, 256 bits data, 40 bits address, 6 bits control, split transaction protocol, 47.5 MHz clock

- HP9000 Multiprocessor Memory Bus (ca. 1993): 1 Gbyte/s, 128 bits data, 64 bits address, 13 inches, pipelined bus, 60 MHz clock
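As a rough sanity check on the figures above, the data width multiplied by the clock rate gives an upper bound on bus bandwidth. The sketch below assumes one full-width data transfer per clock cycle and 1 Gbyte = 10^9 bytes; the helper name `raw_bus_bandwidth_gbytes` is made up for the example.

```python
def raw_bus_bandwidth_gbytes(data_bits, clock_mhz):
    """Upper bound in Gbyte/s, assuming one full-width transfer per clock."""
    return data_bits / 8 * clock_mhz * 1e6 / 1e9

# (data width in bits, clock in MHz, bandwidth quoted in the list above)
buses = {
    "Gigaplane": (256, 83.8, 2.68),
    "AlphaServer 8X00": (256, 100.0, 2.1),
    "PowerPath-2": (256, 47.5, 1.2),
    "HP9000 memory bus": (128, 60.0, 1.0),
}

for name, (bits, mhz, quoted) in buses.items():
    raw = raw_bus_bandwidth_gbytes(bits, mhz)
    print(f"{name}: raw {raw:.2f} Gbyte/s, quoted {quoted} Gbyte/s")
```

Under these assumptions the Gigaplane and HP9000 figures come out close to the quoted values (about 2.68 and 0.96 Gbyte/s), while the AlphaServer and PowerPath-2 raw products (3.2 and 1.52 Gbyte/s) are higher than the quoted rates. The list does not say why; one possible explanation is that not every bus cycle carries data.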

Direct Networks

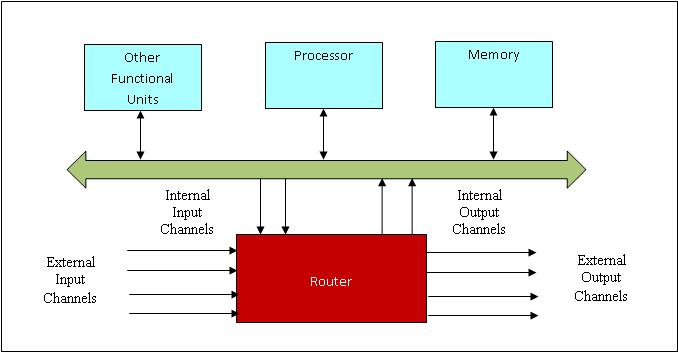

Bus-based systems are not scalable, since the bus becomes a bottleneck as more processors are added. A direct network, or point-to-point network, scales well to a large number of processors. A direct network consists of a set of nodes, each directly connected to a subset of the other nodes. Figure__ shows a generic node.

Each router is connected to its neighbors' routers through unidirectional or bidirectional channels and handles message communication among nodes. Internal channels connect the local memory/processor to the router. External channels are used for communication between routers. A direct network is formed by connecting the input channels of one node to the output channels of other nodes. Every input channel is paired with a corresponding output channel, and there are many ways to interconnect the nodes such that every node in the network can reach every other node.

A network topology is orthogonal if and only if its nodes can be arranged in an orthogonal n-dimensional space and every link can be arranged so that it produces a displacement in a single dimension.

Strictly Orthogonal Topologies

In a strictly orthogonal topology, every node has at least one link crossing each dimension.
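The two definitions can be checked mechanically on a small example. The sketch below represents nodes as coordinate tuples and links as pairs of nodes; the function names and the 3 x 3 mesh used as test input are assumptions chosen for illustration.

```python
from itertools import product

def mesh_links(dims):
    """Yield the links of an n-dimensional mesh as pairs of coordinate tuples."""
    for node in product(*(range(k) for k in dims)):
        for d, k in enumerate(dims):
            if node[d] + 1 < k:
                yield node, node[:d] + (node[d] + 1,) + node[d + 1:]

def is_orthogonal(links):
    """True if every link changes the coordinate in exactly one dimension."""
    return all(sum(a != b for a, b in zip(u, v)) == 1 for u, v in links)

def is_strictly_orthogonal(dims, links):
    """True if links are orthogonal and every node has a link in every dimension."""
    if not is_orthogonal(links):
        return False
    seen = {}
    for u, v in links:
        # Index of the single dimension in which this link produces a displacement.
        d = next(i for i, (a, b) in enumerate(zip(u, v)) if a != b)
        seen.setdefault(u, set()).add(d)
        seen.setdefault(v, set()).add(d)
    all_dims = set(range(len(dims)))
    return all(seen.get(n, set()) == all_dims
               for n in product(*(range(k) for k in dims)))

links = list(mesh_links((3, 3)))
print(is_orthogonal(links), is_strictly_orthogonal((3, 3), links))  # True True

# Removing the only dimension-1 link of node (0, 0) keeps the topology
# orthogonal, but it is no longer strictly orthogonal.
weak = [l for l in links if l != ((0, 0), (0, 1))]
print(is_orthogonal(weak), is_strictly_orthogonal((3, 3), weak))  # True False
```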

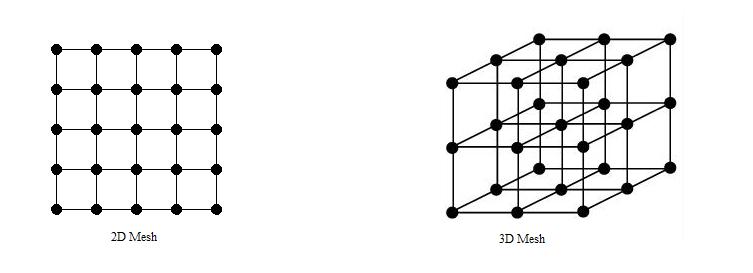

Mesh

Implementation Examples:

- Intel Paragon: 16 rows and 16 columns of nodes connected by a 2-D mesh, 130 Mbytes/s for 1 MB messages, with a latency of around 100 µs.

- MIT Reliable Router: 2-D mesh, 23-bit links (16-bit data), 200 MHz, 400 Mbytes/s per link per direction, bidirectional signaling, reliable transmission.

- MIT M-Machine: 3-D mesh, 800 Mbytes/s for each network channel.
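To relate the mesh to the distance-span criterion, the following sketch computes neighbors, hop distance, and diameter for a mesh whose nodes are coordinate tuples. It assumes shortest-path routing and uses the 16 x 16 Paragon configuration listed above as input; the function names are illustrative only.

```python
def mesh_neighbors(node, dims):
    """Neighbors of a node in an n-dimensional mesh (no wraparound links)."""
    out = []
    for d, k in enumerate(dims):
        for step in (-1, 1):
            c = node[d] + step
            if 0 <= c < k:  # edge nodes have fewer neighbors
                out.append(node[:d] + (c,) + node[d + 1:])
    return out

def mesh_distance(a, b):
    """Minimum hop count between two nodes: the Manhattan distance."""
    return sum(abs(x - y) for x, y in zip(a, b))

def mesh_diameter(dims):
    """Largest minimum hop count, reached between opposite corners."""
    return sum(k - 1 for k in dims)

paragon = (16, 16)
print(mesh_neighbors((0, 0), paragon))    # [(1, 0), (0, 1)]
print(mesh_distance((0, 0), (15, 15)))    # 30
print(mesh_diameter(paragon))             # 30
```

A corner node has only two neighbors, while an interior node of the 2-D mesh has four.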

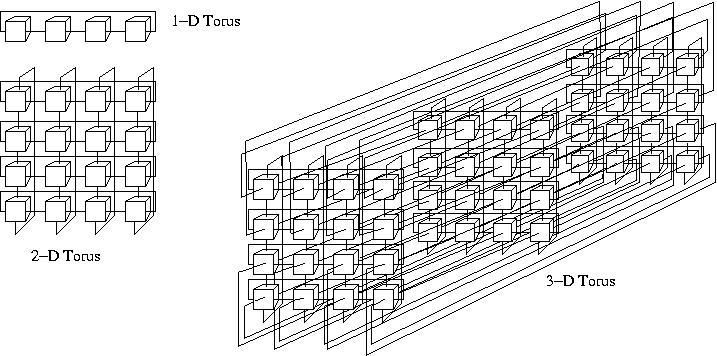

Torus

Implementation Examples:

- Chaos Router: 2-D torus topology, bidirectional 8-bit links, 180 MHz, 260 Mbytes/s in each direction.

- Cray T3E: Bidirectional 3-D torus, 14-bit data in each direction, 375 MHz link speed, 600 Mbytes/s in each direction.

- Cray T3D: Bidirectional 3-D torus, up to 1024 nodes (8 x 16 x 8), 24-bit links (16-bit data, 8-bit control), 150 MHz, 300 Mbytes/s per link.
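The torus differs from the mesh by its wraparound links. The sketch below uses the Cray T3D dimensions listed above (8 x 16 x 8) to check the node count and to show neighbor and distance calculations; it assumes shortest-path routing, and the function names are made up for the example. The last line also checks the T3D link rate, assuming one 16-bit data transfer per clock.

```python
from math import prod

def torus_neighbors(node, dims):
    """Neighbors of a node in an n-dimensional torus (links wrap around)."""
    out = []
    for d, k in enumerate(dims):
        for step in (-1, 1):
            out.append(node[:d] + ((node[d] + step) % k,) + node[d + 1:])
    return out

def torus_distance(a, b, dims):
    """Minimum hop count: per dimension, take the shorter way around the ring."""
    return sum(min((x - y) % k, (y - x) % k) for x, y, k in zip(a, b, dims))

def torus_diameter(dims):
    """Largest minimum hop count: half of each ring, summed over dimensions."""
    return sum(k // 2 for k in dims)

t3d = (8, 16, 8)
print(prod(t3d))                               # 1024 nodes
print(torus_neighbors((0, 0, 0), t3d))
# [(7, 0, 0), (1, 0, 0), (0, 15, 0), (0, 1, 0), (0, 0, 7), (0, 0, 1)]
print(torus_distance((0, 0, 0), (7, 15, 7), t3d))  # 3, via the wraparound links
print(torus_diameter(t3d))                     # 16
print(16 / 8 * 150)                            # 300.0 Mbytes/s per link
```

For comparison, a mesh with the same dimensions would have a diameter of 7 + 15 + 7 = 29 hops.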

Hypercube

Implementation Examples:

- Intel iPSC-2 Hypercube: Binary hypercube topology, bit-serial channels at 2.8 Mbytes/s.
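In a binary hypercube, each node can be labeled with an n-bit number, and two nodes are linked when their labels differ in exactly one bit. The sketch below illustrates this labeling; the function names are assumptions for the example, and it does not model the iPSC-2 hardware.

```python
def hypercube_neighbors(node, n):
    """Neighbors of a node in a binary n-cube: flip one bit per dimension."""
    return [node ^ (1 << d) for d in range(n)]

def hypercube_distance(a, b):
    """Minimum hop count: the number of differing bits (Hamming distance)."""
    return bin(a ^ b).count("1")

n = 4  # 4-dimensional hypercube with 2**4 = 16 nodes
print(hypercube_neighbors(0b0101, n))      # [4, 7, 1, 13]
print(hypercube_distance(0b0000, 0b1111))  # 4, which is also the diameter
```

Every node has exactly n neighbors, one per dimension, and the diameter of the n-cube is n hops.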

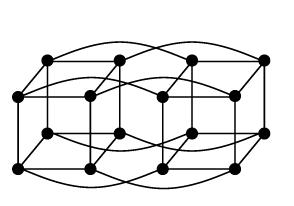

Other Topologies

In a weakly orthogonal topology, some nodes may not have any link in some dimensions.