CSC/ECE 517 Spring 2019 - Project M1901 Implement missing WebAudio automation support

Introduction

Servo

Servo is a developmental browser engine designed to make use of the memory safety properties and concurrency features of the Rust programming language. The project was initiated by Mozilla Research, with effort from Samsung to port it to Android and ARM processors.

More information about Servo is available here.

Rust

Rust is a systems programming language that focuses on memory safety and concurrency. It is similar to C++, but it guarantees memory safety while still providing high performance. Rust is a brainchild of Mozilla, and a complete program can be as simple as this:

```rust
fn main() {
    println!("Hello World");
}
```

More information about Rust can be found here, and here is why we love Rust (SF Loved).

Web Audio API

The Web Audio API handles audio operations inside an audio context and has been designed to allow modular routing. Basic audio operations are performed with audio nodes, which are linked together to form an audio routing graph. Several sources, with different types of channel layout, are supported even within a single context. This modular design provides the flexibility to create complex audio functions with dynamic effects.

Audio nodes are linked into chains and simple webs by their inputs and outputs. They typically start with one or more sources. Sources provide arrays of sound intensities (samples) at very small timeslices, often tens of thousands of them per second. These can either be computed mathematically (such as OscillatorNode) or be recordings from sound/video files (like AudioBufferSourceNode and MediaElementAudioSourceNode) and audio streams (MediaStreamAudioSourceNode). In fact, sound files are themselves just recordings of sound intensities, which come in from microphones or electric instruments and get mixed down into a single, complicated wave.
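As an illustration of a source whose samples are "computed mathematically", the sketch below generates sine-wave samples the way an oscillator conceptually does. The function name `sine_samples` is hypothetical and not part of servo-media; a real OscillatorNode also keeps its phase continuous across rendering blocks and supports other waveforms.

```rust
use std::f32::consts::PI;

/// Hypothetical helper: computes `len` samples of a sine wave at `frequency` Hz,
/// sampled `sample_rate` times per second, the way an oscillator-style source
/// computes its output instead of reading a recording.
fn sine_samples(frequency: f32, sample_rate: f32, len: usize) -> Vec<f32> {
    (0..len)
        .map(|i| {
            // Time of sample i, in seconds
            let t = i as f32 / sample_rate;
            (2.0 * PI * frequency * t).sin()
        })
        .collect()
}
```

For example, `sine_samples(440.0, 44100.0, 44100)` yields one second of an A4 tone, with every sample in the range -1.0 to 1.0.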

Outputs of these nodes can be linked to inputs of others, which mix or modify these streams of sound samples into different streams. A common modification is multiplying the samples by a value to make them louder or quieter (as is the case with GainNode). Once the sound has been sufficiently processed for the intended effect, it can be linked to the input of a destination (AudioContext.destination), which sends the sound to the speakers or headphones. This last connection is only necessary if the user is supposed to hear the audio.
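The two operations described above, modifying a stream by multiplying it and mixing two streams, can be sketched on plain sample buffers. Both functions are hypothetical illustrations of the idea, not servo-media code; a real GainNode also supports an automated (time-varying) gain.

```rust
/// Hypothetical helper: multiplies every sample by `gain`, conceptually what a
/// GainNode does (gain > 1.0 is louder, gain < 1.0 is quieter).
fn apply_gain(block: &mut [f32], gain: f32) {
    for sample in block.iter_mut() {
        *sample *= gain;
    }
}

/// Hypothetical helper: mixes two streams by adding them sample by sample.
/// The result is as long as the shorter input.
fn mix(a: &[f32], b: &[f32]) -> Vec<f32> {
    a.iter().zip(b).map(|(x, y)| x + y).collect()
}
```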

A simple, typical workflow for web audio would look something like this:

1. Create an audio context

2. Inside the context, create sources, such as <audio>, an oscillator, or a stream

3. Create effects nodes, such as reverb, biquad filter, panner, or compressor

4. Choose the final destination of the audio, for example your system speakers

5. Connect the sources to the effects, and the effects to the destination.
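The workflow above can be sketched as a toy "context" that owns nodes and pulls a block of samples through them. Everything here (`Node`, `Constant`, `Gain`, `Context`, `connect`, `render`) is a hypothetical model, restricted to a linear chain for brevity; it is not the servo-media or Web Audio API, whose graphs can fan in and fan out.

```rust
/// Hypothetical node interface: turns an input block into an output block.
trait Node {
    fn process(&mut self, input: &[f32]) -> Vec<f32>;
}

/// Source: ignores its input and emits a constant signal.
struct Constant {
    offset: f32,
}

impl Node for Constant {
    fn process(&mut self, input: &[f32]) -> Vec<f32> {
        vec![self.offset; input.len()]
    }
}

/// Effect: scales its input, like a GainNode.
struct Gain {
    gain: f32,
}

impl Node for Gain {
    fn process(&mut self, input: &[f32]) -> Vec<f32> {
        input.iter().map(|s| s * self.gain).collect()
    }
}

/// Step 1: the context. The output of the last node in the chain is what
/// would be handed to the destination (the speakers).
struct Context {
    chain: Vec<Box<dyn Node>>,
}

impl Context {
    fn new() -> Self {
        Context { chain: Vec::new() }
    }

    /// Step 5: connects `node` after the current last node.
    fn connect(&mut self, node: Box<dyn Node>) {
        self.chain.push(node);
    }

    /// Renders one block of `frames` samples through the whole chain.
    fn render(&mut self, frames: usize) -> Vec<f32> {
        let mut block = vec![0.0_f32; frames];
        for node in self.chain.iter_mut() {
            block = node.process(&block);
        }
        block
    }
}
```

Connecting a `Constant { offset: 0.5 }` source and then a `Gain { gain: 0.5 }` effect and rendering four frames produces four samples of 0.25.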

Web Audio Automation

An AudioParam controls an aspect of an AudioNode's behavior, such as volume (the gain parameter of a GainNode). A parameter's value can be set immediately, for example in response to an event, or changes can be scheduled to happen at precise times. Scheduling changes relative to AudioContext.currentTime makes volume fades, filter sweeps, and similar effects possible. This work aims to implement setValueCurveAtTime().
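To make "scheduled to be changed at precise times" concrete, here is a minimal sketch of a parameter timeline that only supports setValueAtTime-style jumps. The `Param` type and its methods are hypothetical and far simpler than a real AudioParam, which also supports ramps, targets, and curves.

```rust
/// Hypothetical, simplified AudioParam supporting only step changes.
/// Times are in seconds.
struct Param {
    default_value: f32,
    // (time, value) pairs, kept sorted by time
    events: Vec<(f64, f32)>,
}

impl Param {
    fn new(default_value: f32) -> Self {
        Param { default_value, events: Vec::new() }
    }

    /// Schedules the value to jump to `value` at `time`.
    fn set_value_at_time(&mut self, value: f32, time: f64) {
        // Insert after every event at or before `time` to keep the list sorted
        let pos = self.events.partition_point(|&(t, _)| t <= time);
        self.events.insert(pos, (time, value));
    }

    /// Value at `time`: that of the latest event at or before `time`,
    /// or the default value if no event has happened yet.
    fn value_at(&self, time: f64) -> f32 {
        self.events
            .iter()
            .rev()
            .find(|&&(t, _)| t <= time)
            .map(|&(_, v)| v)
            .unwrap_or(self.default_value)
    }
}
```

Scheduling 0.5 at 1 s and 0.0 at 2 s on a gain that starts at 1.0 gives a stepped fade-out; producing a smooth fade is exactly what ramps and value curves add on top of this.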

setValueCurveAtTime() is one such AudioParam method: it takes an array of floating-point values and schedules the parameter to follow the curve they describe for a duration d, starting at startTime s. Values between the points of the array are obtained by linear interpolation.

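With N the length of the values array V, for s ≤ t < s + d:

$$k = \left\lfloor \frac{N-1}{d}\,(t - s) \right\rfloor, \qquad T_k = s + k\,\frac{d}{N-1}$$

$$v(t) = V[k] + \big(V[k+1] - V[k]\big)\,\frac{t - T_k}{T_{k+1} - T_k}$$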

After the end of the curve's time interval (t ≥ s + d), the value remains constant at the final curve value, V[N-1], until the next automation event.
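The formula above, including the hold after the curve ends, can be written as a small function. This is a sketch in seconds rather than in servo-media's internal time units, and `curve_value` is a hypothetical name; returning `None` before the curve starts is a design choice standing in for "whatever earlier events produced".

```rust
/// Hypothetical helper: evaluates a setValueCurveAtTime curve at time `t`.
/// `values` is V, `s` the start time, `d` the duration (seconds).
/// Returns None before the curve starts.
fn curve_value(values: &[f32], s: f64, d: f64, t: f64) -> Option<f32> {
    let n = values.len();
    assert!(n >= 2 && d > 0.0, "curve needs at least two values and d > 0");
    if t < s {
        return None;
    }
    if t >= s + d {
        // After the curve ends, hold the final value V[N-1]
        return Some(values[n - 1]);
    }
    // Time between consecutive curve points, d / (N - 1)
    let step = d / (n - 1) as f64;
    // k = floor((N - 1) / d * (t - s)), clamped so that k + 1 is a valid index
    let k = (((t - s) / step).floor() as usize).min(n - 2);
    let t_k = s + k as f64 * step;
    // Fraction of the way from T_k to T_{k+1}
    let frac = ((t - t_k) / step) as f32;
    Some(values[k] + (values[k + 1] - values[k]) * frac)
}
```

For V = [0, 1, 0] starting at s = 1 with d = 2, the value rises linearly from 0 at t = 1 to 1 at t = 2, falls back to 0.5 at t = 2.5, and stays at 0 from t = 3 onward.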

One application of setValueCurveAtTime() is creating a tremolo effect. When neither a linear nor an exponential ramp fits the need, the user can describe an arbitrary curve as an array of values and pass it to setValueCurveAtTime(). This is a much-loved approach compared with making many separate calls to setValueAtTime().
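A tremolo curve is just an array of gain values that rises and falls periodically. The sketch below builds one with a raised cosine; `tremolo_curve` and its parameters are hypothetical, and the cosine shape is only one reasonable choice (a triangle or square shape would also work).

```rust
use std::f32::consts::PI;

/// Hypothetical helper: builds `len` gain values that dip `cycles` times
/// from 1.0 down to 1.0 - depth and back, for use as a value curve.
fn tremolo_curve(len: usize, cycles: f32, depth: f32) -> Vec<f32> {
    assert!(len >= 2, "a value curve needs at least two points");
    (0..len)
        .map(|i| {
            // Position along the curve, from 0.0 to 1.0
            let x = i as f32 / (len - 1) as f32;
            // Raised cosine: 0.0 at the start of each cycle, 1.0 mid-cycle
            let wobble = 0.5 - 0.5 * (2.0 * PI * cycles * x).cos();
            1.0 - depth * wobble
        })
        .collect()
}
```

With the message style used in our test case, such a curve could be scheduled on a gain parameter as `UserAutomationEvent::SetValueCurveAtTime(tremolo_curve(64, 8.0, 0.5), start_time, end_time)`.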

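```rust
// Pseudocode sketch; assumes `duration` is a plain number of ticks
AutomationEvent::SetValueCurveAtTime(ref val, start_time, duration) => {
    // Time elapsed since the curve started (t - s)
    let progress = (current_tick - event_start_time).0 as f32;
    // k = floor((N - 1) / d * (t - s)); N - 1 is computed before dividing
    let k = ((val.len() - 1) as f32 / duration as f32 * progress).floor();
    // v[t] is computed by interpolating between V[k] and V[k+1]
}
```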

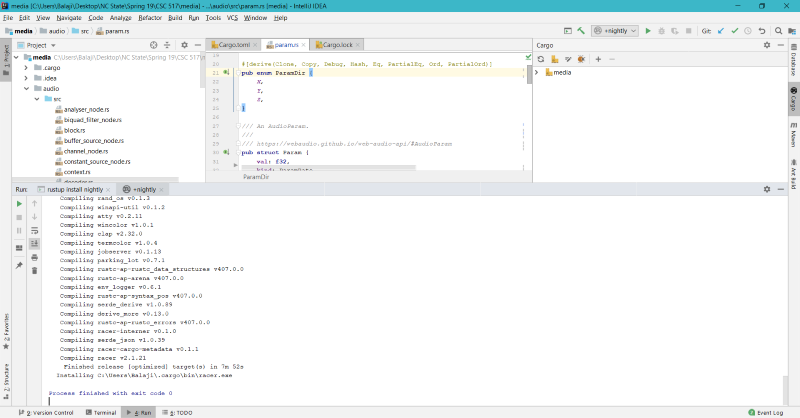

Build

Servo is built with Cargo, the Rust package manager. We also use Mozilla's Mach tools to orchestrate the build and other tasks.

Implementation of the Project

Code

The implementation can be found here. The snippet below is the core of the SetValueCurveAtTime handling; the values and the function's behavior are defined in the param.rs file.

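```rust
AutomationEvent::SetValueCurveAtTime(ref values, start, duration) => {
    // Length of the curve: `duration` is used here as the curve's end,
    // so this is end minus start
    let time_diff = ((duration.0 as f32) - (start.0 as f32)) as f32;
    // Fraction of the curve elapsed at the current tick
    let mut progress = ((((current_tick.0 as f32) - (start.0 as f32)) as f32) / time_diff) as f32;
    if progress < 0.0 {
        progress = 0.0 as f32;
    }
    let n = values.len() as f32;
    // Index of the curve point at or before the current tick
    let k = (((n - 1.) * progress) as f32).floor();
    let next = k + 1. as f32;
    // Distance between consecutive curve points
    let step = time_diff / (n - 1.);
    // Only interpolate while the current tick is inside the curve
    if next < n {
        // Point times are measured from tick 0, so this matches the
        // specification's T_k only when start is 0
        let time_k = (k * step) as f32;
        let time_k_next = (next * step) as f32;
        let v_k = values[k as usize];
        let v_k_next = values[next as usize];
        // Linear interpolation between V[k] and V[k+1]
        *value = v_k + (v_k_next - v_k) * (((current_tick.0 as f32) - time_k) / (time_k_next - time_k));
    }
    true
}

AutomationEvent::CancelAndHoldAtTime(..) => false,

AutomationEvent::CancelScheduledValues(..) | AutomationEvent::SetValue(..) => {
    unreachable!("CancelScheduledValues/SetValue should never appear in the timeline")
}
```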

Let's walk through the code.

AutomationEvent::SetValueCurveAtTime is not a function but an enum variant, matched here in a match arm; it carries three fields: values, start, and duration.

progress is the time elapsed since start (current_tick minus start) divided by time_diff, that is, the fraction of the curve played so far. Every operand is explicitly converted to f32.

```rust
let time_diff = ((duration.0 as f32) - (start.0 as f32)) as f32;
let mut progress = ((((current_tick.0 as f32) - (start.0 as f32)) as f32) / time_diff) as f32;
```

time_diff is the total length of the curve, computed as duration minus start, so the third field is effectively used as the curve's end time. Normalizing by it makes progress run from 0 at the start of the curve to 1 at its end; beyond that point the curve is finished and the value should stay constant.

```rust
if progress < 0.0 {
    progress = 0.0 as f32;
}
```

If the current tick is still before start, progress would be negative, so it is clamped to 0.

```rust
let n = values.len() as f32;
let k = (((n - 1.) * progress) as f32).floor();
let next = k + 1. as f32;
let step = time_diff / (n - 1.);
```

n is the length of the values array. k is the index of the curve point at or just before the current time, computed as in the specification here, and next is k + 1. step is the distance between two consecutive curve points, time_diff / (n - 1). Times in this code are measured in ticks (sample frames), so, for example, at a 44.1 kHz sample rate a 5-second curve spans 220500 ticks, and with 20 values each step is 220500 / 19 ≈ 11605 ticks.

```rust
if next < n {
    let time_k = (k * step) as f32;
    let time_k_next = (next * step) as f32;
    let v_k = values[k as usize];
    let v_k_next = values[next as usize];
    *value = v_k + (v_k_next - v_k) * (((current_tick.0 as f32) - time_k) / (time_k_next - time_k));
}
```

While next is still a valid index (next < n), this block computes the times time_k and time_k_next of the two curve points surrounding the current tick and linearly interpolates between v_k and v_k_next. Once the current tick passes the last curve point, next reaches n, the block is skipped, and the parameter keeps its last computed value.
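The same tick-based arithmetic can be packaged as a standalone function for experimenting. This is a hypothetical sketch, not the servo-media code: `curve_value_at_tick` and `seconds_to_ticks` are illustrative names, plain `u64` stands in for servo-media's tick type, and, unlike the snippet above, point times are measured from `start`. With the snippet's formula, start = 100, end = 300, V = [0, 1, 0] and tick 250 would give a fraction of (250 - 100) / 100 = 1.5 and a value of -0.5, whereas measuring from start gives 0.5.

```rust
/// Hypothetical helper: interpolated curve value at `tick`, where the curve
/// runs from tick `start` (inclusive) to tick `end` (exclusive).
/// Returns None outside the curve, where the caller keeps its current value.
fn curve_value_at_tick(values: &[f32], start: u64, end: u64, tick: u64) -> Option<f32> {
    let n = values.len();
    if n < 2 || end <= start || tick < start || tick >= end {
        return None;
    }
    // Length of the curve in ticks (time_diff in the snippet)
    let time_diff = (end - start) as f64;
    // Ticks between consecutive curve points (step in the snippet)
    let step = time_diff / (n - 1) as f64;
    let elapsed = (tick - start) as f64;
    // Index of the point at or before `tick`, clamped so k + 1 is valid
    let k = ((elapsed / step).floor() as usize).min(n - 2);
    // time_k is measured from `start`, not from tick 0
    let time_k = k as f64 * step;
    let frac = ((elapsed - time_k) / step) as f32;
    Some(values[k] + (values[k + 1] - values[k]) * frac)
}

/// Hypothetical helper: converts seconds to ticks (sample frames), rounding down.
fn seconds_to_ticks(seconds: f64, sample_rate: f64) -> u64 {
    (seconds * sample_rate) as u64
}
```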

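```rust
true
```

The arm evaluates to true, which reports back to the calling code (see here) that this event updated the parameter on the current tick.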

```rust
AutomationEvent::CancelAndHoldAtTime(..) => false,

AutomationEvent::CancelScheduledValues(..) | AutomationEvent::SetValue(..) => {
    unreachable!("CancelScheduledValues/SetValue should never appear in the timeline")
}
```

The remaining arms cover the other event types. CancelAndHoldAtTime makes no change to the value here, so it returns false. CancelScheduledValues and SetValue are never supposed to be stored in the timeline, so reaching them in this match would indicate a bug, and unreachable!() panics in that case.

Examples

Cargo supports standalone example programs alongside a crate's code. Our example makes use of the function we just wrote and generates sound output; see our example for SetValueCurveAtTime() here.

An example is usually built with cargo build and run with cargo run. Cargo.toml determines the example's name and the source file it is built from.

```sh
cargo run --example set_value_curve
```

The command above, run from a terminal, starts the set_value_curve example, which generates sound output.

Compile

Cargo.lock records the exact versions of the dependencies required to build this project.

The top-level Cargo.toml lists all the folders (workspace members) to be compiled. Compilation is usually run from /media.

Cargo downloads and builds a number of packages, called crates, that the project uses to access their modules, and Cargo.lock pins their versions. Each member listed in the top-level Cargo.toml has its own Cargo.toml that declares its dependencies for the build. build-dependencies are used for dependencies that are needed before the main build, for example by a build script (build.rs).

.cargo/config holds Cargo configuration for the project, somewhat like a dotenv file for Rust.

Test

If you would like to test our build, please read Issues and Fixes before proceeding. We suggest macOS or Ubuntu 16.04 LTS for development and testing; a virtual machine such as VirtualBox is another option. We spent countless frustrating days fighting libraries and never-ending apt installs, so avoid Ubuntu 18.04 for this build. Only one of our team members, who used macOS, was able to build servo/media successfully most of the time; the other two, Windows cmd/msys2 fanatics, had a hard time with MSDN before they gave in to sudo.

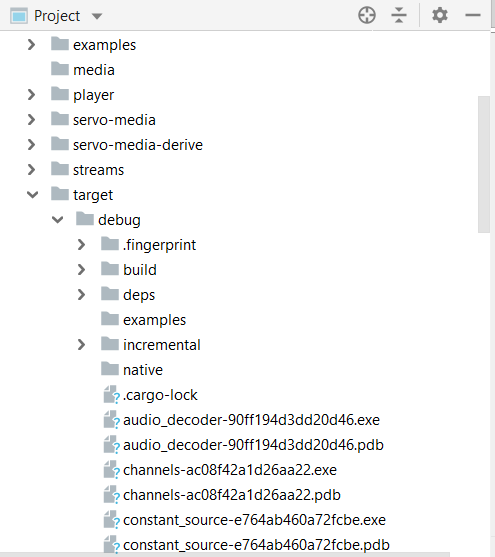

Once cargo build completes, open target/debug to see the generated example executables.

To run an example after the build is complete, use `cargo run --example <bin_name>`.

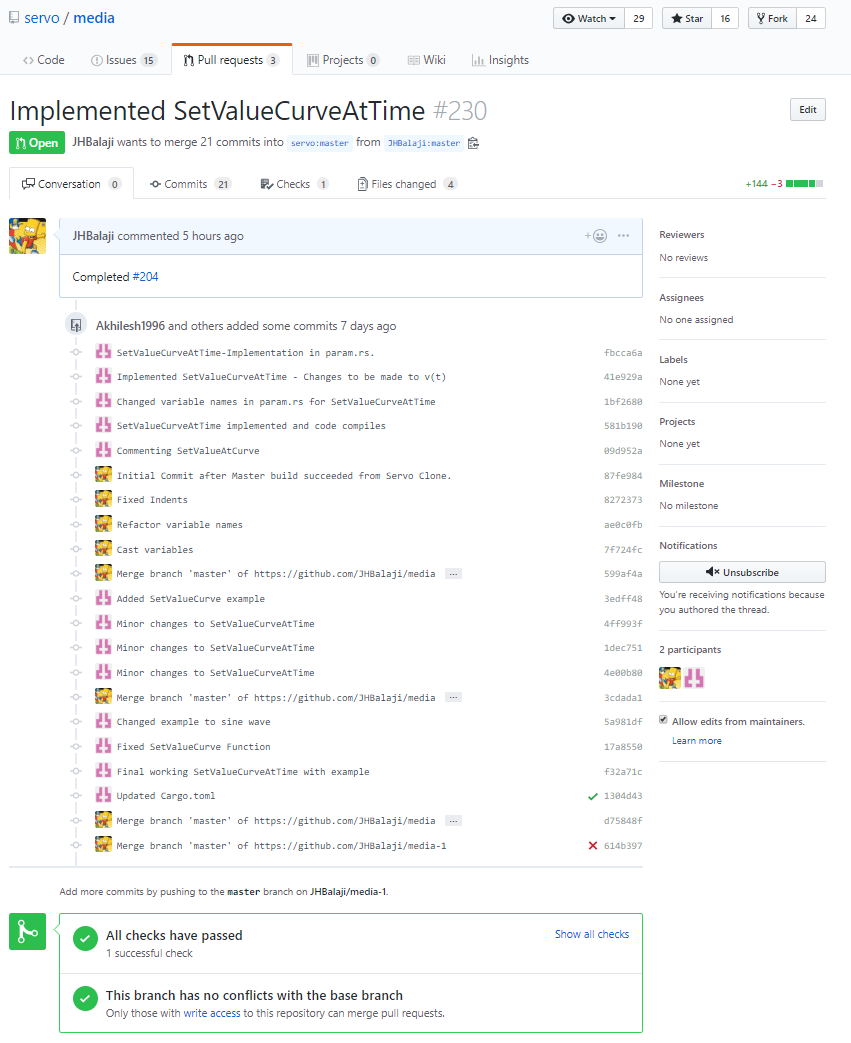

Status of the Project

The build is ready, but the pull request is still awaiting merge.

Normal build from Master Repo

To build Servo in development mode (useful for development, but the resulting binary is very slow):

```sh
git clone https://github.com/servo/servo
cd servo
./mach build --dev
./mach run tests/html/about-mozilla.html
```

For benchmarking, performance testing, or real-world use, add the --release flag to create an optimized build:

```sh
./mach build --release
./mach run --release tests/html/about-mozilla.html
```

Compiling and Running the code

Follow the instructions posted at this URL to install GStreamer and Rust. Then run the following commands to compile on macOS:

1. `brew install gstreamer gst-plugins-base gst-plugins-good gst-plugins-bad gst-plugins-ugly gst-libav gst-rtsp-server gst-editing-services`

2. `export PKG_CONFIG_PATH=/usr/local/Cellar/libffi/3.2.1/lib/pkgconfig/`

3. `rustup override set nightly`

After this, run the following command to compile servo/media:

```sh
cargo build
```

After compiling, you will be able to test our example, which is placed in the examples folder.

Servo Media

Requirements

In order to build this crate, you need to install GStreamer, which can be found here [1].

Test Case

After you run cargo build, you should see the example's executable inside the target/debug folder. Following is the test file we wrote to check that SetValueCurveAtTime works.

```rust
extern crate servo_media;
extern crate servo_media_auto;

use servo_media::audio::constant_source_node::ConstantSourceNodeOptions;
use servo_media::audio::gain_node::GainNodeOptions;
use servo_media::audio::node::{AudioNodeInit, AudioNodeMessage, AudioScheduledSourceNodeMessage};
use servo_media::audio::param::{ParamType, UserAutomationEvent};
use servo_media::ServoMedia;
use std::sync::Arc;
use std::{thread, time};

fn run_example(servo_media: Arc<ServoMedia>) {
    let context = servo_media.create_audio_context(Default::default());
    let dest = context.dest_node();

    // Curve values for SetValueCurveAtTime: off, full, off, half, off
    // (four points each)
    let values: Vec<f32> = vec![
        0.0, 0.0, 0.0, 0.0,
        1.0, 1.0, 1.0, 1.0,
        0.0, 0.0, 0.0, 0.0,
        0.5, 0.5, 0.5, 0.5,
        0.0, 0.0, 0.0, 0.0,
    ];
    let start_time = 0.;
    let end_time = 5.;
    let n = values.len() as f32;
    // Last value of the curve (0.0 here)
    let value_next = values[(n - 1.) as usize];

    // Constant source whose offset will follow the curve
    let cs = context.create_node(
        AudioNodeInit::ConstantSourceNode(ConstantSourceNodeOptions::default()),
        Default::default(),
    );

    // Gain node with an intrinsic gain of 0, so its gain is driven by cs
    let mut gain_options = GainNodeOptions::default();
    gain_options.gain = 0.0;
    let gain = context.create_node(
        AudioNodeInit::GainNode(gain_options.clone()),
        Default::default(),
    );

    let osc = context.create_node(
        AudioNodeInit::OscillatorNode(Default::default()),
        Default::default(),
    );

    // osc -> gain -> destination, with cs connected to the gain parameter
    context.connect_ports(osc.output(0), gain.input(0));
    context.connect_ports(cs.output(0), gain.param(ParamType::Gain));
    context.connect_ports(gain.output(0), dest.input(0));
    let _ = context.resume();

    context.message_node(
        osc,
        AudioNodeMessage::AudioScheduledSourceNode(AudioScheduledSourceNodeMessage::Start(0.)),
    );
    context.message_node(
        gain,
        AudioNodeMessage::AudioScheduledSourceNode(AudioScheduledSourceNodeMessage::Start(0.)),
    );
    context.message_node(
        cs,
        AudioNodeMessage::AudioScheduledSourceNode(AudioScheduledSourceNodeMessage::Start(0.)),
    );

    // Schedule the curve on the constant source's offset
    context.message_node(
        cs,
        AudioNodeMessage::SetParam(
            ParamType::Offset,
            UserAutomationEvent::SetValueCurveAtTime(values, start_time, end_time),
        ),
    );

    // At end_time, set the oscillator frequency to the curve's last value
    context.message_node(
        osc,
        AudioNodeMessage::SetParam(
            ParamType::Frequency,
            UserAutomationEvent::SetValueAtTime(value_next, end_time),
        ),
    );

    // Let the audio play for 7 seconds, then close the context
    thread::sleep(time::Duration::from_millis(7000));
    let _ = context.close();
}

fn main() {
    ServoMedia::init::<servo_media_auto::Backend>();
    if let Ok(servo_media) = ServoMedia::get() {
        run_example(servo_media);
    } else {
        unreachable!();
    }
}
```

<references></references>

Video of testing audio output

Issues and Fixes

Windows-specific issues during the build