CSC/ECE 517 Spring 2018 E1814 Write unit tests for collusion_cycle.rb

Introduction

Expertiza Background

Expertiza is an open-source web application maintained by students and faculty members of North Carolina State University. Through it, students can submit and peer-review learning objects (articles, code, web sites, etc.). More information is available at http://wiki.expertiza.ncsu.edu/index.php/Expertiza_documentation.

Project Description

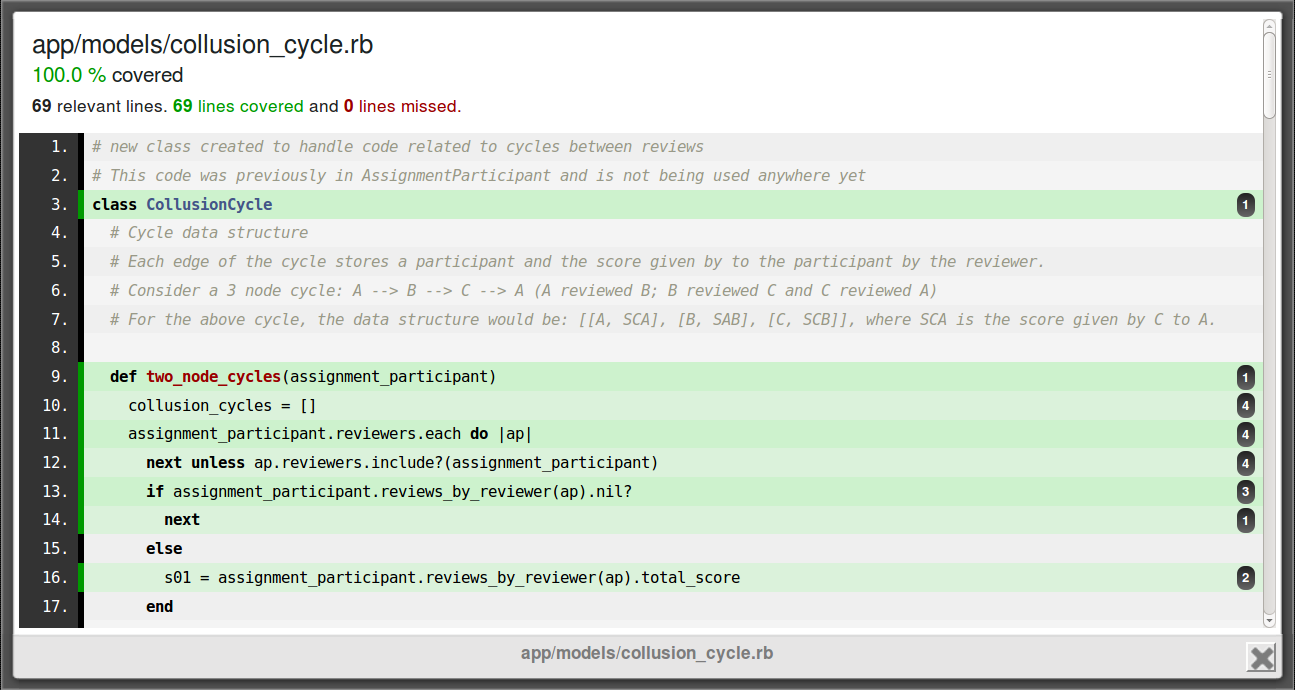

The file collusion_cycle.rb is part of the Expertiza project and is used to calculate potential collusion cycles between peer reviews. Our purpose is to write the corresponding unit tests (collusion_cycle_spec.rb) for it.

Project Details

Model introduction

Model purpose

The file collusion_cycle.rb in app/models is used to calculate potential collusion cycles between peer reviews. A collusion cycle arises when assignment participants are accidentally assigned to review each other's work, either directly or through a chain of reviews. For example, if participant A was reviewed by participant B, participant B was reviewed by participant C, participant C was reviewed by participant A, and all of these reviews were actually completed, then a three-node cycle exists. collusion_cycle.rb covers two-node, three-node and four-node cycles.
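To make the idea of a cycle concrete, here is a minimal sketch that finds three-node cycles in a hypothetical `reviewed_by` hash mapping each participant to the participants who completed a review of their work. The hash, the `three_node_cycles` method and the deduplication by rotation are illustrative assumptions, not the data model or algorithm used in collusion_cycle.rb.

```ruby
# Hypothetical data: each key was reviewed by the participants in its array.
# This is NOT Expertiza's data model, only an illustration of the cycle idea.
REVIEWED_BY = {
  "A" => ["B"], # A was reviewed by B
  "B" => ["C"], # B was reviewed by C
  "C" => ["A"]  # C was reviewed by A
}.freeze

# Returns every three-node cycle a <- b <- c <- a, each listed once.
def three_node_cycles(reviewed_by)
  cycles = []
  reviewed_by.each do |a, reviewers_of_a|
    reviewers_of_a.each do |b|
      reviewed_by.fetch(b, []).each do |c|
        next if c == a || c == b # need three distinct participants
        next unless reviewed_by.fetch(c, []).include?(a) # close the cycle
        cycle = [a, b, c]
        # Rotate so the smallest name comes first; rotations then compare equal.
        cycles << cycle.rotate(cycle.index(cycle.min))
      end
    end
  end
  cycles.uniq
end

p three_node_cycles(REVIEWED_BY) # prints [["A", "B", "C"]]
```

Each cycle is discovered once from each of its three members, so normalizing the rotation and calling `uniq` keeps a single copy.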

Functions introduction

4. cycle_similarity_score(cycle)

The function below calculates the similarity score of a cycle, which is the average absolute difference over every pair of scores in the cycle.

For example, given a cycle with data [[participant1, 100], [participant2, 95], [participant3, 85]] (where 100 is the score participant1 receives), the similarity score is (|100-95|+|100-85|+|95-85|)/3 = 10.0.

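```ruby
def cycle_similarity_score(cycle)
  similarity_score = 0.0
  count = 0.0
  # Compare each member with every member after it, so each pair is counted once.
  for pivot in 0...cycle.size - 1 do
    pivot_score = cycle[pivot][1]
    for other in pivot + 1...cycle.size do
      similarity_score += (pivot_score - cycle[other][1]).abs
      count += 1.0
    end
  end
  # Average over the number of pairs; a cycle with fewer than two members scores 0.0.
  similarity_score /= count unless count == 0.0
  similarity_score
end
```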
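The nested loops above visit every unordered pair of cycle members exactly once. As a sketch (not the project's code), the same calculation can be written with Ruby's `Array#combination`; `Participant` is a hypothetical Struct standing in for AssignmentParticipant, and `similarity_score` is an illustrative name.

```ruby
# Hypothetical stand-in for AssignmentParticipant; only used as a label here.
Participant = Struct.new(:name)

# Average absolute difference over every unordered pair of scores in the cycle.
def similarity_score(cycle)
  scores = cycle.map { |_participant, score| score }
  pairs = scores.combination(2).to_a
  return 0.0 if pairs.empty? # fewer than two members: no pairs to compare
  pairs.sum { |x, y| (x - y).abs }.fdiv(pairs.size)
end

p1, p2, p3 = Participant.new("p1"), Participant.new("p2"), Participant.new("p3")
puts similarity_score([[p1, 100], [p2, 95], [p3, 85]]) # prints 10.0
puts similarity_score([[p1, 100], [p2, 90]])           # prints 10.0
```

`combination(2)` makes the "each pair once" intent explicit, while the early return plays the same role as the `count == 0.0` guard in the original.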

5. cycle_deviation_score(cycle)

The function below calculates the deviation score of a cycle: for each participant, it takes the absolute difference between that participant's standard (overall) review score and the score the participant received within this cycle, then averages these differences over all participants in the cycle.

For example, given a cycle with data [[participant1, 95], [participant2, 90], [participant3, 91]] (where 95 is the score participant1 receives) and standard scores of 90, 80 and 91 respectively, the deviation score is (|95-90|+|90-80|+|91-91|)/3 = 5.0.

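```ruby
def cycle_deviation_score(cycle)
  deviation_score = 0.0
  count = 0.0
  for member in 0...cycle.size do
    # Look up the participant's standard (overall) review score.
    participant = AssignmentParticipant.find(cycle[member][0].id)
    total_score = participant.review_score
    # Distance between the standard score and the score received in this cycle.
    deviation_score += (total_score - cycle[member][1]).abs
    count += 1.0
  end
  # Average over the cycle's members; an empty cycle scores 0.0.
  deviation_score /= count unless count == 0.0
  deviation_score
end
```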
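Because cycle_deviation_score looks each participant up with AssignmentParticipant.find, it needs the database (or a stub) to run. The sketch below is a hypothetical Rails-free variant: `Participant`, `deviation_score` and the `find_participant` lambda are assumptions standing in for the model and the lookup, and the numbers reproduce the worked example above.

```ruby
# Hypothetical stand-in record; review_score plays the role of
# AssignmentParticipant#review_score (no database involved).
Participant = Struct.new(:id, :review_score)

# Same averaging as cycle_deviation_score, but the lookup is injected as a
# lambda instead of calling AssignmentParticipant.find.
def deviation_score(cycle, find_participant)
  return 0.0 if cycle.empty?
  total = cycle.sum { |participant, score_in_cycle|
    (find_participant.call(participant.id).review_score - score_in_cycle).abs
  }
  total.fdiv(cycle.size)
end

# Standard scores 90, 80 and 91; scores received inside the cycle 95, 90 and 91.
people = { 1 => Participant.new(1, 90), 2 => Participant.new(2, 80), 3 => Participant.new(3, 91) }
cycle = [[people[1], 95], [people[2], 90], [people[3], 91]]
puts deviation_score(cycle, ->(id) { people.fetch(id) }) # prints 5.0
```

In collusion_cycle_spec.rb itself, the same isolation would more naturally come from RSpec stubbing, for example `allow(AssignmentParticipant).to receive(:find).with(1).and_return(participant1)`, rather than from changing the method's signature.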

Test Description

Cycle Detection

1. two-node

2. three-node

3. four-node

Scores

1. cycle_similarity_score(cycle)

The code below contains the unit tests for the cycle_similarity_score(cycle) function. The function is simple because it does not call any other functions, so we only need to pass in appropriate data and check whether the output is correct. For each case (two nodes and three nodes), I pass a different cycle as input and check the function's result.

```ruby
context "#cycle_similarity_score" do
  it "similarity score of a 2-node cycle" do
    cycle = [[participant1, 100], [participant2, 90]]
    expect(colcyc.cycle_similarity_score(cycle)).to eq(10.0)
  end

  it "similarity score of a 3-node cycle" do
    cycle = [[participant1, 100], [participant2, 95], [participant3, 85]]
    expect(colcyc.cycle_similarity_score(cycle)).to eq(10.0)
  end
end
```
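The two tests above cover two- and three-node cycles. Since collusion_cycle.rb also handles four-node cycles, and cycle_similarity_score has a `count == 0.0` guard, expected values for extra cases can be checked outside Rails before they go into the spec. This sketch copies the function verbatim and uses plain symbols in place of participants (an assumption that is safe only because the function reads nothing but the scores); the four-node scores are made up for illustration.

```ruby
# Verbatim copy of cycle_similarity_score from collusion_cycle.rb, so it can run
# without Rails. Participants are replaced by symbols (assumption).
def cycle_similarity_score(cycle)
  similarity_score = 0.0
  count = 0.0
  for pivot in 0...cycle.size - 1 do
    pivot_score = cycle[pivot][1]
    for other in pivot + 1...cycle.size do
      similarity_score += (pivot_score - cycle[other][1]).abs
      count += 1.0
    end
  end
  similarity_score /= count unless count == 0.0
  similarity_score
end

# Candidate extra cases: a four-node cycle and a single-entry "cycle".
four_node = [[:p1, 100], [:p2, 94], [:p3, 94], [:p4, 94]]
single = [[:p1, 100]]

puts cycle_similarity_score(four_node) # prints 3.0 (differences 6, 6, 6, 0, 0, 0 over 6 pairs)
puts cycle_similarity_score(single)    # prints 0.0 (no pairs, so count stays 0.0)
```

These values could then be asserted in collusion_cycle_spec.rb in the same style as the existing tests, e.g. `expect(colcyc.cycle_similarity_score(cycle)).to eq(3.0)`.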

2. cycle_deviation_score(cycle)

Coverage Result

Future Work
