ECE506 CSC/ECE 506 Spring 2013/11a ad: Difference between revisions


11a. Performance of DSM systems. Distributed shared memory systems combine the programming models of shared memory systems, and the scalability of distributed systems. However, since DSM systems need extra coordination between software layer and underlying hardware, achieving good performance could be a big challenge. The factors that harm the performance could be the overhead to maintain cache coherence, memory consistency, and the latency of interconnections. Please further explore the factors that can affect the performance of DSM systems, and the improvements that have been made on the existing systems.

= Introduction =

<b>Under construction</b>

THE REFERENCES (correct formatting and hyperlinks), DEFINITIONS, AND QUIZ ARE STILL UNDER CONSTRUCTION. PLEASE BE PATIENT AND BE SURE TO RE-REVIEW BY THE END OF THE WEEK.

Parallel processor system architectures can be implemented as distributed shared memory (DSM) systems, in which single processors or clusters of processors are connected in a larger network of nodes. Each node has its own physical memory, but the physical memories are combined into one shared memory that every node can address.

This article discusses the requirements of three basic components of DSM parallel architecture design: cache coherence, memory consistency, and interconnects. Each is first described and then examined for performance concerns. Finally, case studies are performed on a hardware (Cray X1) and a software implementation (Shasta DSM protocol) of DSM systems to discuss specific performance improvements that have been made.

== Cache coherence ==

DSM systems must maintain cache coherence just as bus-based multiprocessor systems must. Cache coherence problems arise when it is undefined how a change of a value in a specific processor's cache is propagated to the other caches [1, p. 183]. If multiple processors access and modify a shared location in memory and produce outputs based on that shared variable, it is possible to calculate incorrect values if cache coherence is not maintained.

Ensuring that a value changed in one cache is sent to another cache is called <i>write propagation</i> [1, p. 183]. Write propagation is one of the requirements that must be met to provide cache coherence. Without write propagation, one processor could modify a cached value and not notify the other processors that have the same value cached. The other caches may believe they have the latest data, so on subsequent reads they will provide the stale value to their respective processors, leading to incoherent results.

Another requirement for cache coherence is <i>write serialization</i>, which Solihin [1, p. 183] defines as a requirement that "multiple changes to a single memory location are seen in the same order by all processors". If two processors perform writes to a single memory location in a certain order, then all other processors in the system should see the writes (by reading that memory location and subsequently caching the values) in the order in which they were written. If other processors observe the writes by reading the variable, but see the writes in different orders, multiple caches can end up holding incoherent copies of the same variable while each assumes it has the latest copy.

Thus, for correctness, the cache coherence implementation must provide both write propagation and write serialization.

To maintain cache coherence, a cache coherence protocol is implemented in hardware (or, in specific cases, in software). In DSM systems, the cache coherence controller in a node interfaces with the processor and its cache, but also has a communication link to the other nodes through an interconnect network via a <i>communication assist</i>. It receives and acts upon requests from the local processor, and it sends, receives, and acts on requests to and from other nodes as <i>network transactions</i> through the communication assist.

Unlike in bus-based multiprocessor systems, the coherence controllers are not connected by a medium that serializes communication, nor by bus signal lines such as the SHARED line (which is asserted in a bus-based system when another processor has a copy of the cache block being addressed). In bus-based systems, the bus is also the medium over which invalidations or updates are sent to other coherence controllers, depending on the coherence protocol, and it allows each controller to snoop requests from other coherence controllers, such as reads, read-exclusives, and flushes. Since a DSM system has no bus, but invalidations or updates must still reach other coherence controllers, they are sent as network transactions. Additionally, without a bus there is no guarantee that a request will be seen by other processors once it is sent, so acknowledgment messages are also sent as network transactions in response to requests.

DSM-based systems do not replicate the broadcasting of messages to other coherence controllers as bus-based systems do, because the bandwidth requirements would be prohibitively large. Many DSM systems use a construct called a <i>directory</i>, which records which nodes cache each block and in which state, to avoid having to broadcast the invalidations, updates, upgrades, interventions, flushes, and other messages that coherence controllers send on buses. The directory enables a node to select only the relevant subset of nodes as message recipients, thereby reducing network traffic.
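The traffic saving can be illustrated with a minimal sketch. The `DirectoryEntry` class, its state names, and both helper functions are hypothetical and exist only for this illustration; real directory formats vary by system.

```python
from dataclasses import dataclass, field

@dataclass
class DirectoryEntry:
    # Hypothetical per-block directory entry (names are assumptions).
    state: str = "U"                           # "U" uncached, "S" shared, "EM" exclusive/modified
    sharers: set = field(default_factory=set)  # ids of nodes holding a cached copy

def invalidation_targets(entry, writer):
    """Nodes that must be invalidated when `writer` wants to write the block."""
    return sorted(entry.sharers - {writer})

def broadcast_targets(num_nodes, writer):
    """What a bus-style broadcast would reach: every node except the writer."""
    return [n for n in range(num_nodes) if n != writer]

entry = DirectoryEntry(state="S", sharers={2, 5, 9})
print(invalidation_targets(entry, writer=5))   # [2, 9]
print(len(broadcast_targets(64, writer=5)))    # 63
```

In this assumed 64-node system, the directory lookup turns 63 broadcast messages into 2 targeted invalidations.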

To ensure that a directory is properly updated and reflects the true state of the caches, the directory has its own coherence protocol. It responds to read, read-exclusive (write), and upgrade requests from the different nodes, and it sends invalidations to sharer nodes, replies with and without data, and interventions. Solihin [1, p. 332-352] covers directory-based coherence protocols in further detail.

Each memory block address maps to a specific node (this mapping is generally determined at boot time), so a new term, <i>home node</i>, is introduced to signify the node that houses a specific block in memory. One naive implementation is a centralized directory that exists at one node, but this performs poorly because that node becomes a bottleneck for all transactions. A logical improvement is to use decentralized directories, where each home node maintains a directory for its own blocks. Since the mapping for a block is fixed, any node knows immediately which node is the home node and only needs to send requests to that node directly. Solihin [1, p. 325-327] covers home nodes and directory placement in further detail.
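As a rough sketch of how a fixed mapping lets any node find the home node without asking anyone, assume blocks are interleaved across nodes by block number. The interleaving scheme, `BLOCK_SIZE`, and `NUM_NODES` are assumptions chosen for the example, not the mapping of any particular machine.

```python
BLOCK_SIZE = 64   # bytes per memory block (assumed)
NUM_NODES = 16    # nodes in the system (assumed)

def home_node(addr):
    """Home node of the block containing `addr`, under block interleaving."""
    block_number = addr // BLOCK_SIZE
    return block_number % NUM_NODES

print(home_node(0x0000))  # 0
print(home_node(0x0040))  # 1
print(home_node(0x0400))  # 0  (block 16 wraps around to node 0)
```

Because the function depends only on the address, every node computes the same answer locally and can send its request straight to the home node's directory.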

== Memory consistency ==

DSM systems must also maintain memory consistency, just as bus-based systems do. Memory consistency is concerned with how all processors in the system see the ordering of loads and stores [1, p. 283]. If one or more processors see the loads and stores as occurring in different orders, it is possible that each develops an inconsistent view of memory. Systems maintain memory consistency by implementing memory consistency models, which are discussed in detail below.

The need for memory consistency can be explained through an example concerning programmers' intuition [1]. Suppose there is a program with three threads that synchronize through a cascading series of flags, as shown below. The example is inspired by one from [1, p. 285].

All variables are initialized to 0.

<pre>
P0:                  P1:                        P2:
s1: a = 1;           s3: while(!a_rdy) {}       s6: while(!ab_rdy) {}
s2: a_rdy = 1;       s4: b = a + 1;             s7: c = a * b;
                     s5: ab_rdy = 1;
</pre>

On the surface, it would be expected that P0 assigns a value to a and then sets a_rdy. P1 spins until a_rdy is set, at which point it assigns b = a + 1 = 2 and sets a flag indicating that a and b are ready. P2 spins on ab_rdy until P1 sets it, and then P2 finally sets c = a * b = 1 * 2 = 2.

Looking deeper, this behavior is expected only because s1 is assumed to precede s2 (s1->s2), and likewise s3->s4->s5 and s6->s7. Expecting the program to execute this way is the expectation of <i>program order</i>, which Solihin explains as "memory accesses are executed in a thread to follow the order in which they occur in the source code" [1, p. 285].

The other expectation is that memory accesses are <i>atomic</i>, in that the memory accesses (namely writes) occur without overlapping with other memory accesses. The example above can illustrate non-atomic (overlapping) memory accesses. Suppose P0 sends the new value of "a" to P1 and P2, and the value arrives at P1 immediately but, due to an interconnection delay, takes longer to propagate to P2. It is then possible that P2 executes s7 and updates c without having received the new value of "a" from P0, thereby setting c = a * b = 0 * 2 = 0, which is not the expected result [1].
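The delayed-propagation scenario can be replayed with a small deterministic simulation. This is only a sketch: the arrival times, the per-processor `view` dictionaries, and the `run` function are assumptions made for illustration, with each processor reading from its own local view of memory.

```python
def run(a_delay_to_p2):
    """Replay the three-thread example; return the value P2 computes for c.

    `a_delay_to_p2` is the (assumed) time at which P0's write of `a`
    becomes visible at P2. All other arrival times are fixed.
    """
    # Each message: (arrival_time, destination, variable, value)
    messages = [
        (1, "P1", "a", 1),               # s1 reaches P1 quickly
        (a_delay_to_p2, "P2", "a", 1),   # s1 may reach P2 late
        (2, "P1", "a_rdy", 1),           # s2 reaches P1
    ]
    view = {
        "P1": {"a": 0, "a_rdy": 0},
        "P2": {"a": 0, "b": 0, "ab_rdy": 0},
    }

    def deliver(up_to):
        # Apply every message that has arrived by time `up_to`.
        for t, dst, var, val in messages:
            if t <= up_to:
                view[dst][var] = val

    deliver(2)                    # P1 leaves its spin loop (s3) at t=2
    b = view["P1"]["a"] + 1       # s4
    messages.append((3, "P2", "b", b))        # s4 propagates to P2
    messages.append((4, "P2", "ab_rdy", 1))   # s5 propagates to P2
    deliver(4)                    # P2 leaves its spin loop (s6) at t=4
    return view["P2"]["a"] * view["P2"]["b"]  # s7

print(run(a_delay_to_p2=1))    # 2: the write of a reached P2 in time
print(run(a_delay_to_p2=10))   # 0: P2 still sees the old value of a
```

Program order is respected in both runs; only the lack of write atomicity changes the result.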

Together, the expectations of write atomicity and program order define the <i>sequential consistency</i> memory model.

Other, relaxed memory consistency models also exist, but all serve to give the programmer an understanding of the memory consistency provided by the system to ensure that programs produce the expected output. More information on memory consistency models can be found in Solihin [1].

== Interconnections ==

DSM systems consist of nodes that are connected together via interconnects. The collection of all interconnects combined is analogous to a bus on a bus-based multiprocessor system, but in the case of DSM systems, these interconnects are individual discrete connections.

Interconnects are the media through which cache coherence messages, such as invalidations, upgrades, and cache-to-cache transfers, must be sent. In bus-based systems, all processors sit on the same bus and generally see events on the bus at the same time and in the same order. Interconnects, in contrast, are point-to-point connections, so a message sent from one node to two others will likely not arrive at both at the same time, and messages may not arrive in order.

Since the interconnects do not preserve serialization, protocols must be developed that dictate which messages must be sent. In a bus-based system it can be taken for granted that a message put on the bus will be received, but this cannot be assumed for interconnects, so the protocols must include acknowledgement messages as responses to initiated messages. Messages are sent as discrete packets, which incur latency in being created. They must also be routed from point to point and are sometimes relayed through nodes as a series of responses and exchanges, so a transaction can incur extra latency compared with the same transaction on a bus-based system.

Special care must be taken to understand this latency and to account for it in the protocol implementation.

= Performance Concerns =

Compared to bus-based shared memory machines, a DSM machine has unique requirements for providing cache coherence and memory consistency, and it must do so over interconnects. The performance concerns of each of these concepts in DSM machines are discussed in detail below.

== Maintaining cache coherence ==

To maintain correct cache coherence, write propagation and write serialization must be provided, both of which can have adverse effects on performance.

Write serialization requires that all writes to a memory location be seen in the same order by other processors. Earlier, an example was given indicating how write serialization can be violated by observing writes out of order. A naive implementation of write serialization would require that a request and all its messages are performed atomically to avoid overlapping of requests [1, p. 338]. Solihin [1, p. 342-344] discusses correctness issues that can occur if requests are allowed to overlap without special consideration. A non-overlapping approach requires each request to have defined conditions indicating when it begins and when it ends, so that the home node can observe and wait for its completion before processing other requests to the same block.

The performance concern with disallowing overlapping requests is that subsequent read or write operations to the same block are delayed from starting, even if some of the messages within the requests could be overlapped without correctness concerns.

Another performance problem that can arise from cache coherence is false sharing, which can be explained by an example. Suppose two processors have a cache block cached in the shared state, but processor A is reading and writing a variable x within this block, and processor B is reading and writing a variable y within this block. Although neither processor is attempting to access the other's variable, both variables map to the same cache block, so each processor either invalidates or sends updates to the other <i>without the other actually needing the data</i>. In a DSM system, these invalidations or updates can unnecessarily consume a significant amount of interconnect bandwidth.
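A minimal sketch of this effect follows, using a deliberately simplified invalidation model in which only writes are tracked and each block has a single last writer. `BLOCK_SIZE`, the addresses, and `count_invalidations` are assumptions for illustration and do not model a full protocol.

```python
BLOCK_SIZE = 64  # bytes per cache block (assumed)

def count_invalidations(writes, addr_of):
    """Count invalidations caused by a sequence of (processor, variable) writes.

    Simplified model: a write to a block last written by a different
    processor invalidates that processor's copy.
    """
    last_writer = {}   # block number -> processor that last wrote it
    invalidations = 0
    for proc, var in writes:
        block = addr_of[var] // BLOCK_SIZE
        if block in last_writer and last_writer[block] != proc:
            invalidations += 1
        last_writer[block] = proc
    return invalidations

# A writes only x, B writes only y, alternating four times each.
writes = [("A", "x"), ("B", "y")] * 4

same_block = count_invalidations(writes, {"x": 0x1000, "y": 0x1008})
separate_blocks = count_invalidations(writes, {"x": 0x1000, "y": 0x1040})
print(same_block)        # 7
print(separate_blocks)   # 0
```

The access pattern is identical in both runs; only the placement of x and y relative to block boundaries changes the amount of coherence traffic.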

== Maintaining memory consistency ==

Depending on the memory consistency model that is being enforced, performance can be lost by having to ensure various degrees of atomicity of memory accesses and program ordering.

For sequential consistency, following program order requires that a thread's statements execute in the order defined by the source code. The implication is that statements within a thread cannot be executed out of order. Compiler and processor optimizations that reorder execution, in an attempt to reduce the latency of individual instructions and increase instruction-level parallelism on pipelined architectures, must therefore be avoided to varying degrees [1, p. 293].

For atomicity of memory accesses to be seen by all processors, special considerations need to be made for DSM systems. In general, all processors must be able to detect when a load or a store has completed. For a store (write atomicity) on a bus-based system, completion can be assumed as soon as the read-exclusive request reaches the bus. This is because all processors on the bus are guaranteed to see the request and will immediately invalidate their copies, so the next read to the location becomes a read miss, requiring the block to be re-cached with the most up-to-date value. On a DSM system, however, there is no bus, so a write cannot be assumed complete as soon as invalidations are sent on the network. Rather, the writer must wait until acknowledgements of the invalidations are received, which can take many hops and incur high latency, especially if there is network congestion [1, p. 292].
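The cost of waiting for acknowledgements can be sketched with a simple latency model. It assumes the request first travels to the home node, the home node sends invalidations to all sharers in parallel, and each sharer acknowledges to the requester. The message flow, the function name, and the latency numbers are assumptions for illustration; the model ignores the home node's own reply and any congestion.

```python
def dsm_write_latency(to_home, inv_latency, ack_latency):
    """Time until a write can be considered complete in the assumed model.

    to_home      -- latency of the request from the writer to the home node
    inv_latency  -- per-sharer latency of the invalidation from the home node
    ack_latency  -- per-sharer latency of the acknowledgement to the writer
    """
    # Invalidations go out in parallel, so the slowest
    # invalidation + acknowledgement pair determines completion.
    slowest_round = max(
        (inv + ack for inv, ack in zip(inv_latency, ack_latency)),
        default=0,
    )
    return to_home + slowest_round

# Three sharers at different network distances (arbitrary time units).
print(dsm_write_latency(to_home=3, inv_latency=[2, 5, 1], ack_latency=[2, 4, 6]))  # 12
```

In this model the write's completion time is set by the most distant or most congested sharer, whereas on a bus the write is treated as complete once the request appears on the bus.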

Overall, maintaining memory consistency on DSM systems can add latency while waiting for the acknowledgements that signal completion of writes, and can cost performance because of in-order execution.

Relaxed memory consistency models are normally used to alleviate these performance concerns, and are discussed in detail as improvements in specific DSM systems.

== Latency of interconnections ==

As mentioned in the cache coherence and memory consistency sections, interconnections distinguish DSM systems from bus-based systems. Unlike a bus, interconnections do not guarantee that messages reach their recipients, and messages are certainly not seen by all receivers at the same moment. Each message must be sent as a transaction in a networking protocol, and each packet incurs at least the latency of its hops through routers in the network, and can also incur latency in being generated.

Since messages would become ubiquitous if a DSM system were naively designed to behave identically to a bus-based system, care must be taken to design coherence protocols and consistency models that minimize the sending and receiving of messages. Where messages must be sent or received, the design should allow execution to overlap with them rather than block while waiting for messages or their acknowledgement.

Revision as of 19:50, 16 April 2013

Introduction

According to Solihin (p. 320), a bus-based shared memory structure works well for a relatively small number of processors (8-16). To provide shared memory for a much larger number of processors, a different organization is needed because of the physical limitations of the bus. There are two ways to create such a system: Distributed Shared Memory (DSM) or Non-Uniform Memory Access (NUMA). The benefit of DSM and NUMA is that they scale to a larger number of processors. The disadvantage is that scaling in this way may not be the most cost-effective solution (Solihin, p. 320). The remainder of this section discusses the performance of DSM systems.

According to Solihin (p. 320), two aspects restrict the scalability of bus-based multiprocessors: the physical limitations of interconnections and the limitations of the protocol. First, on a bus-based system, adding a processor does not affect any other physical restrictions on the system, but it does reduce the speed of the bus. Second, the protocol needed to maintain coherence does not scale well. As the number of processors in the system increases, the amount of traffic also increases, which risks overwhelming the available bandwidth. According to Solihin, there are a few ways to mitigate this problem. The following is from p. 321 of the Solihin textbook.

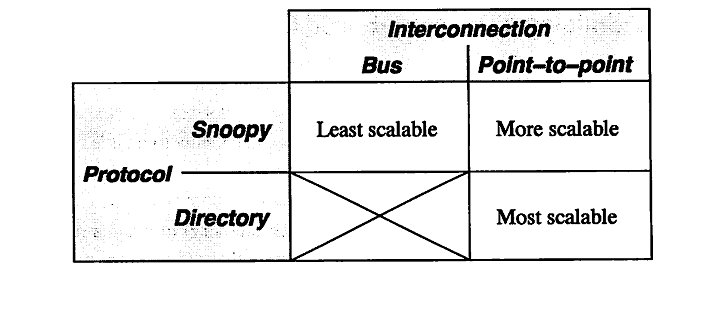

From the table, we can see that there are three ways to scale a multiprocessor system. The first is a single bus system. This is the least scalable because of the limitations of the bus wire itself: adding processors increases the wire length, which decreases the bus speed, and the growing amount of traffic can overwhelm the bus. The second is a point-to-point bus system. This keeps the bus relatively fast, but since the traffic still scales with the number of processors, the system is eventually limited by that traffic. Lastly, the most scalable system to date uses a directory. This keeps the bus fast because of the short wires, and keeps bus traffic low because the directory holds information on cache locations.

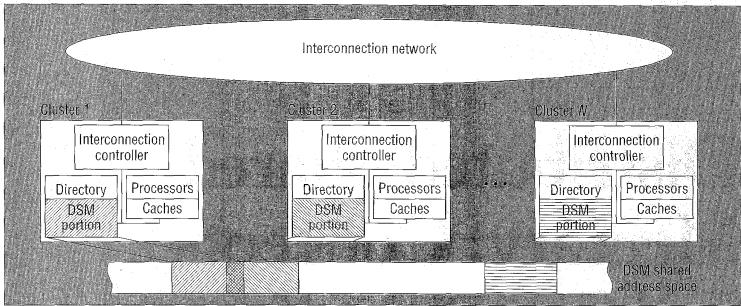

Protic <ref name="protic">Protic, J.; Tomasevic, M.; Milutinovic, V.; , "Distributed shared memory: concepts and systems," Parallel & Distributed Technology: Systems & Applications, IEEE , vol.4, no.2, pp.63-71, Summer 1996 doi: 10.1109/88.494605 paper</ref> describes a DSM system as one that "consists of multiple independent processing nodes with local memory modules, connected by a general interconnection network." In other words, rather than having the processors connected on a single bus line, there is a network of bus lines. The new issue that arises from this arrangement is how to communicate with another node. This is usually solved with a message passing model whose effectiveness depends on the topology that is used. According to Protic, "... compared to shared-memory systems, hardware problems are easier and software problems more complex in distributed-memory systems." The takeaway is that although the hardware limitation becomes less of a factor, the software problem becomes more complex. A good picture of what a DSM is, is shown below.


According to Nitzberg <ref name="nitzberg">Nitzberg, B.; Lo, V.; , "Distributed shared memory: a survey of issues and algorithms," Computer , vol.24, no.8, pp.52-60, Aug. 1991 doi: 10.1109/2.84877 paper</ref>, DSM has been researched since the 1980s. There are many reasons why DSM has been an area of research focus. Uniprocessor bus-based systems suffer from a hardware and software limitation that can be mitigated using a DSM. However, this has only become an issue as more and faster processors are added to the system. Three approaches have been used to implement a DSM system: hardware, operating system, and compiler implementations.

According to Shi <ref name="shi">Shi, Weisong. Performance Optimization of Software Distributed Shared Memory Systems. Beijing: Higher Education, 2004. Print. paper</ref>, "The main difficulty in building a software DSM system is solving the memory coherence problem, namely, the problem of how to make a newly written value to be visible to demanding processors on time." Regarding this issue, the two major points are the memory consistency model and cache coherence protocols. The best description is given by Shi<ref name="shi"></ref>, "...memory consistency model determines when the modified data should be visible to other processors and cache coherence protocol determines what values should be visible to other processors." As seen earlier, this is already a complex problem on a shared bus multiprocessor system, and adding a layer of abstraction makes it considerably larger. There are two classes of solutions to these two problems: hardware support and software support.

Software Support

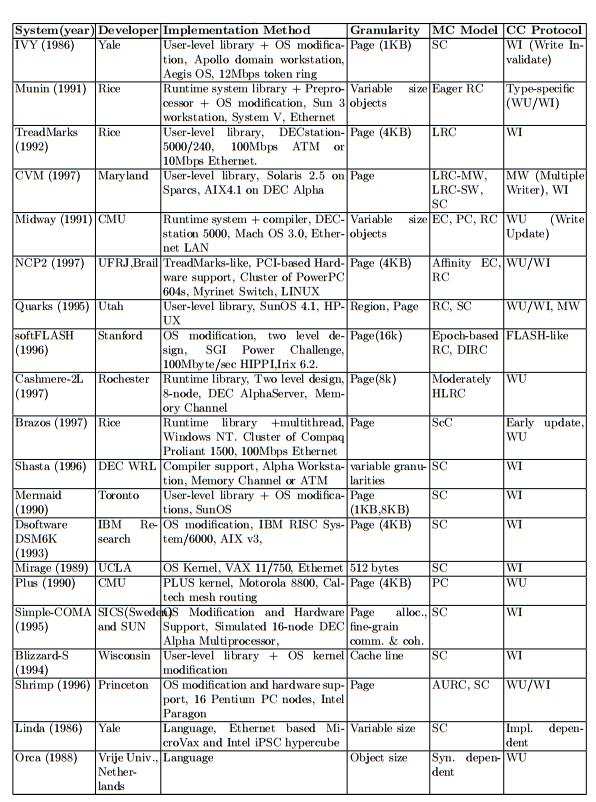

In 1986, the first software-supported DSM was created. In the more than 20 years since then, there have been great improvements upon that initial system. First, the software support will usually find some way to relax the memory consistency model, because message passing on a DSM is much more expensive than message passing on a single bus system. Over the last 20 years, over 20 different memory consistency models have been proposed <ref name="shi"></ref>. Second, cache coherence must be addressed. Having multiple cache copies means that when one copy is updated, the other cache copies must be affected in some way so that the old values are not used. Traditionally there are two techniques: snoopy protocols and directory-based protocols. According to Shi <ref name="shi"></ref>, snoopy protocols are used less because they require hardware support. Lastly, according to Shi <ref name="shi"></ref>, the major problem is the interface. For a DSM system to be competitive, it has to be able to work for many customers. Below is a listing of some representative software DSM implementations.

Hardware Support

Although a lot of research has gone towards software support for DSM, there has also been some research into adding hardware support. Unfortunately, according to Shi <ref name="shi"></ref>, large corporations have rejected hardware support, because using it raises an issue of compatibility. Fortunately, the recent adoption of certain hardware standards will allow for some hardware support at the mass level.

11a. Performance of DSM systems. Distributed shared memory systems combine the programming models of shared memory systems, and the scalability of distributed systems. However, since DSM systems need extra coordination between software layer and underlying hardware, achieving good performance could be a big challenge. The factors that harm the performance could be the overhead to maintain cache coherence, memory consistency, and the latency of interconnections. Please further explore the factors that can affect the performance of DSM systems, and the improvements that have been made on the existing systems.

Introduction

Under construction

THE REFERENCES (correct formatting and hyperlinks), DEFINITIONS, AND QUIZ ARE STILL UNDER CONSTRUCTION. PLEASE BE PATIENT AND BE SURE TO RE-REVIEW BY THE END OF THE WEEK.

Parallel processor system architectures can be implemented as distributed shared memory systems, in which clusters of processors or single processors are connected in a larger network of nodes, where each node has it's own physical memory, but the physical memories are combined into one shared memory which each node can address.

This article discusses the requirements of three basic components of DSM parallel architecture design: cache coherence, memory consistency, and interconnects. Each is first described and then examined for performance concerns. Finally, case studies are performed on a hardware (Cray X1) and a software implementation (Shasta DSM protocol) of DSM systems to discuss specific performance improvements that have been made.

Cache coherence

DSM systems must maintain cache coherence just as it required by bus-based multiprocessor systems. Cache coherence problems arise when it is undefined how a change of a value in a specific processor's cache is propagated to the other caches [1, p. 183]. If multiple processors access and modify a shared location in memory and produce outputs based on that shared variable, it is possible to calculate incorrect values if cache coherence is not maintained.

Ensuring that a value changed in one cache is sent to another cache is called write propagation. [1, p. 183] Write propagation is one of the requirements that must be addressed to be provide cache coherency. Without write propagation, one processor could modify a cached value and not notify the other processors that have the same value cached. The other caches may believe they have the latest data, thus on subsequent reads, their caches will provide it to their respective processors, leading to incoherent results.

Another requirement for cache coherence is write serialization, which Solihin [1 p. 183] defines as a requirement that "multiple changes to a single memory location are seen in the same order by all processors". If two processors perform writes to a single memory location in a certain order, then all other processors in the system should see the writes (by reading that memory location and subsequently caching the values) in the order in which they were written. If other processors observe the writes by reading the variable, but see the writes in different orders, this can lead to incoherent copies of the same variable in multiple caches while each think they have the latest copy.

Thus, for correctness purposes, it is required that write propagation and write serialization are provided by the cache coherence implementation.

In order to maintain cache coherence, a cache coherence protocol is implemented in hardware (or in specific cases, in software). In DSM systems, the cache coherence controller in a node interfaces with the processor and it's cache, but also has a communication link to the other nodes through an interconnect network via a communication assist. It receives and acts upon requests from the local processor, as well as sends and receives (and acts on) requests sent to/from other nodes as network transactions through the communication assist.

Unlike bus based multiprocessor systems, the coherence controllers are not connected with a medium that allows for (serialized) communication nor bus signal lines, such as the SHARED line (which is asserted in a bus based system when another processor has a copy of that cache block which is being addressed). In bus based systems, the bus is also the medium in which invalidations or updates are sent to other coherence controllers, depending on the coherence protocol. Further, bus based systems allow for snooping of requests from other coherence controllers such as read, read-exclusive, flushes, etc. Since no bus exists, but invalidations or updates have to be sent to other coherence controllers, these are sent as network transactions. Additionally, since no bus exists, it isn't guaranteed that a request will be seen by other processors once it is sent, so acknowledgment messages are also sent as network transactions in response to requests.

DSM based systems do not replicate the broadcasting of messages to other coherence controllers as bus based systems do because the bandwidth requirements would be prohibitively large. Many DSM systems utilize a construct called a directory that stores information about which cache block is cached in which state by the different nodes to avoid having to broadcast invalidations, updates, upgrades, interventions, flushes, or other messages sent by coherence controllers on buses. The directory enables a node to select a subset of nodes as message recipients intelligently, thereby reducing the network traffic.

To ensure that a directory is properly updated and reflects the true state of the caches, the directory has its own coherence protocol which responds to read, read exclusive (write), and upgrade requests from the different nodes, and sends messages including invalidations to sharer nodes, replies with and without data to nodes, and interventions to nodes. Solihin [1, p. 332-352] covers directory based coherence protocols in further detail.

Since each memory block address maps to a specific node (this mapping is generally determined at boot time), then a new term, home node, is introduced to signify the node which houses a specific block in memory. One naive implementation of directories is to have a centralized directory that exists at one node, but this suffers from performance problems since that node becomes a bottleneck for all transactions, so a logical improvement is to utilize decentralized directories, where each home node maintains a directory for its blocks. Since the mapping for a block is fixed, then any node knows immediately which is the home node, and only needs to send requests to that node directly. Solihin [1, p. 325-327] covers home nodes and directory placement in further detail.

Memory consistency

DSM systems must also maintain memory consistency just as bus based systems. Memory consistency is concerned with how all processors in the system see the ordering of loads and stores [1, p.283]. If one or more processors see the loads and stores as occurring in different orders, it is possible that each develops an inconsistent view of memory. Systems maintain memory consistency by implementing memory consistency models, which are discussed in detail below.

The need for memory consistency can be explained through an example concerning programmers intuition [1]. Suppose there is a program with three threads that has a cascading series of locks, as shown below, which is inspired by an example from [1, p. 285].

All variables are initialized to 0.

P0: P1: P2:

s1: a = 1; s3: while(!a_rdy) {} s6: while(!ab_rdy) {}

s2: a_rdy = 1; s4: b = a + 1; s7: c = a * b;

s5: ab_rdy = 1;

On the surface, it would be expected that P0 sets a value to a and sets a_rdy. P1 spins until a_rady is set, at which point it assigns b = a + 1 = 2, and sets a flag indicating a and b are ready. P2 spins on ab_rdy until P1 sets it, when P2 finally sets c = a * b = 1 * 2 = 2.

Looking deeper, it is clear that this is only expected behavior because it is expected that s1 precedes s2 (s1->s2), and s3->s4->s5, and s6->s7. Expecting that the program execute as this is called the expectation of program order, which Solihin explains as "memory accesses are executed in a thread to follow the order in which they occur in the source code" [1, p. 285].

The other expectation is that memory accesses are atomic, in that memory accesses (namely writes) occur without overlapping with other memory accesses. The example above can also illustrate non-atomic (overlapping) memory accesses. Suppose that P0 sends "a" to P1 and P2, and the value arrives at P1 immediately but, due to an interconnection delay, takes longer to propagate to P2. It is then possible that P2 executes s7 and updates c without having received the new value of "a" from P0, thereby setting c = a * b = 0 * 2 = 0, which is not the expected result [1].
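The effect of a delayed write can be reproduced with a small deterministic simulation of the three-thread example. This is a sketch under assumptions, not a model of any real machine: each processor holds its own view of memory, every write reaches other processors after a fixed per-link delay measured in abstract time steps, and the function name `simulate` and its parameter are hypothetical.

```python
def simulate(delay_p0_to_p2: int) -> int:
    """Run the three-thread example and return the value P2 computes for c.

    A write is visible to the writer at once and to each other processor
    after a link delay. All links take 1 step except P0 -> P2 (assumed).
    """
    views = [dict(a=0, b=0, a_rdy=0, ab_rdy=0, c=0) for _ in range(3)]
    in_flight = []  # (arrival_time, destination, variable, value)
    pc = [0, 0, 0]  # index of the next statement for P0, P1, P2

    def link_delay(src, dst):
        return delay_p0_to_p2 if (src, dst) == (0, 2) else 1

    def write(now, src, var, value):
        views[src][var] = value
        for dst in range(3):
            if dst != src:
                in_flight.append((now + link_delay(src, dst), dst, var, value))

    for now in range(1000):
        # Deliver every message that has arrived by this time step.
        for msg in [m for m in in_flight if m[0] <= now]:
            in_flight.remove(msg)
            _, dst, var, value = msg
            views[dst][var] = value

        # P0: s1 then s2, in program order.
        if pc[0] == 0:
            write(now, 0, "a", 1); pc[0] = 1
        elif pc[0] == 1:
            write(now, 0, "a_rdy", 1); pc[0] = 2

        # P1: s3 (spin), s4, s5.
        if pc[1] == 0:
            if views[1]["a_rdy"]:
                pc[1] = 1
        elif pc[1] == 1:
            write(now, 1, "b", views[1]["a"] + 1); pc[1] = 2
        elif pc[1] == 2:
            write(now, 1, "ab_rdy", 1); pc[1] = 3

        # P2: s6 (spin), s7.
        if pc[2] == 0:
            if views[2]["ab_rdy"]:
                pc[2] = 1
        elif pc[2] == 1:
            return views[2]["a"] * views[2]["b"]

    raise RuntimeError("simulation did not finish")
```

With a uniform delay, `simulate(1)` yields the expected result of 2. With a slow P0-to-P2 link, `simulate(50)` yields 0, because P2 sees ab_rdy and b from P1 before the new value of a arrives from P0, even though every processor executes its own statements in program order.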

Together, the expectations of write atomicity and program order define the sequential consistency memory model.

Other, relaxed memory consistency models also exist, but all serve to give the programmer an understanding of the memory consistency provided by the system, so that programs can be written to produce the expected output. More information on memory consistency models can be found in Solihin [1].

Interconnections

DSM systems consist of nodes that are connected together via interconnects. The collection of all interconnects combined is analogous to a bus on a bus-based multiprocessor system, but in the case of DSM systems, these interconnects are individual discrete connections.

Interconnects are the media through which cache coherence messages, such as invalidations, upgrades, and cache-to-cache transfers, are sent. In a bus-based system, all processors sit on the same bus and generally see bus events at the same time and in the same order. Interconnects, by contrast, are point-to-point connections, so a message sent from one node to two others will likely not arrive at both at the same time, and messages may not arrive in the order in which they were sent.

Since the interconnects do not preserve serialization, protocols must be developed that dictate which messages must be sent. In a bus-based system it can be taken for granted that a message put on the bus will be received. This cannot be taken for granted with interconnects, so the protocols must include acknowledgement messages as responses to initiated messages. Since messages must be sent as discrete packets, they incur latency in being created. Since they must also be routed from point to point, and are sometimes relayed through nodes as a series of responses and exchanges, a transaction can incur extra latency compared with the same transaction on a bus-based system.

Special care must be taken to understand this latency and to design around it in the protocol implementation.

Performance Concerns

A DSM machine has requirements that differ from those of bus-based shared memory machines: it must provide cache coherence and memory consistency over interconnects rather than over a bus. The performance concerns of each of these concepts in DSM machines are discussed in detail below.

Maintaining cache coherence

To maintain correct cache coherence, write propagation and write serialization must be provided, both of which can have adverse effects on performance.

Write serialization requires that all writes to a memory location be seen in the same order by other processors. Earlier, an example was given indicating how write serialization can be violated by observing writes out of order. A naive implementation of write serialization would require that a request and all its messages are performed atomically to avoid overlapping of requests [1, p. 338]. Solihin [1, pp. 342-344] discusses correctness issues that can occur if requests are allowed to overlap without special consideration. A non-overlapping approach would require that each request has defined conditions that indicate when it begins and when it ends, so that the home node can observe and wait for completion before processing other requests to the same block.
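The non-overlapping approach can be sketched as a home node that marks a block busy while a request is in flight and defers later requests to that block. This is a minimal illustration under assumptions: the class `HomeNode`, its method names, and the request labels are hypothetical, and the end condition of a request is reduced to an explicit `complete` call.

```python
from collections import deque


class HomeNode:
    """Serializes requests per block: one in flight, the rest deferred."""

    def __init__(self):
        self.busy = set()   # blocks with a request currently in progress
        self.pending = {}   # block -> deque of deferred (requester, kind)
        self.order = []     # order in which requests were actually started

    def request(self, block, requester, kind) -> bool:
        """Start the request now, or defer it if the block is busy."""
        req = (requester, kind)
        if block in self.busy:
            self.pending.setdefault(block, deque()).append(req)
            return False
        self._start(block, req)
        return True

    def complete(self, block) -> None:
        """Called once the in-flight request's end condition is observed."""
        self.busy.discard(block)
        queue = self.pending.get(block)
        if queue:
            self._start(block, queue.popleft())

    def _start(self, block, req) -> None:
        self.busy.add(block)
        self.order.append((block, req))
```

Requests to different blocks proceed independently, while a second request to a busy block waits until `complete` is called for the first. That wait is the source of the delay discussed next.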

The performance concern of disallowing overlapping of requests is that subsequent read or write operations to the same block would be delayed from initiating, even if some of the messages within the requests can be overlapped without correctness concerns.

Another performance problem that can arise from cache coherence is false sharing misses. False sharing can be explained by an example. Suppose two processors have a block cached in the shared state, but processor A is reading and writing a variable x within this block, while processor B is reading and writing a variable y within the same block. Although neither processor is attempting to access the other's variable, both variables map to the same cache block, so each processor either invalidates or sends updates to the other without the other actually needing the data. In a DSM system, these invalidations or updates can unnecessarily consume a significant amount of interconnect bandwidth.
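A rough way to see the cost of false sharing is to count coherence actions under a simplified model in which every write to a block last written by a different processor triggers one invalidation. The sketch below makes that assumption explicit. The 64-byte block size, the addresses, and the function names are hypothetical.

```python
BLOCK_SIZE = 64  # bytes per cache block (assumed value)


def block_of(address: int, block_size: int = BLOCK_SIZE) -> int:
    """Cache block number that an address maps to."""
    return address // block_size


def falsely_shared(addr_x: int, addr_y: int, block_size: int = BLOCK_SIZE) -> bool:
    """True if two distinct variables fall in the same cache block."""
    return addr_x != addr_y and block_of(addr_x, block_size) == block_of(addr_y, block_size)


def pad_to_block(address: int, block_size: int = BLOCK_SIZE) -> int:
    """Round an address up to the next block boundary."""
    return -(-address // block_size) * block_size


def count_invalidations(writes, block_size: int = BLOCK_SIZE) -> int:
    """Count invalidations for a sequence of (processor, address) writes.

    Simplified model: a write invalidates the other copy whenever the block
    was last written by a different processor.
    """
    last_writer = {}
    invalidations = 0
    for proc, addr in writes:
        block = block_of(addr, block_size)
        if block in last_writer and last_writer[block] != proc:
            invalidations += 1
        last_writer[block] = proc
    return invalidations


x, y = 0x1000, 0x1008                # x and y share one block
y_padded = pad_to_block(y)           # move y to its own block
shared = [("A", x), ("B", y)] * 3    # A and B alternate writes
separate = [("A", x), ("B", y_padded)] * 3
```

Under this model the alternating writes in `shared` cause five invalidations, while `separate` causes none, which is why padding or aligning independently written variables to block boundaries is a common mitigation.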

Maintaining memory consistency

Depending on the memory consistency model that is being enforced, performance can be lost by having to ensure various degrees of atomicity of memory accesses and program ordering.

For sequential consistency, to follow program order requires that the program executes statements in the order defined by the source code for a thread. The implication is that statements within a thread cannot be executed out of order, so compiler optimizations and processor optimizations that include out of order execution as an attempt to reduce the latency of individual instructions and increase instruction level parallelism on pipelined architectures must be avoided to varying degrees. [1, p. 293]

For atomicity of memory accesses to be seen by all processors, special considerations need to be made for DSM systems. In general, all processors must be able to detect when a load or a store has completed. For a store (write atomicity) on a bus-based system, completion can be assumed as soon as a read exclusive request reaches the bus. This is because all processors on the bus are guaranteed to see the request and will immediately invalidate their copies, so the next read to the location becomes a read miss and the block is re-cached with the most up-to-date value. On a DSM system, however, there is no bus, so a write cannot be assumed complete as soon as invalidations are sent on the network. Rather, the writer must wait until acknowledgements of the invalidations are received, which can take many hops and incur high latency, especially if there is network congestion [1, p. 292].
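The cost of waiting for acknowledgements can be estimated with a simple latency model. The sketch below is an assumption-laden illustration, not a measured or published model: each message costs a fixed generation overhead plus a per-hop routing cost in arbitrary time units, the message flow is writer to home, home to each sharer, and each sharer back to the writer, and all names and default values are hypothetical.

```python
def message_latency(hops: int, per_hop: int = 10, overhead: int = 5) -> int:
    """Latency of one message: generation overhead plus routing per hop."""
    return overhead + hops * per_hop


def write_completion_latency(hops_to_home: int, sharers) -> int:
    """Time until the writer may treat its write as complete.

    `sharers` is a list of (hops home->sharer, hops sharer->writer) pairs.
    Invalidations are assumed to go out in parallel, so the slowest
    invalidation/acknowledgement path determines completion.
    """
    request = message_latency(hops_to_home)
    if not sharers:
        # No copies to invalidate: the home simply replies to the writer.
        return request + message_latency(hops_to_home)
    slowest = max(
        message_latency(inv_hops) + message_latency(ack_hops)
        for inv_hops, ack_hops in sharers
    )
    return request + slowest
```

For example, with the default costs, a writer two hops from the home and sharers at (1, 1) and (3, 4) hops completes after 25 + (35 + 45) = 105 units, dominated by the most distant sharer. Congestion would only add to these figures.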

Overall, maintaining memory consistency on DSM systems can add latency while processors wait for the acknowledgements that signal completion of writes, and can cost performance by forcing in-order execution.

Relaxed memory consistency models are techniques normally used to alleviate performance concerns, and are discussed in detail as improvements in specific DSM systems.

Latency of interconnections

As mentioned in the cache coherence and memory consistency sections, interconnections uniquely distinguish DSM systems from bus-based systems. Interconnections are unlike a bus in that they do not guarantee that messages reach their recipients, and messages are certainly not seen by all receivers at the same moment. Each message must be sent as a transaction in a networking protocol, and each packet sent has at least the latency of its hops through routers in the network, and can also incur latency in being generated.

Since messages can become ubiquitous if the DSM system was naively designed to perform identically to a bus based system, care must be taken to design coherence protocols and consistency models that minimize the sending and receiving of messages, and if they must be sent or received, allow for overlapping of execution without blocking while waiting for messages or their receipt.