CSC/ECE 506 Spring 2012/10a dr: Difference between revisions

Revision as of 04:22, 3 April 2012

Prefetching and consistency models

Introduction

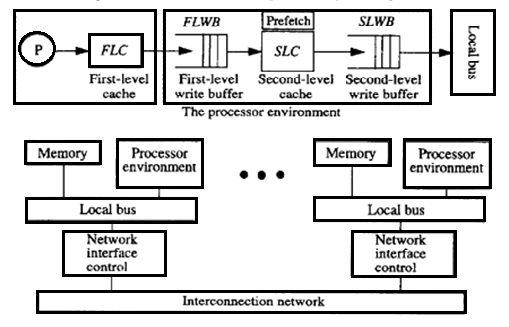

In

Prefetching

Sequential prefetching is a simple hardware-controlled prefetching technique that relies on automatically prefetching the consecutive blocks following the block that misses in the cache, thus exploiting spatial locality. In its simplest form, the number of prefetched blocks on each miss is fixed throughout the execution<ref>http://129.16.20.23/~pers/pub/j5.pdf</ref>.
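The effect of a fixed prefetch degree can be illustrated with a small simulation. The following is only a sketch under simplifying assumptions that are not from the cited paper: the cache is unbounded (so only first-touch misses occur), addresses are already block numbers, and the function name `simulate_fixed_sequential` is hypothetical.

```python
def simulate_fixed_sequential(block_trace, degree):
    """Count misses for a trace of block numbers with fixed-degree sequential prefetching.

    Assumption: the cache never evicts, so every miss is a first-touch miss.
    """
    cache = set()
    misses = 0
    for block in block_trace:
        if block not in cache:
            misses += 1
            cache.add(block)
            # On a miss, also bring in the next `degree` consecutive blocks.
            for offset in range(1, degree + 1):
                cache.add(block + offset)
    return misses


trace = list(range(16))  # purely sequential walk over 16 blocks
for k in (0, 1, 3):
    print("degree", k, "misses", simulate_fixed_sequential(trace, k))
```

On this purely sequential trace the miss count falls from 16 with no prefetching to 8 with a degree of 1 and to 4 with a degree of 3. A trace with little spatial locality would show no such benefit, which is the motivation for adapting the degree at run time.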

Prefetching is a common technique to reduce the read miss penalty. Prefetching relies on predicting which blocks currently missing in the cache will be read in the future and on bringing these blocks into the cache prior to the reference triggering the miss. Prefetching approaches proposed in the literature are software or hardware based.

Software-controlled prefetching schemes rely on the programmer/compiler to insert prefetch instructions prior to the instructions that trigger a miss. In addition, both the processor and the memory system must be able to support prefetch instructions, which can potentially increase the code size and the run-time overhead. By contrast, hardware-controlled prefetching relieves the programmer/compiler of the burden of deciding what and when to prefetch. Usually, these schemes take advantage of the regularity of data accesses in scientific computations by dynamically detecting access strides.
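Dynamic stride detection can be sketched in a few lines. The class below is a hypothetical illustration, not the mechanism of any particular processor: it follows a single access stream and predicts the next address only after the same non-zero stride has been observed twice in a row.

```python
class StrideDetector:
    """Tracks one access stream and predicts the next address once a stride repeats."""

    def __init__(self):
        self.last_addr = None
        self.last_stride = None

    def access(self, addr):
        """Record an access and return a predicted prefetch address, or None."""
        prediction = None
        if self.last_addr is not None:
            stride = addr - self.last_addr
            # Predict only when the same non-zero stride is seen twice in a row.
            if stride != 0 and stride == self.last_stride:
                prediction = addr + stride
            self.last_stride = stride
        self.last_addr = addr
        return prediction


detector = StrideDetector()
predictions = [detector.access(a) for a in (100, 108, 116, 124, 200)]
print(predictions)  # [None, None, 124, 132, None]
```

The first two accesses only establish the stride of 8, the next two confirm it and yield predictions, and the jump to address 200 breaks the pattern so no prefetch is suggested.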

There are two types of sequential prefetching techniques:

1. Fixed sequential prefetching

2. Adaptive sequential prefetching

These are discussed in more detail in the following sections:

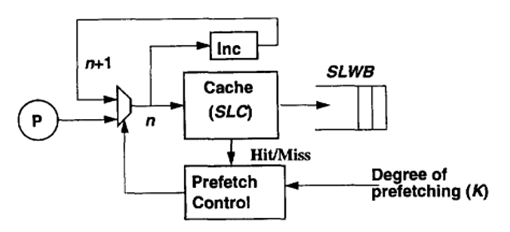

B

C

If k

D

| Mechanism | Purpose |
|---|---|
| PrefetchBit (per cache line) | Used to detect useful prefetches (needed when prefetching is turned on). |
| ZeroBit (per cache line) | Used to detect when a prefetch would have been useful (needed when prefetching is turned off). |
| LookaheadCounter (per cache) | The current degree of prefetching. |
| PrefetchCounter (per cache) | Counts the number of prefetches that have been returned after each read miss. |
| UsefulCounter (per cache) | Counts the number of useful prefetches. |
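How the per-cache counters in the table could interact is sketched below. This is an illustrative assumption rather than the exact scheme from the cited paper: the window size, the two thresholds, the maximum degree, and all method names are hypothetical, and the ZeroBit path (used to re-enable prefetching once the degree reaches zero) is left out.

```python
class AdaptivePrefetchState:
    """Per-cache counters from the table, driven by hypothetical thresholds."""

    def __init__(self, window=16, high=12, low=4, max_degree=8):
        self.lookahead = 1         # LookaheadCounter: current degree of prefetching
        self.prefetches = 0        # PrefetchCounter
        self.useful = 0            # UsefulCounter
        self.prefetch_bit = set()  # blocks whose PrefetchBit is currently set
        self.window, self.high, self.low = window, high, low
        self.max_degree = max_degree

    def prefetch_returned(self, block):
        """A prefetched block arrived: set its PrefetchBit and count it."""
        self.prefetch_bit.add(block)
        self.prefetches += 1
        if self.prefetches == self.window:
            self._adapt()

    def reference(self, block):
        """The first reference to a prefetched block counts as a useful prefetch."""
        if block in self.prefetch_bit:
            self.prefetch_bit.discard(block)
            self.useful += 1

    def _adapt(self):
        # Compare useful prefetches in this window against the two thresholds.
        if self.useful > self.high:
            self.lookahead = min(self.lookahead + 1, self.max_degree)
        elif self.useful < self.low:
            self.lookahead = max(self.lookahead - 1, 0)
        self.prefetches = 0
        self.useful = 0


state = AdaptivePrefetchState()
for b in range(16):            # every prefetched block is referenced
    state.prefetch_returned(b)
    state.reference(b)
print(state.lookahead)         # degree raised to 2
for b in range(100, 132):      # two windows of prefetches that are never used
    state.prefetch_returned(b)
print(state.lookahead)         # degree lowered to 0
```

Once the degree drops to zero no prefetches are issued, so PrefetchBit can no longer provide feedback. That is the situation the ZeroBit entry in the table is meant to handle.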

Consistency models <ref>http://titanium.cs.berkeley.edu/papers/kamil-su-yelick-sc05.pdf</ref> <ref>http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>

The interface for memory in a shared memory multiprocessor is called a memory consistency model. As the interface between the programmer and the system, the effect of the memory consistency model is pervasive in a shared memory system. The model affects programmability because programmers must use it to reason about the correctness of their programs. The model affects the performance of the system because it determines the types of optimizations that may be exploited by the hardware and the system software. Finally, due to a lack of consensus on a single model, portability can be affected when moving software across systems supporting different models.

A memory consistency model specification is required for every level at which an interface is defined between the programmer and the system. At the machine code interface, the memory model specification affects the designer of the machine hardware and the programmer who writes or reasons about machine code. At the high level language interface, the specification affects the programmers who use the high level language and the designers of both the software that converts high-level language code into machine code and the hardware that executes this code. Therefore, the programmability, performance, and portability concerns may be present at several different levels.

In summary, the memory model influences the writing of parallel programs from the programmer’s perspective, and virtually all aspects of designing a parallel system (including the processor, memory system, interconnection network, compiler, and programming languages) from a system designer’s perspective.

The memory consistency model in shared memory parallel programming controls the order in which memory operations performed by one thread may be observed by another. Consistency models are used in distributed systems such as distributed shared memory systems or distributed data stores (for example filesystems, databases, optimistic replication systems or Web caches). A system supports a given model if operations on memory follow specific rules. The data consistency model specifies a contract between programmer and system, wherein the system guarantees that if the programmer follows the rules, memory will be consistent and the results of memory operations will be predictable.

Sequential Consistency Model (SC) <ref>A Primer on Memory Consistency and Cache Coherence</ref>

Arguably the most intuitive memory consistency model is sequential consistency (SC). Sequential consistency was first formalized by Lamport. Lamport first called a single processor (core) sequential if “the result of an execution is the same as if the operations had been executed in the order specified by the program.” He then called a multiprocessor sequentially consistent if “the result of any execution is the same as if the operations of all processors (cores) were executed in some sequential order, and the operations of each individual processor (core) appear in this sequence in the order specified by its program.” This total order of operations is called memory order. In SC, memory order respects each core’s program order, but other consistency models may permit memory orders that do not always respect the program orders.
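Lamport's definition can be checked mechanically on a small program by enumerating every memory order that respects each core's program order. The two-core program below (variables `x` and `y`, registers `r1` and `r2`, all initially 0) is a hypothetical litmus test chosen for illustration and is not taken from the cited text.

```python
def interleavings(a, b):
    """Yield every merge of lists a and b that preserves the order within each."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


def execute(memory_order):
    """Run operations in the given total order and return the register values."""
    memory = {"x": 0, "y": 0}  # assumed initial values
    regs = {}
    for op, var, arg in memory_order:
        if op == "W":
            memory[var] = arg        # arg is the value written
        else:
            regs[arg] = memory[var]  # arg is the destination register
    return regs


p1 = [("W", "x", 1), ("R", "y", "r1")]  # core 1: x = 1; r1 = y
p2 = [("W", "y", 1), ("R", "x", "r2")]  # core 2: y = 1; r2 = x

outcomes = set()
for order in interleavings(p1, p2):
    regs = execute(order)
    outcomes.add((regs["r1"], regs["r2"]))
print(sorted(outcomes))  # [(0, 1), (1, 0), (1, 1)]
```

The outcome `(0, 0)` never appears: it would require each read to precede the other core's write, which no total order respecting both program orders allows. Models that relax the ordering between a write and a later read can permit it.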

Sequential Consistency Example:

From figure 2.1, P2 does not see P1’s write of z = 5 before its first read of z, so it happens to have an out-of-date value.

However, the write propagates to P2 before its second read of z.

This is legal under SC because:

-> Processors do not always have to see up-to-date values

-> Processors just need to see writes in the order they happen

Note: it would also have been legal under SC for W(z) 1 to propagate to P2 before its first read of z or after its second read of z.
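The three legal placements of P1's write relative to P2's two reads can be enumerated directly. The sketch below assumes that z initially holds 0 and that the write stores 5; the initial value is an assumption, since it is not stated in the text, and the function name `p2_reads` is hypothetical.

```python
def p2_reads(write_position, initial=0, written=5):
    """Return the values of P2's two reads of z for one placement of P1's write.

    write_position is the slot of the write in the memory order:
    0 = before both reads, 1 = between them, 2 = after both.
    """
    z = initial
    reads = []
    for slot in range(3):
        if slot == write_position:
            z = written
        if slot < 2:
            reads.append(z)
    return tuple(reads)


legal = {p2_reads(position) for position in range(3)}
print(sorted(legal))    # [(0, 0), (0, 5), (5, 5)]
print((5, 0) in legal)  # False
```

P2 may see the old value twice, the old value followed by the new one, or the new value twice. Seeing the new value and then the old one is not produced by any placement, because P2's reads stay in program order and there is only one write to z.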

Relaxed Consistency Models

So

Conclusion

H

Using

I

Even

External links

1. Sequential hardware prefetching in shared-memory multiprocessors

2. Making Sequential Consistency Practical in Titanium

References

<references/>