CSC/ECE 506 Fall 2007/wiki3 1 ncdt


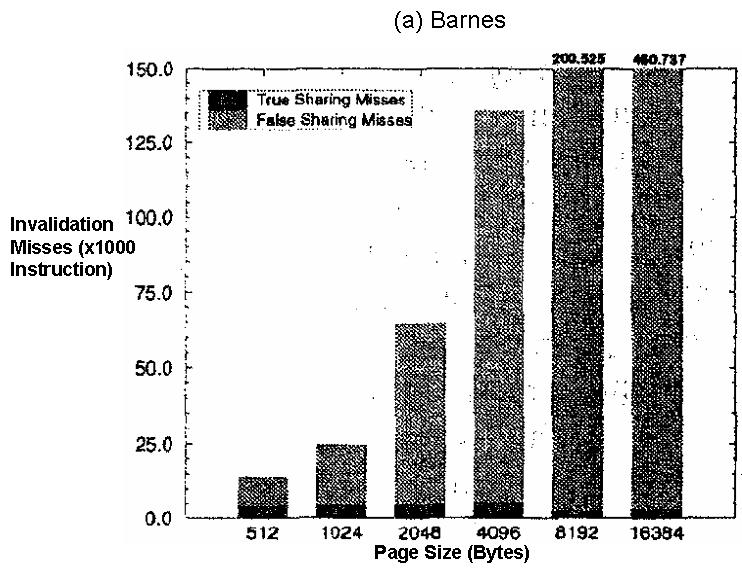

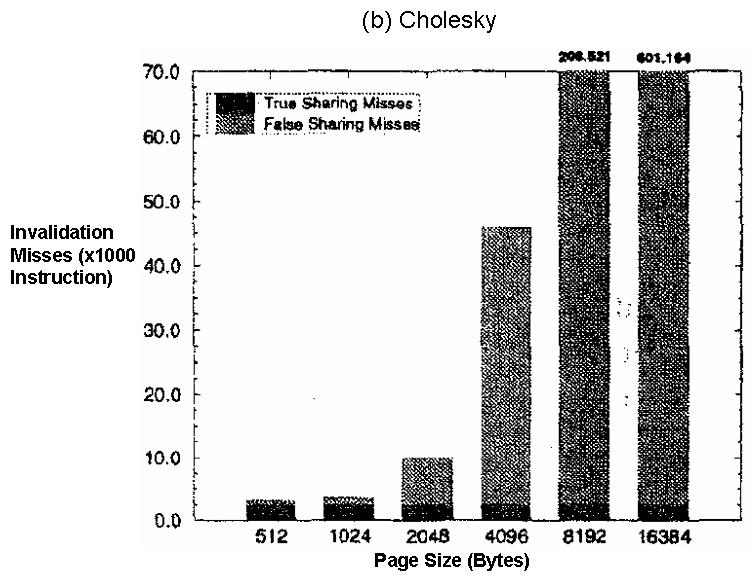


Revision as of 03:18, 20 October 2007

Introduction

In a multiprocessor using a snoopy coherence protocol, overall cache performance combines two effects: the uniprocessor cache miss traffic and the traffic caused by interprocessor communication, which results in invalidations and subsequent cache misses. Uniprocessor misses are classified into the three C’s: capacity, compulsory, and conflict misses [1]. Similarly, misses that arise from interprocessor communication are called coherence misses and come from two different sources:

1. True sharing misses: These misses are caused by the communication of data through the cache coherence mechanism. For example, in an invalidation-based protocol, the first write by a processor to a shared cache block causes an invalidation to establish ownership of that block. Subsequently, when another processor attempts to read a modified word in that cache block, a miss occurs and the block, now holding the new value, is transferred to the reading processor.

2. False sharing misses: These misses arise from the use of an invalidation-based coherence protocol with a single valid bit per cache block. False sharing occurs when a block is invalidated and a subsequent reference to it misses because some word in the block, other than the one being read, was written.
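The two categories above can be made concrete with a small trace-driven classifier. The sketch below is a simplified illustration, not the method used in the cited studies: it assumes infinite caches (so there are no capacity or conflict misses), an invalidation-based protocol with one valid bit per block, and it labels a coherence miss as true sharing only when the word touched on that miss was written by another processor since the local copy was invalidated. The function name `classify_accesses` and the trace format are hypothetical.

```python
from collections import defaultdict


def classify_accesses(trace, words_per_block):
    """Label each access as 'hit', 'cold', 'true_sharing', or 'false_sharing'.

    trace: list of (processor, op, word_address) with op 'R' or 'W'.
    Simplifying assumptions (illustrative only):
      * infinite caches, so no capacity or conflict misses;
      * invalidation-based protocol with one valid bit per block;
      * a write to a block the writer already holds counts as a hit
        (the upgrade transaction is not counted as a miss);
      * a coherence miss is 'true_sharing' if the word it touches was
        written by another processor since the local copy was invalidated.
    """
    valid = defaultdict(set)   # processor -> blocks it currently holds
    seen = defaultdict(set)    # processor -> blocks it has ever held
    written_since_inval = {}   # (processor, block) -> words written by others
    labels = []
    for proc, op, addr in trace:
        block, word = divmod(addr, words_per_block)
        if block in valid[proc]:
            labels.append('hit')
        elif block not in seen[proc]:
            labels.append('cold')
        elif word in written_since_inval.get((proc, block), set()):
            labels.append('true_sharing')
        else:
            labels.append('false_sharing')
        # The access (re)loads the block into this processor's cache.
        valid[proc].add(block)
        seen[proc].add(block)
        written_since_inval.pop((proc, block), None)
        if op == 'W':
            # Invalidate every other cached copy of the block...
            for other, blocks in valid.items():
                if other != proc and block in blocks:
                    blocks.discard(block)
                    written_since_inval[(other, block)] = set()
            # ...and record which word changed for every invalidated copy.
            for (other, b), words in written_since_inval.items():
                if b == block and other != proc:
                    words.add(word)
    return labels


trace = [
    (0, 'W', 0),  # P0 writes word 0 of block 0
    (1, 'R', 1),  # P1 reads word 1 of the same block
    (0, 'W', 0),  # P0 writes word 0 again, invalidating P1's copy
    (1, 'R', 1),  # P1 rereads word 1, which nobody changed
    (0, 'W', 1),  # P0 now writes word 1, invalidating P1 again
    (1, 'R', 1),  # P1 reads the new value of word 1
]
print(classify_accesses(trace, words_per_block=4))
```

With four-word blocks the fourth access is a false sharing miss and the last one a true sharing miss. Rerunning the same trace with one-word blocks turns the false sharing miss into a hit, while the true sharing miss remains, matching the observation that true sharing misses occur independently of block size.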

Coherence misses become significant for tightly coupled applications that share a large amount of data, such as commercial workloads.

Effect of false sharing

If the word modified in a block is actually used by the processor that received the invalidation, the reference is a true sharing reference and would have caused a miss regardless of the block size. If, however, the word being written and the word being read are different, the invalidation does not communicate a new value but only causes an extra cache miss, which is a false sharing miss. Increasing the cache block size therefore tends to increase false sharing misses, because more unrelated words end up in each block. Although large blocks exploit spatial locality and can decrease the effective memory access time, they also tend to group data together even though only part of it is needed by any one processor. In general, past studies have shown that, as the cache block size increases, the data cache miss rate in multiprocessors changes less predictably than in uniprocessors [2].
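To illustrate the block-size trade-off described above, the following toy simulation gives each processor its own contiguous chunk of words and lets the processors write their chunks in round-robin turns. This is a hypothetical workload, not one of the applications in Figure 1. Because no processor ever touches another processor's words, every coherence miss in this model is a false sharing miss. It assumes infinite caches and an invalidation-based protocol; `count_misses` and its parameters are illustrative names.

```python
def count_misses(num_procs, words_per_proc, words_per_block, rounds):
    """Return (cold_misses, false_sharing_misses) for a toy workload.

    Hypothetical workload: processor p owns the words
    [p * words_per_proc, (p + 1) * words_per_proc) and, in round-robin
    turns, writes every word it owns. No processor ever touches another
    processor's words, so every coherence miss is a false sharing miss.
    Assumes infinite caches and an invalidation-based protocol.
    """
    valid = [set() for _ in range(num_procs)]  # blocks each cache holds now
    seen = [set() for _ in range(num_procs)]   # blocks each cache ever held
    cold = false_sharing = 0
    for _ in range(rounds):
        for p in range(num_procs):
            start = p * words_per_proc
            for addr in range(start, start + words_per_proc):
                block = addr // words_per_block
                if block not in valid[p]:
                    if block in seen[p]:
                        false_sharing += 1  # lost the block to another writer
                    else:
                        cold += 1           # first reference to this block
                    valid[p].add(block)
                    seen[p].add(block)
                # The write invalidates every other processor's copy.
                for q in range(num_procs):
                    if q != p:
                        valid[q].discard(block)
    return cold, false_sharing


for b in (1, 2, 4, 8, 16):
    print(b, count_misses(num_procs=4, words_per_proc=4, words_per_block=b, rounds=10))
```

With four processors owning four words each, the loop prints (16, 0), (8, 0), (4, 0), (4, 36), and (4, 36) for block sizes of 1, 2, 4, 8, and 16 words. Larger blocks cut cold misses through spatial locality, but once a block spans two processors' chunks, every turn after the first round produces a false sharing miss.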

The following figure shows the impact of false sharing on different parallel applications running on 32 processors:

Figure 1: The number of false sharing misses and true sharing misses that occur during the execution of each parallel application: (a) Barnes and (b) Cholesky [3].

Not only do false sharing misses increase the latency of memory accesses, they also generate traffic between processors and memory. As the block size increases, each miss transfers more data and therefore produces more traffic. Much of this extra traffic is wasted because many of the transferred words are never used: between two consecutive misses on a given block, a processor usually references only a small number of distinct words in that block.
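The traffic argument can be checked with a rough word-count model. The sketch below assumes that every miss transfers one whole block, and it counts as "used" the distinct words a processor touches in a block between the miss that fetched it and the invalidation (or the end of the trace) that ends its stay. Caches are assumed infinite and the protocol invalidation-based; `transfer_stats` and the trace format are hypothetical.

```python
from collections import defaultdict


def transfer_stats(trace, words_per_block):
    """Return (words_transferred, words_used) for a trace of accesses.

    trace: list of (processor, op, word_address) with op 'R' or 'W'.
    Every miss is assumed to transfer one whole block. 'Used' counts the
    distinct words a processor touches in a block between the miss that
    fetched it and the invalidation (or end of trace) that ends its stay.
    Assumes infinite caches and an invalidation-based protocol.
    """
    resident = defaultdict(dict)  # processor -> {block: words touched so far}
    transferred = used = 0
    for proc, op, addr in trace:
        block, word = divmod(addr, words_per_block)
        if block not in resident[proc]:
            transferred += words_per_block  # miss: fetch the whole block
            resident[proc][block] = set()
        resident[proc][block].add(word)
        if op == 'W':
            # Invalidate other copies, crediting the words each copy used.
            for other, blocks in resident.items():
                if other != proc and block in blocks:
                    used += len(blocks.pop(block))
    # Blocks still cached when the trace ends.
    used += sum(len(words) for blocks in resident.values() for words in blocks.values())
    return transferred, used


trace = [(0, 'W', 0), (1, 'W', 1)] * 3  # two processors writing adjacent words
for b in (1, 8):
    print(b, transfer_stats(trace, words_per_block=b))
```

For this trace, one-word blocks transfer 2 words and use both, while eight-word blocks transfer 48 words of which only 6 are used, because each write by one processor forces the other to refetch the entire block.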