CSC/ECE 506 Spring 2012/7b yw: Difference between revisions

| Line 35: | Line 35: | ||

Problems occur when we extend TLB table into multi-processor systems. That is when memory is shared, two processors may have different virtual names for the same block. | Problems occur when we extend TLB table into multi-processor systems. That is when memory is shared, two processors may have different virtual names for the same block. | ||

tlbCo.PNG | [[Image:tlbCo.PNG|thumbnail|right|200px|Virtual Memory in modern computer systems<sup><span id="3body"></span></sup>]] | ||

This cause inconsistent loads and stores when more than one processors are trying to access the same blocks of data. | This cause inconsistent loads and stores when more than one processors are trying to access the same blocks of data. | ||

Revision as of 02:20, 19 March 2012

Introduction

In this chapter we will first introduce the concept of virtual address memories, more precisely, virtually addressed caches. Pros and cons of virtually addressed caches are discussed. Then we discuss the need for TLB, a cache-like construct that translate virtual address to physical address. Then we raise the issue of TLB coherence.

Virtually addressed caches

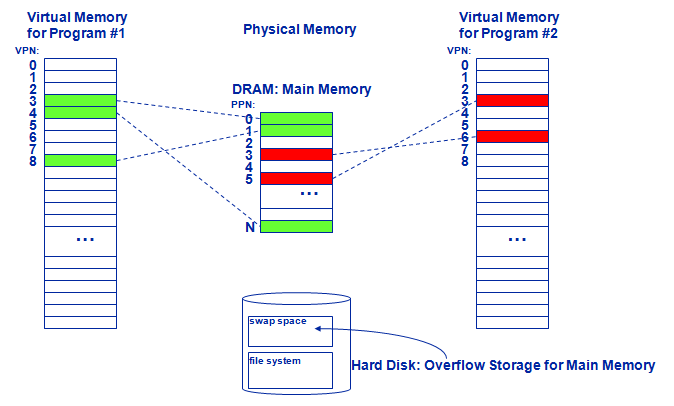

Virtual memory is a memory management technology developed for multithread operating systems. This technique virtualizes a computer architecture's various forms of computer data storage (such as random-access memory and disk storage), allowing a program to be designed as though there is only one kind of memory, "virtual" memory, which behaves like directly addressable read/write memory (RAM).

This technique greatly simplifies programmers job because modern operating system runs each process on its own dedicated virtual memory space. Thus each program runs as if it has the sole access of the virtual memory. In this way, programmers don't have to worry about how operating system switches between processes or how other process operates. Also, Virtual memory makes application programming easier by hiding fragmentation of physical memory; by delegating to the kernel the burden of managing the memory hierarchy (eliminating the need for the program to handle overlays explicitly); and, when each process is run in its own dedicated address space, by obviating the need to relocate program code or to access memory with relative addressing.

The introducing of virtual memory raise the problem of translating virtual address to physical address since we need physical address to access the actual content stored in cache. And that's where Translation Lookaside Buffer comes into use.

What is TLB

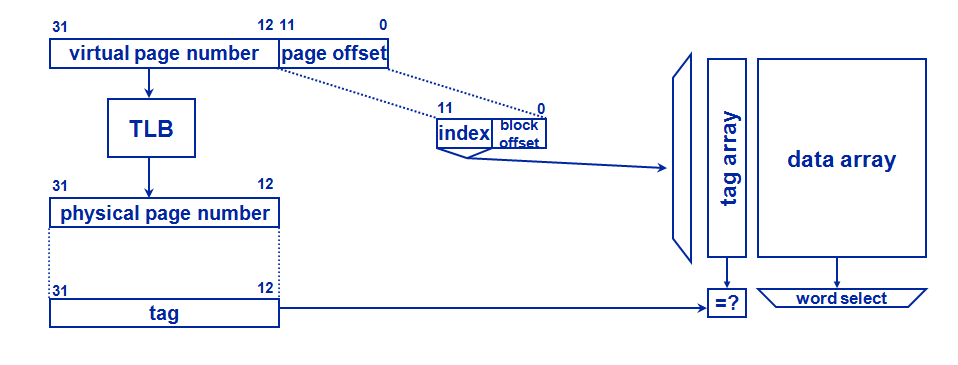

A TLB has a fixed number of slots that contain page table entries, which map virtual addresses to physical addresses. The virtual memory is the space seen from a process. This space is segmented in pages of a prefixed size. The page table (generally loaded in memory) keeps track of where the virtual pages are loaded in the physical memory. The TLB is a cache of the page table; that is, only a subset of its content are stored. The TLB references physical memory addresses in its table. It may reside between the CPU and the CPU cache, between the CPU cache and primary storage memory, or between levels of a multi-level cache. The placement determines whether the cache uses physical or virtual addressing. If the cache is virtually addressed, requests are sent directly from the CPU to the cache, and the TLB is accessed only on a cache miss. If the cache is physically addressed, the CPU does a TLB lookup on every memory operation and the resulting physical address is sent to the cache. There are pros and cons to both implementations. Caches that use virtual addressing have for their key part of the virtual address plus, optionally, a key called an "address space identifier" (ASID). Caches that don't have ASIDs must be flushed every context switch in a multiprocessing environment. In a Harvard architecture or hybrid thereof, a separate virtual address space or memory access hardware may exist for instructions and data. This can lead to distinct TLBs for each access type. A common optimization for physically addressed caches is to perform the TLB lookup in parallel with the cache access. The low-order bits of any virtual address (e.g., in a virtual memory system having 4 KB pages, the lower 12 bits of the virtual address) represent the offset of the desired address within the page, and thus they do not change in the virtual-to-physical translation. During a cache access, two steps are performed: an index is used to find an entry in the cache's data store, and then the tags for the cache line found are compared. If the cache is structured in such a way that it can be indexed using only the bits that do not change in translation, the cache can perform its "index" operation while the TLB translates the upper bits of the address. Then, the translated address from the TLB is passed to the cache. The cache performs a tag comparison to determine if this access was a hit or miss. It is possible to perform the TLB lookup in parallel with the cache access even if the cache must be indexed using some bits that may change upon address translation; see the address translation section in the cache article for more details about virtual addressing as it pertains to caches and TLBs.

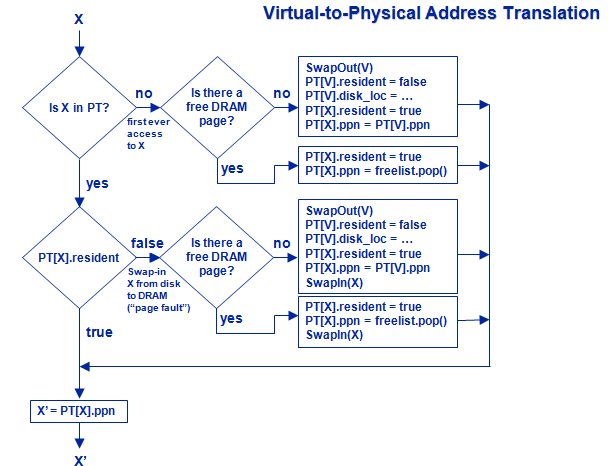

And here is generally how a typical TLB is working. Note that X is the virtual page number to be translated into a physical page number, X' is the Physical number corresponding to X. and V is the Virtual page number of a victim page(that is a page to be kicked off the table).

Next in this chapter we are going to discuss problems that are going to occur when we extend this TLB structure to multi-processor systems. The solution to these problems is presented in next chapter.

TLB Coherence issues

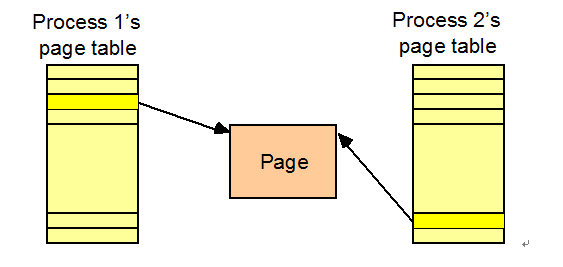

Problems occur when we extend TLB table into multi-processor systems. That is when memory is shared, two processors may have different virtual names for the same block.

This cause inconsistent loads and stores when more than one processors are trying to access the same blocks of data.

For example, Assume:

- The processor has been running Process 1

- It then switches to Process 2

- Later it switches back to Process 1

Now Process 2 caches a different copy of the information than Process 1. When Process 2 makes a change, that change is not reflected in Process 1's copy of the information.

Solutions

In this section, we will talk about several solutions to handle TLB coherence problem. We will focus most on the last approach - TLB shootdown - a commonlly used software approach to enforce TLB coherence. Some other approaches will be briefly introduced.

Virtually addressed caches

This method is to get rid of TLB. If we do not need TLB, we will not have the Coherence problem.

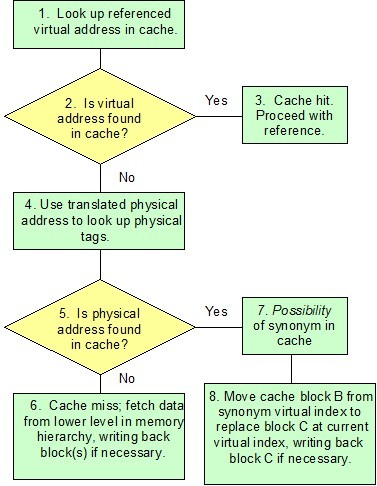

When virtually addressed cache is used, address translations only happen when there is a cache miss. Since it is not frequently to access page mappings, we do not need to use TLB any more. Figure shows how virtually addressed cache works.

The key distinction between virtually and physically addressed caches is that Virtually addressed caches are indexed using part of a virtual address rather than a physical address. Virtually addressed caches offer potentially faster access times by avoiding the delay associated with address translation. <ref>the effects of virtually addressed caches on virtual memory design and performance JonInouye - et al.</ref>

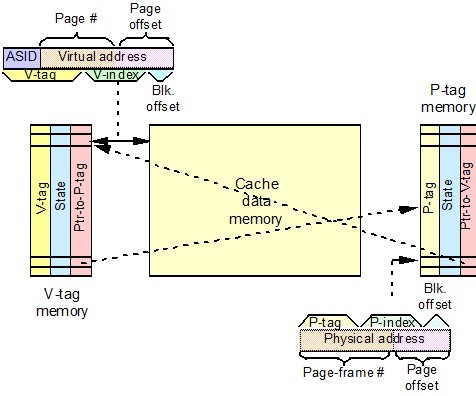

Figure shows the organization of virtually addressed caches.

From the figure “ASID” means “address-space identifier,” which is often called a process identifier (PID) in some books. The diagram shows the “V-index” overlapping the (virtual) page number. If not, we wouldn’t need a virtually addressed cache. We could do address translation in parallel with cache access with a physically addressed cache. Also the V-index actually does not stored in the cache. Like the index (“set” or “line”) field in physically addressed caches, it is not stored, but just tells what line or set to look in for the data<ref>lec15 from NCSU ECE506</ref>.

Given the virtually addressed cache which can help us get rid of TLB, problems of coherent still exist to some level. Swap-out and protection information(read or read/write) coherent still need to be enforced. However, since there is no TLB, such information is stored in cache, the coherence problem will be handled by cache-coherence hardware.

Invalidate instructions<ref>lec15 from NCSU ECE506</ref>

Some processors, notably the PowerPC, have a “TLB_invalidate_entry” instruction.

This instruction broadcasts the page address on the bus so that the snooping hardware on other processors can automatically invalidate the corresponding TLB entries without interrupting the processor.

A processor simply issues a TLB_invalidate_entry instruction immediately after changing a page-table entry. This works well on a shared-bus system; if two processors change the same entry at the same time, only one change can be broadcast first on the bus.

TLB shootdown

General Concept

TLB shootdown is another approach to enforce TLB coherence. It is a software approach using inter-processor interrupts. Also, it is a very common technique to enforce TLB coherence. How it works? In general, when a processor changes a TLB entry, it will make the other processors which contain the same TLB entries to invalidate their copies. A quick example as below may explain it more clearly.

Assuming you have some memory shared by all of the processors in your system. One of your processors restricts access to a page of that shared memory now, all of the processors have to flush their TLBs, so that the ones that aren't allowed to access that page can't do so anymore.The actions of one processor causing the TLBs to be flushed on other processors is what is called a TLB shootdown<ref>http://stackoverflow.com/questions/3748384/what-is-tlb-shootdown</ref>.

Detailed Steps

Some steps are needed to implement this approach.<ref>lec15 from NCSU ECE506</ref>.

Step 1. A processor p that wants to modify a page table disables inter-processor interrupts and locks the page table. It also clears its active flag, which indicates whether it is actively using any page table. Step 2. Processor p sends an interrupt to other processors that might be using the page table, describing the TLB actions to be performed. Step 3. Each processor that receives the interrupt clears its active flag. Step 4. Processor p busy-waits till the active flags of all interrupted processors are clear, then modifies the page table. Processor p then releases the page-table lock, sets its active flag, and resumes execution. Step 5. Each interrupted processor busy-waits until none of the page tables it is using are locked. After executing the required TLB actions and setting its active flag, it resumes execution.

Example

An example of TLB shootdown solution is the one described in Mach VM System. In this module, when an action may potentially cause TLB inconsistency, it will invoke the shootdown algorithm. The algorithm proceeds in four phases after it is invoked<ref>Translation Look aside Buffer Consistency: A Software Approach, David L. Black - et al. 1989</ref>.

1 Initiator: The initiator queues consistency action requests for all processors using the pmap and sets their “action needed” flags. It then sends interrupts to the processors and waits for responses. 2 Responders: Each responder receives its interrupt and removes itself from the set of active processors to acknowledge the interrupt. The responders then spin until the initiator completes its changes to pmap. (This spinning is necessary to ensure that responders neither read nor write the pmap while the update is in progress.) 3 Initiator: The initiator performs its pmap changes after all responders using the pmap are spinning. It unlocks the pmap when it is done. 4 Responders: The responders perform their required TLB invalidations after the pmap is unlocked and dequeue the corresponding actions. They also clear their “action needed” flags and rejoin the set of active processors.

The Pseudo-Code of Mach Shootdown Algorithm is as below<ref>Translation Look aside Buffer Consistency: A Software Approach, David L. Black - et al. 1989</ref>.

Initiator:

s = disable_interrupts();

active[mycpul = FALSE;

lockgmap(pmap);

if (inconsistent TLB may result) {

if (pmap->in-uselmycpul) {

invalidate-tlb(pmap,start,end);

}

/* Phase 1 */

if (other cpus using pmap) {

list_type shoot-list = EMPTY-LIST;

for (every cpu in system) {

if (pmap->in-uselcpul && cpu != mycpu) {

lock-action-structure(cpu):

queue_action(cpu,pmap,start,end);

action-neededlcpul = TRUE;

unlock-action-structure(cpu);

if (idle[cpul == FALSE) {

add cpu to shoot-list

}

}

}

for (every cpu on shoot-list) {

send-shootdown-interrupt(cpu);

}

for (every cpu on shoot-list) {

while (activelcpul && pmap->in-uselcpul) {

pmap->in_use[cpu]){

/* spin */ ;

}

}

}

}

/* Phase 3 */

make changes to physical map

unlock-pmap(pmap);

active[mycpu] = TRUE;

restore-interrupt-state(s);

Responders: /* Phase 2 */

s = disable-interrupts();

while (action-needed[mycpu]) {

active[mycpu] = FALSE;

while (pmap is locked(kernel-pmap) && pmapIisIlocked(user-pmap(mycpu)))

/* spin */ ;

/* Phase 4 */

lock-action-structure(mycpu);

process-queuedactions(mycpu);

action-neededlmycpul = FALSE;

unlock-action-structure(mycpu);

active[mycpu] = TRUE;

}

restore-interrupt-state(s);

Performance Analysis

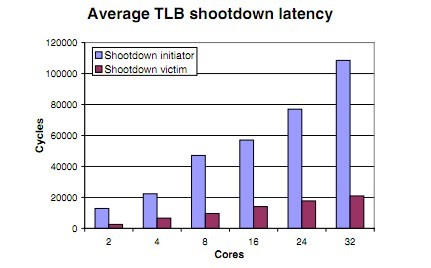

Apparently, as number of processors increases, the overhead of this approach scales linearly. Because number of interprocessors interrupts invoked will be O(n) to the number of processors.

Figure indicates the latency increases linearly as number of cores goes up.

Some Other Approaches<ref>Translation-Lookaside Buffer Consistency Patricia J. Teller - et al.</ref>

1. Modified TLB shootdown Overhead is the same as for TLB shootdown with two exceptions: Interrupted processors are not idled, and their participation is small and possibly constant. Page-table modification and use are not serialized.

2. Lazy devaluation The counter is updated on each TLB reload, invalidation, and replacement. When an unsafe change cannot be postponed, overhead is the same as for TLB shootdown.

3. Validation Memory requests contain a generation count. The modifying processor updates the generation count. An extra network trip is needed when a stale TLB entry is used. Overhead also includes a solution to the generation-count wraparound problem.

Summary

The main goal of multiprocessor is to increase the execution speed. While enforcing the TLB coherent, we should well understand the trade-off of different approaches. Some of the approaches require specific hardware and others(like shootdown) don't. Actually, in real design, it is difficult to conclude which solution will be better, it all depends on what applications running in what kind of environment.

Quiz

1. Which below is(are) solution(s) to TLB coherence problem

A. MESI protocol

B. TLB shootdown

C. using virtually addressed cache

D. all of above

2. which is(are) true below

A. TLB shootdown is a software approach

B. using virually addressed cache will decrease cache misses

C. PowerPC has a unique instruction to handle TLB coherence problem

D. TLB is shared by different processors

3. what is(are) step(s) of TLB shootdown

A. Locks the page table

B. Busy-wait the other processors to inactivate the flags

C. Send interrupt signal

D. Release Locks

4. Which is(are) incorrect about virtually addressed caches

A. It can be used to decrease the frequency to access map table

B. It can only addressed by virtual address

C. V-index should overlap the page number

D. V-index number is stored in the cache

5. Which is the correct order of phases in shootdown algorithm

A. responders initiators responders initiators

B. initiators initiators responders responders

C. initiators responders initiators responders

D. responders responders initiators initiators

References

<references></references>