CSC/ECE 506 Spring 2012/2a bm: Difference between revisions

Revision as of 15:34, 28 January 2012

SAS programming on distributed-memory machines

Shared Address Space (SAS) programming on distributed memory machines is a programming abstraction that requires less development effort than the traditional method of Message Passing (MP) on distributed memory machines, such as clusters of servers. Distributed systems are groups of computers that communicate through a network and share a common work goal. They typically do not physically share the same memory (are not tightly coupled); instead, each processor or group of processors must depend on mechanisms other than direct memory access in order to communicate. Relevant issues include memory coherence, types of memory access, data and process synchronization, and performance.

Background

Early distributed computer systems relied almost exclusively on message passing (MP) in order to communicate with one another, and this technique is still widely used today. In a message passing model, each processor's local memory can be considered isolated from that of the rest of the system. Processes or objects send or receive messages in order to communicate, and this can occur in a synchronous or asynchronous manner. In distributed systems, and particularly with certain types of programs, the message passing model can become overly burdensome to the programmer, because tracking data movement and maintaining data integrity can become quite challenging with many control threads. A shared address or shared-memory system, however, can provide a programming model that simplifies data sharing via uniform mechanisms of data structure reads and writes on common memory. Current distributed systems seek to take advantage of both SAS and MP programming model principles in hybrid systems.
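As a rough illustration of the two styles described above, the sketch below computes the same sum twice: once with explicit sends and receives over a queue, and once by updating a shared variable under a lock. It uses Python threads inside a single process as a stand-in for nodes, which is a simplifying assumption, and the names `mp_sum` and `sas_sum` are hypothetical.

```python
import queue
import threading


def mp_sum(chunks):
    """Message-passing style: workers send partial sums over a channel."""
    inbox = queue.Queue()  # the only link between workers and the collector

    def worker(chunk):
        inbox.put(sum(chunk))  # explicit send of a partial result

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # One explicit receive per worker.
    return sum(inbox.get() for _ in chunks)


def sas_sum(chunks):
    """Shared-address-space style: workers write to one shared location."""
    total = [0]              # shared data, visible to every worker
    lock = threading.Lock()  # synchronization replaces explicit messages

    def worker(chunk):
        partial = sum(chunk)
        with lock:
            total[0] += partial  # ordinary read-modify-write on shared memory

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total[0]
```

In the first version the programmer decides what data moves and when; in the second, data placement is implicit and only the synchronization is explicit.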

Generally, a distributed system consists of a set of nodes connected by a network. A node may be an individual processor or a multiprocessor system (e.g. a Symmetric Multiprocessor (SMP)), the latter typically sharing a system bus. Each node contains a local memory, which maps partially to the distributed address space. Relevant design elements of early SAS implementations included scalability, coherence, structure and granularity. Most early examples did not structure memory; that is, the layout of shared memory was simply a linear array of words. Some, however, structured data as objects or language types. IVY, an early example of a DSM system, implemented shared memory as virtual memory. The granularity, or unit of sharing, for IVY was a 1-Kbyte page, and the memory was unstructured. Choosing a page size involves a trade-off: a process is likely to need quick access to a large range of the shared address space, which argues for a larger page size, but a larger page increases contention for individual pages among processes and may lead to false sharing. Memory coherence is another important design consideration, and its semantics can range from strict to weak consistency. The strictest consistency guarantees that a read returns the most recently written value. Weaker consistencies may use synchronization operations to guarantee sequential consistency.
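The page-size trade-off can be made concrete with a small sketch. Assuming a flat byte-addressed shared space and a log of writes, the hypothetical helper below reports pages that several processes write to at disjoint addresses, which is the false-sharing pattern mentioned above. The 1024-byte default mirrors IVY's 1-Kbyte pages; everything else is illustrative.

```python
from collections import defaultdict


def falsely_shared_pages(writes, page_size=1024):
    """Return page numbers written by several processes at disjoint addresses.

    writes: iterable of (process_id, byte_address) pairs.
    """
    # page number -> process id -> set of addresses written
    writers = defaultdict(lambda: defaultdict(set))
    for pid, addr in writes:
        writers[addr // page_size][pid].add(addr)

    result = []
    for page, by_proc in sorted(writers.items()):
        if len(by_proc) < 2:
            continue  # only one writer: no sharing at all
        all_addrs = [a for addrs in by_proc.values() for a in addrs]
        # If no address is written by two processes, the sharing is false:
        # the processes only collide because of the page granularity.
        if len(all_addrs) == len(set(all_addrs)):
            result.append(page)
    return result
```

For the same write log, a smaller `page_size` tends to shrink the returned list, at the cost of more pages to manage and transfer.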

Page Management and memory mapping in Mome

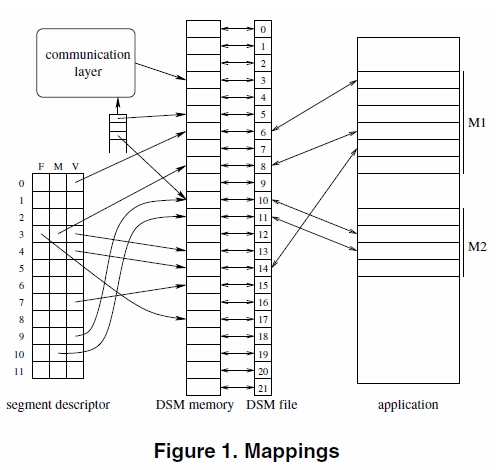

Mome is described by its developers as a user-level distributed shared memory. As of 2003, Mome was a run-time model that mapped Mome segments onto node-private address space. Segment creation was initiated through a MomeCreateSegment(size) call, which returned an identifier for mapping used by all nodes. Any process can request a mapping of a section of its local memory to a Mome segment section by calling MomeMap(Addr, Lg, Prot, Flags, Seg, Offset), which returns the starting address of the mapped region. Each mapping request made by a process is independent, and the addresses of the mappings may or may not be consistent on all nodes. If mappings are consistent between processes, however, then pointers may be shared by them. Mome supports strong and weak consistency models, and for any particular page each node is able to dynamically manage its consistency during program execution.
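The remark about pointer sharing can be illustrated with a toy model. The sketch below does not call Mome; the `Mapping` record and the helper functions are hypothetical and only mimic the idea that each process maps a segment section at its own local address. Two mappings allow raw pointers to be shared only when the same segment offset lands at the same local address in both.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mapping:
    """Hypothetical record of one process's mapping of a segment section."""
    base: int    # local starting address of the mapped region
    length: int  # size of the mapped region in bytes
    seg: int     # segment identifier
    offset: int  # offset of the mapped section within the segment


def to_segment_offset(m, addr):
    """Convert a local address under mapping m to an offset in the segment."""
    if not (m.base <= addr < m.base + m.length):
        raise ValueError("address outside mapping")
    return m.offset + (addr - m.base)


def translate(addr, src, dst):
    """Translate a pointer valid under src into the equivalent one under dst."""
    if src.seg != dst.seg:
        raise ValueError("mappings refer to different segments")
    seg_off = to_segment_offset(src, addr)
    if not (dst.offset <= seg_off < dst.offset + dst.length):
        raise ValueError("target mapping does not cover this offset")
    return dst.base + (seg_off - dst.offset)


def pointers_shareable(a, b):
    """True if a raw pointer means the same segment location under both."""
    return a.seg == b.seg and a.base - a.offset == b.base - b.offset
```

When `pointers_shareable` is false, a pointer has to be translated (or exchanged as a segment offset) before another process can use it.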

Mome manages pages in a directory-based scheme where each page directory maintains the status of six characteristics per page on each node. The page manager acts upon collections of nodes according to these characteristics for each page: V nodes possess the current version, M nodes have a modified version, S nodes want strong consistency, I nodes are invalidated, F nodes have initiated a modification merge, and H nodes are a special type of hidden page. A new version of a page is created prior to a constraint violation and before modifications are integrated as a result of a consistency request.

The state of each segment page is

Cache-Coherent DSM

Implementations

Software DSM Implementations

Hardware DSM Implementations

Hybrid DSM Implementations

Performance

There are numerous studies of the performance of shared memory applications in distributed systems. The vast majority of them use a collection of programs named SPLASH and SPLASH-2.

SPLASH and SPLASH-2

The Stanford ParalleL Applications for SHared memory (SPLASH) is a collection of parallel programs engineered for the evaluation of shared address space machines. These programs have been used by research studies to provide measurements and analysis of different aspects of the DSM architectures that were emerging at the time. A subsequent suite of programs (SPLASH-2) evolved from the need to address the limitations of the SPLASH programs. SPLASH-2 covers a broader domain of scientific programs, makes use of improved algorithms, and pays more attention to the architecture of the underlying systems.

Selected applications in the SPLASH-2 collections include:

- FFT: a Fast Fourier Transform implementation, in which the data is organized in source and destination matrices so that each processor stores a contiguous set of rows in its local memory. In this application all processors communicate with one another, sending data to each other, to perform a matrix transposition.

- Ocean: calculations of large scale ocean movements simulating eddy currents. For the purpose of calculations, it shows "nearest neighbors patterns" in grid formation as opposed to using a single grid.

- LU: decomposition of a matrix into the product of a lower triangular and an upper triangular matrix. LU exhibits a "one-to-many non-personalized communication".

- Barnes: simulates the interaction of a group of particles over time steps.

- Radix sort: an integer sorting algorithm. This implementation involves communication among all the processors, and the communication follows irregular patterns.
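The all-to-all pattern of the FFT transposition in the list above can be sketched by counting how many matrix elements each processor must hand to each other processor. This is a minimal model, assuming an n x n matrix split into equal contiguous row blocks; the function name `transpose_traffic` is hypothetical and the sketch is not taken from the SPLASH-2 code.

```python
def transpose_traffic(n, procs):
    """Count elements moved between row-block owners during a transpose.

    Returns a procs x procs table where entry [src][dst] is the number of
    elements that processor src holds and processor dst needs.
    """
    if n % procs != 0:
        raise ValueError("n must be divisible by procs")
    rows_per_proc = n // procs
    traffic = [[0] * procs for _ in range(procs)]
    for i in range(n):
        for j in range(n):
            src = i // rows_per_proc  # owner of source row i
            dst = j // rows_per_proc  # owner of destination row j
            traffic[src][dst] += 1    # element (i, j) lands at (j, i)
    return traffic
```

Every off-diagonal entry is non-zero, so each processor exchanges data with every other one; the diagonal entries correspond to data that stays local.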

Case studies

In 2001, Shan et al. presented a comparison of the performance and programming effort of MP versus SAS running on clusters of Symmetric Multiprocessors (SMPs). They highlighted the "automatic management and coherent replication" of the SAS programming model, which facilitates programming tasks in these types of clusters. The study uses the MPI/Pro protocol for the MP programming model and the GeNIMA SVM protocol (a page-based shared virtual memory protocol) for SAS on a 32-processor system (a cluster of 8 machines, each a 4-way SMP). The applications used include regularly structured ones such as FFT, Ocean, and LU, contrasted with irregular ones such as RADIX sort, among others.

The complexity of development is represented by the number of lines of code per application, as shown in the table below. SAS complexity is consistently lower than that of MP, and the difference increases as applications become more irregular and dynamic in nature (MP needs almost twice as many lines for Radix).

| Application (lines of code) | FFT | OCEAN | LU | RADIX | SAMPLE | N-BODY |
|---|---|---|---|---|---|---|
| MPI | 222 | 4320 | 470 | 384 | 479 | 1371 |
| SAS | 210 | 2878 | 309 | 201 | 450 | 950 |
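The relative programming effort can be read off the table by dividing the MPI line count by the SAS line count for each application. The short sketch below does that arithmetic; the variable names are illustrative and the numbers are copied from the table.

```python
# Lines of code per application as (MPI, SAS), copied from the table above.
LINES_OF_CODE = {
    "FFT": (222, 210),
    "OCEAN": (4320, 2878),
    "LU": (470, 309),
    "RADIX": (384, 201),
    "SAMPLE": (479, 450),
    "N-BODY": (1371, 950),
}

# Ratio above 1.0 means the MPI version needs more code than the SAS version.
ratios = {app: mpi / sas for app, (mpi, sas) in LINES_OF_CODE.items()}

for app, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"{app:7s} MPI/SAS = {ratio:.2f}")
```

The largest ratio belongs to RADIX, at roughly 1.9, while FFT and SAMPLE stay close to 1.0.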

In terms of performance, the results indicated that SAS was only about half as efficient as MP at exploiting parallelism for most of the applications in this study. The only application that showed similar performance under both models was LU. The authors concluded that the overhead of the SVM protocol for maintaining page coherence and synchronization was the handicap of the easier SAS programming model.

Evolution

References

- Shan, H.; Singh, J.P.; Oliker, L.; Biswas, R., "Message passing vs. shared address space on a cluster of SMPs," Proceedings 15th International Parallel and Distributed Processing Symposium, 8 pp., Apr 2001

- Protic, J.; Tomasevic, M.; Milutinovic, V., "Distributed shared memory: concepts and systems," IEEE Parallel & Distributed Technology: Systems & Applications, vol. 4, no. 2, pp. 63-71, Summer 1996

- Chandola, V., "Design Issues in Implementation of Distributed Shared Memory in User Space"

- Nitzberg, B.; Lo, V., "Distributed Shared Memory: A Survey of Issues and Algorithms"

- Woo, S.C.; Ohara, M.; Torrie, E.; Singh, J.P.; Gupta, A., "The SPLASH-2 programs: characterization and methodological considerations," Proceedings of the 22nd Annual International Symposium on Computer Architecture, pp. 24-36, June 22-24, 1995, S. Margherita Ligure, Italy