2. Blade Servers


Revision as of 02:35, 11 September 2007

Introduction

Blade servers are a revolutionary new concept for enterprise applications that currently use a “stack of PC servers” approach. Blade servers promise to greatly increase compute density, reduce cost, improve reliability, and simplify cabling. Companies such as Dell, Hewlett-Packard, IBM, RLX, and Sun offer blade server solutions that reduce operating expense while increasing service density. Blade servers form the basis for a modular computing paradigm.

Evolution

For many years, traditional standalone servers grew larger and faster, taking on more and more tasks as networked computing expanded. New servers were added to data centers as the need arose, often as a quick fix with little coordination or planning; it was not unusual for data center operators to discover that servers had been added without their knowledge. The resulting complexity of boxes and cabling became a growing invitation to confusion, mistakes, and inflexibility.

Figure: Conventional Servers

Blade servers, which first appeared in 2001, are a simple and pure example of modular architecture: the blades in a blade server chassis are physically identical, with identical processors, ready to be configured and used for any purpose the user desires. Their introduction brought many benefits of modularity to the server landscape: scalability, ease of duplication, specialization of function, and adaptability.

Blade servers were developed in response to a critical and growing need in the data center: the requirement to increase server performance and availability without dramatically increasing the size, cost and management complexity of an ever-growing data center. Because of user demand and the space and power requirements of traditional tower and rack-mount servers, data centers are being forced to expand their physical plant at an alarming rate.

But while these classic modular advantages have given blade servers a growing presence in data centers, their full potential awaits the widespread implementation of one remaining critical capability of modular design: fault tolerance. Fault-tolerant blade servers (those with built-in “failover” logic that transfers operation from failed blades to healthy ones) have only recently started to become available and affordable. The reliability of such fault-tolerant servers is expected to surpass that of current techniques involving redundant software and clusters of single servers, putting blade servers in a position to become the dominant server architecture in data centers. With the emergence of automated fault tolerance, industry observers predict rapid migration to blade servers over the coming years.

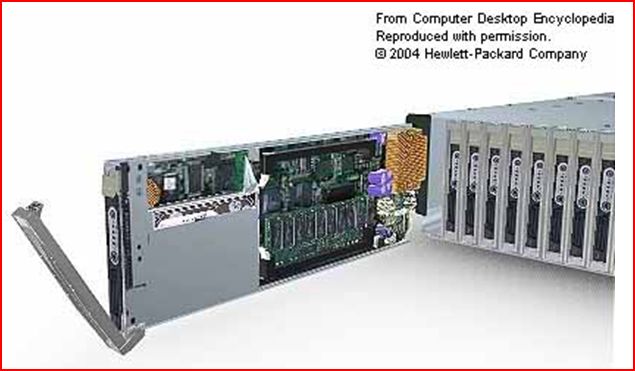

General blade server architecture

A general blade server architecture is shown in the figure. The hardware components of a blade server are the switch blade, the chassis (with fans, temperature sensors, etc.), and multiple compute blades. Some vendors offer application-specific blades, or partner (or plan to partner) with companies that provide them; these blades perform traffic conditioning, protection, or network processing before traffic reaches the compute blades. Functionally, such application-specific blades are often positioned between the switch blade and the compute blades, even though they physically reside in a standard compute blade slot.

The outside world connects through the rear of the chassis to a switch card in the blade server. The switch card is provisioned to distribute packets to blades within the blade server. All these components are wrapped together with network management system software provided by the blade server vendor. The specifics of the blade server architecture vary from vendor to vendor. But before you discount this as a bunch of proprietary architectures, think again. Remember that IBM and others dramatically advanced and proliferated the PC architecture, changing the face of computing forever.
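The switch card's job of distributing packets to blades can be illustrated with a flow-hashing sketch. This is a hypothetical model, not any vendor's algorithm: it assumes each packet is identified by a (source IP, source port, destination IP, destination port) tuple, and that the switch hashes that tuple so every packet of one flow reaches the same compute blade. The function name `pick_blade` and the 8-slot chassis are illustrative assumptions.

```python
import hashlib
from typing import List, Tuple

# Hypothetical flow key: (src_ip, src_port, dst_ip, dst_port).
FlowKey = Tuple[str, int, str, int]


def pick_blade(flow: FlowKey, blades: List[str]) -> str:
    """Map a flow to one compute blade by hashing its 4-tuple.

    A stable hash (SHA-256 rather than Python's per-process hash())
    keeps every packet of the same flow on the same blade.
    """
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(blades)
    return blades[index]


if __name__ == "__main__":
    blades = [f"blade{slot}" for slot in range(1, 9)]  # hypothetical 8-slot chassis
    flow = ("10.0.0.5", 51512, "192.0.2.10", 80)
    print(pick_blade(flow, blades))
```

Hashing per flow rather than per packet is one common design choice because it avoids reordering packets within a connection; real switch cards may instead use round-robin, load-aware, or application-level policies.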

The blade server industry appears to be headed in the same direction. There are some areas where standardization of blade server components will prove helpful. In the near term, however, blade server vendors' ability to quickly adapt and advance their architectures to suit specific applications, unencumbered by the standards process, is likely to accelerate proliferation.

Blade Enclosure

The enclosure (or chassis) performs many of the non-core computing services found in most computers. Non-blade computers require components that are bulky, hot and space-inefficient, and that are duplicated across many computers that may or may not be running at capacity. Locating these services in one place and sharing them among the blade computers makes overall utilization more efficient. Which services are provided, and how, varies by vendor.

Power

Computers operate over a range of DC voltages, yet utilities deliver power as AC, and at higher voltages than the computer requires internally. Power supply units (PSUs) perform this conversion. To ensure that the failure of one power source does not affect the operation of the computer, even entry-level servers have redundant power supplies, which add to the bulk and heat output of the design.

The blade enclosure's power supply provides a single power source for all blades within the enclosure. This single power source may take the form of a power supply in the enclosure or a dedicated, separate PSU supplying DC to multiple enclosures [1]. This setup not only reduces the number of PSUs required to provide a resilient power supply, but also improves efficiency by reducing the number of idle PSUs. If a PSU fails, the blade chassis throttles down individual blade server performance until total consumption matches the available power. This is done in steps of 12.5% per CPU until power balance is achieved.
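The step-wise throttling described above can be sketched as a loop that lowers CPUs one 12.5% step at a time until the enclosure's total draw fits the remaining power budget. This is a minimal model under stated assumptions, not any vendor's firmware: it assumes power draw scales linearly with the performance level, that 12.5% is the lowest allowed setting, and that the least-throttled CPU is stepped down first. The names `Cpu`, `throttle_to_budget`, and the wattages are hypothetical.

```python
from dataclasses import dataclass
from typing import List

STEPS = 8  # 100% / 12.5% per step = 8 performance steps per CPU


@dataclass
class Cpu:
    name: str
    full_power_w: float  # hypothetical draw at 100% performance
    level: int = STEPS   # 8 = 100%, 1 = 12.5% (assumed lowest setting)

    @property
    def power_w(self) -> float:
        # Assumption: power draw scales linearly with performance level.
        return self.full_power_w * self.level / STEPS


def total_power(cpus: List[Cpu]) -> float:
    return sum(cpu.power_w for cpu in cpus)


def throttle_to_budget(cpus: List[Cpu], budget_w: float) -> bool:
    """Step CPUs down 12.5% at a time until total draw fits budget_w.

    Each pass lowers the least-throttled CPU by one step, spreading the
    reduction evenly. Returns False if the budget cannot be met even
    with every CPU at its lowest step.
    """
    while total_power(cpus) > budget_w:
        candidates = [cpu for cpu in cpus if cpu.level > 1]
        if not candidates:
            return False
        max(candidates, key=lambda cpu: cpu.level).level -= 1
    return True
```

For example, with four hypothetical 100 W CPUs (400 W total) and a 300 W budget after a PSU failure, each CPU ends at step 6 of 8 (75% performance), for exactly 300 W.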

Cooling

During operation, electrical and mechanical components produce heat, which must be dissipated quickly to ensure the proper and safe functioning of the components. In blade enclosures, as in most computing systems, heat is removed with fans.

A frequently underestimated problem when designing high-performance computer systems is the conflict between the amount of heat a system generates and the ability of its fans to remove that heat. Because power supplies and fans are shared at the enclosure level rather than built into each blade, an individual blade does not generate as much heat as a traditional server. Recent blade enclosure designs include high-speed, adjustable fans and control logic that tune the cooling to the system's requirements.[2]
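Control logic that tunes cooling to the system's requirements can be as simple as a fan curve driven by the hottest temperature sensor. The sketch below is a hypothetical illustration: the thresholds (30 °C and 70 °C), the 30% idle duty cycle, and the function name `fan_duty` are assumptions, not values from any particular enclosure.

```python
from typing import Iterable


def fan_duty(temps_c: Iterable[float],
             t_low: float = 30.0, t_high: float = 70.0,
             min_duty: float = 0.3, max_duty: float = 1.0) -> float:
    """Return a fan duty cycle (0..1) from the hottest sensor reading.

    Below t_low the fans idle at min_duty; at or above t_high they run
    at max_duty; in between, speed rises linearly with temperature.
    """
    hottest = max(temps_c)
    if hottest <= t_low:
        return min_duty
    if hottest >= t_high:
        return max_duty
    fraction = (hottest - t_low) / (t_high - t_low)
    return min_duty + fraction * (max_duty - min_duty)
```

Keying on the hottest sensor is a conservative choice; an enclosure might instead run separate curves per fan zone so that a single hot blade does not spin up every fan.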

At the same time, the increased density of blade server configurations can still result in higher overall demand for cooling when a rack is more than 50% populated. This is especially true of early-generation blades. In absolute terms, a fully populated rack of blade servers is likely to require more cooling capacity than a fully populated rack of standard 1U servers.
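The reasoning behind the last point is simple arithmetic: almost all electrical power drawn by IT equipment ends up as heat, so a rack's cooling load grows with server count even if each server draws less. The figures below (42 rack servers at 400 W, versus four hypothetical 16-blade chassis at 300 W per blade) are invented for illustration only; the conversion factor of about 3.412 BTU/h per watt is standard.

```python
WATTS_TO_BTU_PER_HR = 3.412  # 1 W is approximately 3.412 BTU/h


def rack_heat_load_w(servers: int, watts_per_server: float) -> float:
    # Essentially all electrical power drawn by IT equipment becomes heat.
    return servers * watts_per_server


# Hypothetical figures for illustration only.
one_u = rack_heat_load_w(servers=42, watts_per_server=400.0)
blades = rack_heat_load_w(servers=4 * 16, watts_per_server=300.0)

if __name__ == "__main__":
    for label, watts in (("1U rack", one_u), ("blade rack", blades)):
        print(f"{label}: {watts / 1000:.1f} kW, "
              f"{watts * WATTS_TO_BTU_PER_HR:,.0f} BTU/h")
```

With these assumed numbers the blade rack dissipates 19.2 kW against 16.8 kW for the 1U rack, even though each blade draws 25% less than each 1U server.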

Networking

Computers are increasingly being produced with high-speed, integrated network interfaces, and most are expandable to allow the addition of connections that are faster, more resilient, and run over different media (copper and fiber). These may require extra engineering effort in the design and manufacture of the blade, consume space both for installed interfaces and for reserved installation capacity (empty expansion slots), and hence add complexity. High-speed network topologies require expensive, high-speed integrated circuits and media, while most computers do not use all the bandwidth available.

The enclosure provides network connections to which each blade connects, and either presents these ports individually in a single location (rather than one set per computer chassis) or aggregates them into fewer ports, reducing the cost of connecting the individual devices. These ports may be presented in the chassis itself or in networking blades.[3]
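Aggregation trades external cables for shared uplink bandwidth, which works because, as noted above, most computers do not use all the bandwidth available to them. The sketch below computes the cable count with and without aggregation and the resulting oversubscription ratio. The class name `ChassisNetwork` and the example configuration (16 blades with two 1 Gb/s ports each, two 10 Gb/s uplinks) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChassisNetwork:
    blades: int
    ports_per_blade: int
    port_gbps: float
    uplinks: int
    uplink_gbps: float

    def cables_without_aggregation(self) -> int:
        # One external cable per blade port, as with standalone servers.
        return self.blades * self.ports_per_blade

    def cables_with_aggregation(self) -> int:
        # Only the chassis uplinks leave the enclosure.
        return self.uplinks

    def oversubscription(self) -> float:
        # > 1.0 means the blades could, in aggregate, exceed uplink capacity.
        blade_side = self.blades * self.ports_per_blade * self.port_gbps
        return blade_side / (self.uplinks * self.uplink_gbps)


if __name__ == "__main__":
    net = ChassisNetwork(blades=16, ports_per_blade=2, port_gbps=1.0,
                         uplinks=2, uplink_gbps=10.0)
    print(net.cables_without_aggregation(), net.cables_with_aggregation(),
          net.oversubscription())
```

In this hypothetical configuration, 32 blade-facing cables collapse to 2 uplinks at an oversubscription of 1.6:1, which is acceptable only if the blades rarely saturate their ports at the same time.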

Storage

While computers typically need hard disks to store the operating system, applications and data, these disks need not be local. Many storage connection methods (e.g., FireWire, SATA, SCSI, DAS, Fibre Channel and iSCSI) are readily moved outside the server, though not all are used in enterprise-level installations. Implementing these connection interfaces within the computer presents challenges similar to those of the networking interfaces (indeed, iSCSI runs over the network interface), and similarly these interfaces can be removed from the blade and presented individually or aggregated, either on the chassis or through other blades.

The ability to boot the blade from a storage area network (SAN) allows for an entirely disk-free blade. Such a blade may offer higher processor density or better reliability than systems with individual disks on each blade.

Advantages of Blade Servers

Reduced Space Requirements - Greater density provides a 35 to 45 percent improvement compared to tower or rack-mounted servers.

Reduced Power Consumption and Improved Power Management - consolidating power supplies into the blade chassis reduces the number of separate power supplies needed and reduces the power requirements per server.

Lower Management Cost - server consolidation and resource centralization simplify server deployment, management and administration, and improve management and control.

Simplified Cabling - rack-mount servers, while helping consolidate servers into a centralized location, create wiring proliferation. Blade servers simplify cabling requirements and reduce wiring by up to 70 percent. Power cabling, operator wiring (keyboard, mouse, etc.) and communications cabling (Ethernet, SAN connections, cluster connections) are greatly reduced.

Future Proofing Through Modularity - as new processor, communications, storage and interconnect technology becomes available, it can be implemented in blades that install into existing equipment, upgrading server operation at minimum cost and with no disruption of basic server functionality.

Easier Physical Deployment - once a blade server chassis has been installed, adding servers is merely a matter of sliding additional blades into the chassis. Software management tools simplify the management and reporting functions for blade servers. Redundant power modules and consolidated communication bays simplify integration into data centers and increase reliability.

Are blade servers an extension of message passing?

Blade servers deployed as compute clusters use message passing to achieve fast and efficient parallel performance. Parallel computing frequently relies on message passing to exchange information between computational units. In high-performance computing, the most common message passing technology is the Message Passing Interface (MPI), which has an open-source implementation being developed with the support of Cisco Systems® and other vendors.

High-performance computing (HPC) cluster applications require a high-performance interconnect between blade servers to achieve fast and efficient performance for computation-intensive workloads. When messages are passed between nodes, some time is spent transmitting them, and depending on how frequently processes synchronize data, that time can have a significant effect on total application run time. It is critically important to understand how the application behaves with respect to interprocess communication patterns and update frequency, because these affect the performance and design of the parallel application, the design of the interconnecting network, and the choice of network technology.

With traditional transport protocols such as TCP/IP, the CPU is responsible for moving data between memory and the I/O subsystem and for transport protocol processing. As a result, time spent communicating between nodes is time not spent processing the application. Minimizing communication time is therefore a key consideration for certain classes of applications.
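The effect of communication on run time described in the two paragraphs above can be captured in a simple cost model: each message costs a fixed latency plus its transfer time, and when the CPU drives the transport, that time adds directly to computation. This is a simplified sketch that assumes no overlap of communication and computation; the function name `run_time_s` and all figures in the example are hypothetical, not measurements of any real interconnect.

```python
def run_time_s(compute_s: float, messages: int, bytes_per_msg: int,
               latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Total run time when communication does not overlap computation.

    Each message costs a fixed latency plus size / bandwidth; with a
    CPU-driven transport such as TCP/IP, that time is also time the CPU
    is not spending on the application itself.
    """
    per_message = latency_s + bytes_per_msg / bandwidth_bytes_per_s
    return compute_s + messages * per_message


if __name__ == "__main__":
    # Hypothetical workload: 10 s of computation, 100,000 messages of 1,000 bytes.
    slow = run_time_s(10.0, 100_000, 1_000, latency_s=50e-6,
                      bandwidth_bytes_per_s=125e6)   # assumed interconnect A
    fast = run_time_s(10.0, 100_000, 1_000, latency_s=5e-6,
                      bandwidth_bytes_per_s=1.25e9)  # assumed interconnect B
    print(f"A: {slow:.2f} s, B: {fast:.2f} s")
```

With these assumed figures, interconnect A adds about 5.8 s of communication to 10 s of computation, while interconnect B adds about 0.58 s. Because latency dominates for small messages, applications that synchronize frequently benefit most from a low-latency interconnect.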

MPI is “middleware” software that sits between the application and the network hardware. It provides a portable mechanism for exchanging messages between processes regardless of the underlying network or parallel computing environment. As such, implementations of the MPI standard use underlying communication stacks such as TCP or UDP over IP, InfiniBand, or Myrinet to communicate between processes. MPI offers a rich set of functions that can be combined in simple or complex ways to express a wide range of parallel computations. The ability to exchange messages allows instructions or data to be passed between nodes, for example to distribute data sets for calculation. MPI has been implemented on a wide variety of platforms, operating systems, and cluster and supercomputer architectures.
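The scatter-compute-gather pattern that message passing enables can be sketched without an MPI installation. The example below is not MPI: it uses Python threads as stand-ins for nodes and `queue.Queue` objects as stand-ins for the interconnect, purely to show the message flow. A root distributes slices of a data set, each worker computes a partial result and sends it back, and the root combines the results. The names `worker` and `parallel_sum_of_squares` are hypothetical; in real MPI code the same roles are played by collective operations such as scatter, gather, and reduce.

```python
import queue
import threading
from typing import List


def worker(rank: int, inbox: queue.Queue, results: queue.Queue) -> None:
    # "Receive" one chunk of the data set, compute locally, "send" the result.
    chunk = inbox.get()
    results.put((rank, sum(x * x for x in chunk)))


def parallel_sum_of_squares(data: List[int], n_workers: int) -> int:
    inboxes = [queue.Queue() for _ in range(n_workers)]
    results: queue.Queue = queue.Queue()
    threads = [threading.Thread(target=worker, args=(r, inboxes[r], results))
               for r in range(n_workers)]
    for t in threads:
        t.start()
    # "Scatter": send each worker a strided slice of the data set.
    for r in range(n_workers):
        inboxes[r].put(data[r::n_workers])
    # "Gather": receive one partial result per worker and combine them.
    total = sum(results.get()[1] for _ in range(n_workers))
    for t in threads:
        t.join()
    return total
```

For instance, `parallel_sum_of_squares(list(range(10)), 3)` splits the ten values across three workers and returns 285, the same as computing the sum serially.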

See Also

[1] The best of both worlds