CSC/ECE 517 Fall 2009/wiki1b 11 cc: Difference between revisions

No edit summary |

No edit summary |

||

| Line 264: | Line 264: | ||

The focus will be on the "Large" set of applications since they are closer and realistic to the common applications, the following segment gives the definitions of TF and TI, their analysis and results of performance are based on them, TF and TI are Techniques to optimize dynamic and static code. | The focus will be on the "Large" set of applications since they are closer and realistic to the common applications, the following segment gives the definitions of TF and TI, their analysis and results of performance are based on them, TF and TI are Techniques to optimize dynamic and static code. | ||

==Execution Time: == | ===Execution Time: === | ||

Speed is the ultimate goal of an optimizing compiler. Figure 1 shows the relative execution time of the benchmarks compiled with TF and TI systems | Speed is the ultimate goal of an optimizing compiler. Figure 1 shows the relative execution time of the benchmarks compiled with TF and TI systems | ||

| Line 272: | Line 272: | ||

---- | ---- | ||

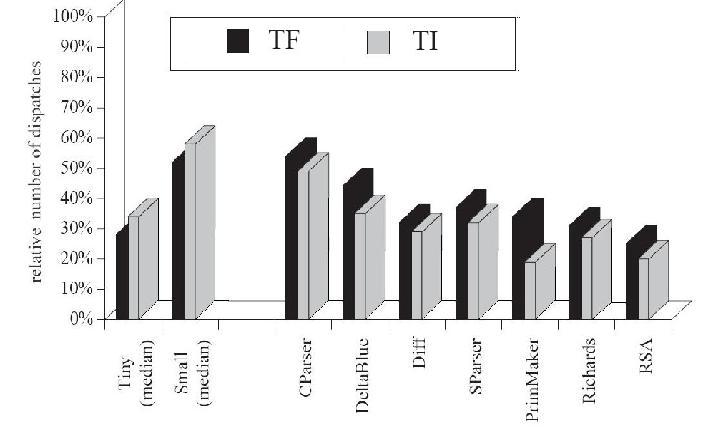

==Number of dispatches:== | ===Number of dispatches:=== | ||

A dispatch is the selection of the correct piece of code for a particular receiver-message pair, a dispatch is independent of whether it requires a call or not. By measuring the number of dispatches that a program performs, it is possible to determine the performance in creating monomorphic call. | A dispatch is the selection of the correct piece of code for a particular receiver-message pair, a dispatch is independent of whether it requires a call or not. By measuring the number of dispatches that a program performs, it is possible to determine the performance in creating monomorphic call. | ||

Revision as of 20:33, 20 September 2009

Static vs. dynamic o-o languages from the perspective of performance

Object-oriented languages can be analyzed into either dynamical or statical language by their type handling mechanism. The former delays the type decision until run time ( type feedback ), in turn the latter determines and strongly restricts type usage in compile time (concrete type inference ).

One parameter to measure the performance of the code are calls, they not only slow down the program through the calling overhead but also the opportunities to optimize the code are destroyed because of dynamically-dispatched calls. Object oriented programs tendency is to contain more calls at the source level rather than at procedural level since the programming style in object oriented encourages factoring code into small pieces to obtain fine-grained reuse, this pactice makes more difficult the optimization and thus the performance.

It is important to mention that traditional compilers are unable to remove dynamical calls, therefore the object oriented programs usually exhibit a higher calling frequency than procedural programs. The frequent calls, combined with the scarce opportunities for traditional code optimizations, can lead to poor run-time performance.

To eliminate dynamical calls by statically binding or in-lining them, however it is not that easy, even for the statically-typed lenguages

Consider the following C++ code fragment:

GraphicalObject* obj;

...

obj->moveTo(0, 0);

Although the type declaration, a compiler cannot statically bind the “moveTo” call because it does not know the object’s exact class and thus it cannot determine whether “Point::moveTo” or “Rectangle::moveTo” will be invoked.

In a statically typed language the compiler must know either by inferencing or through explicit declarations, the type of every object everywhere you use it. This places restrictions on what you can do, but because of these restrictions the compiler is able to provide you with extra information, is the program type safe and make performance optimisations.

So it is a trade-off, there are things you just can't do in a statically typed language that you can do in a dynamically typed language

Type Inference

Static Object-Oriented Language ( type inference TI)

Statically-typed languages require that all variables are declared with a specific type. The compiler will then ensure that at any given time the variable contains only an object compatible with that type. By enforcing the type constraint on objects contained or referred to by the variable, the compiler can ensure calling method error can never occur at run-time. It enforces safer, more reliable code, and increases efficiency of the resulting product as well. On the other hand, a static type system can hinder evolution of software in some circumstances. Upper classes restrict the inherited ones evolving into various form from its strict type-binding. Eiffel , Java , C# , C++ , Visual Basic

Type Feedback

Dynamic Object-Oriented Language ( type feedback TF)

A dynamic type system doesn't require variables to be declared as a specific type. Any variable can contain any value or object. In many cases this can make the software more flexible and amenable to change. However, care must be taken that variables hold the expected kind of object. Typically, if a variable contains an object of a different type than a user of the object expects, some sort of calling method error is raised at run-time. On the other hand , if the type of the object is not what it was originally expected to be, it may not understand the messages being sent to it. Even worse that it may be interpreted in a wrong way. Smalltalk , Ruby , Python , Perl

Principal Comparison Between Statyc and Dynamic

Table XX shows the principal differences between Dynamic and static for the performance point of view, below that are the explanations of each Feature

| Feature | Dynamic | Static |

|---|---|---|

| Generic Classes | Yes | No |

| Inheritance | Yes | No |

| Method Overloading | Yes | No |

| Higher Order Functions & Lexical Closures | Yes | No |

| Uniform Access | Yes | No |

| Class Variables/Methods | Yes | No |

| Reflection | Yes | No |

Table. Performance comparison of Static and Dynamic.

Generic Classes

Generic classes, and more generally parametric type facilities, refer to the ability to parameterize a class with specific data types. The primary benefit of parameterized types is that it allows statically typed languages to retain their compile-time type safety yet remain nearly as flexible as dynamically typed languages. Java's lack of generic classes is a severe hole in the Java type system, on the other hand Dynamically typed languages do not need parameterized types in order to support generic programming

Inheritance

Inheritance is the ability for a class or object to be defined as an extension or specialization of another class or object. Most object-oriented languages support class-based inheritance, while others such as SELF and JavaScript support object-based inheritance. A few languages, notably Python and Ruby, support both class- and object-based inheritance, in which a class can inherit from another class and individual objects can be extended at run time with the capabilities of other objects.

Method Overloading

Method overloading (also referred to as parametric polymorphism) is the ability for a class, module, or other scope to have two or more methods with the same name. Calls to these methods are disambiguated by the number and/or type of arguments passed to the method at the call site. For example, a class may have multiple print methods, one for each type of thing to be printed. The alternative to overloading in this scenario is to have a different name for each print method, such as print_string and print_integer.

Higher Order Functions & Lexical Closures

Higher order functions are, in the simplest sense, functions that can be treated as if they were data objects. In other words, they can be bound to variables including the ability to be stored in collections, they can be passed to other functions as parameters, and they can be returned as the result of other functions.

Lexical closures or static closures take this one step further by bundling up the lexical (static) scope surrounding the function with the function itself, so that the function carries its surrounding environment around with it wherever it may be used.

Among the languages we're considering, Smalltalk and Ruby have supported both higher order functions and lexical closures

Java's anonymous classes allow a function to be bundled with an object that can be treated much as a higher order function can. C++ similarly provides partial support for higher order functions using function objects (or "functors"), and add the further benefit that the function call operator may be overloaded so that functors may be treated generically,

Uniform Access

The Uniform Access Principle, as published in Bertrand Meyer's Object-Oriented Software Construction, states that "All services offered by a module should be available through a uniform notation, which does not betray whether they are implemented through storage or through computation." For example, in Java you would use foo.bar if it were an attribute, but you would use foo.bar() if it were a function. Having this notational difference means that users of Foo are exposed to unnecessary implementation details and are tightly coupled to Foo. If bar is changed from attribute to method (or vice versa), then any users of Foo must also be changed.

Ruby directly support the Uniform Access Principle

Class Variables/Methods

Smalltalk and Ruby support the most advanced notion of class variables and methods, due to their use of meta-classes and the fact that even classes are objects in these languages. Java and C++ provide "static" members which are effectively the same thing, yet more limited since they cannot be inherited.

Reflection

Reflection is the ability for a program to determine various pieces of information about an object at run-time. This includes the ability to determine the type of the object, its inheritance structure, and the methods it contains, including the number and types of parameters and return types.

Benchmarking Between Statyc and Dynamic

This page contains the same program, implemented in the same way, in C, Ada, FORTRAN, Lisp, FORTH, Java, Perl, R and Ruby and run on a 300MHz Pentium.

For such applications, performance translates directly into cost: the number of machines you need to buy and maintain in order to serve a given number of users or to achieve a required response time is directly proportional to computation time.

The program we benchmarked computes the same 100-term polynomial 500,000 times, using exactly the same algorithm. In all programs the indices of the polynomial are kept in a local float vector. In this, the program only tests the quality of code which accesses local vectors and performs simple arithmetics in loops, and is free from differences in the standard library, operating system calls and, indeed, the presence of any advanced language features.

Performance is governed by how quickly operations, loop, access to array elements, function calls, etc

The benchmarking is on C and Java versus Python and Ruby

C

#include <stdio.h>

#include <stdlib.h>

main(short argc, char **argv) {

float mu = 10.0;

float x,s;

float pu = 0.0;

int su, i, j, n;

float pol[100];

register tm1;

register int tp1;

n = atol(argv[1]);

x = atof(argv[2]);

for(i=0; i<n; i++) {

tp1=2;

tm1=1/2.0;

for (j=0; j<100; j++) {

pol[j] = mu = (mu + tp1) * tm1;

}

s = 0.0;

for (j=0; j<100; j++) {

s = x*s + pol[j];

}

pu += s;

}

printf("%f\n",pu);

}

Java

public class tpoly {

static public void main(String argv[]) {

float mu = (float)10.0;

float x,s;

float pu = (float)0.0;

int su, i, j, n;

float pol[] = new float[100];

n = 500000;

x = (float)0.2;

for(i=0; i<n; i++) {

for (j=0; j<100; j++) {

mu = (mu + (float)2.0) / (float)2.0;

pol[j] = mu;

}

s = (float)0.0;

for (j=0; j<100; j++) {

s = x*s + pol[j];

}

pu += s;

}

System.out.println(pu);

}

}

Python

n = 500000

x = 0.2

def t(x):

mu = 10.0

pu = 0.0

pol =[0] * 100

r = range(0,100)

for i in range(0,n):

for j in r:

pol[j] = mu = (mu + 2.0) / 2.0

su = 0.0

for j in r:

su = x * su + pol[j]

pu = pu + su

print pu

t(x)

Ruby

n = 500000

x = 0.2

mu = 10

pu = 0

pol = []

n.times do

0.upto(99) { |j| pol[j] = mu = (mu + 2) * 0.5 }

s = 0

0.upto(99) { |j| s = x * s + pol[j] }

pu += s

end

print pu,"\n"

Results are below (in seconds needed to perform the simple task outlined above).

| Language | Seconds to perform task |

|---|---|

| C, using gcc V2.95.4 | 2.73 |

| Java, gcj V3.0 | 3.03 |

| Python V2.1.2 | 515.04 |

| Ruby | 1074.52 |

CounterAct

Dynamic O-O languages monitors previous executions of the program to determine the set possible receiver classes. In turn , Static O-O languages computes the set of possible receiver classes by analyzing the program’s source code. This characteristics bring consideration opposed to the belief that the static O-O languages are efficient in performance. Compilers cannot predict the typical usage. With this reason, statical way shows more overhead when leading to calling methods called infrequently in case that the methods are small subset of vast method pool.

Optimization of Static and Dynamic Code and Benchmarking

Therefore a benchmarking in performance was made in [Set reference], to evaluate the relative performance of dynamic and static programs, in order to accomplish this the programs were divided into three groups,

• “Tiny” is a set of very small integer programs on which one would expect type inference to do particularly well since these programs do not use polymorphism. They are included for reference only.

• “Small” is a set of small benchmarks which primarily operate on integers and arrays and contain little polymorphism. These benchmarks are intended to represent the kernels of computationally intensive programs.

• “Large” is a set of application programs which were written by several different programmers and exhibit a variety of object-oriented programming styles.

After that a suite of 23 benchmarks were executed, Table XX shows them

The focus will be on the "Large" set of applications since they are closer and realistic to the common applications, the following segment gives the definitions of TF and TI, their analysis and results of performance are based on them, TF and TI are Techniques to optimize dynamic and static code.

Execution Time:

Speed is the ultimate goal of an optimizing compiler. Figure 1 shows the relative execution time of the benchmarks compiled with TF and TI systems

Number of dispatches:

A dispatch is the selection of the correct piece of code for a particular receiver-message pair, a dispatch is independent of whether it requires a call or not. By measuring the number of dispatches that a program performs, it is possible to determine the performance in creating monomorphic call.

Type inference (TI) can determine the exact receiver class for many sends. Since such sends no longer require a dispatch, the overall number of dispatches is reduced.

In contrast, type feedback (TF) requires a dispatch test even if it predicts a single class, because the receiver type always includes the unknown class. Therefore, one would expect TI-compiled programs to execute strictly fewer dispatches than TF-compiled programs.

The benchmark results are shown in Figure (XX)

Conclusions:

1.- It is all about the right tool for the job. Neither is better 100% of the time. Both systems were created by man and have flaws. Sorry, but we suck and making perfect stuff.

I like dynamic typing because it gets out of my way, but yes runtime errors can creep up that I didn't plan for. Where as static typing may fix the aforementioned errors, but drive a novice (in typed languages) programmer crazy trying to cast between a constant char and

2.- Modern JITs like V8 and TraceMonkey are coming dangerously-close to static language performance. Also, the fact that Java actually compiles down to an inherently dynamic intermediate language should be a hint that for most cases, dynamic typing isn't the huge performance-killer that some people make it out to be

3.- statically typed systems programming languages make code less reusable, more verbose, not more safe, and less expressive than dynamically typed scripting languages

The advantages of static typing include their earlier detection of programming mistakes, better documentation in the form of type signatures, more opportunities for compiler optimizations by replacing virtual calls by direct calls, increased runtime efficiency, since not all values need to carry dynamic and a better design time developer experience, in other words “well-typed programs cannot go wrong”. However the static type checking is a compile-time abstraction of the runtime behavior of the program, and hence it is partially incomplete. This means that programs can still go wrong because of properties that are not tracked by the type-checker, and that there are programs that while they cannot go wrong cannot be type-checked.

The impulse for making static typing less partial and more complete causes type systems to become overly complicated and exotic, this is like trying to run a marathon with a ball and chain tied to your leg and triumphantly shouting that you nearly made it even though you bailed out after the first mile. It is commonly said that static typing is too rigid.

On the other hand the softness of dynamically languages makes them ideally suited for prototyping systems with changing or unknown requirements, or that interact with other systems that change unpredictably in data and application integration. Dynamically typed languages are indispensable for dealing with truly dynamic program behavior such as method interception, dynamic loading, mobile code, runtime reflection, etc. On undeniable fact is that delaying all type-checking to runtime is a good thing, in other words, errors should be caught as early in the development process as possible.

References

External Links