CSC/ECE 517 Fall 2020 - E2087. Conflict notification. Improve Search Facility In Expertiza

Revision as of 04:04, 17 November 2020

Introduction

Expertiza notifies instructors when a conflict occurs in the review scores for a submission. Currently, when two reviews for the same submission differ significantly, an email is sent to the instructor of the course, but it contains no link to the review that caused the conflict. This improvement adds links to the reviews that caused the conflict and formats the email better to help the instructor understand the conflict.

Issues with previous submission

- The functionality is good but the UI of conflict report needs work.

- The UI needs to be cleaned up a little. When charts have only one or two bars, the chart can be compressed. The reviewer whose scores deviate from the threshold can be displayed in a different colored bar.

- Tests need to be refactored.

- They included their debug code in their pull request.

- They have included a lot of logic in the views.

- Shallow tests: one or more of their test expectations only check that the return value is not `nil`, not empty, or not equal to `0`, without testing the actual value.

Proposed Solution

The previous team implemented new logic to determine if a review is in conflict with another and created a new page to link the instructors to in the email. Because their functionality was good, we will mainly be focused on improving the UI of the conflict reports. Furthermore, there is a lot of logic that lives in the views that can be refactored and moved to controllers. Additionally, there is debug code that can be removed and tests that can be fleshed out.

UI Improvements

File: app/views/reports/_review_conflict_metric.html.erb

The UI can be improved for the conflict metric to give more information on the conflicted reviews.

Logic in Views

File: app/views/reports/_review_conflict_metric.html.erb

The logic to determine if answers are within or outside of the tolerance limits can be moved to functions outside of the .erb file.

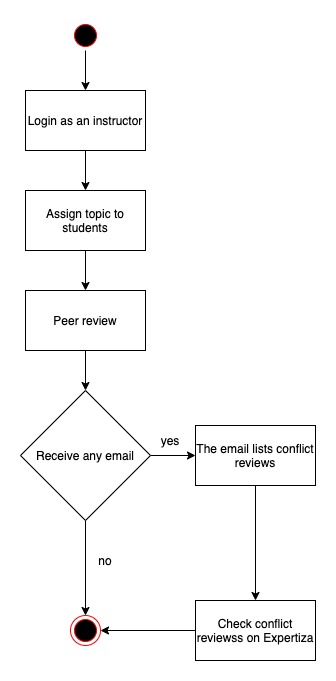

UML Activity Diagram

UI Changes

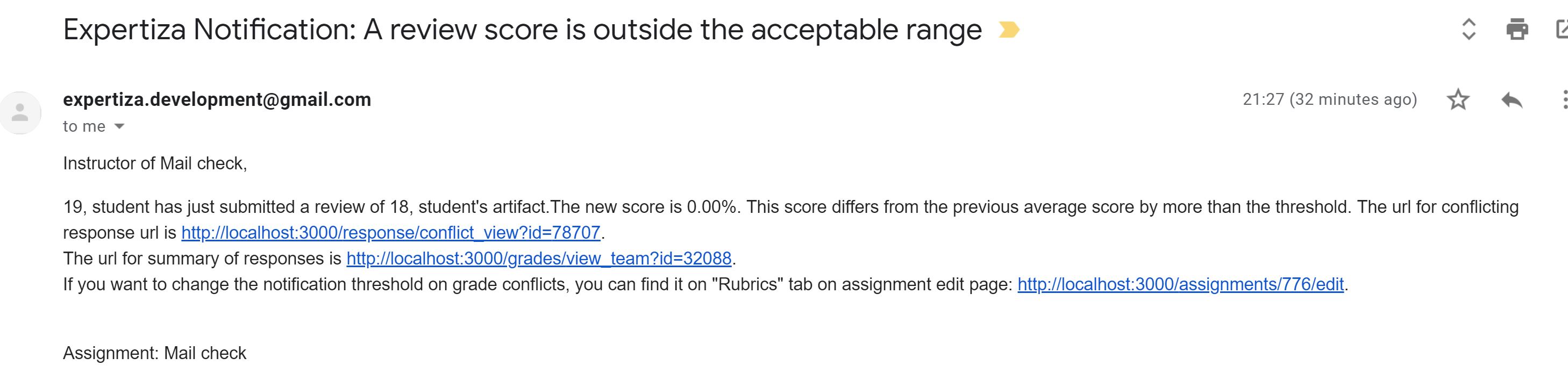

With the previous group's changes, a notification email is sent to the instructor whenever two reviews for the same submission differ "significantly" in their scores (the threshold is specified by the "Notification limit" on the Rubric tab of assignment creation).

The formatting of the email notification can be improved to make it more readable and to make clear which links relate to which actions and what triggered the conflict.

Snapshot of the view the instructor receives, from the previous group's implementation:

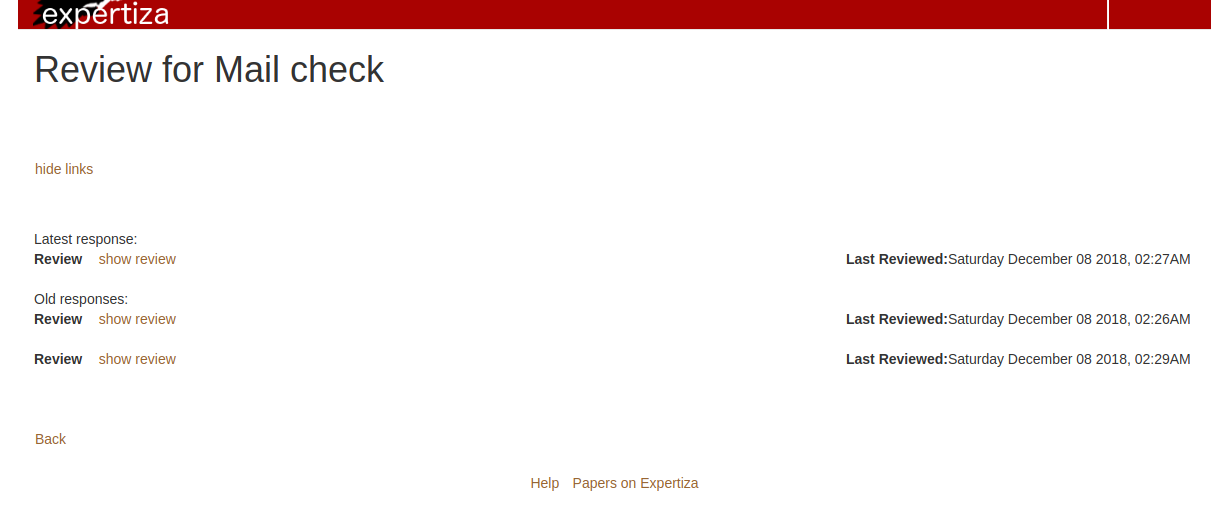

On the 'review for mail check' page, more information can be added to indicate whether a review has a conflict. We will add an indicator beside each review that has a conflict.

Test Plan

Manual Test Plan

To verify the conflict notification is working correctly, a mock assignment will be created and two reviews will be entered that should trigger a conflict. Successful retrieval of the email and verification of the links included in the email will provide sufficient verification that the changes were successful.

File Changes

File: spec/models/answer_spec.rb

Currently, tests only check if the values are not empty. More tests can be written to make sure the actual value is being returned correctly.
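The difference between a shallow expectation and a value expectation can be illustrated with a small plain-Ruby sketch. The function `buggy_average` is hypothetical and deliberately wrong; it is not part of Expertiza. The point is only that a "not nil / not zero" check passes on a wrong result, while a comparison against a hand-computed value does not.

```ruby
# Hypothetical, deliberately buggy average: divides by a fixed 2 instead of the count.
def buggy_average(scores)
  scores.sum / 2.0
end

scores = [4, 5, 3]
result = buggy_average(scores)

# Shallow check: passes even though the value is wrong.
shallow_ok = !result.nil? && result != 0

# Value check: compares against the hand-computed mean (4 + 5 + 3) / 3 = 4.0.
value_ok = (result - 4.0).abs < 1e-9

puts "shallow check passed: #{shallow_ok}"
puts "value check passed:   #{value_ok}"
```

In the real specs the same idea would be expressed as an equality expectation on the returned value rather than a check that it is present.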

File: spec/controllers/reports_controller_spec.rb

More tests can be written to ensure correct names and values are written.

Implemented Solution

Refactoring

Moved the following methods from review_mapping_helper.rb to report_formatter_helper.rb:

- average_of_round

- std_of_round

- review_score_helper_for_team

- review_score_for_team

New Implementation

The base URL is passed in from the controller because `request.base_url` is not accessible from the model.

    if (@map.is_a? ReviewResponseMap) && @response.is_submitted && @response.significant_difference?
      @response.notify_instructor_on_difference(request.base_url)
    end
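As a rough illustration of why the base URL is handed over as an argument, the following plain-Ruby sketch mimics the pattern without Rails. Everything here is an assumption made for illustration: `SketchResponse`, `conflict_link`, `FakeRequest`, the host name, and the URL path are hypothetical and do not reflect the actual Expertiza model or routes.

```ruby
# Hypothetical stand-in for the model; the real Response is an ActiveRecord model.
class SketchResponse
  attr_reader :id

  def initialize(id)
    @id = id
  end

  # The model has no access to the request, so the caller supplies base_url.
  # The path below is made up for illustration only.
  def conflict_link(base_url)
    "#{base_url}/response/view?id=#{id}"
  end
end

# Hypothetical stand-in for the controller's `request` object.
FakeRequest = Struct.new(:base_url)

request = FakeRequest.new("https://expertiza.example.edu")
link = SketchResponse.new(42).conflict_link(request.base_url)
puts link
```

Passing the value explicitly keeps the model free of any dependency on the HTTP request, which also makes the method easy to call from a test.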

This method generates statistics (average, standard deviation, tolerance limits) for each round. This method was created to move logic that was previously contained in a view to a helper class.

    def review_statistics(id, team_name)
      res = []
      question_answers = review_score_for_team(id, team_name)
      # Build one statistics hash per round.
      question_answers.each do |question_answer|
        round = {}
        round[:question_answer] = question_answer
        round[:average] = average_of_round(question_answer)
        round[:std] = std_of_round(round[:average], question_answer)
        # Tolerance limits lie two standard deviations above and below the average.
        round[:upper_tolerance_limit] = (round[:average] + (2 * round[:std])).round(2)
        round[:lower_tolerance_limit] = (round[:average] - (2 * round[:std])).round(2)
        res.push(round)
      end
      res
    end
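The helpers `average_of_round` and `std_of_round` are not shown on this page. The sketch below works through the same arithmetic under two assumptions: a round is a plain array of numeric scores, and the standard deviation is the population form. The names `mean`, `population_std`, and `tolerance_limits` are hypothetical stand-ins, not the Expertiza helpers.

```ruby
# Hypothetical stand-in for average_of_round, operating on a plain array of scores.
def mean(scores)
  scores.sum.to_f / scores.size
end

# Population standard deviation (assumption; a sample std would divide by n - 1).
def population_std(avg, scores)
  Math.sqrt(scores.sum { |s| (s - avg)**2 } / scores.size)
end

# Tolerance limits two standard deviations around the mean, as in review_statistics.
def tolerance_limits(scores)
  avg = mean(scores)
  std = population_std(avg, scores)
  {
    average: avg,
    std: std,
    upper_tolerance_limit: (avg + 2 * std).round(2),
    lower_tolerance_limit: (avg - 2 * std).round(2)
  }
end

stats = tolerance_limits([2, 4, 4, 4, 5, 5, 7, 9])
puts stats
```

For these eight scores the mean is 5.0 and the population standard deviation is 2.0, so the limits come out at 1.0 and 9.0.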

This logic colors a review's bar red when its score falls outside the tolerance limits, and green otherwise.

    if answer > upper_tolerance_limit || answer < lower_tolerance_limit
      colors << "rgba(255,99,132,0.8)" # red
    else
      colors << "rgba(77, 175, 124, 1)" # green
    end
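A self-contained version of the coloring rule might look like the following. The method name `bar_colors`, the constants, and the sample scores are hypothetical; only the comparison and the two color strings come from the snippet above.

```ruby
RED = "rgba(255,99,132,0.8)"
GREEN = "rgba(77, 175, 124, 1)"

# Returns one color per answer: red when the answer lies strictly outside
# the tolerance limits, green otherwise (scores exactly on a limit stay green).
def bar_colors(answers, lower_tolerance_limit, upper_tolerance_limit)
  answers.map do |answer|
    if answer > upper_tolerance_limit || answer < lower_tolerance_limit
      RED
    else
      GREEN
    end
  end
end

colors = bar_colors([5, 1, 9.5, 0.5], 1.0, 9.0)
puts colors
```

Using `map` instead of appending to a shared array keeps the colors in the same order as the answers, which is what the chart needs when it pairs each bar with its background color.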

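The colors are then passed to the chart as the bar background colors.

    backgroundColor: colors

The chart height is computed from the number of answers so that all data is displayed clearly and charts with only one or two bars are compressed.

    height: 4*question_answer.size() + 14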
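To see how the height scales, the formula can be evaluated for a few bar counts. The method name `chart_height` is hypothetical, and reading the constant 14 as fixed space for axes and padding is an assumption; the units are whatever the chart helper expects.

```ruby
# Height formula from the chart options: 4 units per bar plus a fixed 14
# (interpreting the 14 as space for axes and padding is an assumption).
def chart_height(bar_count)
  4 * bar_count + 14
end

[1, 2, 10].each { |n| puts "#{n} bars -> height #{chart_height(n)}" }
```

A chart with one bar gets a height of 18 and one with ten bars gets 54, so small charts no longer take up the same space as large ones.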

UI Improvements

UI before improvements.

UI after improvements.

Relevant Links

GitHub - https://github.com/salmonandrew/expertiza

Pull Request - https://github.com/expertiza/expertiza/pull/1840

Demo Video - https://youtu.be/D_e80coRkLk

Team

Mentor: Sanket Pai (sgpai2@ncsu.edu)

- Yen-An Jou (yjou@ncsu.edu)

- Xiwen Chen (xchen33@ncsu.edu)

- Andrew Hirasawa (achirasa@ncsu.edu)

- Derrick Li (xli56@ncsu.edu)

References / Previous Implementation

- Previous Wikipedia page - E1865 Conflict Notification Fall 2018

- Previous Final Video - Final Video

- Previous Pull Request - Pull Request 1445

- Previous GitHub Repo - GitHub Repo