CSC/ECE 517 Fall 2019 - E1990. Integrate suggestion detection algorithm


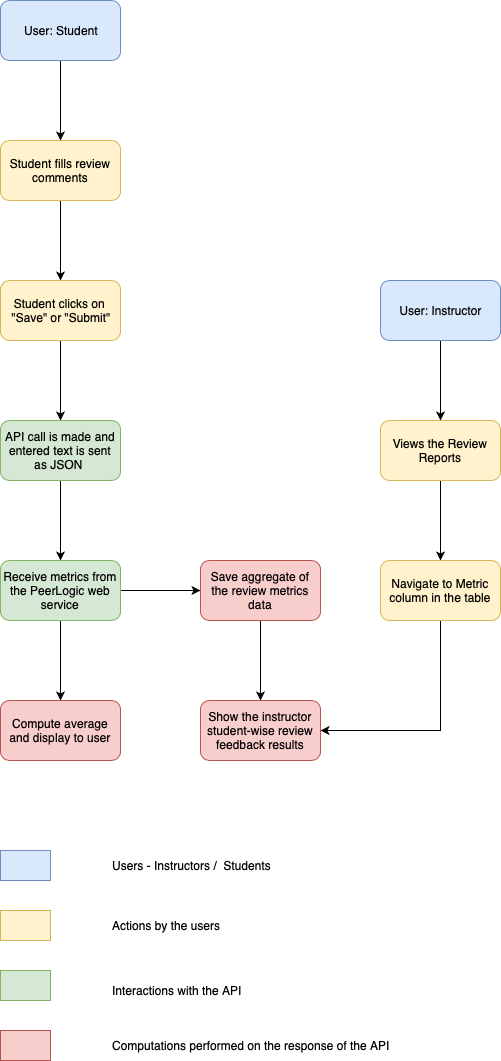


Revision as of 04:03, 7 December 2019

Introduction

- On Expertiza, students receive review comments for their work from their peers. This review mechanism provides the students a chance to correct/modify their work, based on the reviews they receive. It is expected that the reviewers identify problems and suggest solutions, so that the students can improve their projects.

- It has been observed that students learn more by reviewing others' work than by working on the project itself, as reviewing gives them perspective on alternative approaches to solving a problem.

- The instructor is provided with metrics such as the average volume and total volume of the review content provided by a student.

Problem Statement

- Reviewers can fill in review comments on others' work; however, they do not receive feedback on how effective their reviews are for the receiver. It would thus make sense to have a feedback mechanism in place that can determine whether a reviewer has identified problems and provided suggestions for a student's or team's project. To achieve this, we need to identify the suggestions in the review comments as a way of determining how useful a review would be. This would motivate the reviewers to give better and more constructive reviews.

- We would want the instructor of the course to be able to view how many constructive reviews were provided by a reviewer in comparison to the average number of constructive reviews provided by the other reviewers of the course. The reviews could be graded on this basis.

- To detect the number of suggestions in a text, there is a web service in place, developed previously by some contributors. We wish to integrate this suggestion detection algorithm and use its results to show the suggestion metrics to the reviewer as well as the instructor.

- Please note that the web service mentioned above is currently not working. Thus we will use simulations to mock the response from the web service for this project.

Implementation Details

Part 1 : Mocking the response which is to be received from the suggestion detection web service

- The suggestion detection algorithm was expected to identify the number of suggestions in the review comments given by a reviewer. To mock this number, we used a random number generator, so we expect to receive a value between 0 and 10 as the number of suggestions provided by the student in one round of review. (Refer to method: num_suggestions_for_responses_by_a_reviewer in review_mapping_helper.rb)

- To make it possible to integrate the suggestion detection algorithm in the future, we have included a function with the API calls to the service. This function and the corresponding call to it are currently commented out. (Refer to methods: num_suggestions_for_responses_by_a_reviewer and retrieve_review_suggestion_metrics in review_mapping_helper.rb.)
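The mocking approach described above can be sketched as follows. This is a minimal illustration, not the code from review_mapping_helper.rb: the method names `mock_num_suggestions` and `mock_suggestions_per_round` are hypothetical, and the per-round structure is an assumption made for the example.

```ruby
# Hypothetical stand-in for the suggestion detection web service.
# Returns a pseudo-random suggestion count in the inclusive range 0..10.
# An optional Random instance can be passed in to make results repeatable.
def mock_num_suggestions(rng = Random.new)
  rng.rand(0..10)
end

# Hypothetical helper: mocked suggestion counts keyed by review round.
def mock_suggestions_per_round(num_rounds, rng = Random.new)
  (1..num_rounds).map { |round| [round, mock_num_suggestions(rng)] }.to_h
end
```

Passing a seeded `Random` instance keeps the mocked values stable across runs, which can be convenient when the mock is exercised from tests.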

Part 2 : Suggestion metrics on saving / submitting review

- We wish to present the reviewer (student) with an idea of how useful their reviews are, in comparison to those of the class.

- To achieve this, we have included the following in an alert when the reviewer clicks on "Save" or "Submit":

- 1. The number of suggestions in the review comments provided by the reviewer for that round

- 2. The average number of suggestions in the review comments provided by all reviewers of the class

(Again, for the purpose of demonstration, we have used values from the random number generator mentioned above.)

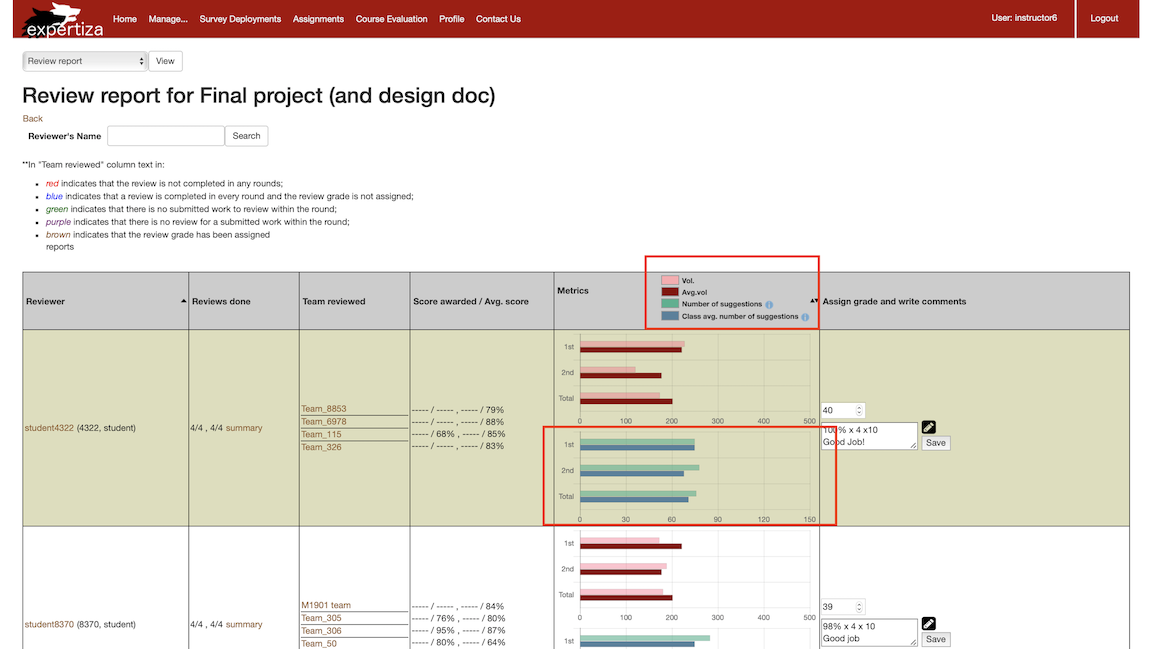
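The two values shown in the alert could be assembled along these lines. This is only a sketch under stated assumptions: `average_suggestions` and `suggestion_alert_message` are hypothetical names, and the wording of the message is illustrative rather than the text used in Expertiza.

```ruby
# Hypothetical helper: mean number of suggestions across a list of counts.
# Returns 0.0 for an empty list so the alert never shows NaN.
def average_suggestions(counts)
  return 0.0 if counts.empty?

  (counts.sum.to_f / counts.size).round(2)
end

# Hypothetical helper: builds the text shown in the alert from the
# reviewer's own count and the counts of all reviewers in the class.
def suggestion_alert_message(reviewer_count, class_counts)
  "Suggestions in your review for this round: #{reviewer_count}\n" \
    "Average suggestions per review in the class: #{average_suggestions(class_counts)}"
end
```

For example, `suggestion_alert_message(4, [4, 7, 1])` reports 4 suggestions for the reviewer and a class average of 4.0.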

Part 3 : Suggestion metrics depicted as bar graphs in "Review Reports" page

- We wish to present the instructor with a comparison of the number of suggestions in the review comments provided by a student and the average number of suggestions provided by the class. We have represented this data as a bar graph showing the round-wise comparison for each assignment. This visualization follows the pattern already used on this page for the volume of review comments and the average volume of review comments.
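The round-wise comparison behind such a bar graph can be sketched as two parallel data series. The method name `suggestion_chart_data` and the shape of its inputs and output are assumptions made for illustration; the charting code actually used on the Review Reports page may expect a different structure.

```ruby
# Hypothetical helper: prepares round-wise data for a bar graph.
# reviewer_counts:       { round => suggestions by this reviewer }
# class_counts_by_round: { round => [suggestions by each reviewer in the class] }
def suggestion_chart_data(reviewer_counts, class_counts_by_round)
  rounds = class_counts_by_round.keys.sort
  {
    labels: rounds.map { |r| "Round #{r}" },
    # A reviewer with no review in a round is plotted as 0.
    reviewer: rounds.map { |r| reviewer_counts.fetch(r, 0) },
    class_average: rounds.map do |r|
      counts = class_counts_by_round[r]
      counts.empty? ? 0.0 : (counts.sum.to_f / counts.size).round(2)
    end
  }
end
```

Keeping the reviewer series and the class-average series aligned on the same round labels mirrors the existing volume versus average-volume layout the section refers to.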

Flowchart

Given below is the design flowchart of our proposed solution:

Once the student finishes (partly or completely) giving his/her review and clicks on the "Save" or "Submit" button, the web service API will be called and the review's text will be sent as JSON. The PeerLogic web service will send back the output (the number of suggestions in the review comments), which will then be displayed in an alert to the student.

For all the review scores received by the students, the aggregate data will be displayed to the instructor whenever he/she views the Review Report for any assignment/project. This will be visible in the metrics column of the review report and will be displayed student-wise, i.e., for each student participating in the assignment.
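The JSON exchange in this step might look roughly like the sketch below. The request key `text` and the response key `suggestions` are assumptions, since the format expected by the PeerLogic web service is not given here; both method names are hypothetical, and the HTTP call itself is deliberately left out.

```ruby
require 'json'

# Hypothetical request body: joins the review comments and sends them
# under an assumed "text" key.
def build_suggestion_request(review_comments)
  { text: review_comments.join(' ') }.to_json
end

# Hypothetical response shape: {"suggestions": <integer>}.
# Returns nil when the body is not JSON or lacks a usable count,
# so callers can skip the alert instead of raising.
def parse_suggestion_response(body)
  parsed = JSON.parse(body)
  return nil unless parsed.is_a?(Hash)

  Integer(parsed.fetch('suggestions'))
rescue JSON::ParserError, KeyError, ArgumentError, TypeError
  nil
end
```

Returning nil on a malformed response is one possible design choice; it lets the save or submit action proceed even while the web service is unavailable.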

Overview of code changes

Please refer to the Code changes (https://github.com/expertiza/expertiza/pull/1614/files) for the exact code changes.

Screenshots

Review reports page as visible to the Instructor, after the above mentioned changes.

Suggestion metrics as visible to the reviewer.

Anticipated Code Changes

Broadly speaking, the following changes will be made:

- User: Student

  - Adding API calls of the suggestion-detection algorithm in the response_controller.rb

  - Adding an alert to display summarized analysis for all comments in the review

  - Write unit tests for our method(s) in review_mapping_helper.rb

  - Write unit tests for our changes in response.html.erb view

- User: Instructor

  - Adding method call to display the aggregate analysis of student comments/reviews to reports_controller.rb under Metrics column

  - Write unit tests for our method(s) in reports_controller.rb

  - Write unit tests for our changes in _review_report.html.erb

Test Plan

The scenarios below give a basic overview of the tests we plan to write.

Automated Testing Using RSpec

We will test our project and the added functionality using RSpec. Automated tests are carried out to test:

- Whether the APIs are being called when the student clicks "Save" review button.

Steps:

1. Log in to the student profile.

2. Go to your open assignments and submit your work.

3. Go to that particular assignment and request a review.

4. Begin writing a review.

5. Click "Save" button to save the review.

6. A pop-up should be displayed that shows the review comment analysis for the student.

- Whether the APIs are being called when the student clicks "Submit Review" button.

Steps:

1. Log in to the student profile.

2. Go to your open assignments and submit your work.

3. Go to that particular assignment and request a review.

4. Begin writing a review.

5. Click "Submit Review" button to submit the review.

6. A pop-up should be displayed that shows the review comment analysis for the student.

- Whether the pop-up displays review comments analysis when student clicks "Save" button.

Steps:

1. (As a student) Click "Save" button to save your current review.

2. A pop-up should be displayed that shows the review comment analysis for the student.

3. Pop-up should display bar graph and comments on the student's review as compared to the average review comments for that assignment.

4. Pop-up should display bar graph and comments on the student's review as compared to the total review comments for that assignment.

- Whether the pop-up displays review comments analysis when student clicks "Submit Review" button.

Steps:

1. (As a student) Click "Submit Review" button to submit your current review.

2. A pop-up should be displayed that shows the review comment analysis for the student.

3. Pop-up should display bar graph and comments on the student's review as compared to the average review comments for that assignment.

4. Pop-up should display bar graph and comments on the student's review as compared to the total review comments for that assignment.

- Whether the instructor is able to see each student's aggregate review analysis score when he/she views Review Reports.

Steps:

1. Log in as an instructor.

2. Navigate to all assignments list.

3. Click on View Reports button and open Review Report for that assignment.

4. The instructor should be able to see each student's review comment analysis for the submitted reviews under the metric column.

Additional test cases can cover UI elements and control flow.

Certain edge cases to look out for:

- Instructor should not be shown nil/error values when a student has not submitted any reviews.

- Student should not be shown the pop-up on clicking the "back" button or after refreshing the page.
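The first edge case can be handled by guarding against missing data before anything is rendered. The sketch below is one possible approach; the method name `display_suggestion_metric` and the placeholder text are hypothetical and not taken from the project's code.

```ruby
# Hypothetical helper: formats a student's average suggestion count for the
# metrics column, falling back to a placeholder when no reviews exist.
def display_suggestion_metric(counts)
  return 'No reviews submitted' if counts.nil? || counts.empty?

  format('%.2f', counts.sum.to_f / counts.size)
end
```

With this guard, a student with no submitted reviews yields a readable placeholder instead of nil or a division error.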

Our Work

Team Information

- Mentor

- Ed Gehringer (efg@ncsu.edu)

- Team Name

- Pizzamas

- Members

- Maharshi Parekh (mgparekh)

- Pururaj Dave (pdave2)

- Roshani Narasimhan (rnarasi2)

- Yash Thakkar (yrthakka)

References

- Expertiza

- Pull request for a Metrics Legend change

- Expertiza Github Repo

- Our project's Github pull request