User:Ewhorton
Revision as of 01:22, 19 March 2016

Expertiza is a web application where students can submit and peer-review learning objects (articles, code, web sites, etc.). It is used in select courses at NC State and by professors at several other colleges and universities. <ref>Expertiza on GitHub</ref>

Introduction

Our contribution to this project is a suite of functional tests for the assignment calibration feature. Because they are automated with RSpec and Capybara, these tests serve as integration, regression, and acceptance tests.

Problem Statement

Expertiza has a feature called Calibration that can be turned on for an assignment. When this feature is enabled, an expert, typically the instructor, can provide an expert review of the assignment artifacts. After students finish their own reviews, they can compare their responses to the expert review. The instructor can also view the calibration results to see the scores for the entire class.

While this feature already exists in Expertiza, there are currently no tests for it. The goal of this project is to test the entire flow of the calibration feature using the RSpec and Capybara frameworks.
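To make the comparison step concrete, here is a minimal, hypothetical Ruby sketch that lines up a student's rubric scores against the expert's, criterion by criterion. It is not Expertiza's calibration code: the method name compare_to_expert, the criterion names, and the score values are all invented for illustration.

```ruby
# Hypothetical sketch (not Expertiza code): per-criterion comparison of a
# student's review scores with the expert's. Criterion names and the score
# scale are assumptions made for this example.
def compare_to_expert(student_scores, expert_scores)
  expert_scores.map do |criterion, expert_score|
    student_score = student_scores[criterion] # nil if the student skipped it
    {
      criterion: criterion,
      student: student_score,
      expert: expert_score,
      match: student_score == expert_score
    }
  end
end

expert  = { "Organization" => 4, "Clarity" => 5, "Correctness" => 3 }
student = { "Organization" => 4, "Clarity" => 3, "Correctness" => 3 }

comparison = compare_to_expert(student, expert)
comparison.each do |row|
  status = row[:match] ? "matches expert" : "differs from expert"
  puts "#{row[:criterion]}: student #{row[:student].inspect}, expert #{row[:expert]} (#{status})"
end
```

In this made-up data the student agrees with the expert on Organization and Correctness and differs on Clarity, which is the kind of per-item feedback the calibration results are meant to surface.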

Motivation

The moment a line of code is written, it becomes legacy code in need of support. Test cases are used not only to ensure that code works correctly, but also to ensure that it keeps working after it has been modified. An important part of this is acceptance testing, which verifies that the project meets customer requirements. Rather than performing these tests by hand, it is easier to combine an automated testing framework like RSpec with a driver like Capybara, which tests an application's functionality from the outside, the way a user would, for example by driving a real or headless browser.

Flow of the calibration function

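A more detailed description of the calibration feature can be found here: https://docs.google.com/document/d/1LcicJCUY0GHN1qzwRQPL9A1Nv89sVtNtxlADOzVQeBk/edit#heading=h.g4vilf87e3f0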

Before analyzing the code, one should be familiar with the steps involved in calibrated peer review on Expertiza, from the instructor's setup of the assignment to the student's review. The steps are:

- Log in with a valid instructor username and password

- Click on "Assignment"

- Click on "New public assignment"

- Enter all the required information, check the “Calibrated peer-review for training?” box, and click Create

- Select the review rubric in the “Rubrics” tab

- Go to the “Due dates” tab, make sure that the assignment is currently in the Review phase, and allow submissions during the Review phase

- Add a submitter to the assignment. This submitter will add the documents to be reviewed.

- Go to the list of assignments and click the “edit” icon of the assignment

- Go to the “Calibration” tab, click the "Begin" link next to the student artifact to write the expert review, and click “Submit Review”

- Go to the “Review strategy” tab, select Instructor-Selected as the review strategy, then select the radio button “Set number of reviews done by each student” and enter the number of reviews

- Log in with the valid username and password of a student who is a reviewer for the assignment

- Click on the assignment

- Click on “Other’s work”

- Click “Begin”, and Expertiza displays the peer-review page

- Click “Submit Review”

- Click “Show calibration results” to see the expert review

- Log in with a valid instructor username and password

- Click on "Assignment"

- Click on “view review report” to see the calibration report

Creating the Tests

Gems involved

The gems used in feature testing are rspec-rails, capybara, and selenium-webdriver.

rspec-rails

rspec-rails is a testing framework for Rails 3.x and 4.x. It supports testing of models, controllers, requests, features, views, and routes, with each test written as an executable scenario called a spec.<ref>rspec-rails on GitHub</ref>

capybara

Capybara helps you test web applications by simulating how a real user would interact with your application. It comes with built-in Rack::Test and Selenium support, and WebKit is supported through an external gem.<ref>capybara on GitHub</ref> The scenarios themselves run inside RSpec, which provides before and after hooks to control the environment in which they run:<ref>Before and after hooks</ref>

- before(:each) blocks are run before each scenario in the group

- before(:all) blocks are run once before all of the scenarios in the group

- after(:each) blocks are run after each scenario in the group

- after(:all) blocks are run once after all of the scenarios in the group
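The ordering of these hooks can be illustrated with a small plain-Ruby model. HookOrderModel is a hypothetical class written only for this illustration, not part of RSpec; it assumes at most one hook of each kind, as in the sample scenario on this page, and records the order in which the blocks run.

```ruby
# Plain-Ruby model of RSpec hook ordering (hypothetical; not RSpec itself).
# Assumes at most one hook of each kind per group.
class HookOrderModel
  def initialize
    @hooks = {}
    @scenarios = []
  end

  def before(scope, &block)
    @hooks[[:before, scope]] = block
  end

  def after(scope, &block)
    @hooks[[:after, scope]] = block
  end

  def scenario(name, &block)
    @scenarios << [name, block]
  end

  # before(:all) once, then before(:each) / body / after(:each) for every
  # scenario, then after(:all) once.
  def run
    call_hook(:before, :all)
    @scenarios.each do |_name, body|
      call_hook(:before, :each)
      body.call
      call_hook(:after, :each)
    end
    call_hook(:after, :all)
  end

  private

  def call_hook(kind, scope)
    hook = @hooks[[kind, scope]]
    hook.call if hook
  end
end

log = []
group = HookOrderModel.new
group.before(:all)  { log << "create assignments" }
group.before(:each) { log << "log in as student13" }
group.after(:each)  { log << "scenario finished" }
group.after(:all)   { log << "delete assignments" }
group.scenario("valid link")   { log << "run: valid link" }
group.scenario("invalid link") { log << "run: invalid link" }
group.run
puts log
```

The log shows the login step repeated before each scenario while assignment creation and deletion happen once, which is why the real suite puts login in before(:each) and the assignment setup and cleanup in before(:all) and after(:all).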

selenium

Selenium is a portable software testing framework for web applications. It also provides a record/playback tool, Selenium IDE, for authoring tests without learning a test scripting language. Selenium is the default driver Capybara uses for scenarios that require JavaScript.

Test Scenarios

Based on the steps involved in manual submission of the assignment, the following test scenarios are considered:

Scenario to check whether a student is able to log in

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- The updated page should contain the text Assignment

Scenario to check whether a student is able to submit a valid link to an ongoing assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the ongoing assignment (FeatureTest)

- Click on Your work

- Upload the link http://www.csc.ncsu.edu/faculty/efg/517/f15/schedule

- The updated page should show the link http://www.csc.ncsu.edu/faculty/efg/517/f15/schedule

Scenario to check whether a student is unable to submit an invalid link to an ongoing assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the ongoing assignment (FeatureTest)

- Click on Your work

- Upload the link http://

- The updated page should display a flash message with the text "URI is not valid"
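The two link scenarios hinge on telling a usable URL apart from input such as http://. The following Ruby sketch shows one way to draw that line with the standard uri library; valid_submission_link? is a hypothetical helper, and Expertiza's own check may apply different rules.

```ruby
require 'uri'

# Hypothetical helper, not Expertiza's own validation: accept only
# http/https URLs that name a host.
def valid_submission_link?(text)
  uri = URI.parse(text)
  # URI::HTTPS is a subclass of URI::HTTP, so both schemes pass this check.
  uri.is_a?(URI::HTTP) && !uri.host.to_s.empty?
rescue URI::InvalidURIError
  false
end

[
  "http://www.csc.ncsu.edu/faculty/efg/517/f15/schedule",
  "http://",
  "not a url"
].each do |link|
  verdict = valid_submission_link?(link) ? "accepted" : "URI is not valid"
  puts "#{link} -> #{verdict}"
end
```

Here http:// parses but has no host, so it is rejected, while "not a url" fails to parse at all; both cases fall into the "URI is not valid" branch.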

Scenario to check whether a student is able to upload a file to an ongoing assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the ongoing assignment (FeatureTest)

- Click on Your work

- Upload the file student_submission_spec.rb

- The updated page should contain the filename student_submission_spec.rb

Scenario to check whether a student is able to upload a valid link and a file to an ongoing assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the ongoing assignment (FeatureTest)

- Click on Your work

- Upload the link http://www.csc.ncsu.edu/faculty/efg/517/f15/assignments

- Upload the file users_spec.rb

- The updated page should show the link http://www.csc.ncsu.edu/faculty/efg/517/f15/assignments

- The updated page should contain the filename users_spec.rb

Scenario to check whether a student is unable to submit a valid link to a finished assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the finished assignment (LibraryRailsApp)

- Click on Your work

- The page should not have an Upload link button

Scenario to check whether a student is able to upload a file to a finished assignment

- Enter the username student13 and the password 'password'

- Click on the Sign In button

- Click on the finished assignment (LibraryRailsApp)

- Click on Your work

- Upload the file student_submission_spec.rb

- The updated page should contain the filename student_submission_spec.rb

Code

The code for the feature test consists of two files:

- assignment_setup.rb, which contains a method for creating assignments through controller calls.

- student_assignment_submission.rb, which contains the calls to assignment_setup, the scenarios that test assignment submission, and the commands that delete the created assignments.

Structure

```ruby
# require files

RSpec.feature 'assignment submission when student' do
  before(:all) do
    # create assignments for submission
  end

  before(:each) do
    # Capybara steps to log in
  end

  after(:all) do
    # delete the assignments created
  end

  scenario 'submits only valid link to ongoing assignment' do
    # simulate the user's steps with Capybara
    # make assertions
  end

  # ... further scenarios
end
```

Sample Scenario

```ruby
require 'rails_helper'
require 'spec_helper'
require 'assignment_setup' # provides create_assignment

RSpec.feature 'assignment submission when student' do
  active_assignment = "FeatureTest"
  expired_assignment = "LibraryRailsApp"

  d = Date.parse(Time.now.to_s)
  due_date1 = (d >> 1).strftime("%Y-%m-%d %H:%M:00") # one month from today
  due_date2 = (d << 1).strftime("%Y-%m-%d %H:%M:00") # one month ago

  # Before all block runs once before all the scenarios are tested
  before(:all) do
    # Create active/ongoing assignment
    create_assignment(active_assignment, due_date1)
    # Create expired/finished assignment
    create_assignment(expired_assignment, due_date2)
  end

  # Before each block runs before every scenario
  before(:each) do
    # Log in as a student before each scenario
    visit root_path
    fill_in 'User Name', :with => 'student13'
    fill_in 'Password', :with => 'password'
    click_on 'SIGN IN'
  end

  # After all block runs after all the scenarios are tested
  after(:all) do
    # Delete active/ongoing assignment created by the test
    assignment = Assignment.find_by_name(active_assignment)
    assignment.delete
    # Delete expired/finished assignment created by the test
    assignment = Assignment.find_by_name(expired_assignment)
    assignment.delete
  end

  # Scenario to check whether a student is able to submit a valid link to an ongoing assignment
  scenario 'submits only valid link to ongoing assignment' do
    click_on active_assignment
    click_on 'Your work'
    fill_in 'submission', :with => 'http://www.csc.ncsu.edu/faculty/efg/517/f15/schedule'
    click_on 'Upload link'
    expect(page).to have_content 'http://www.csc.ncsu.edu/faculty/efg/517/f15/schedule'
  end
end
```
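The two due dates in the sample scenario rely on Ruby's Date#>> and Date#<<, which shift a date forward or backward by whole months. This worked example replaces Date.parse(Time.now.to_s) with a fixed date (an assumption made only so the results are reproducible) and shows which of the two assignments ends up ongoing and which finished.

```ruby
require 'date'

# Fixed stand-in for Date.parse(Time.now.to_s), so the output is reproducible.
d = Date.new(2016, 3, 19)

due_date1 = (d >> 1).strftime("%Y-%m-%d %H:%M:00") # one month later
due_date2 = (d << 1).strftime("%Y-%m-%d %H:%M:00") # one month earlier

# d is a Date, which carries no time of day, so %H:%M formats as 00:00.
puts due_date1 # => 2016-04-19 00:00:00
puts due_date2 # => 2016-02-19 00:00:00

# The first date lies after d (ongoing assignment), the second before it (finished).
puts Date.parse(due_date1) > d # => true
puts Date.parse(due_date2) > d # => false

# Date#>> clamps to the end of a shorter month instead of overflowing.
end_of_march = Date.new(2016, 3, 31)
puts end_of_march >> 1 # => 2016-04-30
```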

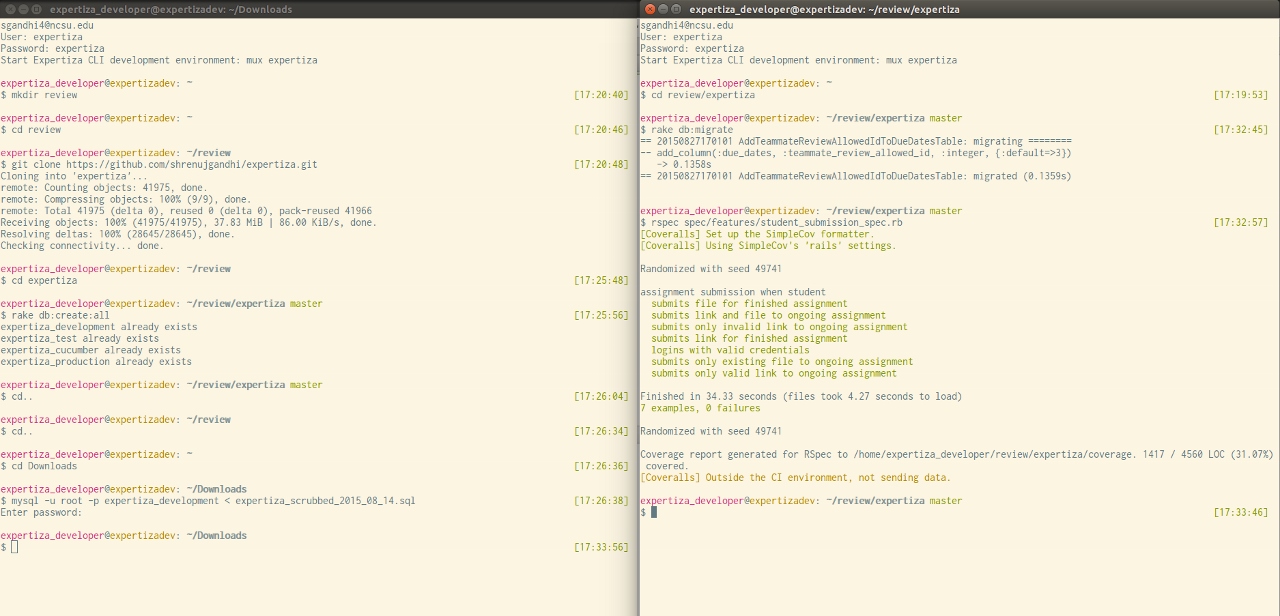

Running the tests

The following steps are required to run the tests:

- Clone the repository into a new directory

```shell
$ mkdir review
$ cd review
$ git clone https://github.com/shrenujgandhi/expertiza.git
```

If you don't have a database with student and instructor entries, download the dump from https://drive.google.com/a/ncsu.edu/file/d/0B2vDvVjH76uESEkzSWpJRnhGbmc/view and extract its contents. Then create the databases, load the dump, and run the migrations:

```shell
$ cd expertiza
$ rake db:create:all
$ cd Downloads/
$ mysql -u root -p expertiza_development < expertiza_scrubbed_2015_08_14.sql
password:
$ cd expertiza
$ rake db:migrate
```

- Run the tests for assignment submission:

```shell
$ rspec spec/features/student_submission_spec.rb
```

Test Results

The following screenshot shows the result of the rspec command.

Test Analysis

- The summary "7 examples, 0 failures" means that all 7 test scenarios ran and none of them failed.

- The suite runs in about 35 seconds, which is fast enough to run routinely.

- The tests are repeatable, because the assignments created in the before(:all) block are deleted in the after(:all) block.

- The tests are self-verifying: each scenario checks its own outcome with assertions, so RSpec reports pass or fail without manual inspection. The green output shows that every case passed.

Project Resources

References

<references></references>