CSC/ECE 506 Spring 2011/ch6a jp: Difference between revisions

No edit summary |

|||

| (64 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

=Cache Hierarchy= | =Cache Hierarchy= | ||

[[Image: memchart.jpg|thumbnail|300px|right|Memory Hierarchy<sup><span id="9body">[[#9foot|[9]]]</span></sup>]] | |||

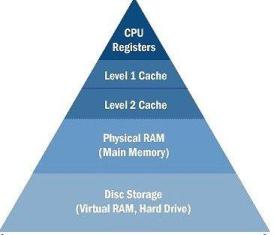

In a simple computer model, processor reads data and instructions from the memory and operates on the data. Operating frequency of CPU increased faster than the speed of memory and memory interconnects. For example, cores in Intel first generation i7 processors run at 3.2 GHz frequency, while the memory only runs at 1.3GHz frequency. Also, multi-core architecture started putting more demand on memory bandwidth. This increases the latency in memory access and CPU will have to be idle for most of the time. Due to this, memory became a bottle neck in performance. | In a simple computer model, processor reads data and instructions from the memory and operates on the data. Operating frequency of CPU increased faster than the speed of memory and memory interconnects. For example, cores in Intel first generation i7 processors run at 3.2 GHz frequency, while the memory only runs at 1.3GHz frequency. Also, multi-core architecture started putting more demand on memory bandwidth. This increases the latency in memory access and CPU will have to be idle for most of the time. Due to this, memory became a bottle neck in performance. | ||

To solve this problem, “cache” was invented. Cache is simply a temporary volatile storage space like primary memory but runs at the speed similar to core frequency. CPU can access data and instructions from cache in few clock cycles while accessing data from main memory can take more than 50 cycles. In early days of computing, cache was implemented as a stand alone chip outside the processor. In today’s processors, cache is implemented on same die as core. | To solve this problem, “cache” was invented. Cache is simply a temporary volatile storage space like primary memory but runs at the speed similar to core frequency. CPU can access data and instructions from cache in few clock cycles while accessing data from main memory can take more than 50 cycles. In early days of computing, cache was implemented as a stand alone chip outside the processor. In today’s processors, cache is implemented on same die as core. | ||

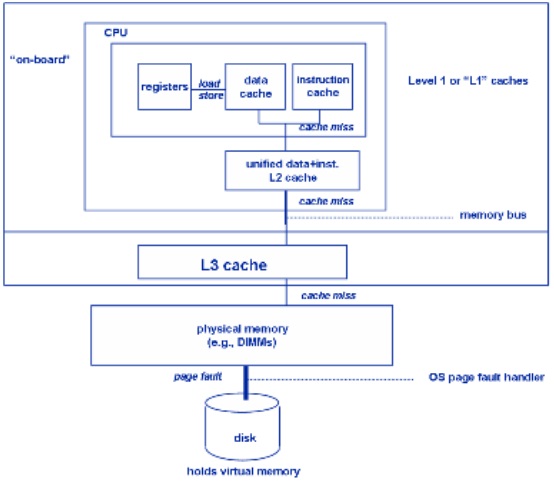

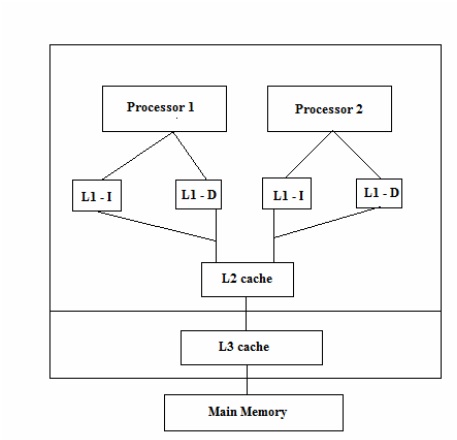

There can be multiple levels of caches, each cache subsequently away from the core and larger in size. L1 is closest to the CPU and as a result, fastest to excess. Next to L1 is L2 cache and then L3. L1 cache is divided into instruction cache and data cache. This is better than having a combined larger cache as instruction cache being read-only is easy to implement while data cache is read-write. | There can be multiple levels of caches, each cache subsequently away from the core and larger in size. L1 is closest to the CPU and as a result, fastest to excess. Next to L1 is L2 cache and then L3. L1 cache is divided into instruction cache and data cache. This is better than having a combined larger cache as instruction cache being read-only is easy to implement while data cache is read-write.<br> | ||

[[Image:Uniprocessor_memory_hierarchy.jpg|thumbnail|200px|Uniprocessor memory hierarchy<sup><span id="2body">[[#2foot|[2]]]</span></sup>]] | |||

[[Image:Dualcore_memory_hierarchy.jpg|thumbnail|200px|Dualcore memory hierarchy<sup><span id="3body">[[#3foot|[3]]]</span></sup>]] | |||

{| border='1' class="wikitable" style="text-align:center" | |||

|+style="white-space:nowrap"|Table 1: Cache on different Microprocessors | |||

|- | |||

! Company & Processor !! # cores !! L1 cache Per core !! L1 cache Per core !! L3 cache !! Year | |||

|- | |||

| Intel Pentium Dual Core || 2 || I:32KB D:32KB || 1MB 8 way set assoc. || - || 2006 | |||

|- | |||

| Intel Xeon Clovertown || 2 || I:4*32KB D:4*32KB || 2*4MB || - || Jan 2007 | |||

|- | |||

| Intel Xeon 3200 series || 4 || - || 2*4MB || - || Jan 2007 | |||

|- | |||

| AMD Athlon 64FX || 2 || I:64KB D:4KB Both 2-way set assoc. || 1MB 16way set assoc. || - || Jan 2007 | |||

|- | |||

| AMD Athlon 64X2 || 2 || I:64KB D:4KB Both 2-way set assoc. || 512KB/1MB 16way set assoc. || 2MB || Jan 2007 | |||

|- | |||

| AMD Barcelona || 4 || I:64KB D:64KB || 512KB || 2MB Shared || Aug 2007 | |||

|- | |||

| Sun Microsystems Ultra Sparc T2 || 8 || I:16KB D:8KB || 4MB (8 banks) 16way set assoc. || - || Oct 2007 | |||

|- | |||

| Intel Xeon Wolfdale DP || 2 || D:96KB || 6MB || - || Nov 2007 | |||

|- | |||

| Intel Xeon Hapertown || 4 || D:96KB || 2*6MB || - || Nov 2007 | |||

|- | |||

| AMD Phenom || 4 || I:64KB D:64KB || 512KB || 2MB Shared || Nov 2007 Mar 2008 | |||

|- | |||

| Intel Core 2 Duo || 2 || I:32KB D:32KB || 2/4MB 8 way set assoc. || - || 2008 | |||

|- | |||

| Intel Penryn Wolfdale DP || 4 || - || 6-12MB || 6MB || Mar 2008 Aug 2008 | |||

|- | |||

| Intel Core 2 Quad Yorkfield || 4 || D:96KB || 12MB || - || Mar 2008 | |||

|- | |||

| AMD Toliman || 3K10 || I:64KB D:64KB || 512KB || 2MB Shared || Apr 2008 | |||

|- | |||

| Azul Systems Vega3 7300 Series || 864 || 768GB || - || - || May 2008 | |||

|- | |||

| IBM RoadRunner || 8+1 || 32KB || 512KB || - || Jun 2008 | |||

|} | |||

=Cache Write Policies= | =Cache Write Policies= | ||

==Write hit policies== | ==Write hit policies== | ||

= | [[Image:Cache_policy_comparison.jpg|thumbnail|600px|Cache policy comparison<sup><span id="5body">[[#5foot|[5]]]</span></sup>]] | ||

Also known as store-through, this policy will write to main memory whenever a write is performed to cache. | *Write-through: Also known as store-through, this policy will write to main memory whenever a write is performed to cache. | ||

**Advantages: | |||

Also known as store-in or copy-back, this policy will write to main memory only when a block of data is purged from the cache storage. | ***Easy to implement | ||

***Main memory has most recent copy of the data | |||

***Read misses never result in writes to main memory | |||

**Disadvantages: | |||

***Every write needs to access main memory | |||

***Bandwidth intensive | |||

***Writes are slower | |||

*Write-back: Also known as store-in or copy-back, this policy will write to main memory only when a block of data is purged from the cache storage. | |||

**Advantages: | |||

***Writes are as fast as the speed of the cache memory | |||

***Multiple writes to a block require one write to main memory | |||

***Less bandwidth intensive | |||

**Disadvantages: | |||

***Harder to implement | |||

***Main memory may not be consistent with cache | |||

***Reads that result in data replacement may cause dirt blocks to be written to main memory | |||

==Write miss policies== | ==Write miss policies== | ||

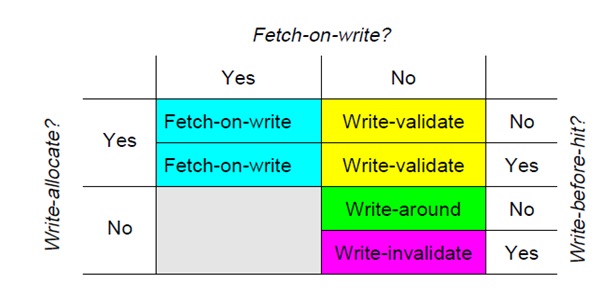

*Write-allocate vs Write no-allocate: When a write misses in the cache, there may or may not be a line in the cache allocated to the block. For write-allocate, there will be a line in the cache for the written data. This policy is typically associated with write-back caches. For no-write-allocate, there will not be a line in the cache. | |||

When a write misses in the cache, there may or may not be a line in the cache allocated to the block. For write-allocate, there will be a line in the cache for the written data. This policy is typically associated with write-back caches. For no-write-allocate, there will not be a line in the cache. | *Fetch-on-write vs no-fetch-on-write: The fetch-on-write will cause the block of data to be fetched from a lower memory hierarchy if the write misses. The policy fetches a block on every write miss. | ||

*Write-before-hit vs no-write-before-hit: The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache. | |||

The fetch-on-write will cause the block of data to be fetched from a lower memory hierarchy if the write misses. The policy fetches a block on every write miss. | |||

The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache. | |||

==Combination Policies== | ==Combination Policies== | ||

* Write-validate: It is a combination of no-fetch-on-write and write-allocate<sup><span id="4body">[[#4foot|[4]]]</span></sup>. The policy allows partial lines to be written to the cache on a miss. It provides for better performance as well as works with machines that have various line sizes and does not add instruction execution overhead to the program being run. Write-validate requires that the lower level system memory can support writes of partial lines. | |||

It is a combination of no-fetch-on-write and write-allocate. The policy allows partial lines to be written to the cache on a miss. It provides for better performance as well as works with machines that have various line sizes and does not add instruction execution overhead to the program being run. | * Write-invalidate: This policy is a combination of write-before-hit, no-fetch-on-write, and no-write-allocate<sup><span id="4body">[[#4foot|[4]]]</span></sup>. This policy invalidates lines when there is a miss. | ||

* Write-around: Combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit<sup><span id="4body">[[#4foot|[4]]]</span></sup>. This policy uses a non-blocking write scheme to write to cache. It writes data to the next lower cache without modifying the data of the cache line. | |||

This policy is a combination of write-before-hit, no-fetch-on-write, and no-write-allocate. This policy invalidates lines when there is a miss. | |||

Combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit. This policy uses a non-blocking write scheme to write to cache. It writes data to the next lower cache without modifying the data of the cache line. | |||

=Prefetching= | =Prefetching= | ||

Cache is very efficient in terms on access time once that data or instructions are in the cache. But when a process tries to access something that is not already in the cache, a cache miss occurs and those pages need to be brought into the cache from memory. Generally cache miss are expensive to the performance as the processor has to wait for that data (In parallel processing, a process can execute other tasks while it is waiting on data, but there will be some overhead for this). Prefetching is a technique in which data is brought into cache before the program needs it. In other words, it is a way to reduce cache misses. Prefetching uses some type of prediction to mechanism to anticipate the next data that will be needed and brings them into cache. It is not guaranteed that the perfected data will be used. Goal here is to reduce cache misses to improve overall performance.<br><br> | |||

Some architectures have instructions to prefetch data into cache. Programmers and compliers can insert this prefect instruction in the code. This is known as software prefetching. In hardware prefetching, processor observers the system behavior and issues requests for prefetching. Intel 8086 and Motorola 68000 were the first microprocessors to implement instruction prefetch. Graphics Processing Units benefit from prefetching due to spatial locality property of the data<sup><span id="8body">[[#8foot|[8]]]</span></sup>. | |||

==Advantages== | ==Advantages== | ||

*Improves overall performance by reducing cache misses. | |||

==Disadvantages== | ==Disadvantages== | ||

* Wastes bandwidth when prefetched data is not used. | |||

* Hardware prefetching requires complex architecture. Second order effect is cost of implementation on silicon and validation costs. | |||

* Software prefetching adds additional instructions to the program, making the program larger. | |||

* If same cache is used for prefetching, then prefetching could cause other cache blocks to be evicted. If the evicted blocks are needed, then that will generate a cache miss. This can be prevented by having a separate cache for prefetching but it comes with hardware costs. | |||

* When scheduler changes the task running on a processor, prefetched data may become useless. | |||

==Effectiveness== | ==Effectiveness== | ||

Prefetching effectiveness can be tracked by following matrices | |||

# Coverage is defined as fraction of original cache misses that were prefetched resulting in cache hit. | |||

# Accuracy is defined as fraction of prefetches that are useful. | |||

# Timeliness measures how early the prefetches arrive. | |||

Ideally, a system should have high coverage, high accuracy and optimum timeliness. Realistically, aggressive prefetches can increase coverage but decrease accuracy and vice versa. Also, if prefetching is done too early, the fetched data may have to be evicted without being used and if done too late, it can cause cache miss. | |||

==Stream Buffer Prefetching== | ==Stream Buffer Prefetching== | ||

This is a technique for prefetching which uses a FIFO buffer in which each entry is a cacheline and has address (or tag) and a available bit. System prefetches a stream of sequential data into a stream buffer and multiple stream buffers can be used to prefetch multiple streams in parallel. On a cache access, head entries of stream buffers are check for match along with cache check. If the block is not found in cache but found at the head of stream buffer, it is moved to cache and next entry in the buffer becomes the head. If the block is not found in cache or as head of buffer, data is brought from memory into cache and the subsequent address as assigned to a new stream buffer. Only the heads of the stream buffers are checked during cache access and not the whole buffer. Checking all the entries in all the buffers will increase hardware complexity. | |||

<br> | |||

<br> | |||

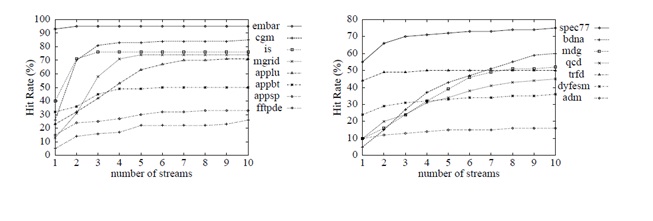

[[Image:Cache_hit_improvements.jpg|center]] | |||

<br><br> | |||

Above plot shows the cache hit improvements for with respect to number of stream buffers on different programs. Graph on left compares 8 programs from NAS suite while graph on right shows programs from Unix unity suite<sup><span id="7body">[[#7foot|[7]]]</span></sup>. | |||

==Prefetching in Parallel Computing== | ==Prefetching in Parallel Computing== | ||

On a uniprocessor system, prefetching is definitely helpful to improve performance. On a multiprocessor system prefetching is useful, but there are tighter constrains in implementing because of the fact that data will be shared between different processors or cores. In message passing parallel model, each parallel thread has its own memory space and prefetching can be implemented in same way as for uniprocessor system.<br><br> | |||

In shared memory parallel programming, multiple threads that run on different processors share common memory space. If multiple processors use common cache, then prefetching implementation is similar to uniprocessor system. Difficulties arise when each core has its own cache. Some of the case-scenarios that can occur are: | |||

# Processor P1 has prefetched some data D1 into its stream buffer but is not used it. At the same time processor P2 reads data D1 into its cache and modifies it and one of the cache coherency protocols would be used to inform processor P1 about this change. D1 is not in P1’s cache so it many simply ignore this. Now, when P1 ties to read D1, it will get the stale data from its stream buffer. One way to prevent this is by improving stream buffers so that they can modify their data just like a cache. This adds complexity to the architecture and increases cost<sup><span id="6body">[[#6foot|[6]]]</span></sup>. | |||

# Prefetching mechanism can fetch data D1, D2, D3,….D10 into P1’s buffer. Now due to parallel processing, P1 only needs to operate on D1 to D5 while P2 will operate on remaining data. Some bandwidth was wasted in fetching D6 to D10 into P1 even though it did not use it. There is a trade off to be made here, if prefetching is very conservative then it will lead to miss and if not then it will waste bandwidth. | |||

# In a multiprocessor system, if threads are not bound to a core, Operation system can rebalance the treads of different cores. This will require the prefetched buffers to be trashed. | |||

=References= | =References= | ||

<span id="1foot">[[#1body|1.]]</span> http://www.real-knowledge.com/memory.htm <br> | |||

<span id="2foot">[[#2body|2.]]</span> Computer Design & Technology- Lectures slides by Prof.Eric Rotenberg <br> | |||

| | <span id="3foot">[[#3body|3.]]</span> Fundamentals of Parallel Computer Architecture by Prof.Yan Solihin <br> | ||

<span id="4foot">[[#4body|4.]]</span> “Cache write policies and performance,” Norman Jouppi, Proc. 20th International Symposium on Computer Architecture (ACM Computer Architecture News 21:2), May 1993, pp. 191–201.<br> | |||

<span id="5foot">[[#5body|5.]]</span> Architecture of Parallel Computers, Lecture slides by Prof. Edward Gehringer <br> | |||

<span id="6foot">[[#6body|6.]]</span> “Parallel computer architecture: a hardware/software approach” by David. E. Culler, Jaswinder Pal Singh, Anoop Gupta (pg 887) <br> | |||

<span id="7foot">[[#7body|7.]]</span> “Evaluating Stream Buffers as a Secondary Cache Replacement” by Subbarao Palacharla and R. E. Kessler. (ref#2) <br> | |||

| | <span id="8foot">[[#8body|8.]]</span> http://en.wikipedia.org/wiki/Instruction_prefetch <br> | ||

<span id="9foot">[[#9body|9.]]</span> http://www.real-knowledge.com/memory.htm <br> | |||

| | |||

| | |||

| | |||

| | |||

| | |||

Latest revision as of 00:20, 28 February 2011

Cache Hierarchy

In a simple computer model, processor reads data and instructions from the memory and operates on the data. Operating frequency of CPU increased faster than the speed of memory and memory interconnects. For example, cores in Intel first generation i7 processors run at 3.2 GHz frequency, while the memory only runs at 1.3GHz frequency. Also, multi-core architecture started putting more demand on memory bandwidth. This increases the latency in memory access and CPU will have to be idle for most of the time. Due to this, memory became a bottle neck in performance.

To solve this problem, “cache” was invented. Cache is simply a temporary volatile storage space like primary memory but runs at the speed similar to core frequency. CPU can access data and instructions from cache in few clock cycles while accessing data from main memory can take more than 50 cycles. In early days of computing, cache was implemented as a stand alone chip outside the processor. In today’s processors, cache is implemented on same die as core.

There can be multiple levels of caches, each cache subsequently away from the core and larger in size. L1 is closest to the CPU and as a result, fastest to excess. Next to L1 is L2 cache and then L3. L1 cache is divided into instruction cache and data cache. This is better than having a combined larger cache as instruction cache being read-only is easy to implement while data cache is read-write.

| Company & Processor | # cores | L1 cache Per core | L1 cache Per core | L3 cache | Year |

|---|---|---|---|---|---|

| Intel Pentium Dual Core | 2 | I:32KB D:32KB | 1MB 8 way set assoc. | - | 2006 |

| Intel Xeon Clovertown | 2 | I:4*32KB D:4*32KB | 2*4MB | - | Jan 2007 |

| Intel Xeon 3200 series | 4 | - | 2*4MB | - | Jan 2007 |

| AMD Athlon 64FX | 2 | I:64KB D:4KB Both 2-way set assoc. | 1MB 16way set assoc. | - | Jan 2007 |

| AMD Athlon 64X2 | 2 | I:64KB D:4KB Both 2-way set assoc. | 512KB/1MB 16way set assoc. | 2MB | Jan 2007 |

| AMD Barcelona | 4 | I:64KB D:64KB | 512KB | 2MB Shared | Aug 2007 |

| Sun Microsystems Ultra Sparc T2 | 8 | I:16KB D:8KB | 4MB (8 banks) 16way set assoc. | - | Oct 2007 |

| Intel Xeon Wolfdale DP | 2 | D:96KB | 6MB | - | Nov 2007 |

| Intel Xeon Hapertown | 4 | D:96KB | 2*6MB | - | Nov 2007 |

| AMD Phenom | 4 | I:64KB D:64KB | 512KB | 2MB Shared | Nov 2007 Mar 2008 |

| Intel Core 2 Duo | 2 | I:32KB D:32KB | 2/4MB 8 way set assoc. | - | 2008 |

| Intel Penryn Wolfdale DP | 4 | - | 6-12MB | 6MB | Mar 2008 Aug 2008 |

| Intel Core 2 Quad Yorkfield | 4 | D:96KB | 12MB | - | Mar 2008 |

| AMD Toliman | 3K10 | I:64KB D:64KB | 512KB | 2MB Shared | Apr 2008 |

| Azul Systems Vega3 7300 Series | 864 | 768GB | - | - | May 2008 |

| IBM RoadRunner | 8+1 | 32KB | 512KB | - | Jun 2008 |

Cache Write Policies

Write hit policies

- Write-through: Also known as store-through, this policy will write to main memory whenever a write is performed to cache.

- Advantages:

- Easy to implement

- Main memory has most recent copy of the data

- Read misses never result in writes to main memory

- Disadvantages:

- Every write needs to access main memory

- Bandwidth intensive

- Writes are slower

- Advantages:

- Write-back: Also known as store-in or copy-back, this policy will write to main memory only when a block of data is purged from the cache storage.

- Advantages:

- Writes are as fast as the speed of the cache memory

- Multiple writes to a block require one write to main memory

- Less bandwidth intensive

- Disadvantages:

- Harder to implement

- Main memory may not be consistent with cache

- Reads that result in data replacement may cause dirt blocks to be written to main memory

- Advantages:

Write miss policies

- Write-allocate vs Write no-allocate: When a write misses in the cache, there may or may not be a line in the cache allocated to the block. For write-allocate, there will be a line in the cache for the written data. This policy is typically associated with write-back caches. For no-write-allocate, there will not be a line in the cache.

- Fetch-on-write vs no-fetch-on-write: The fetch-on-write will cause the block of data to be fetched from a lower memory hierarchy if the write misses. The policy fetches a block on every write miss.

- Write-before-hit vs no-write-before-hit: The write-before-hit will write data to the cache before checking the cache tags for a match. In case of a miss, the policy will displace the block of data already in the cache.

Combination Policies

- Write-validate: It is a combination of no-fetch-on-write and write-allocate[4]. The policy allows partial lines to be written to the cache on a miss. It provides for better performance as well as works with machines that have various line sizes and does not add instruction execution overhead to the program being run. Write-validate requires that the lower level system memory can support writes of partial lines.

- Write-invalidate: This policy is a combination of write-before-hit, no-fetch-on-write, and no-write-allocate[4]. This policy invalidates lines when there is a miss.

- Write-around: Combination of no-fetch-on-write, no-write-allocate, and no-write-before-hit[4]. This policy uses a non-blocking write scheme to write to cache. It writes data to the next lower cache without modifying the data of the cache line.

Prefetching

Cache is very efficient in terms on access time once that data or instructions are in the cache. But when a process tries to access something that is not already in the cache, a cache miss occurs and those pages need to be brought into the cache from memory. Generally cache miss are expensive to the performance as the processor has to wait for that data (In parallel processing, a process can execute other tasks while it is waiting on data, but there will be some overhead for this). Prefetching is a technique in which data is brought into cache before the program needs it. In other words, it is a way to reduce cache misses. Prefetching uses some type of prediction to mechanism to anticipate the next data that will be needed and brings them into cache. It is not guaranteed that the perfected data will be used. Goal here is to reduce cache misses to improve overall performance.

Some architectures have instructions to prefetch data into cache. Programmers and compliers can insert this prefect instruction in the code. This is known as software prefetching. In hardware prefetching, processor observers the system behavior and issues requests for prefetching. Intel 8086 and Motorola 68000 were the first microprocessors to implement instruction prefetch. Graphics Processing Units benefit from prefetching due to spatial locality property of the data[8].

Advantages

- Improves overall performance by reducing cache misses.

Disadvantages

- Wastes bandwidth when prefetched data is not used.

- Hardware prefetching requires complex architecture. Second order effect is cost of implementation on silicon and validation costs.

- Software prefetching adds additional instructions to the program, making the program larger.

- If same cache is used for prefetching, then prefetching could cause other cache blocks to be evicted. If the evicted blocks are needed, then that will generate a cache miss. This can be prevented by having a separate cache for prefetching but it comes with hardware costs.

- When scheduler changes the task running on a processor, prefetched data may become useless.

Effectiveness

Prefetching effectiveness can be tracked by following matrices

- Coverage is defined as fraction of original cache misses that were prefetched resulting in cache hit.

- Accuracy is defined as fraction of prefetches that are useful.

- Timeliness measures how early the prefetches arrive.

Ideally, a system should have high coverage, high accuracy and optimum timeliness. Realistically, aggressive prefetches can increase coverage but decrease accuracy and vice versa. Also, if prefetching is done too early, the fetched data may have to be evicted without being used and if done too late, it can cause cache miss.

Stream Buffer Prefetching

This is a technique for prefetching which uses a FIFO buffer in which each entry is a cacheline and has address (or tag) and a available bit. System prefetches a stream of sequential data into a stream buffer and multiple stream buffers can be used to prefetch multiple streams in parallel. On a cache access, head entries of stream buffers are check for match along with cache check. If the block is not found in cache but found at the head of stream buffer, it is moved to cache and next entry in the buffer becomes the head. If the block is not found in cache or as head of buffer, data is brought from memory into cache and the subsequent address as assigned to a new stream buffer. Only the heads of the stream buffers are checked during cache access and not the whole buffer. Checking all the entries in all the buffers will increase hardware complexity.

Above plot shows the cache hit improvements for with respect to number of stream buffers on different programs. Graph on left compares 8 programs from NAS suite while graph on right shows programs from Unix unity suite[7].

Prefetching in Parallel Computing

On a uniprocessor system, prefetching is definitely helpful to improve performance. On a multiprocessor system prefetching is useful, but there are tighter constrains in implementing because of the fact that data will be shared between different processors or cores. In message passing parallel model, each parallel thread has its own memory space and prefetching can be implemented in same way as for uniprocessor system.

In shared memory parallel programming, multiple threads that run on different processors share common memory space. If multiple processors use common cache, then prefetching implementation is similar to uniprocessor system. Difficulties arise when each core has its own cache. Some of the case-scenarios that can occur are:

- Processor P1 has prefetched some data D1 into its stream buffer but is not used it. At the same time processor P2 reads data D1 into its cache and modifies it and one of the cache coherency protocols would be used to inform processor P1 about this change. D1 is not in P1’s cache so it many simply ignore this. Now, when P1 ties to read D1, it will get the stale data from its stream buffer. One way to prevent this is by improving stream buffers so that they can modify their data just like a cache. This adds complexity to the architecture and increases cost[6].

- Prefetching mechanism can fetch data D1, D2, D3,….D10 into P1’s buffer. Now due to parallel processing, P1 only needs to operate on D1 to D5 while P2 will operate on remaining data. Some bandwidth was wasted in fetching D6 to D10 into P1 even though it did not use it. There is a trade off to be made here, if prefetching is very conservative then it will lead to miss and if not then it will waste bandwidth.

- In a multiprocessor system, if threads are not bound to a core, Operation system can rebalance the treads of different cores. This will require the prefetched buffers to be trashed.

References

1. http://www.real-knowledge.com/memory.htm

2. Computer Design & Technology- Lectures slides by Prof.Eric Rotenberg

3. Fundamentals of Parallel Computer Architecture by Prof.Yan Solihin

4. “Cache write policies and performance,” Norman Jouppi, Proc. 20th International Symposium on Computer Architecture (ACM Computer Architecture News 21:2), May 1993, pp. 191–201.

5. Architecture of Parallel Computers, Lecture slides by Prof. Edward Gehringer

6. “Parallel computer architecture: a hardware/software approach” by David. E. Culler, Jaswinder Pal Singh, Anoop Gupta (pg 887)

7. “Evaluating Stream Buffers as a Secondary Cache Replacement” by Subbarao Palacharla and R. E. Kessler. (ref#2)

8. http://en.wikipedia.org/wiki/Instruction_prefetch

9. http://www.real-knowledge.com/memory.htm