CSC/ECE 506 Spring 2015/10a dr: Difference between revisions

No edit summary |

|||

| (3 intermediate revisions by the same user not shown) | |||

| Line 30: | Line 30: | ||

= Sequential Consistency Examples = | = Sequential Consistency Examples = | ||

In Example 1 below we provide a run through of a typical program where several reads and writes of several variables occur in a sequentially consistent manner. This diagram of processor execution is motivated by Lamport | In Example 1 below we provide a run through of a typical program where several reads and writes of several variables occur in a sequentially consistent manner. This diagram of processor execution is motivated by Lamport <ref>Leslie Lamport, "How to Make a Multiprocessor Computer That Correctly Executes Multiprocess Programs", IEEE Trans. Comput. C-28,9 (Sept. 1979), 690-691.</ref>. In Example 1 below the example begins with processor 1 doing a write of 1 to variable x. Then processor 2 then performs two back-to-back reads of variable x. Note that the second read performed by P2 occurs at the same time as P3 reads the 1st value of x as well. Finally, P3 writes value 2 to y which is then read by P1. | ||

Example 1: Sequential Consistency[4] | Example 1: Sequential Consistency[4] | ||

| Line 125: | Line 125: | ||

This example demonstrates a violation of the write-atomicity property of SC. | This example demonstrates a violation of the write-atomicity property of SC. | ||

Example 5. | Example 5. | ||

{| | {| | ||

| Line 138: | Line 139: | ||

The programmer’s intuition is that the write by processor P0 should be propagated to both P1 and P2 instantaneously. Therefore c should be printed as 1. However it may happen that a is propagated to P1 before P2 and then P1 writes b=1 and datum1ready1=1. As a result P2 will print c = 0 as a is still not propagated to P2 yet. | The programmer’s intuition is that the write by processor P0 should be propagated to both P1 and P2 instantaneously. Therefore c should be printed as 1. However it may happen that a is propagated to P1 before P2 and then P1 writes b=1 and datum1ready1=1. As a result P2 will print c = 0 as a is still not propagated to P2 yet. | ||

[[Image:Figure5_write_buffer.png|frame|center|Write Buffer reordering]] | |||

Given all of the problems above with different optimizations how does one guarantee both program order and write atomicity. We begin with examining how to ensure program order in a bus-based SMP system. In order to guarantee program order, typically requires the system to receive an acknowledgement that the previous operation has completed before moving on to the next operation. | Given all of the problems above with different optimizations how does one guarantee both program order and write atomicity. We begin with examining how to ensure program order in a bus-based SMP system. In order to guarantee program order, typically requires the system to receive an acknowledgement that the previous operation has completed before moving on to the next operation. | ||

| Line 194: | Line 197: | ||

Speculating is store is however more difficult as stores are much harder to cancel if speculated wrongly than loads. The reason is that after accessing the cache, store already deposits the new value in the cache and it propagates to the main memory and other processors and as a result cancelling a store becomes a much harder problem. | Speculating is store is however more difficult as stores are much harder to cancel if speculated wrongly than loads. The reason is that after accessing the cache, store already deposits the new value in the cache and it propagates to the main memory and other processors and as a result cancelling a store becomes a much harder problem. | ||

Even with these improvements SC is still slower than other relaxed consistency models | Even with these improvements SC is still slower than other relaxed consistency models <ref>Memory Consistency Models: A Tutorial, http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>. The primary problem with Sequential Consistency (SC) is that it does not allow program instruction reordering ([[Instruction Level Parallelism]]) by the processor or by the compiler to occur to improve performance and as a result the SC is not implemented by any major hardware platforms <ref>Memory Consistency Models: A Tutorial, http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>. | ||

= Current work on Sequential Consistency = | = Current work on Sequential Consistency = | ||

There are two main areas of active research in SC. One area of research in SC is in finding new ways to optimize SC. One such implementation called BulkSC improves on SC, and provides an implementation comparable to Release Consistency by dynamically grouping sets of consecutive instructions into chunks that appear to execute atomically and in isolation. Then the hardware enforces SC at the coarse grain of chunks rather than at the conventional fine grain of memory accesses. It can be realized via simple hardware | There are two main areas of active research in SC. One area of research in SC is in finding new ways to optimize SC. One such implementation called BulkSC improves on SC, and provides an implementation comparable to Release Consistency by dynamically grouping sets of consecutive instructions into chunks that appear to execute atomically and in isolation. Then the hardware enforces SC at the coarse grain of chunks rather than at the conventional fine grain of memory accesses. It can be realized via simple hardware <ref>Bulk SC: Bulk Enforcement of Sequential Consistency, https://homes.cs.washington.edu/~luisceze/publications/isca07_bulksc.pdf</ref>. | ||

Another area of work is in verifying sequential consistency in implementations of compilers and hardware. This turns out to be a difficult problem | Another area of work is in verifying sequential consistency in implementations of compilers and hardware. This turns out to be a difficult problem <ref>Implementing Sequential Consistency In Cache-Based Systems, Sarita Adve Mark Hill, Proceedings of the 1990 Internation Conference on Parallel Processing</ref>. One area of research is to find ways to quickly and easily confirm an implementation will confirm to SC. | ||

= 10 Question Quiz = | = 10 Question Quiz = | ||

Latest revision as of 02:54, 6 April 2015

Writeup Topic: https://docs.google.com/document/d/1ETzpewRAcnL82uzc-MB2s47EmflyWkywh-tNlLtVjzY/edit

Sequential Consistency

Introduction

Sequential consistency is one of the consistency models used in the domain of concurrent computing (e.g. in distributed shared memory, distributed transactions, etc.).

It was first defined as the property that requires that

- "... the result of any execution is the same as if the operations of all the processors were executed in some sequential order, and the operations of each individual processor appear in this sequence in the order specified by its program."<ref>Leslie Lamport, "How to Make a Multiprocessor Computer That Correctly Executes Multiprocess Programs", IEEE Trans. Comput. C-28,9 (Sept. 1979), 690-691.</ref>

To understand this statement, it is necessary to consider a computer composed of several processors executing a concurrent system: some order of execution for the processors (seeing as sequential machines), and for each one of these processors, the execution order for the instructions must be the same specified by the concurrent program.

The system provides sequential consistency if every node of the system sees the (write) operations on the same memory part (page, virtual object, cell, etc.) in the same order, although the order may be different from the order as defined by real time (as observed by a hypothetical external observer or global clock) of issuing the operations.

The sequential consistency is weaker than strict consistency, which requires a read operation from a location to return the value of the last write operation to that location. Strict consistency would demand that operations are seen in order in which they were actually issued.

This definition of sequential consistency leads to two requirements (1) maintaining program order among operations from individual processors, and (2) maintaining a single sequential order among operations from all processors. <ref>Sarita V. Adve, Kourosh Gharachorloo, "Shared Memory Consistency Models: A Tutorial" http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>

Programmer’s Intuition vs Sequential Consistency

Sequential consistency is in general the closest model to what programmers implicitly expect from a processor; however there are several problems with this expectation. Below we define expectations that programmers typically have with respect to how they expect their programs to execute. Programmers typically have two expectations when they execute a program on a typical processor.

Programmer’s intuition assumption 1: Program Order

- Implicitly, programmers expect the order in which memory accesses are executed in a thread to follow the order in which they occur in the source code. <ref>Yan Solihin, "Fundamentals of Parallel Computer Architecture: Multichip and Mutlicore Systems" Programmers Intuition, 2008-2009, 285</ref>

Programmer’s intuition assumption 2: Atomicity

- Additionally, programmers expect that memory accesses coming out of a processor should be performed in program order and each of them should be performed atomically <ref>Yan Solihin, "Fundamentals of Parallel Computer Architecture: Multichip and Mutlicore Systems" Programmers Intuition, 2008-2009, 285</ref>

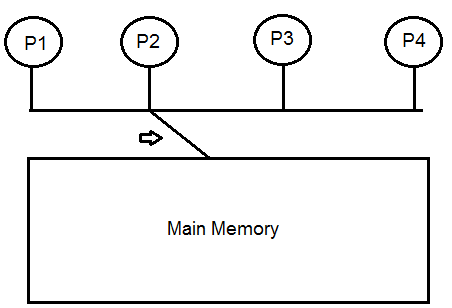

These two expectations lead us to the following conceptual model of a programmer’s intuition. The basic idea is that there is one large main memory which switches between processors in a global order committing operations as it goes.

These 'programmer’s intuitive' expectations closely match Sequential Consistency.

Sequential Consistency Examples

In Example 1 below we provide a run through of a typical program where several reads and writes of several variables occur in a sequentially consistent manner. This diagram of processor execution is motivated by Lamport <ref>Leslie Lamport, "How to Make a Multiprocessor Computer That Correctly Executes Multiprocess Programs", IEEE Trans. Comput. C-28,9 (Sept. 1979), 690-691.</ref>. In Example 1 below the example begins with processor 1 doing a write of 1 to variable x. Then processor 2 then performs two back-to-back reads of variable x. Note that the second read performed by P2 occurs at the same time as P3 reads the 1st value of x as well. Finally, P3 writes value 2 to y which is then read by P1.

Example 1: Sequential Consistency[4]

| P1: | W(x)1 | R(y)2 | ||

| P2: | R(x)0 | R(x)1 | ||

| P3: | R(x)1 | W(y)2 |

In Example 2 below we provide an example execution of several writes to two variables that is not sequentially consistent. In the program flow below the issue that occurs is that the final write to (y)2 is read by P1 but P1 then reads the original value of (y)0 which violates the SC model because the initial write shows up again.

Example 2: Program flow that is not Sequential Consistency

| P1: | W(x)1 | R(y)2 R(y)0 | ||

| P2: | R(x)1 | |||

| P3: | R(x)1 | W(y)2 |

Non-determinism

Even though Sequential consistency guarantees that writes will appear in program to each processor this does not necessarily guarantee that the outcome of a program is deterministic without synchronization. Below is an example of two executions of the same two threads of code. The first run through executes S1 and S2 first, then S3 and S4, providing sequential consistency; however, in the second run S1 executes, then S3, then S2, then S4 which will generate a different output.

Example 7.

| P1 | P2 | ||

| S1 | a = 0 | S3 | b = 0 |

| S2 | a = 1 | S4 | c = a + b |

| S3 | b = 2 | S5 | print(c) |

Possible Non deterministic output Execution 1.

| P1: | S1 | S2 | |||

| P2: | S3 | S4 |

Prints 0 as output

Execution 2.

| P1: | S1 | S2 | |||

| P2: | S3 | S4 |

Prints 1 as output

Implementing Sequential Consistency

How could something like Example 2 from the above section occur? We will present several examples of when Sequential Consistency can be violated in a two different types of multiprocessor machines. First we will start with a shared-memory bus-based SMP example with no cache. We will also assume in the following examples that no form of synchronization has been added to the code.

In the first example we will look at we will assume that both values start out as zero.

Example 3. Instruction Reorder between Processors

| P1 | P2 |

|---|---|

| a = 1 | while (a == 0) |

| data = 5 | print (data) |

It is actually possible in the above code for the code to print 5 or 0. How could this happen? Although it is our programmer’s intuition to think that the code must execute S1, S2, S3, S4, so that the value of data is printed as 5; there exists no synchronization or control flows here to force that to happen. Instead, because each processor is executing instructions independently; S1 executes then S3, then S4, S2 to print the value of data as 0.

Example 4.

| P1 | P2 |

|---|---|

| a = 1 | b = 1 |

| if (b == 0) | if (a == 0) |

| {critical section} | {critical section} |

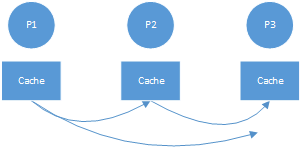

What could happen that could cause both of the critical sections to be entered at once? Write-buffer-reordering Some systems allow processors to re-order writes, which means that when a write occurs, the processor does not wait for it to complete instead it goes to the next read operation, as long as that read operation doesn’t read the same location in memory. In this example, S1 and S3 execute both writing to the bus in their independent processor. However, before those instructions can complete, the reads in statements S2 and S4 go racing by their processor’s write buffers to the shared bus, reading the opposite processors’ variable. Both processors enter the critical section.

This example demonstrates a violation of the write-atomicity property of SC.

Example 5.

| P0 | P1 | P2 |

|---|---|---|

| a = 1 | while(!datumready){}; | while(!datum1read){}; |

| datumready=1; | b=1; | c = a * b; |

| datum1ready = 1; | print(c); |

The programmer’s intuition is that the write by processor P0 should be propagated to both P1 and P2 instantaneously. Therefore c should be printed as 1. However it may happen that a is propagated to P1 before P2 and then P1 writes b=1 and datum1ready1=1. As a result P2 will print c = 0 as a is still not propagated to P2 yet.

Given all of the problems above with different optimizations how does one guarantee both program order and write atomicity. We begin with examining how to ensure program order in a bus-based SMP system. In order to guarantee program order, typically requires the system to receive an acknowledgement that the previous operation has completed before moving on to the next operation. What does it mean for the previous operation to have completed? Obviously, in the case of a load in a bus-based system, this is simple, the load is complete when the operand returns the memory address either from the cache or from the main memory. In the case of store operation however, it is less obvious. Let’s assume that we have a bus-based SMP system with two processors and each processor has its own cache. Further, let’s assume that the cache is using an invalidation based cache. In this case it is not enough for the store to be considered complete when the cache line is written to the cache, this is because the other processor could have a read occurring at the same time and as a result could read the wrong value of the variable. How? Let’s say processor P1 and P2 exist in an interconnection network, P1 has a variable in it’s cache A, let’s assume that P2 begins by making a write to A and B. Additionally, let’s assume that the change to A by P2 is propagated to memory but the change to B has only been invalidated in P2’s cache and is on its way through the network. P1 could now easily read the new value of A but read the old value of B from it’s cache, which would violate SC. This could have been avoided if P2 was forced to wait for acknowledgements before sending its next store. Therefore, according to SC, a store operation must await ACKs from each other cache-controllers before it is considered complete. This is true of a typical interconnection network processor, it must send out the store request and wait for the other cache-controllers (and memory controller) to snoop the exclusive bus read, and send out their ACKs to the request. However, on a bus-based multiprocessor the store is considered complete when the exclusive bus read (BusRdX) reaches the bus. This is because the bus serves as a synchronization medium and we know that when it reaches the bus that all other processors will have seen this BusRdX in order.

Consider the following code given in example 6

Example 6. Implementing Sequential Consistency

| S1: | LD R1, Z |

| S2: | LD R2, Y |

| S3: | ST C, R1 |

| S4: | ST D, R2 |

Sequential Consistency ensures that each memory access must complete before the next one (in program order) can start. Hence S2 (older load) cannot be issued to the cache until S1 (younger load) has been completed. Even in the case in which S1 suffers a cache miss while S2 will have a cache hit, due to strict ordering, the second load cannot be allowed to issue to the cache even though the block containing Y is in the cache. Therefore the second load must wait until the first load finishes its cache miss and obtains the value from the cache. In the same way, store instruction S3 cannot obtain the bus or cache until the load in S2 has been completed. Similarly store instruction of S4 cannot be allowed to access the cache until the store in S3 has generated a request on the bus. Bypassing a store value to a younger and dependent load is not allowed in SC.

Hence SC ensures the ordering of loads/stores as follows-

| LD⇒ LD |

| LD⇒ ST |

| ST⇒ LD |

| ST⇒ ST |

This causes a huge restriction on performance as many techniques which exploit out-of-order execution of instructions are disabled. Hence SC cannot exploit instruction level parallelism (ILP) to increase performance.

Improving the performance of Sequential Consistency

Via prefetching

The key to improving the performance of an SC implementation is to make the execution of memory accesses faster and allow memory accesses to be overlapped with respect to one another. For example, a load that hits in the cache can complete sooner than a load that misses in the cache and a store that finds the data block in the cache in an exclusive/modified state does not need to go to the bus.

To maximize the probability that a load hits in the cache and a store finds the block in the cache in an exclusive or modified state, we can issue prefetching requests as soon as effective addresses of loads and stores are available or predicted. When the older loads or stores are completed, the load which has already issued a prefetch can access the cache and completes sooner due to cache hit. Similarly when a store’s effective address is generated, we can issue a prefetch exclusive even when there are older stores or loads that have not completed.

However, there are two problems with this technique-

Prefetching too early- If we prefetch too long before the value is needed, another processor could store to the prefetched location, overwriting the prefetched value.

Prefetching too late- If the prefetched value does not get there before the value is needed, it could hurt performance, because the process still has to wait for the value to be fetched from memory.

Via speculating

Another technique for improving performance of an SC implementation is to rely on speculative accesses. We can overlap the execution of a load with the execution of an older load by speculatively assuming that the older load executes atomically. With speculation, we can allow younger loads to access the cache even if the older load has not obtained data from the cache. If the block obtained by the younger load has not been invalidated by the time the older load completes, then the value obtained the younger load is same if it had waited to issue to the cache after older load completed and speculation is successful without breaking atomicity.

However if block read by the second load has been invalidated then the illusion of atomicity is broken, and hence speculation has failed and the younger load must be re-executed. This incurs a performance penalty for having to flush the pipeline and re-execute a load. Similarly a younger load can be speculative with respect to an older store that is not yet completed. This allows the use of a write-buffer in a system that enforces SC.

Speculating is store is however more difficult as stores are much harder to cancel if speculated wrongly than loads. The reason is that after accessing the cache, store already deposits the new value in the cache and it propagates to the main memory and other processors and as a result cancelling a store becomes a much harder problem. Even with these improvements SC is still slower than other relaxed consistency models <ref>Memory Consistency Models: A Tutorial, http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>. The primary problem with Sequential Consistency (SC) is that it does not allow program instruction reordering (Instruction Level Parallelism) by the processor or by the compiler to occur to improve performance and as a result the SC is not implemented by any major hardware platforms <ref>Memory Consistency Models: A Tutorial, http://www.hpl.hp.com/techreports/Compaq-DEC/WRL-95-7.pdf</ref>.

Current work on Sequential Consistency

There are two main areas of active research in SC. One area of research in SC is in finding new ways to optimize SC. One such implementation called BulkSC improves on SC, and provides an implementation comparable to Release Consistency by dynamically grouping sets of consecutive instructions into chunks that appear to execute atomically and in isolation. Then the hardware enforces SC at the coarse grain of chunks rather than at the conventional fine grain of memory accesses. It can be realized via simple hardware <ref>Bulk SC: Bulk Enforcement of Sequential Consistency, https://homes.cs.washington.edu/~luisceze/publications/isca07_bulksc.pdf</ref>. Another area of work is in verifying sequential consistency in implementations of compilers and hardware. This turns out to be a difficult problem <ref>Implementing Sequential Consistency In Cache-Based Systems, Sarita Adve Mark Hill, Proceedings of the 1990 Internation Conference on Parallel Processing</ref>. One area of research is to find ways to quickly and easily confirm an implementation will confirm to SC.

10 Question Quiz

- Which of the following is true of memory consistency?

A. It is the same as cache coherence B. Memory consistency deals with how accesses (loads and stores) to any memory address are ordered with respect to one and other

- True or False - Sequential Consistency allows exploitation of instruction level parallelism(ILP)

A store is considered complete: A. On an interconnection network processor when the BusRdX hits the interconnect B. On a bus based SMP system when the BusRdX is no longer speculative C. On a bus-based SMP system when the BusRdX has been ACKed by all processors D. On a bus-based SMP system when the BusRdX hits the shared bus.

- True or False - A program that is sequentially consistent is also deterministic

- In example #6, what are the possible values of c printed by Processor P2, if write atomicity is not enforced?

- True or False - A SC System can allow write-reordering?

- Is the following Sequentially Consistent?

| P1: | W(x)1 R(y)2 |

| P2: | R(x)0 R(x)1 |

| P3: | R(x)1 W(y)2 |

- Is the following Sequentially Consistent?

| P1: | W(x)1 R(y)2 R(y)0 |

| P2: |

|

| P3: | R(x)1 W(y)2 |

- What are the problems that might occur if block is not prefetched properly?

- What could be the problem with speculating a store?

Answer Key 1.) b 2.) false 3.) d 4.) false, could be true if synchronization is enforced. 5.) 0 & 1 6.) false 7.) Yes 8.) No because Processor 1 seeing the final value of y as 0 and not 2 9)prefetching to early or prefetching too late (see above section on ‘Improving Sequential Consistency’) 10.) Cancelling a store is much harder than a load and therefore it not not generally implemented.

See also

- Linearizability

- Serializability

- https://corensic.wordpress.com/2011/06/13/understanding-violations-of-sequential-consistency/

References

<references/>