CSC/ECE 506 Spring 2015/1b DL


<p>[http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2014/1b_ms Previous 2014/1b submission]</p>

== Introduction ==

<p>A [http://dictionary.reference.com/browse/supercomputer supercomputer] is a computer at the leading edge of processing capacity, designed specifically for high calculation speed. Such machines first appeared in the early 1960s. Systems with a few processors were in use in the 1970s, the processor count grew to thousands during the 1990s, and by the end of the 20th century massively parallel supercomputers with tens of thousands of processors and extremely high processing speeds had come into prominence<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. Supercomputers play an important role in computational science and cryptanalysis, and they are also used in quantum mechanics, weather forecasting, climate research, and oil and gas exploration. Early supercomputer architectures exploited parallelism at the processor level, for example through vector processing, and were followed by shared-memory multiprocessor systems. As demand grew for more complex and faster computations, shared-memory architectures could no longer supply enough processing capability, which paved the way for hybrid structures such as clusters of multi-node mesh networks, where each node is itself a multiprocessing element<ref>http://en.wikipedia.org/wiki/Supercomputer#Performance_measurement</ref>.</p>

== Supercomputing Applications ==

<p>Supercomputers are used by many organizations in both the private and public sectors. In the public sector, the U.S. federal government uses supercomputing resources for medical research, weather simulation, and space technology development. The private sector has also seen growing use of supercomputers, which are now common in academic and financial institutions as well as in the telecommunications and manufacturing industries. Some of today's applications are described below.</p>

<p>Supercomputing allows scientists to run simulations, based on statistical modelling of large data sets, of phenomena that would otherwise be impossible to study in real time. For instance, the [http://en.wikipedia.org/wiki/Human_Brain_Project Human Brain Project] is a research project that aims to simulate brain activity in order to advance research into neurology and brain development. According to one report, Japan's K computer (ranked 4th on the TOP500 November 2014 list, at 10.5 petaflops) took 40 minutes to simulate a single second of brain activity.<ref>http://www.livescience.com/42561-supercomputer-models-brain-activity.html</ref></p>

<p>Supercomputing is also prominent in weather forecasting, where meteorological models are becoming increasingly data intensive<ref>http://www.cray.com/blog/the-critical-role-of-supercomputers-in-weather-forecasting</ref>. A typical workload is composed of data assimilation, deterministic forecast models and ensemble forecast models. In addition, specialized models may be applied to areas such as extreme events, air quality, ocean waves and road conditions. Advances in supercomputing make quicker and more accurate responses to sudden changes in weather and climate possible.<ref>http://www.cray.com/blog/the-critical-role-of-supercomputers-in-weather-forecasting</ref></p>


=== On-demand supercomputing and the cloud ===

<p>A current trend in the cloud computing<ref>http://en.wikipedia.org/wiki/Cloud_computing</ref> industry is to make supercomputing resources available for everyday computing use. Through cloud environments, large analytical calculations on supercomputers have become accessible to smaller companies, and this is expected to change the dynamics of process manufacturing in these small-scale industries. On-demand supercomputing should also let companies and enterprises significantly shorten the development and evaluation time of their prototypes, with financial benefits for these companies and quicker product deployment in their respective markets<ref>http://arstechnica.com/information-technology/2013/11/18-hours-33k-and-156314-cores-amazon-cloud-hpc-hits-a-petaflop/</ref>.</p>

<p>The [http://www.open-power.org/ OpenPOWER] Foundation is a prominent example when discussing future growth trends. It is a consortium initiated by IBM, with Google, [http://www.tyan.com/ Tyan], [http://www.nvidia.com/ Nvidia] and [http://www.mellanox.com/ Mellanox] as its founding members. IBM is opening up its POWER Architecture technology under a liberal license, which will enable vendors to build and configure their own customized servers and to design networking and storage hardware for cloud computing and data centers<ref>http://en.wikipedia.org/wiki/OpenPOWER_Foundation</ref>.</p>

<p>Although the cloud has become a viable platform for medium-scale applications, interconnect performance and bandwidth scalability are still major bottlenecks for large-scale, tightly coupled high performance computing applications. Growing interest in, and migration to, optical networks offers some hope, but it is unlikely that the cloud will ever replace supercomputers<ref>http://www.computer.org/portal/web/computingnow/archive/september2012</ref><ref>http://gigaom.com/2011/11/14/how-the-cloud-is-reshaping-supercomputers/</ref>.</p>

== Characteristics of supercomputers ==

[[Image:4.jpg|thumb|right|center|upright|500px|<b>Tianhe-2 - The fastest supercomputer as of 2013</b> http://www.engineering.com/DesignerEdge/DesignerEdgeArticles/ArticleID/6676/Tianhe-2-Tops-Supercomputer-List.aspx]]

<p>The definition of a supercomputer established in the previous sections gives insight into the applications and features of such computing systems. Certain patterns, or combinations of features, emerge that are unique to supercomputers. This section discusses those features and characteristics.<ref>http://royal.pingdom.com/2012/11/13/new-top-supercomputer-dumps-cores-and-increases-power-efficiency/</ref></p>

=== Taxonomy ===

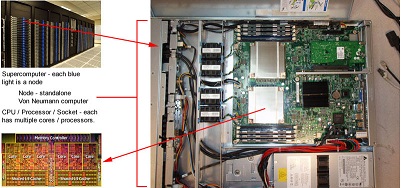

<p>A supercomputer is a collection of networked nodes, each containing several CPUs (central processing units). Each CPU in turn contains multiple cores, and each core can execute its own thread. Supercomputers process data in parallel by having many processors run different sections of the same program concurrently, which shortens run times, while multi-core processors additionally let each processor handle several tasks at once. The largest supercomputers can have hundreds of thousands of processors<ref>https://computing.llnl.gov/tutorials/parallel_comp</ref> and reach speeds in the petascale range.</p>

<p>The majority of supercomputers run some variant of a Linux distribution as their operating system, typically optimized for the node hardware. Linux is chosen for its scalability and ease of configuration, which make deploying hundreds of thousands of CPUs significantly simpler. It is also cost-effective and gives administrators flexible customization options, so an installation can be tailored to its application environment<ref>http://www.unixmen.com/why-do-super-computers-use-linux</ref>.</p>

<p>The complex mathematical tasks demanded by applications of the type discussed earlier are beyond the capabilities of a single processing unit or a small group of them. A large number of processing units must cooperate to complete tasks of this magnitude in a timely fashion. This concept of a '''massively parallel system''' has become an inherent feature of contemporary supercomputers. To run a task in parallel across nodes, supercomputers rely on a message-passing library (MPI being the de facto standard) for communication between the processes of a given task, while OpenMP is the standard for shared-memory parallelism within a node, supporting loop-level, nested, and task-level parallelism as described in the OpenMP 4.0 specification<ref>https://computing.llnl.gov/tutorials/parallel_comp</ref>.</p>
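<p>Loop-level parallelism of the OpenMP kind can be illustrated with a small sketch. The loop's iterations are divided into contiguous, nearly equal chunks, one per thread, and the partial results are combined at the end, roughly what <code>#pragma omp parallel for schedule(static) reduction(+:total)</code> does in C. The helper names (<code>static_chunks</code>, <code>parallel_sum_of_squares</code>) are hypothetical, and the exact way OpenMP splits iterations under <code>schedule(static)</code> is implementation-defined, so this shows one plausible split. Python threads do not speed up CPU-bound work because of the interpreter lock; the sketch shows the decomposition pattern only.</p>

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def static_chunks(n: int, p: int) -> List[Tuple[int, int]]:
    """Split iterations 0..n-1 into p contiguous, nearly equal [start, end) ranges.

    Hypothetical helper: one plausible reading of OpenMP's schedule(static);
    the real split is implementation-defined.
    """
    if p <= 0:
        raise ValueError("need at least one thread")
    base, extra = divmod(n, p)
    bounds, start = [], 0
    for t in range(p):
        size = base + (1 if t < extra else 0)  # the first `extra` threads get one more
        bounds.append((start, start + size))
        start += size
    return bounds


def parallel_sum_of_squares(n: int, p: int) -> int:
    """Sum i*i for i in 0..n-1, with each worker handling one chunk of the loop."""

    def work(bounds: Tuple[int, int]) -> int:
        lo, hi = bounds
        return sum(i * i for i in range(lo, hi))

    with ThreadPoolExecutor(max_workers=p) as pool:
        partials = list(pool.map(work, static_chunks(n, p)))
    return sum(partials)  # combine partial results, like reduction(+:total)


if __name__ == "__main__":
    print(static_chunks(10, 3))
    print(parallel_sum_of_squares(100, 4))
```

<p>Run as a script, this prints <code>[(0, 4), (4, 7), (7, 10)]</code> and then <code>328350</code>, the same value a serial loop produces.</p>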


[[File:NodeSocketCores.jpg|frame|left|Taken from https://computing.llnl.gov/tutorials/parallel_comp/]]

=== Design Considerations ===

*'''Processing Speed:'''

<p>The common feature uniting the [http://en.wikipedia.org/wiki/Human_Genome_Project Human Genome Project], the [http://home.web.cern.ch/topics/large-hadron-collider Large Hadron Collider] and other challenging scientific experiments of our era has been a demand for massive computing power. The unprecedented growth of our knowledge base, coupled with the IT revolution, has made processing speed one of the most critical parameters in characterizing supercomputers. The demand for ever-increasing speed is evident from the fact that the fastest supercomputer today is roughly a thousand times faster than the fastest supercomputer of a decade ago. As more data-intensive applications emerge, supercomputers and their usage will continue to grow in fields such as meteorology, defense and biology.</p>
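<p>Taking the "thousand times faster in a decade" figure at face value, the implied average yearly growth factor ''r'' follows from ''r''<sup>10</sup> = 1000. This is a back-of-the-envelope calculation under that assumption, not a measured trend:</p>

```latex
r^{10} = 1000 \quad\Longrightarrow\quad r = 1000^{1/10} = 10^{3/10} \approx 1.995
```

<p>In other words, the top speed would have roughly doubled every year over that decade.</p>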

*'''Power consumption:'''

<p>A system comprising hundreds of thousands of processing elements demands a huge amount of electrical power. As a result, power consumption is an important factor in supercomputer design, and performance per watt is a critical metric. With the rapid increase in the use of such systems, strides are being made towards more power-efficient designs<ref>http://asmarterplanet.com/blog/2010/07/energy-efficiency-key-to-supercomputing-future.html</ref>. Alongside the TOP500 list of supercomputers, the [http://www.green500.org/ Green500] list ranks systems by their power efficiency, measured in [http://www.webopedia.com/TERM/F/FLOPS.html FLOPS] per watt.</p>
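<p>The FLOPS-per-watt metric is a simple ratio, so a short sketch makes the unit handling explicit. It assumes sustained performance is given in TFLOPS and power in kW (an assumption about the input units) and ranks systems from most to least efficient in the spirit of the Green500. The system names and numbers in the demo are hypothetical, not real list entries.</p>

```python
from typing import Dict, List, Tuple


def gflops_per_watt(rmax_tflops: float, power_kw: float) -> float:
    """Energy efficiency in GFLOPS per watt.

    rmax_tflops: sustained benchmark performance in TFLOPS
    power_kw:    power drawn while running the benchmark, in kW
    """
    if power_kw <= 0:
        raise ValueError("power must be positive")
    gflops = rmax_tflops * 1_000.0  # 1 TFLOPS = 1000 GFLOPS
    watts = power_kw * 1_000.0      # 1 kW = 1000 W
    return gflops / watts


def rank_by_efficiency(systems: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Order systems from most to least efficient, Green500-style."""
    scored = [(name, gflops_per_watt(r, p)) for name, (r, p) in systems.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical systems: (Rmax in TFLOPS, power in kW).
    demo = {"system_a": (10_000.0, 5_000.0), "system_b": (2_000.0, 500.0)}
    for name, eff in rank_by_efficiency(demo):
        print(f"{name}: {eff:.2f} GFLOPS/W")
```

<p>The smaller hypothetical machine ranks first here (4.00 versus 2.00 GFLOPS/W). That is the point of the metric: it rewards efficiency, not raw speed.</p>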

*'''Heat management:'''

<p>As mentioned in the previous paragraph, today’s supercomputers consume large amounts of power, and a major share of it is converted to heat. This poses significant heat management issues for designers, and the thermal design of a supercomputer is far more complex than that of a traditional home computer. These systems can be air cooled like Blue Gene<ref>http://www-03.ibm.com/ibm/history/ibm100/us/en/icons/bluegene/</ref>, liquid cooled like the Cray-2<ref>http://www.craysupercomputers.com/cray2.htm</ref>, or cooled by a combination of both like System X<ref>http://www-03.ibm.com/systems/x/</ref>. Consistent efforts are being made to improve heat management techniques and to devise better metrics for determining the power efficiency of such systems<ref>http://en.wikipedia.org/wiki/Supercomputer#Energy_usage_and_heat_management</ref>.</p>

*'''Cost:'''

<p>The cost of a typical supercomputer usually runs to several hundred thousand dollars, and some of the fastest machines cost many millions of dollars. Beyond the installation cost, which includes the supporting infrastructure for heat management and electrical supply, these systems have very high maintenance costs because of their huge power consumption and the frequent failures of individual processing elements.</p>

== Performance evaluation ==

<p>As supercomputers become more complex and powerful, judging the performance of a particular machine from its specifications alone is tricky and likely to produce misleading results. Benchmarks are dedicated programs designed to mimic a particular type of workload on a component or system, so that different supercomputers can be compared on the same task. Benchmarks provide a uniform framework for assessing characteristics of computer hardware such as the floating point performance of a [http://www.webopedia.com/TERM/C/CPU.html CPU].</p>

=== Benchmarks ===

==== LINPACK ====

<p>Among the various types of benchmarks available, kernel benchmarks such as [http://www.netlib.org/linpack/ LINPACK] are designed specifically to measure the performance of supercomputers. The [http://www.netlib.org/linpack/ LINPACK] benchmark is a simple program that factors and solves a large dense system of linear equations using Gaussian elimination with partial pivoting; it takes its name from the LINPACK linear algebra software package. Supercomputers are compared by the rate they achieve in [http://www.webopedia.com/TERM/F/FLOPS.html FLOPS] (floating point operations per second). The benchmark is not limited to supercomputers: it can also be used to benchmark a typical desktop computer.</p>
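<p>The core of the LINPACK measurement can be sketched in a few lines: solve a random dense system by Gaussian elimination with partial pivoting, time the solve, and divide an operation count by the elapsed time. This is a minimal pure-Python sketch, not the LINPACK or HPL code. It assumes the operation count 2/3&nbsp;n<sup>3</sup> + 2&nbsp;n<sup>2</sup> commonly quoted for the classic LINPACK solve, and it picks the right-hand side so that the exact solution is all ones, which makes the numerical error easy to check. The function names are hypothetical.</p>

```python
import random
import time
from typing import List, Tuple


def solve_partial_pivoting(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies; the caller's data is untouched
    b = b[:]
    for k in range(n):
        # Partial pivoting: bring the row with the largest |a[i][k]| (i >= k) to row k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate the entries below the pivot.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i] - sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / a[i][i]
    return x


def flop_count(n: int) -> float:
    """Operation count commonly quoted for the classic LINPACK solve."""
    return (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2


def linpack_style_run(n: int, seed: int = 0) -> Tuple[float, float]:
    """Time one solve of a random dense system; return (MFLOPS, max error)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [sum(row) for row in a]  # makes the exact solution a vector of ones
    start = time.perf_counter()
    x = solve_partial_pivoting(a, b)
    elapsed = max(time.perf_counter() - start, 1e-12)
    max_err = max(abs(xi - 1.0) for xi in x)
    return flop_count(n) / elapsed / 1e6, max_err


if __name__ == "__main__":
    mflops, err = linpack_style_run(200)
    print(f"n=200: {mflops:.1f} MFLOPS (pure Python), max error {err:.2e}")
```

<p>Pure Python will report a tiny rate. The point is the method: a fixed operation count divided by measured time, with an accuracy check so that a fast but wrong answer does not count.</p>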

[[Image:super9.png|thumb|center|upright|800px|<b>The 10 fastest supercomputers as of 2013</b>]]

<p>New benchmarks were introduced around the November 2013 update of the TOP500 list. LINPACK is becoming obsolete because it measures the speed and efficiency of dense linear equation solving and does not represent computations with different characteristics well. For example, many calculations based on differential equations require high bandwidth, low latency and access to data in irregular patterns. As a consequence, one of the original authors of LINPACK co-developed a new benchmark called High Performance Conjugate Gradient (HPCG)<ref>http://www.sandia.gov/~maherou/docs/HPCG-Benchmark.pdf</ref>, whose data access patterns and computations relate more closely to contemporary applications. Moving to this new benchmark should help rate computers, and guide their design, in a direction that improves the performance of real applications rather than fueling a blind race for the top spot on the TOP500.</p>

==== High Performance Conjugate Gradients (HPCG) ====

<p>To address supercomputing performance in practical environments, the HPCG benchmark was developed with computational and data access patterns designed to match those of commonly used applications in the high performance computing industry. Rather than measuring how well a single kernel scales, HPCG aims to measure the collective performance of these operations in a scaled environment.<ref>http://hpcg-benchmark.org</ref></p>

<p>A key limitation of LINPACK is that it cannot measure performance under irregular data access patterns. Such patterns are common in graph algorithms and account for much of the workload processed by supercomputers. HPCG uses an algorithm composed of many irregular data access operations and multiple levels of recursion, of the kind used in high performance computing environments.</p>
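<p>The irregular access HPCG stresses comes from sparse matrix operations inside the conjugate gradient method. The sketch below runs unpreconditioned CG on a small sparse matrix stored in CSR (compressed sparse row) form. The gather <code>x[cols[k]]</code> in the matrix-vector product is the indirect, cache-unfriendly access that dense LINPACK never exercises. The real HPCG uses a 3D 27-point stencil problem and a multigrid preconditioner, which recurses over coarser grids. This sketch substitutes a simple 1D tridiagonal matrix and omits the preconditioner, so treat it as an illustration of the kernel types rather than of HPCG itself. All names are hypothetical.</p>

```python
from typing import List, Tuple

CSR = Tuple[List[float], List[int], List[int]]  # (values, column indices, row pointers)


def laplacian_1d_csr(n: int) -> CSR:
    """Build the n x n tridiagonal matrix tridiag(-1, 2, -1) in CSR form."""
    vals: List[float] = []
    cols: List[int] = []
    rowptr = [0]
    for i in range(n):
        for j, v in ((i - 1, -1.0), (i, 2.0), (i + 1, -1.0)):
            if 0 <= j < n:
                vals.append(v)
                cols.append(j)
        rowptr.append(len(vals))
    return vals, cols, rowptr


def spmv(a: CSR, x: List[float]) -> List[float]:
    """Sparse matrix-vector product y = A x."""
    vals, cols, rowptr = a
    # x[cols[k]] is an indirect (gather) access: the irregular pattern HPCG stresses.
    return [sum(vals[k] * x[cols[k]] for k in range(rowptr[i], rowptr[i + 1]))
            for i in range(len(rowptr) - 1)]


def dot(u: List[float], v: List[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def conjugate_gradient(a: CSR, b: List[float], tol: float = 1e-10,
                       max_iter: int = 1000) -> Tuple[List[float], int]:
    """Unpreconditioned CG for a symmetric positive definite A; returns (x, iterations)."""
    x = [0.0] * len(b)
    r = b[:]  # residual b - A x for the initial guess x = 0
    p = r[:]
    rs_old = dot(r, r)
    for it in range(max_iter):
        if rs_old ** 0.5 < tol:
            return x, it
        ap = spmv(a, p)
        alpha = rs_old / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
    return x, max_iter


if __name__ == "__main__":
    n = 50
    a = laplacian_1d_csr(n)
    b = spmv(a, [1.0] * n)  # exact solution is a vector of ones
    x, iters = conjugate_gradient(a, b)
    print(iters, max(abs(xi - 1.0) for xi in x))
```

<p>Each CG iteration is dominated by one sparse matrix-vector product and a few dot products, all of which move much more data than they compute on. This is why HPCG results are typically a small fraction of LINPACK results on the same machine.</p>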

==== STREAM ====

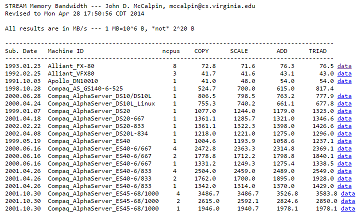

<p>Unlike the LINPACK benchmark, which assesses processing performance, the STREAM benchmark measures sustainable memory bandwidth and the corresponding computation rate of simple vector kernels. STREAM uses datasets larger than the system's caches, a situation especially common in applications that work on large vector arrays<ref>http://www.cs.virginia.edu/stream</ref>. Because processors can compute far faster than memory can supply data, raw computational speed matters little in STREAM; time spent waiting on memory shows up as CPU idle time.</p>

[[File:StreamMemoryBanwidth_4-28-14.png|frame|left|Stream Memory Bandwidth - 2014 - Top 20 Listings]]

<p>STREAM tests memory bandwidth with four kernels, Copy, Scale, Add (also referred to as Sum), and Triad, in order of increasing computational complexity. Together these kernels mimic real-world applications whose data is stored in memory that is not quickly accessible. Memory bandwidth is reported in GB/s, and it can be divided by the number of cores to estimate the bandwidth available per CPU core.<ref>http://www.admin-magazine.com/HPC/Articles/Finding-Memory-Bottlenecks-with-Stream</ref></p>
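<p>The four kernels and the way STREAM turns time into bandwidth can be sketched as follows. The kernel bodies follow STREAM's definitions (Copy <code>c = a</code>, Scale <code>b = q*c</code>, Add <code>c = a + b</code>, Triad <code>a = b + q*c</code>). The byte counts follow STREAM's convention for 8-byte doubles: two arrays touched per element for Copy and Scale, three for Add and Triad. Python lists do not store raw doubles contiguously and interpreter overhead dominates, so the rates this prints measure the interpreter, not the memory system. The real benchmark is C or Fortran code run on arrays much larger than the caches. The <code>cores</code> value in the demo is a hypothetical placeholder.</p>

```python
import time
from typing import Dict, List, Tuple

# Bytes STREAM counts per loop iteration for 8-byte doubles:
# Copy and Scale read one array and write one; Add and Triad read two and write one.
BYTES_PER_ITERATION = {"Copy": 16, "Scale": 16, "Add": 24, "Triad": 24}


def run_stream(n: int = 1_000_000,
               q: float = 3.0) -> Tuple[Dict[str, float], Tuple[List[float], ...]]:
    """Run each kernel once over arrays of length n; return (GB/s per kernel, final arrays)."""
    a, b, c = [1.0] * n, [2.0] * n, [0.0] * n
    seconds: Dict[str, float] = {}

    start = time.perf_counter()
    for i in range(n):  # Copy:  c = a
        c[i] = a[i]
    seconds["Copy"] = time.perf_counter() - start

    start = time.perf_counter()
    for i in range(n):  # Scale: b = q * c
        b[i] = q * c[i]
    seconds["Scale"] = time.perf_counter() - start

    start = time.perf_counter()
    for i in range(n):  # Add:   c = a + b
        c[i] = a[i] + b[i]
    seconds["Add"] = time.perf_counter() - start

    start = time.perf_counter()
    for i in range(n):  # Triad: a = b + q * c
        a[i] = b[i] + q * c[i]
    seconds["Triad"] = time.perf_counter() - start

    gb_per_s = {k: BYTES_PER_ITERATION[k] * n / max(t, 1e-12) / 1e9 for k, t in seconds.items()}
    return gb_per_s, (a, b, c)


if __name__ == "__main__":
    rates, _ = run_stream()
    cores = 1  # hypothetical: number of cores sharing the memory system
    for kernel, rate in rates.items():
        print(f"{kernel:5s} {rate:8.3f} GB/s  ({rate / cores:.3f} GB/s per core)")
```

<p>After one pass, every element satisfies a = 15, b = 3, c = 4. STREAM itself validates results in a similar way, by checking that the final array values match what the kernels should have produced.</p>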

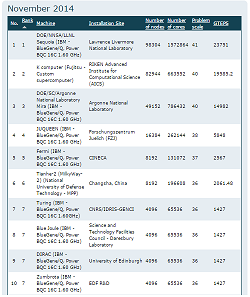

==== Graph500 ====

<p>The Graph500 organization proposes an alternative to the standard TOP500 evaluation of supercomputing performance by introducing a new metric, traversed edges per second (TEPS)<ref>http://www.graph500.org</ref>. The motivation for Graph500 is to address data-intensive applications, which are ill-suited to platforms designed for 3D physics simulations; this is a commonly recognized blind spot of the LINPACK benchmark.</p>

[[File:Graph500_11-2014_listings.png|frame|middle|800px|Graph 500 - November 2014 - Top 10 Listings]]

<p>The Graph500 benchmark starts by generating an edge list for a large graph whose size is defined by the number of vertices and the edge factor (the ratio of edges to vertices). The edge list, a list of tuples each containing the endpoints of an edge (and, optionally, an edge weight), is then used to construct the graph data structure. Starting from a randomly chosen search key (root vertex), the benchmark times a breadth-first search of the graph and reports performance in TEPS: the number of traversed edges divided by the search time.</p>
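<p>The three steps described above (edge list generation, graph construction, and a timed breadth-first search reported in TEPS) can be sketched as follows. A uniform random generator stands in for Graph500's Kronecker generator, and "traversed edges" is taken to mean the input edges inside the component reached from the root. Both are simplifying assumptions. The official benchmark also repeats the search from many keys and validates the resulting BFS tree. All function names are hypothetical.</p>

```python
import random
import time
from collections import deque
from typing import List, Tuple

Edge = Tuple[int, int]


def make_edge_list(num_vertices: int, edge_factor: int, seed: int = 1) -> List[Edge]:
    """Hypothetical stand-in for Graph500's Kronecker generator: uniform random edges."""
    rng = random.Random(seed)
    m = num_vertices * edge_factor  # edge factor = edges / vertices
    return [(rng.randrange(num_vertices), rng.randrange(num_vertices)) for _ in range(m)]


def build_adjacency(num_vertices: int, edges: List[Edge]) -> List[List[int]]:
    """Construct an undirected adjacency list from the edge tuples."""
    adj: List[List[int]] = [[] for _ in range(num_vertices)]
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return adj


def bfs_parents(adj: List[List[int]], root: int) -> List[int]:
    """Breadth-first search; parent[v] == -1 means v was not reached."""
    parent = [-1] * len(adj)
    parent[root] = root
    frontier = deque([root])
    while frontier:
        u = frontier.popleft()
        for v in adj[u]:
            if parent[v] == -1:
                parent[v] = u
                frontier.append(v)
    return parent


def measure_teps(edges: List[Edge], adj: List[List[int]], root: int) -> Tuple[float, int]:
    """Time one BFS and return (TEPS, traversed edge count)."""
    start = time.perf_counter()
    parent = bfs_parents(adj, root)
    elapsed = max(time.perf_counter() - start, 1e-12)
    # Undirected graph: if u was reached, so was v, so this counts edges in the root's component.
    traversed = sum(1 for u, v in edges if parent[u] != -1)
    return traversed / elapsed, traversed


if __name__ == "__main__":
    n = 1 << 12
    edges = make_edge_list(n, edge_factor=16)
    adj = build_adjacency(n, edges)
    root = random.Random(7).randrange(n)  # randomly chosen search key
    rate, m = measure_teps(edges, adj, root)
    print(f"traversed {m} edges at {rate:.3e} TEPS")
```

<p>Each step of the search jumps to whatever vertex a neighbour list points at, so performance depends on memory latency and irregular access rather than floating point speed.</p>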

== Supercomputer Architecture ==

The architecture of parallel systems<ref>https://computing.llnl.gov/tutorials/parallel_comp/#Whatis</ref> can be classified by the hardware level at which they support parallelism. This is usually determined by how the processing elements are connected to each other, the level at which they communicate, and the memory resources they share. In the TOP500's 2014 listings, the two primary supercomputing architectures are MPP and cluster systems: clustered architectures account for nearly 86% of the supercomputers, while the remaining 14% have MPP architectures.

<p>The processing elements in a distributed system are connected by a network. Unlike symmetric multiprocessors, they do not share a common bus, and each has its own memory. Since there is no shared memory, processing elements communicate by passing messages. Distributed computing systems exhibit high scalability because there is no bus contention. Supercomputers in this category can be classified into the following two domains<ref>http://en.wikipedia.org/wiki/Distributed_computing</ref>.</p>

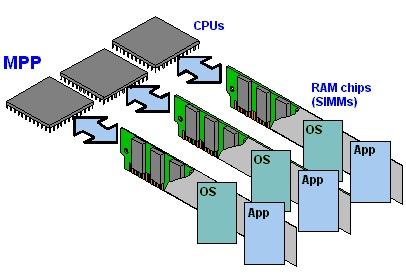

=== Massively Parallel Processing (MPP) Architecture ===

[[Image:mpp2.jpg|left|thumb|upleft|1000px|<b>A Generic MPP System</b> http://encyclopedia2.thefreedictionary.com/MPP]]

<p>[http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html Massively Parallel Processing] or MPP supercomputers are made up of hundreds of computing nodes that process data in a coordinated fashion. Each node of an MPP generally has its own memory and operating system, and may itself contain multiple processors and/or multiple cores. In an MPP architecture, the interconnect is integrated into each node's memory bus.</p>

<p>Processing elements in a Massively Parallel Processing [http://en.wikipedia.org/wiki/Massively_parallel_(computing) (MPP)] system have their own memory modules and communication circuitry, and they are interconnected by a network. Common topologies include meshes, hypercubes, rings and trees. These systems differ from clusters, the other class of distributed computers, in their communication scheme: MPPs have a specialized interconnect network, whereas clustered systems use off-the-shelf communication hardware.
</p>

<p>The main advantage of [http://en.wikipedia.org/wiki/Massively_parallel_(computing) MPPs] is their ability to exploit temporal locality and alleviate routing issues when a large number of processing elements are involved. These systems are used extensively in scientific simulations where a large problem can be broken into parallel segments; the discrete evaluation of differential equations is one such example. Another advantage of MPP architectures is node scalability: they typically consist of smaller nodes connected by a high-speed interconnection network, which allows the processor count to reach the hundreds of thousands.</p>
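<p>Breaking a discretized differential equation into parallel segments usually means domain decomposition with "ghost" (halo) cells. Each node owns a contiguous piece of the grid plus one copy of each neighbour's edge value, and those copies are refreshed by messages before every step. The sketch below applies this to a Jacobi iteration for the 1D Laplace equation (u'' = 0 with fixed end values). Plain list copies stand in for the messages an MPP would send over its interconnect, and the evenly divisible grid is a simplifying assumption. Because each update uses the same operands in the same order, the decomposed run gives exactly the same numbers as the serial one.</p>

```python
from typing import List


def jacobi_step(u: List[float]) -> List[float]:
    """One Jacobi sweep for u'' = 0: endpoints fixed, interior = mean of neighbours."""
    return [u[0]] + [0.5 * (u[i - 1] + u[i + 1]) for i in range(1, len(u) - 1)] + [u[-1]]


def jacobi_serial(u: List[float], iters: int) -> List[float]:
    for _ in range(iters):
        u = jacobi_step(u)
    return u


def jacobi_decomposed(u: List[float], iters: int, parts: int) -> List[float]:
    """Same computation with the interior split across `parts` simulated nodes.

    Each node keeps a private array [left ghost, owned points..., right ghost].
    """
    interior = len(u) - 2
    if parts <= 0 or interior % parts:
        raise ValueError("this sketch assumes the interior divides evenly")
    size = interior // parts
    nodes = [u[p * size: p * size + size + 2] for p in range(parts)]
    for _ in range(iters):
        # Halo exchange: each node "sends" its edge value into its neighbour's ghost cell.
        for p in range(parts - 1):
            nodes[p][-1] = nodes[p + 1][1]
            nodes[p + 1][0] = nodes[p][-2]
        # Purely local update; ghost cells act like boundary values.
        nodes = [jacobi_step(local) for local in nodes]
    # Gather the owned points back into one global array.
    result = [u[0]]
    for local in nodes:
        result.extend(local[1:-1])
    result.append(u[-1])
    return result


if __name__ == "__main__":
    grid = [0.0] * 9 + [1.0]  # 8 interior points, boundary values 0 and 1
    print(jacobi_decomposed(grid, 200, 4) == jacobi_serial(grid, 200))
```

<p>The script prints <code>True</code>. Only the ghost cells cross node boundaries each step, so communication grows with the number of subdomain edges while computation grows with subdomain size. This favourable ratio is what lets such problems scale on MPPs.</p>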

<p>Disadvantages of the [http://en.wikipedia.org/wiki/Massively_parallel_(computing) MPP] architecture include the absence of a global shared memory, which slows inter-processor exchange because there is no common place to store data used by different processors. Second, because each processor can use only its own local memory and storage, these can become bottlenecks. Full use of system resources may not be possible, since each subsystem works independently. MPP systems are also costly to build, as each subsystem requires its own memory, storage and CPU. Finally, MPPs pose programming challenges: the programmer must restructure an application so that processors communicate through a messaging API, and performance suffers from the messaging overhead, which can translate to thousands of additional instructions for each processor.</p>
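<p>What "restructuring an application to use a messaging API" means can be sketched with a reduction. Each simulated rank sums only its own slice of the data and explicitly sends the result to rank 0, which performs a blocking receive for every other rank. In MPI this would typically be a single <code>MPI_Reduce</code> call, or explicit <code>MPI_Send</code>/<code>MPI_Recv</code> pairs. Here, Python threads and a <code>queue.Queue</code> stand in for processes and the interconnect. The threads actually share one address space, so slicing the data only imitates private node memory. The function name is hypothetical.</p>

```python
import queue
import threading
from typing import Dict, List


def message_passing_sum(data: List[int], num_ranks: int) -> int:
    """Each simulated rank sums a private slice and sends the result to rank 0."""
    if num_ranks <= 0:
        raise ValueError("need at least one rank")
    inbox: queue.Queue = queue.Queue()  # rank 0's receive queue
    chunk = (len(data) + num_ranks - 1) // num_ranks
    result: Dict[str, int] = {}

    def rank_main(rank: int) -> None:
        local = data[rank * chunk:(rank + 1) * chunk]  # slice copy stands in for local memory
        partial = sum(local)
        if rank == 0:
            total = partial
            for _ in range(num_ranks - 1):
                _sender, value = inbox.get()  # blocking receive
                total += value
            result["total"] = total
        else:
            inbox.put((rank, partial))  # send (sender id, payload)

    threads = [threading.Thread(target=rank_main, args=(r,)) for r in range(num_ranks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result["total"]


if __name__ == "__main__":
    print(message_passing_sum(list(range(101)), 4))
```

<p>Even this tiny reduction needs explicit sends, receives and a designated root. On a real MPP each of those messages also pays interconnect latency, which is the overhead described above.</p>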

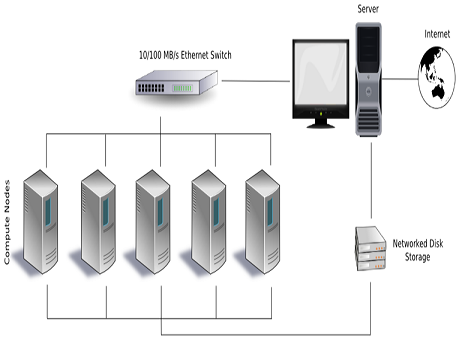

=== Clustered Architecture ===

[[Image:3a.png|thumb|right|center|upright|600px|<b>A Generic Cluster System</b> http://en.wikipedia.org/wiki/File:Beowulf.png]]

<p>Clusters are groups of computers that are connected through a network and appear to the outside world as a single system. Processing in this architecture relies on load balancing and resource sharing, which happen entirely in the background. Pioneered commercially by Digital Equipment Corporation<ref>http://en.wikipedia.org/wiki/Digital_Equipment_Corporation</ref> in the 1980s, clusters make up the largest share of supercomputers available today.</p>

<p>TOP500.org<ref>http://www.TOP500.org TOP500.org</ref> data as of November 2013 shows that Cluster computing makes up the largest subset of supercomputers at 84.6 percent.</p>

<p>In a cluster architecture, computers are harnessed together and work independently of the application interacting with them; the application or user running on the architecture sees them as a single resource<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/1b_dj#Supercomputers_Architecture</ref>.</p>

<p>A cluster architecture has three main components: interconnect technology, storage and memory.</p>

<p>Interconnect technology is responsible for coordinating the work of the nodes and for carrying out failover procedures when a subsystem fails. It makes the cluster appear to be a monolithic system and is also the basis for system management tools. It is implemented mainly with networks or with dedicated buses designed to make the interconnect reliable.</p>

<p>Storage in cluster architecture can be shared or distributed. The picture on the right shows an example of shared storage architecture for clusters. As you can see in here all the computers use the same storage. One of the benefit of using shared storage is it has less overhead of syncing different storages. Additionally, shared storage makes sense if the applications running on it have large shared databases. In distributed storage each node in cluster has its own storage. Information sharing between nodes is carried out using message passing on network. Additional work is needed to keep all the storage in sync in case of failover.</p> | <p>Storage in cluster architecture can be shared or distributed. The picture on the right shows an example of shared storage architecture for clusters. As you can see in here all the computers use the same storage. One of the benefit of using shared storage is it has less overhead of syncing different storages. Additionally, shared storage makes sense if the applications running on it have large shared databases. In distributed storage each node in cluster has its own storage. Information sharing between nodes is carried out using message passing on network. Additional work is needed to keep all the storage in sync in case of failover.</p> | ||

<p>Lastly memory in clusters also comes in shared or distributed flavors. Most commonly in clusters distributed memory is used but in certain cases shared memory can also be used depending on the final use of the system.</p> | <p>Lastly memory in clusters also comes in shared or distributed flavors. Most commonly in clusters distributed memory is used but in certain cases shared memory can also be used depending on the final use of the system.</p> | ||

<p>Some of the benefits of using cluster architecture are to produce higher performance, higher availability, greater scalability and lower operating costs. Cluster architectures are famous for providing continuous and uninterrupted service. This is achieved using redundancy<ref>http://en.wikipedia.org/wiki/Computer_cluster</ref></p> | |||

<p>Some of the benefits of using cluster architecture are to produce higher performance, higher availability, greater scalability and lower operating costs. Cluster architectures are famous for providing continuous and uninterrupted service. This is achieved using redundancy<ref>http://en.wikipedia.org/wiki/Computer_cluster</ref>. In a clustered architecture, the interconnection network connections directly to an I/O device. Since nodes have their own I/O controllers and memory storage, processors are not as close to each other which, they tend to have higher messaging overheads than a MPP architecture.</p> | |||

==== Other Cluster Types ==== | ==== Other Cluster Types ==== | ||

<p>As mention in the previous section, a cluster is parallel computer system consisting of independent nodes each of which is capable of individual operation.</p> | <p>As mention in the previous section, a cluster is parallel computer system consisting of independent nodes each of which is capable of individual operation.</p> | ||

<p>A commodity cluster is one in which the processing elements and network(s) are commercially available for procurement and application. A proprietary hardware is not essential. There are two broad classes of commodity clusters – [http://now.cs.berkeley.edu/ cluster-NOW] (network of workstations) and constellation systems. These systems are distinguished by the level of parallelism each one of them exhibit. The first level of parallelism is the number of nodes connected by a global communication backbone. The second level is the number of processing element in each node, usually configured as an SMP<ref>http://escholarship.org/uc/item/95d2c8xn#page-6</ref>.</p> | <p>A commodity cluster is one in which the processing elements and network(s) are commercially available for procurement and application. A proprietary hardware is not essential. There are two broad classes of commodity clusters – [http://now.cs.berkeley.edu/ cluster-NOW] (network of workstations) and constellation systems. These systems are distinguished by the level of parallelism each one of them exhibit. The first level of parallelism is the number of nodes connected by a global communication backbone. The second level is the number of processing element in each node, usually configured as an SMP<ref>http://escholarship.org/uc/item/95d2c8xn#page-6</ref>.</p> | ||

<p>If the number nodes in the network exceed the number of processing elements in each node, the dominant mode of parallelism is at the first level and such cluster are called cluster-NOW<ref>http://now.cs.berkeley.edu/</ref>. In constellation systems, the second level parallelism is dominant as the number of processing elements in each node is more than the number of nodes in the network.</p> | <p>If the number nodes in the network exceed the number of processing elements in each node, the dominant mode of parallelism is at the first level and such cluster are called cluster-NOW<ref>http://now.cs.berkeley.edu/</ref>. In constellation systems, the second level parallelism is dominant as the number of processing elements in each node is more than the number of nodes in the network.</p> | ||

<p>The difference also lies in the manner in which such systems are programmed. A [http://now.cs.berkeley.edu/ cluster-NOW] system is likely to be programmed exclusively with [http://en.wikipedia.org/wiki/Message_Passing_Interface MPI] where as a constellation is likely to be programmed, at least in part, with [https://computing.llnl.gov/tutorials/openMP/#Introduction OpenMP] using a threaded model.</p> | <p>The difference also lies in the manner in which such systems are programmed. A [http://now.cs.berkeley.edu/ cluster-NOW] system is likely to be programmed exclusively with [http://en.wikipedia.org/wiki/Message_Passing_Interface MPI] where as a constellation is likely to be programmed, at least in part, with [https://computing.llnl.gov/tutorials/openMP/#Introduction OpenMP] using a threaded model.</p> | ||

== Summary == | == Summary == | ||

<p>The supercomputer landscape of today is a very heterogeneous mix of varying architectures and infrastructures and this had made segregation of supercomputer into well defined subgroups rather arbitrary; there seem a lot many overlapping or hybrid examples. Supercomputing has found uses in a wide horizon of domains and their potential uses continue to increase at an exciting rate. High performance computing has made large scale simulations and calculations possible which has led to successful execution of some of humanities’ most ambitious projects and this has had profound impact on our well being.</p> | <p>The supercomputer landscape of today is a very heterogeneous mix of varying architectures and infrastructures and this had made segregation of supercomputer into well defined subgroups rather arbitrary; there seem a lot many overlapping or hybrid examples. Supercomputing has found uses in a wide horizon of domains and their potential uses continue to increase at an exciting rate. High performance computing has made large scale simulations and calculations possible which has led to successful execution of some of humanities’ most ambitious projects and this has had profound impact on our well being.</p> | ||

<p>Clustered computers have clearly taken over their MPP counterparts in terms of performance as well market share. This is primarily due to their cost effectiveness and the ability to scale well in terms of the number of processing elements.</p> | <p>Clustered computers have clearly taken over their MPP counterparts in terms of performance as well market share. This is primarily due to their cost effectiveness and the ability to scale well in terms of the number of processing elements.</p> | ||

<p>The future of supercomputing lies in its entwining with cloud based computing and development in the cloud infrastructure and high speed communication networks will lead to a rapid rise in cloud use especially by small to medium scale institutions.According to the current press release by IBM researchers supercomputer will have more optical components to reduce the power consumption<ref>http://www.technologyreview.com/view/415765/the-future-of-supercomputers-is-optical/</ref>.</p> | <p>The future of supercomputing lies in its entwining with cloud based computing and development in the cloud infrastructure and high speed communication networks will lead to a rapid rise in cloud use especially by small to medium scale institutions.According to the current press release by IBM researchers supercomputer will have more optical components to reduce the power consumption<ref>http://www.technologyreview.com/view/415765/the-future-of-supercomputers-is-optical/</ref>.</p> | ||

== References == | == References == | ||

<references /> | <references /> | ||

Latest revision as of 01:47, 3 February 2015

Introduction

A supercomputer is a computer at the leading edge of processing capacity, designed specifically for fast calculation. Such machines first appeared in the early 1960s. In the 1970s, systems comprising a few processors were used; this grew to thousands of processors during the 1990s, and by the end of the 20th century massively parallel supercomputers with tens of thousands of processors and extremely high processing speeds had come into prominence<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. Supercomputers play an important role in computational science and in cryptanalysis. They are also used in quantum mechanics, weather forecasting, climate research, and oil and gas exploration. Earlier supercomputer architectures exploited parallelism at the processor level, as in vector processing, and were followed by multiprocessor systems with shared memory. As demand grew for more complex and faster computations, shared-memory multiprocessors could no longer supply enough processing capability, which paved the way for hybrid structures such as clusters of multi-node mesh networks in which each node serves as a multiprocessing element<ref>http://en.wikipedia.org/wiki/Supercomputer#Performance_measurement</ref>.

Supercomputing Applications

Supercomputers are used by many organizations in both the private and public sectors. In the public sector, the U.S. federal government uses supercomputing resources for medical research, weather simulation, and space technology development. The private sector has also seen growing use of supercomputers, which are now common in academic and financial institutions as well as in the telecommunications and manufacturing industries. Some applications in use today are described below:

Supercomputing capabilities allow scientists to run simulations, based on statistical modelling of large data sets, of phenomena that would otherwise be impossible to study in real time. For instance, the Human Brain Project is a research project that aims to simulate brain activity in order to foster research into neurology and brain development. According to some sources, Japan's K computer (ranked 4th on the 2014 TOP500 lists, at 10.5 petaflops) took 40 minutes to simulate just one second of brain activity<ref>http://www.livescience.com/42561-supercomputer-models-brain-activity.html</ref>.

Supercomputing is also prominent in weather forecasting, where meteorological models are becoming increasingly data intensive<ref>http://www.cray.com/blog/the-critical-role-of-supercomputers-in-weather-forecasting</ref>. A typical workload is composed of data assimilation, deterministic forecast models, and ensemble forecast models. In addition, specialized models may be applied to areas such as extreme events, air quality, ocean waves, and road conditions. Advances in supercomputing make quicker and more accurate responses to sudden changes in the weather possible<ref>http://www.cray.com/blog/the-critical-role-of-supercomputers-in-weather-forecasting</ref>.
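Ensemble forecasting runs the same model many times from slightly perturbed initial conditions and reports the spread of outcomes, not a single answer. The sketch below is a toy illustration only, assuming the three-variable Lorenz-63 system as a stand-in for an atmospheric model, a forward-Euler integrator, and an arbitrary perturbation size and member count; the function names are hypothetical. Operational ensembles are vastly larger, which is why they need supercomputers.

```python
import random
import statistics
from typing import Tuple

State = Tuple[float, float, float]


def lorenz_step(s: State, dt: float = 0.01, sigma: float = 10.0,
                rho: float = 28.0, beta: float = 8.0 / 3.0) -> State:
    """Advance the Lorenz-63 toy model by one forward-Euler step."""
    x, y, z = s
    return (x + dt * sigma * (y - x),
            y + dt * (x * (rho - z) - y),
            z + dt * (x * y - beta * z))


def ensemble_forecast(analysis: State, members: int = 20, perturbation: float = 1e-3,
                      steps: int = 500, seed: int = 0) -> Tuple[float, float]:
    """Run `members` forecasts from perturbed copies of one analysis state.

    Returns the ensemble mean and spread (standard deviation) of x."""
    rng = random.Random(seed)
    finals = []
    for _member in range(members):
        s = tuple(v + rng.gauss(0.0, perturbation) for v in analysis)
        for _step in range(steps):
            s = lorenz_step(s)
        finals.append(s[0])
    return statistics.mean(finals), statistics.stdev(finals)


mean_x, spread_x = ensemble_forecast((1.0, 1.0, 1.0))
print(f"ensemble mean x = {mean_x:.3f}, spread = {spread_x:.3f}")
```

A large spread signals low confidence in the forecast; with zero perturbation every member is identical and the spread collapses to zero.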

On-demand supercomputing and the cloud

A current trend in the cloud computing<ref>http://en.wikipedia.org/wiki/Cloud_computing</ref> industry is making supercomputing applications widely accessible. Large analytical calculations on supercomputers have become available to smaller companies through the cloud, and this is expected to change the dynamics of process manufacturing in these small-scale industries. On-demand supercomputing will also enable companies and enterprises to significantly decrease the development and evaluation time of their prototypes, improving their finances while also leading to quicker product deployments in their respective markets<ref>http://arstechnica.com/information-technology/2013/11/18-hours-33k-and-156314-cores-amazon-cloud-hpc-hits-a-petaflop/</ref>.

The OpenPOWER Foundation is a prominent example of this growth trend. It is a consortium initiated by IBM, with Google, Tyan, Nvidia and Mellanox as fellow founding members. IBM is opening up its POWER Architecture technology under a liberal license, which will enable vendors to build and configure their own customized servers and help them design networking and storage hardware for cloud computing and data centers<ref>http://en.wikipedia.org/wiki/OpenPOWER_Foundation</ref>.

Although the cloud has become a viable platform for medium-scale applications, interconnect performance and bandwidth scalability are still major bottlenecks for large-scale, tightly coupled high performance computing applications. Widespread interest in, and migration to, optical networks offers some hope, but it is unlikely that the cloud will ever replace supercomputers<ref>http://www.computer.org/portal/web/computingnow/archive/september2012</ref><ref>http://gigaom.com/2011/11/14/how-the-cloud-is-reshaping-supercomputers/</ref>.

Characteristics of supercomputers

The definition of a supercomputer established in the previous sections provides insight into the applications and features of such computing systems. Certain features, or combinations of features, are unique to supercomputers; this section discusses them<ref>http://royal.pingdom.com/2012/11/13/new-top-supercomputer-dumps-cores-and-increases-power-efficiency/</ref>.

Taxonomy

A supercomputer is a collection of networked nodes, each containing several CPUs (central processing units). An individual CPU contains multiple cores, each able to handle its own thread of execution. Supercomputers process data in parallel by having multiple processors run different sections of the same program concurrently, which shortens run times, and multi-core processors further allow multiple tasks to be handled simultaneously. The largest supercomputers have hundreds of thousands of processors<ref>https://computing.llnl.gov/tutorials/parallel_comp</ref> and reach speeds in the petascale range.
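The node/CPU/core hierarchy can be made concrete by sketching how a loop of independent work items might be split across every core. The machine shape (2 nodes, 2 CPUs per node, 4 cores per CPU) and the helper names `block_range`, `core_coordinates` and `decompose` are hypothetical; the block distribution shown is one common choice, not the only one.

```python
from typing import List, Tuple


def block_range(n_items: int, n_workers: int, rank: int) -> Tuple[int, int]:
    """Half-open [start, end) range of work items owned by worker `rank`.

    The first n_items % n_workers workers get one extra item, so loads
    differ by at most one item."""
    base, extra = divmod(n_items, n_workers)
    start = rank * base + min(rank, extra)
    end = start + base + (1 if rank < extra else 0)
    return start, end


def core_coordinates(rank: int, cpus_per_node: int, cores_per_cpu: int) -> Tuple[int, int, int]:
    """Map a flat core index to (node, cpu, core) coordinates."""
    node, within_node = divmod(rank, cpus_per_node * cores_per_cpu)
    cpu, core = divmod(within_node, cores_per_cpu)
    return node, cpu, core


def decompose(n_items: int, nodes: int, cpus_per_node: int,
              cores_per_cpu: int) -> List[Tuple[Tuple[int, int, int], Tuple[int, int]]]:
    """Assign every core in the machine a contiguous block of work items."""
    total_cores = nodes * cpus_per_node * cores_per_cpu
    return [(core_coordinates(r, cpus_per_node, cores_per_cpu),
             block_range(n_items, total_cores, r))
            for r in range(total_cores)]


# Hypothetical machine: 2 nodes x 2 CPUs x 4 cores = 16 cores, 100 work items.
for coords, (start, end) in decompose(100, nodes=2, cpus_per_node=2, cores_per_cpu=4):
    print(coords, "->", start, end)
```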

Most supercomputers run some variant of a Linux distribution as their operating system, typically optimized for the hardware in the nodes. Linux is favored for its scalability and ease of configuration, which significantly simplifies deployment across hundreds of thousands of CPUs. It is also cost effective and gives administrators flexible customization options, so an installation can be tailored to the application environment<ref>http://www.unixmen.com/why-do-super-computers-use-linux</ref>.

The complex mathematical tasks demanded by the applications discussed earlier are beyond the capabilities of a single processor or a small group of processors. A large number of processing units must cooperate to complete tasks of such magnitude in a timely fashion, and this kind of massively parallel system has become an inherent feature of contemporary supercomputers. To run tasks in parallel, supercomputing nodes rely on parallel programming interfaces: message passing (for example, MPI) for communication between processes on different nodes, and shared-memory threading for work within a node. OpenMP is widely considered the standard for shared-memory parallelism; loop-level, nested, and task-level parallelism are all supported in the OpenMP 4.0 specification<ref>https://computing.llnl.gov/tutorials/parallel_comp</ref>.
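Loop-level parallelism of the kind OpenMP expresses with a directive such as `#pragma omp parallel for reduction(+:total)` can be mimicked in Python to show its structure: the iteration space is split statically among threads, each thread accumulates a private partial sum, and the partial sums are combined at the end. This is an analogy, not OpenMP itself; the function name is hypothetical, and CPython's global interpreter lock means the threads illustrate the decomposition rather than deliver a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_sum_of_squares(n: int, num_threads: int = 4) -> int:
    """Sum i*i for i in range(n) using a static, OpenMP-style loop split.

    Each thread reduces its own contiguous chunk into a private partial sum;
    the partial sums are then combined, mirroring reduction(+:total)."""
    chunk = (n + num_threads - 1) // num_threads  # iterations per thread

    def partial(tid: int) -> int:
        start = tid * chunk
        end = min(start + chunk, n)
        return sum(i * i for i in range(start, end))

    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        return sum(pool.map(partial, range(num_threads)))


print(parallel_sum_of_squares(1000))  # 332833500
```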

Design Considerations

- Processing Speed:

The common feature uniting the Human Genome Project, the Large Hadron Collider, and other challenging scientific experiments of our era is a demand for massive computing power. The unprecedented growth of our knowledge base, coupled with the IT revolution, has made processing speed one of the most critical parameters in characterizing supercomputers. The demand for processing speed is evident from the fact that the fastest supercomputer today is about a thousand times faster than the fastest supercomputer of a decade ago. As more data-intensive applications emerge, the use of supercomputers will continue to grow in fields such as meteorology, defense, and biology.
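A thousandfold improvement per decade implies a steady compound growth rate, which can be worked out directly. The sketch assumes the growth is uniform from year to year, which real machine histories only approximate; the function names are hypothetical.

```python
import math


def annual_growth_factor(total_speedup: float, years: float) -> float:
    """Constant yearly factor g such that g ** years == total_speedup."""
    return total_speedup ** (1.0 / years)


def doubling_time_years(total_speedup: float, years: float) -> float:
    """Years needed to double performance at that constant growth rate."""
    return math.log(2.0) / math.log(annual_growth_factor(total_speedup, years))


g = annual_growth_factor(1000, 10)  # 1000 ** 0.1 == 10 ** 0.3
print(f"{g:.3f}x per year, doubling every {doubling_time_years(1000, 10):.2f} years")
```

Under that assumption, top-end performance roughly doubles every year (a factor of about 1.995).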

- Power consumption:

A system comprising hundreds of thousands of processing elements demands a huge amount of electrical power. As a result, power consumption is an important factor in supercomputer design, and performance per watt is a critical metric. With the rapid increase in the use of such systems, strides are being made toward more power-efficient designs<ref>http://asmarterplanet.com/blog/2010/07/energy-efficiency-key-to-supercomputing-future.html</ref>. Alongside the TOP500 list of the fastest supercomputers, a companion Green500 list ranks systems by power efficiency, measured in FLOPS per watt.
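Performance per watt is simply sustained performance divided by power draw. The sketch below computes it for two hypothetical systems (the numbers are invented for illustration, not taken from any list) and orders them the way an efficiency ranking would. Note that TFLOPS per kW and GFLOPS per W are the same unit.

```python
from typing import Dict, List, Tuple


def gflops_per_watt(rmax_tflops: float, power_kw: float) -> float:
    """Energy efficiency in GFLOPS/W.

    1 TFLOPS / 1 kW == 1e12 / 1e3 FLOPS/W == 1 GFLOPS/W, so the ratio of
    TFLOPS to kW is already in GFLOPS/W."""
    return rmax_tflops / power_kw


def rank_by_efficiency(systems: Dict[str, Tuple[float, float]]) -> List[str]:
    """Order systems from most to least efficient."""
    return sorted(systems, key=lambda name: gflops_per_watt(*systems[name]), reverse=True)


# Hypothetical systems: name -> (sustained TFLOPS, power in kW). Invented numbers.
systems = {"system_a": (2000.0, 1000.0), "system_b": (5000.0, 4000.0)}
for name in rank_by_efficiency(systems):
    print(name, gflops_per_watt(*systems[name]), "GFLOPS/W")
```

The faster machine (system_b) ranks lower here because it needs proportionally more power for its extra performance.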

- Heat management:

As mentioned in the previous paragraph, today's supercomputers consume large amounts of power, and a major share of it is converted to heat. This poses significant heat-management problems for designers: the thermal design of a supercomputer is far more complex than that of a traditional home computer. These systems can be air cooled, like Blue Gene<ref>http://www-03.ibm.com/ibm/history/ibm100/us/en/icons/bluegene/</ref>, liquid cooled, like the Cray-2<ref>http://www.craysupercomputers.com/cray2.htm</ref>, or cooled by a combination of both, like System X<ref>http://www-03.ibm.com/systems/x/</ref>. Efforts continue to improve heat-management techniques and to develop better metrics for the power efficiency of such systems<ref>http://en.wikipedia.org/wiki/Supercomputer#Energy_usage_and_heat_management</ref>.

- Cost:

A typical supercomputer usually costs several hundred thousand dollars, and some of the fastest machines cost many millions. Beyond the installation cost, which includes the supporting infrastructure for heat management and electrical supply, these systems have very high maintenance costs because of their huge power consumption and the high failure rate of processing elements.

Performance evaluation

As supercomputers become more complex and powerful, judging the performance of a particular machine from its specifications alone is tricky and likely to produce misleading results. Benchmarks are dedicated programs designed to mimic a particular type of workload on a component or system, so that different supercomputers can be compared on the same footing. They provide a uniform framework for assessing characteristics of computer hardware, such as the floating-point performance of a CPU.

Benchmarks

LINPACK

Among the various types of benchmarks available, kernel benchmarks such as LINPACK are designed specifically to measure supercomputer performance. The LINPACK benchmark is a simple program that factors and solves a large, dense system of linear equations using Gaussian elimination with partial pivoting, and supercomputers are compared by the resulting rate in FLOPS (floating-point operations per second). LINPACK has become the standard approach to benchmarking supercomputers, but it is not limited to them: the same software can also be used to benchmark a typical user computer.
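The core of what LINPACK measures can be sketched in a few dozen lines: Gaussian elimination with partial pivoting on a dense random matrix, back substitution, and a rate computed from the operation count conventionally used for the benchmark (about 2/3·n³ + 2·n²). This pure-Python version is only illustrative; the benchmark run on TOP500 systems uses blocked, distributed, highly tuned code, and the matrix size, random entries, and function names here are assumptions.

```python
import random
import time
from typing import List


def solve_gepp(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    a = [row[:] for row in a]  # work on copies; inputs are left untouched
    b = b[:]
    for k in range(n):
        # Partial pivoting: swap in the row with the largest |a[i][k]|, i >= k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[p][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k below the pivot.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def linpack_style_gflops(n: int = 120, seed: int = 1) -> float:
    """Time one dense solve and convert it to GFLOPS using 2/3 n^3 + 2 n^2 operations."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    start = time.perf_counter()
    solve_gepp(a, b)
    elapsed = time.perf_counter() - start
    flops = 2.0 / 3.0 * n ** 3 + 2.0 * n ** 2
    return flops / elapsed / 1e9


print(f"{linpack_style_gflops():.4f} GFLOPS (pure Python, illustrative only)")
```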

New benchmarks were proposed around the November 2013 update of the TOP500 list. LINPACK is increasingly seen as unrepresentative: it measures the speed and efficiency of dense linear-equation solving, whereas many real applications, such as those based on differential equations, require high memory bandwidth, low latency, and irregular data access. As a consequence, Jack Dongarra, the creator of the LINPACK benchmark, co-developed a new benchmark called High Performance Conjugate Gradients (HPCG)<ref>http://www.sandia.gov/~maherou/docs/HPCG-Benchmark.pdf</ref>, whose data access patterns and computations relate more closely to contemporary applications. Moving to this benchmark should help rate computers and guide their design toward performance improvements for real applications, rather than a blind race for the top spot on the TOP500.

High Performance Conjugate Gradients (HPCG)

To address supercomputing performance in practical environments, the HPCG benchmark was designed so that its computation and data access patterns realistically match those of applications commonly run in the high performance computing industry. Instead of measuring the scalability of a single kind of operation, HPCG measures the collective performance of an application's mix of operations in a scaled environment<ref>http://hpcg-benchmark.org</ref>.

A key limitation of LINPACK is that it does not exercise irregular data access patterns, which are common in graph and sparse-matrix computations and account for much of the workload processed by supercomputers. HPCG uses an algorithm composed of many irregular data access operations and several levels of recursion (a multigrid preconditioner), as found in high performance computing applications.
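The conjugate gradient (CG) method at the heart of HPCG can be sketched with a sparse matrix stored in compressed sparse row (CSR) form. The indirect load `x[col_idx[k]]` in the sparse matrix-vector product is exactly the kind of irregular memory access that dense LINPACK does not exercise. This is a simplified sketch under stated assumptions: it uses a one-dimensional [-1, 2, -1] test matrix and no preconditioner, whereas the actual benchmark is considerably more elaborate (a preconditioned CG on a three-dimensional problem). Function names are hypothetical.

```python
from typing import List, Tuple

CSR = Tuple[List[float], List[int], List[int]]  # (values, col_idx, row_ptr)


def laplacian_1d_csr(n: int) -> CSR:
    """Tridiagonal [-1, 2, -1] matrix (symmetric positive definite) in CSR form."""
    values: List[float] = []
    col_idx: List[int] = []
    row_ptr = [0]
    for i in range(n):
        for j, v in ((i - 1, -1.0), (i, 2.0), (i + 1, -1.0)):
            if 0 <= j < n:
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def spmv(a: CSR, x: List[float]) -> List[float]:
    """y = A x; x[col_idx[k]] is an indirect, data-dependent memory access."""
    values, col_idx, row_ptr = a
    return [sum(values[k] * x[col_idx[k]] for k in range(row_ptr[i], row_ptr[i + 1]))
            for i in range(len(row_ptr) - 1)]


def dot(u: List[float], v: List[float]) -> float:
    return sum(p * q for p, q in zip(u, v))


def conjugate_gradient(a: CSR, b: List[float], tol: float = 1e-10,
                       max_iter: int = 1000) -> Tuple[List[float], int]:
    """Unpreconditioned CG for a symmetric positive definite A; returns (x, iterations)."""
    x = [0.0] * len(b)
    r = b[:]  # residual b - A x for the initial guess x = 0
    p = r[:]
    rr = dot(r, r)
    for it in range(max_iter):
        if rr ** 0.5 < tol:
            return x, it
        ap = spmv(a, p)
        alpha = rr / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        rr_new = dot(r, r)
        p = [ri + (rr_new / rr) * pi for ri, pi in zip(r, p)]
        rr = rr_new
    return x, max_iter


A = laplacian_1d_csr(50)
b = spmv(A, [1.0] * 50)  # right-hand side whose exact solution is all ones
x, iterations = conjugate_gradient(A, b)
print(iterations, max(abs(v - 1.0) for v in x))
```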

STREAM

Unlike the LINPACK benchmark, which assesses processing performance, the STREAM benchmark measures sustainable memory bandwidth and the corresponding computation rate of simple vector kernels. STREAM uses datasets larger than the system's caches, a situation common in applications that work with large vector arrays<ref>http://www.cs.virginia.edu/stream</ref>. Because CPUs can process data faster than memory can deliver it, raw computational speed matters little in STREAM: memory latency shows up as CPU idle time.

STREAM tests memory bandwidth with four operations, COPY, SCALE, SUM (called Add in STREAM's output), and TRIAD, ordered by increasing computational complexity. This range of complexity can be used to mimic real-world applications whose data is not stored in a quickly accessible location. Memory bandwidth is reported in GB/s, which can then be divided by the number of CPU cores to estimate performance per core<ref>http://www.admin-magazine.com/HPC/Articles/Finding-Memory-Bottlenecks-with-Stream</ref>.
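The four STREAM kernels are simple enough to write out directly. The sketch below follows STREAM's kernel definitions and its convention of counting 16 bytes per iteration for Copy and Scale and 24 for Add and Triad (8-byte doubles). Because pure Python is limited by interpreter overhead rather than by memory, the rates it prints say little about a machine's real bandwidth; treat it as a description of the kernels, not a substitute for the C or Fortran benchmark. Function names and the array size are assumptions.

```python
import time
from array import array
from typing import Dict, Tuple

BYTES_PER_ITER = {"Copy": 16, "Scale": 16, "Add": 24, "Triad": 24}  # 8-byte doubles


def stream_copy(c, a):          # c[i] = a[i]
    for i in range(len(a)):
        c[i] = a[i]


def stream_scale(b, c, q):      # b[i] = q * c[i]
    for i in range(len(c)):
        b[i] = q * c[i]


def stream_add(c, a, b):        # c[i] = a[i] + b[i]
    for i in range(len(a)):
        c[i] = a[i] + b[i]


def stream_triad(a, b, c, q):   # a[i] = b[i] + q * c[i]
    for i in range(len(b)):
        a[i] = b[i] + q * c[i]


def run_stream(n: int = 200_000, q: float = 3.0) -> Tuple[Dict[str, float], Tuple[array, array, array]]:
    """Run each kernel once, in STREAM order; return GB/s per kernel and the final arrays."""
    a = array("d", [1.0]) * n
    b = array("d", [2.0]) * n
    c = array("d", [0.0]) * n
    kernels = {
        "Copy": lambda: stream_copy(c, a),
        "Scale": lambda: stream_scale(b, c, q),
        "Add": lambda: stream_add(c, a, b),
        "Triad": lambda: stream_triad(a, b, c, q),
    }
    rates = {}
    for name, kernel in kernels.items():
        start = time.perf_counter()
        kernel()
        elapsed = time.perf_counter() - start
        rates[name] = BYTES_PER_ITER[name] * n / elapsed / 1e9
    return rates, (a, b, c)


rates, _ = run_stream()
for name, gbs in rates.items():
    print(f"{name:6s} {gbs:.3f} GB/s")
```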

Graph500

The Graph500 organization proposes an alternative to the standard TOP500 evaluation of supercomputer performance, introducing a new metric: traversed edges per second (TEPS)<ref>http://www.graph500.org</ref>. The motivation for Graph500 is that data-intensive applications are poorly served by machines designed for 3D physics simulations, the kind of workload LINPACK represents; this gap is a commonly recognized pitfall of the LINPACK benchmark.

The Graph500 benchmark starts by generating an edge list for a large graph whose size is set by the number of vertices and the edge factor (the ratio of edges to vertices). The edge list is then used to construct the graph, with each entry a tuple containing an endpoint and an edge weight. Starting from randomly chosen search keys (root vertices), the benchmark times a search of the graph and reports performance in TEPS: the number of traversed edges divided by the search time.
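The generate, construct, search, and measure steps can be sketched at small scale. Assumptions: edges are drawn uniformly at random (Graph500 itself uses a Kronecker generator), the graph is stored as CSR-style adjacency arrays without weights, the search is a breadth-first search, and the traversed-edge count is the number of input edges inside the component reached from the root. Function names are hypothetical.

```python
import random
import time
from collections import deque
from typing import List, Tuple

Edge = Tuple[int, int]


def generate_edges(scale: int, edge_factor: int, seed: int = 0) -> List[Edge]:
    """2**scale vertices and edge_factor * 2**scale uniformly random edges."""
    rng = random.Random(seed)
    n = 2 ** scale
    return [(rng.randrange(n), rng.randrange(n)) for _ in range(edge_factor * n)]


def build_csr(n: int, edges: List[Edge]) -> Tuple[List[int], List[int]]:
    """Construct undirected adjacency arrays (offsets, targets) from the edge list."""
    degree = [0] * n
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    offsets = [0] * (n + 1)
    for i in range(n):
        offsets[i + 1] = offsets[i] + degree[i]
    targets = [0] * offsets[n]
    fill = offsets[:-1]  # next free slot per vertex (a copy of the offsets)
    for u, v in edges:
        targets[fill[u]] = v
        fill[u] += 1
        targets[fill[v]] = u
        fill[v] += 1
    return offsets, targets


def bfs(offsets: List[int], targets: List[int], root: int) -> List[int]:
    """Breadth-first search; returns the parent of each vertex (-1 if unreached)."""
    parent = [-1] * (len(offsets) - 1)
    parent[root] = root
    frontier = deque([root])
    while frontier:
        u = frontier.popleft()
        for k in range(offsets[u], offsets[u + 1]):
            v = targets[k]
            if parent[v] == -1:
                parent[v] = u
                frontier.append(v)
    return parent


def teps(edges: List[Edge], offsets: List[int], targets: List[int],
         root: int) -> Tuple[float, int, List[int]]:
    """Time one search; return (TEPS, traversed edge count, parent array)."""
    start = time.perf_counter()
    parent = bfs(offsets, targets, root)
    elapsed = time.perf_counter() - start
    traversed = sum(1 for u, _ in edges if parent[u] != -1)  # edges in the root's component
    return traversed / elapsed, traversed, parent


rng = random.Random(1)
edges = generate_edges(scale=10, edge_factor=16)
offsets, targets = build_csr(2 ** 10, edges)
root = rng.choice([v for v in range(2 ** 10) if offsets[v + 1] > offsets[v]])  # non-isolated key
rate, n_edges, _ = teps(edges, offsets, targets, root)
print(f"{n_edges} edges traversed at {rate / 1e6:.2f} MTEPS")
```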

Supercomputer Architecture

The architecture of parallel systems<ref>https://computing.llnl.gov/tutorials/parallel_comp/#Whatis</ref> can be classified by the hardware level at which it supports parallelism. This is usually determined by how the processing elements are connected, the level at which they communicate, and the memory resources they share. In the TOP500's 2014 listings, the two primary supercomputing architectures were MPP and cluster systems: clustered architectures accounted for nearly 86% of the supercomputers, and MPP architectures for the remaining 14%.

The processing elements in a distributed system are connected by a network. Unlike symmetric multiprocessors, they do not share a common bus and have separate memories; since there is no shared memory, processing elements communicate by passing messages. Distributed computing systems scale well because there is no bus contention. Supercomputers in this category can be classified into the following domains<ref>http://en.wikipedia.org/wiki/Distributed_computing</ref>.
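Message passing without shared memory can be simulated on one machine to show the pattern: each simulated node works only on its own slice of the data and sends its result to node 0, which receives one message per node. Threads and `queue.Queue` merely stand in for nodes and the network here; on a real distributed system this would be done with a library such as MPI (for example, a reduction via `MPI_Reduce`). The function name is hypothetical.

```python
import queue
import threading
from typing import List


def distributed_sum(data: List[int], num_nodes: int = 4) -> int:
    """Sum `data` on simulated nodes that share nothing and communicate by messages."""
    network = queue.Queue()  # stands in for the interconnect
    # Scatter: each node receives its own slice up front (its "local memory").
    local_slices = [data[rank::num_nodes] for rank in range(num_nodes)]

    def node(rank: int) -> None:
        partial = sum(local_slices[rank])  # touches only this node's slice
        network.put(partial)               # "send" the result to node 0

    workers = [threading.Thread(target=node, args=(r,)) for r in range(num_nodes)]
    for w in workers:
        w.start()
    total = sum(network.get() for _ in range(num_nodes))  # node 0 receives one message per node
    for w in workers:
        w.join()
    return total


print(distributed_sum(list(range(100))))  # 4950
```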

Massive Parallel Processing (MPP) Architecture

Massively Parallel Processing (MPP) supercomputers are made up of hundreds of computing nodes that process data in a coordinated fashion. Each node of an MPP generally has its own memory and operating system and may contain multiple processors and/or multiple cores. In an MPP architecture, the interconnect is integrated into the memory bus.

The processing elements in a Massively Parallel Processing (MPP) system have their own memory modules and communication circuitry and are interconnected by a network. Common topologies include meshes, hypercubes, rings, and trees. These systems differ from cluster computers, the other class of distributed computers, in their communication scheme: MPPs use a specialized interconnect network, whereas clustered systems use off-the-shelf communication hardware.
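Of the topologies listed above, the hypercube has a particularly compact description: label the 2^d nodes with d-bit numbers and link two nodes whose labels differ in exactly one bit. The sketch below (hypothetical helper names) derives neighbours and minimum hop counts from that rule.

```python
from typing import List


def hypercube_neighbors(node: int, dimensions: int) -> List[int]:
    """Neighbours differ from `node` in exactly one of the d label bits."""
    return [node ^ (1 << bit) for bit in range(dimensions)]


def hypercube_hops(a: int, b: int) -> int:
    """Minimum hop count = number of differing bits (Hamming distance)."""
    return bin(a ^ b).count("1")


print(hypercube_neighbors(0b000, 3))  # [1, 2, 4]
print(hypercube_hops(0b000, 0b111))   # 3
```

Each node has only d links, yet any node can reach any other in at most d hops.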

The IBM Blue Gene/L<ref>http://en.wikipedia.org/wiki/Blue_Gene</ref> is an MPP system in which each node is connected to three parallel communication networks: a 3D torus for point-to-point communication between compute nodes, a network for collective communication (broadcasts and reduce operations), and a global interrupt network.
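In a 3D torus, each node has six nearest neighbours, one step in either direction along each axis, with the ends of every axis wrapped around. The sketch assumes a hypothetical 8 x 8 x 8 partition and simple coordinate-based routing in which the shortest hop count is the sum over axes of the shorter way around each ring; it is not Blue Gene/L's actual routing logic, and the function names are hypothetical.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(c: Coord, dims: Coord) -> List[Coord]:
    """Six nearest neighbours: +/-1 along each axis, wrapping around at the edges."""
    out = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(c)
            n[axis] = (n[axis] + step) % dims[axis]
            out.append((n[0], n[1], n[2]))
    return out


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Shortest hop count: along each axis, take the shorter way around the ring."""
    total = 0
    for x, y, size in zip(a, b, dims):
        d = abs(x - y)
        total += min(d, size - d)
    return total


dims = (8, 8, 8)  # hypothetical partition size
print(torus_neighbors((0, 0, 0), dims))
print(torus_hops((0, 0, 0), (7, 7, 7), dims))  # 3: one wraparound hop per axis
```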

The main advantages of MPPs are their ability to exploit temporal locality and to alleviate routing issues when a large number of processing elements are involved. These systems are used extensively in scientific simulations where a large problem can be broken into parallel segments; the discrete evaluation of differential equations is one example. Another advantage of MPP architectures is node scalability: they typically have smaller nodes connected by a high-speed interconnection network, which allows the processor count to grow into the hundreds of thousands.
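The differential-equation example can be sketched with a one-dimensional heat equation solved by explicit finite differences. Each simulated processor owns a contiguous segment of the grid and, before every step, needs only one boundary ("halo") value from each neighbouring segment; on an MPP those values would travel as messages over the interconnect. The grid, coefficient, and function names are assumptions for illustration. The decomposed version yields the same values as the serial one.

```python
from typing import List


def serial_heat(u: List[float], alpha: float, steps: int) -> List[float]:
    """Explicit finite differences for u_t = u_xx; the two end values stay fixed."""
    for _ in range(steps):
        u = ([u[0]]
             + [u[i] + alpha * (u[i - 1] - 2 * u[i] + u[i + 1]) for i in range(1, len(u) - 1)]
             + [u[-1]])
    return u


def decomposed_heat(u: List[float], alpha: float, steps: int, parts: int) -> List[float]:
    """Same scheme with the grid split into `parts` segments (requires parts <= len(u))."""
    n = len(u)
    local = [u[p * n // parts:(p + 1) * n // parts] for p in range(parts)]
    for _ in range(steps):
        # Halo exchange: each segment receives one edge value from each neighbour.
        halos = [(local[p - 1][-1] if p > 0 else None,
                  local[p + 1][0] if p < parts - 1 else None) for p in range(parts)]
        new_local = []
        for seg, (left, right) in zip(local, halos):
            new_seg = seg[:]
            for i in range(len(seg)):
                lo = seg[i - 1] if i > 0 else left
                hi = seg[i + 1] if i < len(seg) - 1 else right
                if lo is None or hi is None:
                    continue  # global boundary cell: value held fixed
                new_seg[i] = seg[i] + alpha * (lo - 2 * seg[i] + hi)
            new_local.append(new_seg)
        local = new_local
    return [v for seg in local for v in seg]


u0 = [0.0] * 10 + [1.0] * 10  # a step in temperature
print(max(abs(a - b) for a, b in zip(serial_heat(u0, 0.25, 50),
                                     decomposed_heat(u0, 0.25, 50, 4))))
```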

Disadvantages of the MPP architecture include the absence of globally shared memory, which slows inter-processor exchange because there is no common place to store data used by different processors. Local memory and storage can also become bottlenecks, since each processor can use only its own memory, and full use of system resources may not be possible because each subsystem works individually. MPP architectures are costly to build, as each subsystem requires its own memory, storage, and CPU. Programming is also challenging: the programmer must restructure the application to use a messaging API between processors. Finally, performance is inhibited by messaging overhead, which can translate into thousands of additional instructions that each processor must execute.

Clustered Architecture

Clusters are groups of computers connected through a network that appear as a single system to the outside world. Processing is distributed through load balancing and resource sharing, which happen entirely in the background. Commercial clustering was pioneered by Digital Equipment Corporation<ref>http://en.wikipedia.org/wiki/Digital_Equipment_Corporation</ref> in the 1980s, and clusters now form the largest share of the supercomputers available today.

TOP500.org<ref>http://www.TOP500.org TOP500.org</ref> data as of November 2013 shows that clusters make up the largest subset of supercomputers, at 84.6 percent.

In a cluster architecture, computers are harnessed together and work independently of the application interacting with them; the application or user sees them as a single resource<ref>http://wiki.expertiza.ncsu.edu/index.php/CSC/ECE_506_Spring_2013/1b_dj#Supercomputers Architecture</ref>.

There are three main components of a cluster architecture: interconnect technology, storage, and memory.

Interconnect technology coordinates the work of the nodes and carries out failover procedures when a subsystem fails. It makes the cluster appear to be a monolithic system and is also the basis for system management tools. The interconnect is usually built from networks or dedicated buses designed to make it reliable.
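Failover is commonly driven by heartbeats: nodes report in periodically, and a node that stays silent past a timeout is treated as failed and its work is moved elsewhere. The class below is a toy sketch of that idea under explicit assumptions (a single monitor, timestamps passed in by the caller, and least-loaded reassignment); it is not modeled on any particular cluster manager, and its names are hypothetical.

```python
from typing import Dict, List


class FailoverMonitor:
    """Toy heartbeat failure detector that reassigns a failed node's jobs."""

    def __init__(self, nodes: List[str], timeout: float) -> None:
        self.timeout = timeout
        self.last_seen: Dict[str, float] = {n: 0.0 for n in nodes}  # all seen at t = 0
        self.jobs: Dict[str, List[str]] = {n: [] for n in nodes}

    def heartbeat(self, node: str, now: float) -> None:
        """Record that `node` reported in at time `now` (seconds)."""
        self.last_seen[node] = now

    def check(self, now: float) -> List[str]:
        """Declare silent nodes failed and move their jobs to the least-loaded survivor."""
        failed = [n for n, t in self.last_seen.items() if now - t > self.timeout]
        alive = [n for n in self.last_seen if n not in failed]
        for n in failed:
            del self.last_seen[n]
            for job in self.jobs.pop(n):
                target = min(alive, key=lambda a: len(self.jobs[a]))  # ValueError if none alive
                self.jobs[target].append(job)
        return failed


monitor = FailoverMonitor(["node1", "node2"], timeout=5.0)
monitor.jobs["node1"] = ["sim-A"]
monitor.heartbeat("node2", 6.0)  # node1 stays silent after t = 0
print(monitor.check(now=7.0), monitor.jobs)  # node1 failed; sim-A moved to node2
```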

Storage in a cluster architecture can be shared or distributed. The picture on the right shows an example of a shared storage architecture for clusters, in which all the computers use the same storage. One benefit of shared storage is that it avoids the overhead of synchronizing separate storage devices, and it makes sense when the applications running on the cluster use large shared databases. In distributed storage, each node in the cluster has its own storage, and nodes share information by passing messages over the network. Additional work is needed to keep all the storage in sync in case of failover.

Lastly, memory in clusters also comes in shared or distributed flavors. Distributed memory is most common, but shared memory can be used in certain cases, depending on the intended use of the system.

The benefits of a cluster architecture include higher performance, higher availability, greater scalability, and lower operating costs. Clusters are known for providing continuous, uninterrupted service, which is achieved through redundancy<ref>http://en.wikipedia.org/wiki/Computer_cluster</ref>. In a clustered architecture, the interconnection network attaches to each node through an I/O device. Because each node has its own I/O controller and memory, processors are farther apart than in an MPP, so clusters tend to have higher messaging overhead than MPP architectures.
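Messaging overhead is often estimated with a latency-bandwidth model, in which sending n bytes costs a fixed start-up latency plus n divided by the bandwidth. The parameter values below are hypothetical, chosen only to contrast a tightly coupled interconnect with a commodity one; they are not measurements of any real MPP or cluster.

```python
def message_time(nbytes: float, latency_s: float, bandwidth_bytes_per_s: float) -> float:
    """Latency-bandwidth estimate: fixed start-up cost plus transfer time."""
    return latency_s + nbytes / bandwidth_bytes_per_s


# Hypothetical interconnect parameters, for illustration only.
TIGHTLY_COUPLED = {"latency_s": 1e-6, "bandwidth_bytes_per_s": 10e9}
COMMODITY = {"latency_s": 20e-6, "bandwidth_bytes_per_s": 1e9}

for size in (8, 1_000_000):
    fast = message_time(size, **TIGHTLY_COUPLED)
    slow = message_time(size, **COMMODITY)
    print(f"{size:>9} bytes: {fast * 1e6:8.2f} us vs {slow * 1e6:8.2f} us")
```

Latency dominates small messages and bandwidth dominates large ones, so a higher per-message overhead hurts most in applications that exchange many small messages.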

Other Cluster Types

As mentioned in the previous section, a cluster is a parallel computer system consisting of independent nodes, each of which is capable of individual operation.

A commodity cluster is one in which the processing elements and network(s) are commercially available off the shelf; proprietary hardware is not required. There are two broad classes of commodity clusters: cluster-NOW (network of workstations) and constellation systems. They are distinguished by the level of parallelism each exhibits. The first level of parallelism is the number of nodes connected by a global communication backbone; the second is the number of processing elements in each node, usually configured as an SMP<ref>http://escholarship.org/uc/item/95d2c8xn#page-6</ref>.

If the number of nodes in the network exceeds the number of processing elements in each node, the dominant mode of parallelism is at the first level, and such clusters are called cluster-NOW<ref>http://now.cs.berkeley.edu/</ref>. In constellation systems, second-level parallelism is dominant because each node has more processing elements than there are nodes in the network.
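The classification rule above can be written as a short function. In this minimal sketch, the `Cluster` class and the example configurations are hypothetical. The text does not say how to label a system whose two levels are equal, so the "balanced" result for that case is an assumption.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    nodes: int         # first level: nodes on the global communication backbone
    pes_per_node: int  # second level: processing elements per node (often an SMP)

    @property
    def total_pes(self) -> int:
        # Total processing elements across both levels of parallelism.
        return self.nodes * self.pes_per_node

    def classify(self) -> str:
        if self.nodes > self.pes_per_node:
            return "cluster-NOW"    # first-level parallelism dominates
        if self.pes_per_node > self.nodes:
            return "constellation"  # second-level parallelism dominates
        return "balanced"           # assumption: the text does not name this case


for c in (Cluster(nodes=1024, pes_per_node=8), Cluster(nodes=16, pes_per_node=64)):
    print(c.nodes, c.pes_per_node, c.total_pes, c.classify())
```

The first hypothetical system (1024 nodes of 8 processing elements) is a cluster-NOW. The second (16 nodes of 64 processing elements) is a constellation, even though it has fewer processing elements in total.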

The two classes also differ in how they are programmed. A cluster-NOW system is likely to be programmed exclusively with MPI, whereas a constellation is likely to be programmed, at least in part, with OpenMP using a threaded model.
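The two-level decomposition behind these programming models can be illustrated with a simple simulation. At the first level, each node owns a disjoint chunk of the data, and the partial results are combined at the end; this plays the role of MPI ranks and a message-passing reduction. At the second level, threads inside one node split that node's chunk in shared memory, in the spirit of OpenMP. This is only an in-process Python sketch. No real MPI or OpenMP is involved, the "nodes" run one after another, and Python threads give no real speedup for this kind of work. The helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items, parts):
    """Divide items into `parts` contiguous chunks of nearly equal size."""
    q, r = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + q + (1 if i < r else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def node_sum(chunk, threads):
    """Second level: threads within one node share its memory (OpenMP-style)."""
    with ThreadPoolExecutor(max_workers=threads) as pool:
        return sum(pool.map(sum, split(chunk, threads)))


def cluster_sum(data, nodes, threads_per_node):
    """First level: each node sums only its own chunk; combining the partial
    sums stands in for a message-passing reduction (MPI-style)."""
    partials = [node_sum(chunk, threads_per_node) for chunk in split(data, nodes)]
    return sum(partials)


data = list(range(1, 1001))
print(cluster_sum(data, nodes=4, threads_per_node=2))  # 500500
```

In this toy model, a cluster-NOW style run would use many nodes and `threads_per_node=1` (pure message passing). A constellation style run would use few nodes and many threads per node.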

Summary

Today's supercomputer landscape is a heterogeneous mix of architectures and infrastructures, which has made dividing supercomputers into well-defined subgroups rather arbitrary; there are many overlapping or hybrid examples. Supercomputing has found uses in a wide range of domains, and its potential uses continue to grow at an exciting rate. High performance computing has made large-scale simulations and calculations possible. This has enabled some of humanity's most ambitious projects and has had a profound impact on our well-being.

Clustered computers have clearly overtaken their MPP counterparts in performance as well as market share. This is primarily due to their cost-effectiveness and their ability to scale well in the number of processing elements.

The future of supercomputing lies in its convergence with cloud-based computing. Developments in cloud infrastructure and high-speed communication networks are expected to lead to a rapid rise in cloud use, especially by small and medium-scale institutions. According to a press release by IBM researchers, future supercomputers will use more optical components to reduce power consumption<ref>http://www.technologyreview.com/view/415765/the-future-of-supercomputers-is-optical/</ref>.

References

<references />