CSC/ECE 506 Spring 2014/10c gk: Difference between revisions

| (74 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

='''Memory Consistency models'''= | ='''Memory Consistency models'''= | ||

== '''Introduction''' == | == '''Introduction''' == | ||

The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. | The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. In other words, a memory consistency model is a set of rules that govern how memory systems will process memory access operations from multiple processors. In case of a uniprocessor, memory access operations occur in program order, so memory consistency may not be a significant issue. However, in the case of multiprocessor systems, the memory consistency model establishes the requirements for correct operation. These requirements then in turn govern the implementation of system optimizations that can have a direct impact on programming models. By this definition, it can be shown that, in effect, a low level memory consistency model can have a direct impact on algorithm design. Because of this dependency between consistency model and programming algorithm, a thorough understanding of memory consistency models is required while developing programs that run on distributed shared memory systems. | ||

The following wiki chapter provides a brief discussion on the intuition behind using relaxed memory consistency models for scalable design of multiprocessors. This chapter also provides an introduction to the consistency models implemented in real multiprocessor systems such as Digital Alpha, Sparc V9 , IBM Power PC and processors from Sun Microsystems. Memory consistency models used in the Android platform, specifically using the ARM CPU architecture as an example, are also discussed in the following sections. | |||

= '''Sequential Consistency Model (SC)''' = | = '''Sequential Consistency Model (SC)''' = | ||

=='''Introduction to Sequential Consistency Model'''== | =='''Introduction to Sequential Consistency Model'''== | ||

[[Image:SCM.jpg]] <br /> | <center>[[Image:SCM.jpg]]</center> <br /> | ||

In order to write correct and efficient shared memory programs, programmers need a precise notion of shared memory semantics. To ensure correct program execution, a programmer expects that the data value read should be the same as the latest value written to that variable in the system. | |||

However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior. Intuitively, a read should return the value of the "last" write to the same memory location. In uniprocessors, "last" is precisely defined by the sequential order specified by the program called '''program order'''. | However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior. Intuitively, a read should return the value of the "last" write to the same memory location. In uniprocessors, "last" is precisely defined by the sequential order specified by the program, called '''program order'''. However, this is not the case in multiprocessors. A write and read of a particular variable are not related by program order because they originate on two different processors. | ||

The uniprocessors model, however can be | The uniprocessors model, however, can be extended to apply to multiprocessors in a natural way. The resulting model is called '''Sequential consistency'''. In brief, sequential consistency requires that | ||

*all memory operations appear to execute one at a time, and | |||

*all memory operations of a single processor appear to execute in the order described by that processor's program. | *all memory operations of a single processor appear to execute in the order described by that processor's program. | ||

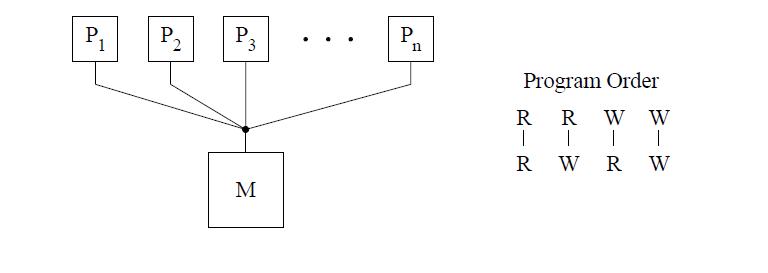

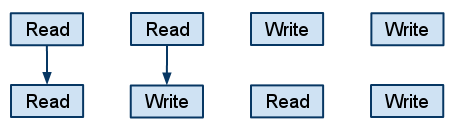

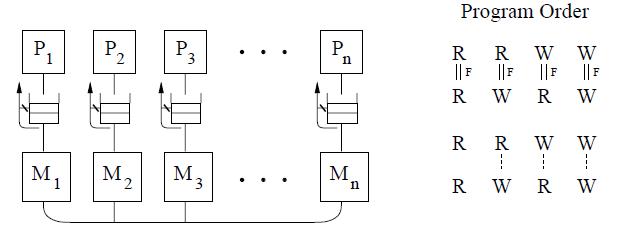

[[Image:SeqC.jpg]] <br /> | <center>[[Image:SeqC.jpg]] </center><br /> | ||

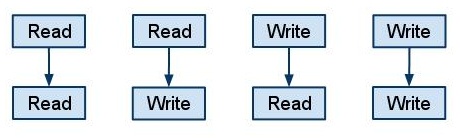

The figure above shows the basic representation for sequential consistency. This conceptual system for SC consists of n processors sharing a single logical memory. Though the figure does not show caches, an SC implementation may still cache data as long as the memory system appears as a single copy memory(i.e the writes should appear atomic). As SC requires program order to be maintained among all operation types, the pictorial representation of the program order shows all combinations of reads and writes, the line between them telling that the operations are required to complete in program order. | The figure above shows the basic representation for sequential consistency. This conceptual system for SC consists of n processors sharing a single logical memory. Though the figure does not show caches, an SC implementation may still cache data as long as the memory system appears as a single copy memory(i.e the writes should appear atomic). As SC requires program order to be maintained among all operation types, the pictorial representation of the program order shows all combinations of reads and writes, with the line between them telling that the operations are required to complete in program order. | ||

This model ensures that the reads of a variable | This model ensures that the reads of a variable will return the new values written to it by a processor. Sequential consistency provides a simple, intuitive programming model. Because of sequential consistency's strict consistency requirements, many of the architecture and compiler optimizations used in uniprocessors are not safely applicable to sequentially consistent multiprocessors.[ For more details on the sequential consistency model and its advantages/disadvantages refer to '''[http://www.cesr.ncsu.edu/solihin/Main.html Fundamentals of Parallel Computer Architecture]''' textbook by Yan Solihin , page 284 through 292]. For this reason, many '''Relaxed consistency models''' have been proposed, most of which | ||

are supported by commercial architectures. | are supported by commercial architectures. | ||

=='''Performance of Sequential Consistency on multiprocessors'''== | =='''Performance of Sequential Consistency on multiprocessors'''== | ||

Sequential Consistency (SC) is the most intuitive programming interface for shared memory multiprocessors. A system implementing SC appears to execute memory operations one at a time and in program order. A program written for an SC system requires and | Sequential Consistency (SC) is the most intuitive programming interface for shared memory multiprocessors. A system implementing SC appears to execute memory operations one at a time and in program order. A program written for an SC system requires and | ||

relies on a specified memory behavior to execute correctly. Implementing memory accesses according to the SC model constraints, however, would | relies on a specified memory behavior to execute correctly. Implementing memory accesses according to the SC model constraints, however, would create and adverse impact on system performance because memory accesses in shared-memory multiprocessors often incur prohibitively long | ||

latencies (tens of times longer than in uniprocessor systems). To enforce sequential consistency, illegal reordering caused by hardware optimizations like '''[http://en.wikipedia.org/wiki/Write_buffer Write buffers]''', '''[http://www.pcguide.com/ref/mbsys/cache/charTransactional-c.html Non-blocking caches]''' etc and compiler optimizations like '''[http://en.wikipedia.org/wiki/Loop-invariant_code_motion code motion]''', '''[http://en.wikipedia.org/wiki/Register_allocation register allocation]''','''[http://en.wikipedia.org/wiki/Common_subexpression_elimination eliminating common subexpressions]''', '''[http://www.cs.cmu.edu/afs/cs/academic/class/15828-s98/lectures/0318/index.htm loop transformations]''' etc resulting in reordering are not allowed. These are the optimizations which are implemented for better performance and are valid in uniprocessors. But in case of multiprocessors, these | latencies (tens of times longer than in uniprocessor systems). To enforce sequential consistency, illegal reordering caused by hardware optimizations like '''[http://en.wikipedia.org/wiki/Write_buffer Write buffers]''', '''[http://www.pcguide.com/ref/mbsys/cache/charTransactional-c.html Non-blocking caches]''' etc and compiler optimizations like '''[http://en.wikipedia.org/wiki/Loop-invariant_code_motion code motion]''', '''[http://en.wikipedia.org/wiki/Register_allocation register allocation]''','''[http://en.wikipedia.org/wiki/Common_subexpression_elimination eliminating common subexpressions]''', '''[http://www.cs.cmu.edu/afs/cs/academic/class/15828-s98/lectures/0318/index.htm loop transformations]''' etc resulting in reordering are not allowed. These are the optimizations which are implemented for better performance and are valid in uniprocessors. But in the case of multiprocessors, these optimizations fail to satisfy the requirements of sequential consistency and hence are not allowed. However, disallowing these optimization techniques has an adverse effect on the system's performance. | ||

A number of techniques have been proposed to enable the use of certain optimizations by the hardware and compiler without violating sequential consistency, those having the potential to substantially boost performance. Some of | A number of techniques have been proposed to enable the use of certain optimizations by the hardware and compiler without violating sequential consistency, specifically those optimizations having the potential to substantially boost performance. Some of these techniques are mentioned below: | ||

'''Hardware optimization techniques:''' | '''Hardware optimization techniques:''' | ||

| Line 35: | Line 35: | ||

of the roll back machinery is already present to deal with branch mispredictions. | of the roll back machinery is already present to deal with branch mispredictions. | ||

More information about these two techniques can be found in | More information about these two techniques can be found in the paper presented by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy of Stanford University at International Conference on Parallel Processing, '''[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf Two techniques to enhance the performance of memory consistency models]''' | ||

These two techniques of '''Prefetching''' and '''Speculative Reads''' are expected to be supported by several next generation microprocessors like MIPS R10000 and Intel P6, thus enabling more efficient hardware implementations of sequential consistency. | These two techniques of '''Prefetching''' and '''Speculative Reads''' are expected to be supported by several next generation microprocessors like MIPS R10000 and Intel P6, thus enabling more efficient hardware implementations of sequential consistency. | ||

'''Software Optimization techniques''' | '''Software Optimization techniques''' | ||

*'''Shasha and Snir's agorithm ''' : A compiler algorithm proposed by Dennis Shasha and Marc Snir is used to detect when memory operations can be reordered without violating sequential consistency. It uses the technique where | *'''Shasha and Snir's agorithm ''' : A compiler algorithm proposed by Dennis Shasha and Marc Snir is used to detect when memory operations can be reordered without violating sequential consistency. It uses the technique where sequential consistency can be enforced by delaying each access to shared memory until the previous access of the same processor has terminated. For performance reasons, it allows several accesses by the same processor to proceed concurrently. The compiler algorithm then performs an analysis to find a minimal set of delays that enforces sequential consistency. The analysis extends to interprocessor synchronization constraints and to code where blocks of operations have to execute atomically thus providing new compiler optimization techniques for parallel languages that support shared variables. | ||

In detail implementation of this algorithm can be studied from the paper : '''[http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory]''' | In detail implementation of this algorithm can be studied from the paper : '''[http://portal.acm.org/citation.cfm?id=42277 Efficient and correct execution of parallel programs that share memory]''' | ||

| Line 47: | Line 47: | ||

More information about this can be found in this paper by Arvind Krishnamurthy and Katherine Yelick presented at 7th International Workshop on Languages and Compilers for Parallel Computing '''[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8185&rep=rep1&type=pdf Optimizing parallel SPMD programs]''' | More information about this can be found in this paper by Arvind Krishnamurthy and Katherine Yelick presented at 7th International Workshop on Languages and Compilers for Parallel Computing '''[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8185&rep=rep1&type=pdf Optimizing parallel SPMD programs]''' | ||

In general the performance of sequential consistency model on multiprocessors with shared memory is low. But | In general the performance of the sequential consistency model on multiprocessors with shared memory is low. But through the use of the above techniques, better performance can be achieved. | ||

All of the above schemes mentioned to improve performance of SC allow a processor to overlap or reorder its memory accesses without software support, | All of the above schemes mentioned to improve performance of SC allow a processor to overlap or reorder its memory accesses without software support. However, they also either require complex or restricted hardware (e.g., hardware prefetching and rollback) or the gains are expected to be small. Further, the optimizations of these schemes can be exploited by hardware (or the runtime | ||

system software), but cannot be exploited by compilers. Work related to compiler optimizations including that by Shasha and Snir motivate their work for hardware optimizations. | system software), but cannot be exploited by compilers. Work related to compiler optimizations including that by Shasha and Snir, motivate their work for hardware optimizations. Thus the hardware costs incurred to implement these techniques are high. | ||

Sequential consistency models can be quite prohibitive on mobile platforms. Until a few years ago, all Android devices were uniprocessors. More recently however, a slew of Android devices based on SMP designs have been released. SMP architectures have to rely on relaxed memory consistency models for performance. | |||

Due to these factors, researchers and vendors have alternatively relied on '''relaxed memory consistency models''' that embed the shared-address space programming interface with directives enabling software to inform hardware when memory ordering is necessary. | |||

= '''Relaxed Consistency Models''' = | = '''Relaxed Consistency Models''' = | ||

| Line 58: | Line 60: | ||

There are two main reasons to implement Relaxed consistency models | There are two main reasons to implement Relaxed consistency models | ||

# It is not always necessary to maintain sequential consistency | # It is not always necessary to maintain sequential consistency | ||

# | # Ease of programming does not justify the hardware overhead and performance degradation caused by sequential consistency | ||

For example, due to the fact that write buffers cause operations to be presented to the cache-coherence protocol out of program order, it is difficult to use write buffers and maintain sequential consistency. Straightforward processors are also precluded from overlapping multiple reads and writes in the memory system. This restriction is crippling in systems without caches, where all operations go to memory. In systems with cache coherence—which are the norm today—this restriction has an impact on activity whenever operations miss or bypass the cache. (Cache bypassing occurs on uncacheable operations to I/O space, some block transfer operations, and writes to some coalescing write buffers.) | |||

The basic idea behind relaxed memory models is to enable the use of more optimizations by eliminating some of the constraints that sequential consistency places on the overlap and reordering of memory operations. In contrast to sequential consistency models, relaxed models typically allow certain memory operations to execute out of program order or non-atomically. | |||

Relaxed consistency models can be partitioned into subgroups using four comparisons: Type of Relaxation, Synchronizing vs. Non-Synchronizing, Issue vs. View-Based, and Relative Model Strength. | |||

'''1. Type of Relaxation:''' - A simple and effective way of categorizing relaxed consistency models is by defining which requirement of sequential consistency is relaxed. Systems implementing a relaxed consistency model either relax the program order or the write atomicity requirement. Depending upon the sequence, one or more events of the following order can be relaxed. | |||

'''1. Type of Relaxation:''' - A simple and effective way of categorizing relaxed consistency models is by defining which requirement of sequential consistency is relaxed. relaxed consistency model either | |||

<div style='margin-left:40px;'> | <div style='margin-left:40px;'> | ||

Read - Read<br> | Read - Read<br> | ||

| Line 78: | Line 77: | ||

'''2. Synchronizing vs. Non-Synchronizing:''' A synchronizing model divides shared memory | '''2. Synchronizing vs. Non-Synchronizing:''' A synchronizing model divides shared memory | ||

accesses into at least two groups and assigns a different consistency restriction to each group. | accesses into at least two groups and assigns a different consistency restriction to each group. | ||

In contrast, a non-synchronizing model does not differentiate between individual memory accesses and assigns the same consistency model to all accesses collectively. | |||

'''3. Issue vs. View-Based:''' An issue-based relaxation focuses on how the ordering of an | '''3. Issue vs. View-Based:''' An issue-based relaxation focuses on how the ordering of an | ||

instruction issue will be seen by the entire system, as a collective unit. On the other hand, a view-based method does not aim to simulate sequential consistency; in | instruction issue will be seen by the entire system, as a collective unit. On the other hand, a view-based method does not aim to simulate sequential consistency; in | ||

this category of models, each processor is allowed | this category of models, each processor is allowed its own view of the ordering of events in | ||

the system, and these views do not need to match. | the system, and these views do not need to match. | ||

'''4. Relative Model Strength:''' Some models are inherently | '''4. Relative Model Strength:''' Some models are inherently stronger (or more strict) than other | ||

models. If rating the strength of a model by the relaxations of program order or atomicity, | models. If rating the strength of a model by the relaxations of program order or atomicity, | ||

it may be possible to directly compare the strength of different models, as in a case where | it may be possible to directly compare the strength of different models, such as in a case where | ||

one model relaxes everything another model | one model relaxes everything another model relaxes in addition to one or more things that the other restricts. | ||

In this chapter we will mainly distinguish between different models based on type of relaxation of read-write ordering they allow. Each of these models have some flavors depending on some subtle differences. | In this chapter we will mainly distinguish between the different relaxed consistency models based on the type of relaxation of read-write ordering they allow. Each of these models have some flavors depending on some subtle differences. | ||

=='''Different Relaxed consistency Models'''== | =='''Different Relaxed consistency Models'''== | ||

Let us now see the consistency models in some of the real multiprocessor systems listed above. | Let us now see the consistency models in some of the real multiprocessor systems listed above. In order to serve as a supplement to the available materials, we will not focus on those topics that are already thoroughly covered in Solihin's textbook, such as weak ordering and processor consistency models. We will instead provide a deeper examination of some of the relaxed consistency models mentioned in the book as well as provide examples of real-world processors that use those models. | ||

==='''RCsc''' and '''RCpc'''=== | |||

RCsc and RCpc are two flavors of the release consistency model that differ somewhat in what instruction ordering the permit. RCsc maintains sequential consistency among synchronization operations, while RCpc maintains processor consistency among synchronization operations. More specifically, RCpc will allow a read to return the value of another processor's write early, while RCsc will not.[[#References|<sup>[1]</sup>]] | |||

==='''Relax Write-to-Read program order'''=== | ==='''Relax Write-to-Read program order'''=== | ||

Generally | Generally, write instructions take more time than reads. Based on this, the key program order optimization enabled by relaxed write-to-read models is to allow a read to be reordered with respect to previous writes from the same processor. While maintaining sequential consistency typically requires the processor to wait for a previous write to complete before completing the next read operation, this optimization allows the processor to continue with a read without waiting for write operations to complete. As a result, the write latency can be effectively hidden. Additionally, many programs provide sequentially consistent results even if the program order from a write to a read is not maintained.[[#References|<sup>[25]</sup>]] | ||

This method is not sufficiently flexible for compiler optimizations but successfully mask the latency of the write operation. Compilers tend to require reordering both | This method is not sufficiently flexible for compiler optimizations, but it can successfully mask the latency of the write operation. Compilers, however, tend to require reordering both with regards to read and write instructions. | ||

Here is an example of a program[[#References|<sup>[29]</sup>]] that fails: | Here is an example of a program[[#References|<sup>[29]</sup>]] that fails: | ||

| Line 109: | Line 111: | ||

Enter Critical Section Enter Critical Section | Enter Critical Section Enter Critical Section | ||

This | This program fails because the reads are allowed to bypass writes. This can cause P1 to enter the critical section without setting the flag. If the flag is not set, P2 is also able to enter the critical section, thus causing the program to fail. | ||

However | However, the following code[[#References|<sup>[29]</sup>]] works well with this model: | ||

P1 P2 | P1 P2 | ||

Data=2000 while(Flag==0); | Data=2000 while(Flag==0); | ||

| Line 118: | Line 120: | ||

Since writes are not allowed to be reordered with respect to each other, this code works flawlessly. | Since writes are not allowed to be reordered with respect to each other, this code works flawlessly. | ||

Therefore, even though systems that exploit | Therefore, even though systems that exploit the write-to-read optimization are not sequentially consistent, they appear sequentially consistent to a large class of programs. | ||

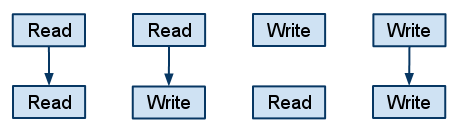

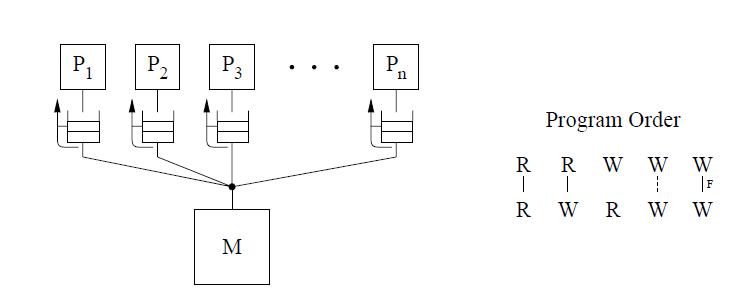

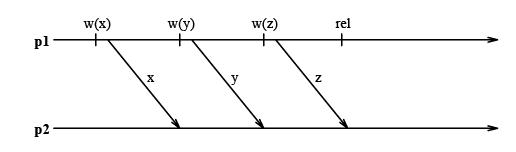

[[Image:rewr.png]] | <center>[[Image:rewr.png]]</center> | ||

Different flavors | Different flavors | ||

* processor consistency- PC | |||

* IBM 370 | |||

* Intel Pentium Pro | |||

* Sun’s Total Store Order | |||

These three models differ in when they allow a read to return the value of a write. They also differ in whether a processor is allowed to return the value of its own write before the write completes in memory. | |||

====''IBM-370''==== | ====''IBM-370''==== | ||

| Line 136: | Line 138: | ||

The IBM-370 model allows a write followed by a read to complete out of program order unless the two operations are to the same location, or if either operation is generated by a serialization instruction, or if there is a serialization instruction in program order between the two operations. | The IBM-370 model allows a write followed by a read to complete out of program order unless the two operations are to the same location, or if either operation is generated by a serialization instruction, or if there is a serialization instruction in program order between the two operations. | ||

As seen earlier write buffers are used to implement this reordering. As the writes are handled by write buffer reads can be performed before preceding write. | As seen earlier, write buffers are used to implement this reordering. As the writes are handled by the write buffer, reads can be performed before the preceding write completes in memory. | ||

To enforce the program order constraint from a write to a following read, the IBM 370 model provides special serialization instructions that may be placed between the two operations. Some serialization instructions are special memory instructions that are used for synchronization (e.g., compare&swap), while others are non-memory instructions such as a branch. Referring back to the first example program in this section, placing a serialization instruction after the write on each processor | To enforce the program order constraint from a write to a following read, the IBM 370 model provides special serialization instructions that may be placed between the two operations. Some serialization instructions are special memory instructions that are used for synchronization (e.g., compare&swap), while others are non-memory instructions such as a branch. Referring back to the first example program in this section, placing a serialization instruction after the write on each processor | ||

provides sequentially consistent results for the program even when it is executed on the IBM 370 model. | provides sequentially consistent results for the program even when it is executed on the IBM 370 model. | ||

[[Image:ibm370.jpg]] <br /> | <center>[[Image:ibm370.jpg]]</center> <br /> | ||

The conceptual system shown in the above figure is similar to that used for representing SC. The main difference is the presence of a buffer between each processor and the memory. Since we assume that each processor issues its operations in program order, we use the buffer to model the fact that the operations are not necessarily issued in the same order to memory. The cancelled reply path from the buffer to the processor implies that a read | The conceptual system shown in the above figure is similar to that used for representing SC. The main difference is the presence of a buffer between each processor and the memory. Since we assume that each processor issues its operations in program order, we use the buffer to model the fact that the operations are not necessarily issued in the same order to memory. The cancelled reply path from the buffer to the processor implies that a read is not allowed to return the value of a write to the same location from the buffer. <br /> | ||

The IBM-370 model has two types of serialization instructions: special instructions that generate memory operations (e.g., compare-and-swap) and special non-memory instructions (e.g., a special branch). | The IBM-370 model has two types of serialization instructions: special instructions that generate memory operations (e.g., compare-and-swap) and special non-memory instructions (e.g., a special branch). | ||

| Line 149: | Line 151: | ||

The total store ordering (TSO) model partially relaxes the above system's requirement by allowing a read to return the value of its own processor’s write even before the write is serialized with respect to other writes to the same location. However, as with sequential consistency, a read is not allowed to return the value of another processor’s write until it is made visible to all other processors. | The total store ordering (TSO) model partially relaxes the above system's requirement by allowing a read to return the value of its own processor’s write even before the write is serialized with respect to other writes to the same location. However, as with sequential consistency, a read is not allowed to return the value of another processor’s write until it is made visible to all other processors. | ||

The TSO model allows reordering of read followed by write without any constraint. All other program orders are maintained. The conceptual system is almost identical to that of IBM-370 except the forwarding path from the buffer to a read is no longer blocked. Therefore, if a read matches (i.e., is to the same location as) a write in the write buffer, the value of the last such write in the buffer that is before it in program order is forwarded to the read. Otherwise, the read returns the value in memory, as in the SC and IBM-370 models. | The TSO model allows reordering of a read followed by a write without any constraint. All other program orders are maintained. The conceptual system is almost identical to that of the IBM-370 except that the forwarding path from the buffer to a read is no longer blocked. Therefore, if a read matches (i.e., is to the same location as) a write in the write buffer, the value of the last such write in the buffer that is before it in program order is forwarded to the read. Otherwise, the read returns the value in memory, as is the case in the SC and IBM-370 models. | ||

If we consider operations as executing in some sequential order, the buffer-and-memory value requirement requires the read to return the value of either the last write to the same location that appears before the read in this sequence or the last write to the same location that is before the read in program order, whichever occurs later in the sequence. | If we consider operations as executing in some sequential order, the buffer-and-memory value requirement requires the read to return the value of either the last write to the same location that appears before the read in this sequence or the last write to the same location that is before the read in program order, whichever occurs later in the sequence. | ||

[[Image:TSO1.jpg]] <br /> | <center>[[Image:TSO1.jpg]]</center> <br /> | ||

For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes. | For TSO, a safety net for write atomicity is required only for a write that is followed by a read to the same location in the same processor. The atomicity can be achieved by ensuring program order from the write to the read using read-modify-writes. | ||

Unlike IBM 370, the TSO model does not provide explicit safety net. But read-modify-write operations can be used to provide the illusion that program order is maintained between a write and a following read. Program order appears to be maintained if either the write or the read is already part of a read-modify-write or is replaced by a read-modify-write. | Unlike IBM 370, the TSO model does not provide an explicit safety net. But read-modify-write operations can be used to provide the illusion that program order is maintained between a write and a following read. Program order appears to be maintained if either the write or the read is already part of a read-modify-write or is replaced by a read-modify-write. | ||

'''Difference between IBM370 and TSO:''' <br /> | '''Difference between IBM370 and TSO:''' <br /> | ||

| Line 174: | Line 176: | ||

d1:w=B d2:x=A | d1:w=B d2:x=A | ||

First consider the program segment in (a). Under the | First consider the program segment in (a). Under the sequential consistency or IBM-370 models, the outcome (u,v,w,x)=(1,1,0,0) is disallowed. However, this outcome is possible under TSO because reads are allowed to bypass all previous writes, even if they are to the same location. Therefore the sequence (b1,b2,c1,c2,a1,a2) is a valid total order for TSO. Of course, the consistency value requirement still requires b1 and b2 to return the values of a1 and a2, respectively, even though the reads occur earlier in the sequence than the writes. This requirement maintains the programmer's intuition that a read observes all the writes issued from the same processor as the read. Consider the program segment in (b). In this case, the outcome (u,v,w,x)=(1,2,0,0) is not allowed under SC or IBM-370, but is possible under TSO. | ||

writes. This maintains the intuition that a read observes all the writes issued from the same processor as the read. Consider the program segment in (b). In this case, the outcome (u,v,w,x)=(1,2,0,0)is not allowed under SC or IBM-370, but is possible under TSO. | |||

====''Processor Consistency (PC) ''==== | ====''Processor Consistency (PC) ''==== | ||

Finally, the PC model relaxes both constraints, such that a read can return the value of any write before the write is serialized or made visible to other processors. | Finally, the PC model relaxes both constraints, such that a read can return the value of any write before the write is serialized or made visible to other processors. | ||

Unlike the previous two models, Processor Consistency, first introduced in [21], is both view-based and non-synchronizing. In other words, each processor is allowed to have its own view of the system, and all memory accesses are treated the same. The order in which writes are observed must be the same as the order in which they were issued. However, if two processors issue writes, those writes do not need to appear to execute in the same order | Unlike the previous two models, Processor Consistency, first introduced in [21], is both view-based and non-synchronizing. In other words, each processor is allowed to have its own view of the system, and all memory accesses are treated the same. The order in which writes are observed must be the same as the order in which they were issued. However, if two processors both issue writes, those writes do not need to appear to execute in the same order from the perspective of either of the two processors or a third processor. The conditions of Processor Consistency phrased in another way are: | ||

* Before a read operation is allowed to perform with respect to any other processor, all previous read accesses must have already been performed. | |||

* Before any write operation is allowed to perform with respect to any other processor, all previous reads and writes must have been performed. | |||

The two conditions above imply one important fact: only the read-after-write program order requirement is relaxed. | |||

Even in | Even in the processor consistency model, there is no explicit safety net. Also, while the TSO approach to provide illusion of a safety net is enough in the case of reads, it cannot be used in the case of write instructions. | ||

====''Differences in IBM370, TSO and PC''==== | ====''Differences in IBM370, TSO and PC''==== | ||

| Line 199: | Line 201: | ||

register2 = register4 = 0 | register2 = register4 = 0 | ||

TSO and PC allow the results in part a to occur because they let the reads of the flags to happen before the writes. | TSO and PC both allow the results in part a) to occur because they let the reads of the flags to happen before the writes. However, this is not possible with the IBM-370 model as the read of A is not allowed on each processor until the write on that processor is done. | ||

Similarly, given part b results are allowed | Similarly, given part b), the results are allowed by the processor consistency model but not by IBM-370 or TSO. | ||

==='''Relax Write-to-Write program order'''=== | ==='''Relax Write-to-Write program order'''=== | ||

The second category of relaxed models that we consider | The second category of relaxed consistency models that we will consider allows two writes to execute out of program order in addition to allowing the reordering of a write followed by a read. This relaxation enables a number of hardware optimizations, including write merging in a write buffer and overlapping multiple write misses, all of which can lead to a reordering of write operations. Therefore, write operations can be serviced at a much faster rate. | ||

Even this model is not flexible enough for compiler optimizations. | Even this model is not flexible enough for compiler optimizations. | ||

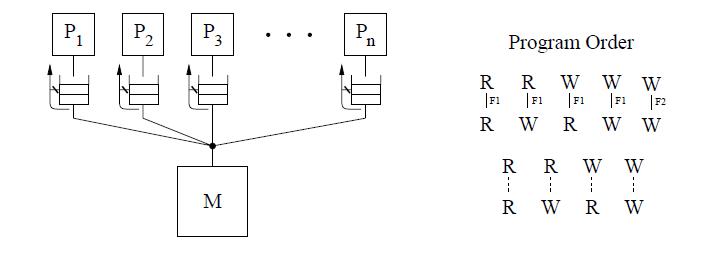

[[Image:reww.png]] | <center>[[Image:reww.png]]</center> | ||

==== ''SPARC V8 Partial Store Ordering'' ==== | ==== ''SPARC V8 Partial Store Ordering'' ==== | ||

One extra hardware optimization enabled by PSO in addition to the previous set of models is that writes to different locations from the same processor can be pipelined or overlapped and are allowed to reach memory or other cached copies out of program order. PSO and TSO have the same atomicity requirements by allowing a processor to read the value of its own write early, and preventing a processor from reading the value of another processor’s write before the write is visible to all other processors.[[#References|<sup>[26]</sup>]] | |||

This model can result in non sequentially consistent results in both of the below cases as opposed to the previously mentioned 3 protocols:<br> | This model can result in non-sequentially consistent results in both of the below cases as opposed to the previously mentioned 3 protocols:<br> | ||

a) | a) | ||

P1 P2 | P1 P2 | ||

| Line 223: | Line 225: | ||

Flag=1 Read Data | Flag=1 Read Data | ||

For maintaining order between two writes, PSO provides an instruction called STBAR (Barrier). | For maintaining order between two writes, PSO provides an instruction called STBAR (Barrier). | ||

The safety net provided by PSO for imposing the program order from a write to a read, and for enforcing write | The safety net provided by PSO for imposing the program order from a write to a read, and for enforcing write | ||

atomicity, is the same as TSO. PSO provides an explicit STBAR instruction for imposing program order between | atomicity, is the same as TSO. PSO also provides an explicit STBAR instruction for imposing program order between | ||

two writes. | two writes. | ||

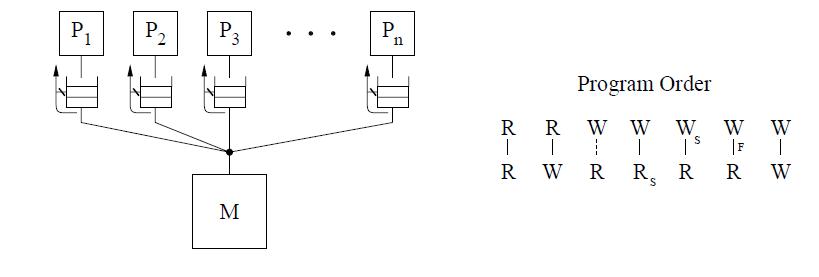

The '''Partial Store Ordering'''('''PSO''') model is very similar to the TSO model for SPARC V8. The figure below shows an identical conceptual system. There is only a slight difference in the program order, where writes to locations can be overlapped only if they are not to same locations. This is represented by the dashed line between the W's in the program order figure. | The '''Partial Store Ordering'''('''PSO''') model is very similar to the TSO model for SPARC V8. The figure below shows an identical conceptual system. There is only a slight difference in the program order, where writes to locations can be overlapped only if they are not to same locations. This is represented by the dashed line between the W's in the program order figure. A safety net for the program order is provided through a fence instruction, called the store barrier or STBAR, that may be used to enforce the program order between writes.<br /> | ||

[[Image:PSO.jpg]] <br /> | <center>[[Image:PSO.jpg]]</center> <br /> | ||

Let us consider an example from '''[http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors]''' to demonstrate the working of PSO.<br /> | Let us consider an example from '''[http://infolab.stanford.edu/pub/cstr/reports/csl/tr/95/685/CSL-TR-95-685.pdf Technical Report by Kourosh Gharachorloo on Memory Consistency Models for shared-memory Multiprocessors]''' to demonstrate the working of PSO.<br /> | ||

| Line 240: | Line 241: | ||

c1:Flag=1 c2:v=B | c1:Flag=1 c2:v=B | ||

With relaxed Write to Read models, the only value for (u,v) is (1,1). With PSO, since it allows writes to different locations to complete out of program order, it also allows the outcomes (0,0) or (0,1) or (1,0) for (u,v). In this example, STBAR instruction needs to be placed immediately before ''c1'' on P1 in order to disallow all outcomes except (1,1). | With relaxed Write to Read models, the only value for (u,v) is (1,1). With PSO, since it allows writes to different locations to complete out of program order, it also allows the outcomes (0,0) or (0,1) or (1,0) for (u,v). In this example, a STBAR instruction needs to be placed immediately before ''c1'' on P1 in order to disallow all outcomes except (1,1). | ||

==='''Relax Read-to-Read and Read-to-Write program orders'''=== | ==='''Relax Read-to-Read and Read-to-Write program orders'''=== | ||

This model sheds the restriction on program order between all operations, including read to read and read followed by write to different locations . This flexibility provides the possibility of hiding the latency of read operations by implementing true non-blocking reads in the context of either static (in-order) or dynamic (out-of-order) scheduling processors, supported by techniques such as non-blocking (lockup-free) caches and speculative execution. The compiler also has full flexibility to reorder operations.<br /> | This model sheds the restriction on program order between all operations, including read to read and read followed by write to different locations . This flexibility provides the possibility of hiding the latency of read operations by implementing true non-blocking reads in the context of either static (in-order) or dynamic (out-of-order) scheduling processors, supported by techniques such as non-blocking (lockup-free) caches and speculative execution. The compiler also has full flexibility to reorder operations.<br /> | ||

'''Weak ordering (WO)''', '''Release consistency (RC)''', '''DEC Alpha''', '''Relaxed Memory Order (RMO)''', and '''PowerPC''' are examples of this model, with the last three models for commercial architectures. Except for Alpha,all the other models allow reordering of two reads to the same location. <br /> | '''Weak ordering (WO)''', '''Release consistency (RC)''', '''DEC Alpha''', '''Relaxed Memory Order (RMO)''', and '''PowerPC''' are examples of this model, with the last three models for commercial architectures. Except for Alpha,all the other models allow reordering of two reads to the same location.[[#References|<sup>[1]</sup>]] <br /> | ||

All of the models in this group allow a processor to read its own write early with the exception of | All of the models in this group allow a processor to read its own write early with the exception of RCpc, a flavor of Release consistency (RC), and PowerPC. All of the three commercial architectures provide explicit fence instructions to ensure program order. Frequent use of fence instructions can incur a significant overhead due to an increase in the number of instructions and the extra delay that may be associated with executing fence instructions. | ||

==== ''DEC Alpha''==== | ==== ''DEC Alpha''==== | ||

The program order constraints allow reads and writes to different locations to complete out of program order | The Alpha model's program order constraints allow reads and writes to different locations to complete out of program order | ||

unless there is a fence instruction between them. | unless there is a fence instruction between them. However, memory operations to the same location, including reads, are required to complete in program order. The safety net for program order is provided through the fence instructions, the memory barrier (MB) and the write memory barrier (WMB). The MB instruction can be used to maintain program order between any memory operations, while the WMB instruction provides this guarantee only among write operations. The Alpha model does not require a safety net for write atomicity. | ||

[[Image:alpha.jpg]] <br /> | <center>[[Image:alpha.jpg]]</center> <br /> | ||

The conceptual system shown above is the | The conceptual system shown above is the same as IBM-370 which requires a read to return the value of the last write operation to the same location. But since we can relax the program order from a write to a read, we can safely exploit optimizations such as read forwarding. | ||

==== ''Relaxed Memory Order (RMO)''==== | ==== ''Relaxed Memory Order (RMO)''==== | ||

The SPARC V9 architecture uses RMO which is an extension of the TSO and PSO models used in SPARC V8. | The SPARC V9 architecture uses RMO which is an extension of the TSO and PSO models used in SPARC V8. | ||

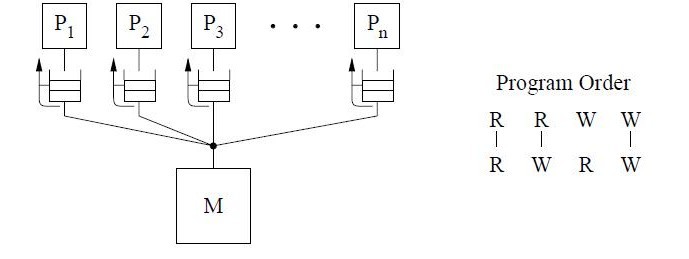

The Read to Read, Read to | The Read to Read, Read to Write program order is relaxed in this model, much like in PSO. But a read to write or the order between two writes to the same location are not relaxed. This is shown in the figure for program order shown below. <br /> | ||

[[Image:rmo1.jpg]] <br /> | <center>[[Image:rmo1.jpg]]</center> <br /> | ||

RMO provides four types of fences[F1 through F4] that allow program order to be selectively maintained between any two types of operations. A single fence instruction, MEMBAR, can specify a combination of the above fence types by setting the appropriate bits in a four-bit opcode. No safety net for write atomicity is required. | RMO provides four types of fences[F1 through F4] that allow program order to be selectively maintained between any two types of operations. A single fence instruction, MEMBAR, can specify a combination of the above fence types by setting the appropriate bits in a four-bit opcode. No safety net for write atomicity is required. | ||

==== ''IBM PowerPC'' ==== | |||

This model constrains the program order <br /> | |||

#Between sub-operations. That is each operation may consist of multiple sub-operations and that all sub-operations of the first operation must complete before any sub-operations of the second operation.[Represented by the double lines between operations in the figure below.] <br /> | |||

#Between writes to the same location.<br /> | |||

#Among conflicting operations. <br /> | |||

==== '' | <center>[[Image:powerpc.jpg]]</center> <br /> | ||

The IBM PowerPC model exposes multiple-copy semantics of memory to the programmer as shown in the conceptual system above. Safety net fence instruction is called SYNC which is similar to the MB fence instruction of the Alpha systems. However, a SYNC between two reads allows them to occur out of program order. Additionally, PowerPC allows a write to be seen early by another processor’s read. Hence a read-modify-write operation may be needed to enforce program order between two reads to the same location as well as to make writes appear atomic. | |||

==== ''Android Platform (ARM Architecture)'' ==== | |||

ARM SMP provides weak memory consistency guarantees. As we know with weak ordering consistency, unless the programmer explicitly defines the ordering using synchronization primitives, the hardware doesn't guarantee any ordering of memory accesses. | |||

There are four basic situations to consider:<br> | |||

1. store followed by another store<br> | |||

2. load followed by another load<br> | |||

3. load followed by store<br> | |||

4. store followed by load<br> | |||

===== '''Store/store and load/load''' ===== | |||

Thread 1 Thread 2 | |||

A = 100 loop_until(B == 1) | |||

B = 1 print A | |||

Thread 1 needs to ensure that the store to A happens before the store to B. This is a “store/store” situation. Similarly, thread 2 needs to ensure that the load of B happens before the load of A; this is a load/load situation. The loads and stores can be observed in any order. This can be corrected using barriers as follows: | |||

Thread 1 Thread 2 | |||

A = 100 loop_until(B == 1) | |||

store/store barrier load/load barrier | |||

B = 1 print A | |||

The store/store barrier guarantees that all threads will observe the write to A before they observe the write to B. It makes no guarantees about the ordering of loads in thread 1. The load/load barrier in thread 2 makes a similar guarantee for the loads there. | |||

===== '''Load/store and store/load''' ===== | |||

Thread 1 Thread 2 | |||

print A loop_until(B == 1) | |||

B = 1 A = 100 | |||

Thread 2 could observe thread 1’s store of B=1 before it observe’s thread 1’s load from A, and as a result store A=100 before thread 1 has a chance to read A. Inserting a load/store barrier in each thread solves the problem: | |||

Thread 1 Thread 2 | |||

print A loop_until(B == 1) | |||

load/store barrier load/store barrier | |||

B = 1 A = 100 | |||

The | The load/store barrier guarantees that thread 1's load of A happens before its B=1, thus guaranteeing that thread 2's A=100 does not overwrite thread 1's load of A. | ||

=='''The Big Picture - Relationship Between Different Models'''== | =='''The Big Picture - Relationship Between Different Models'''== | ||

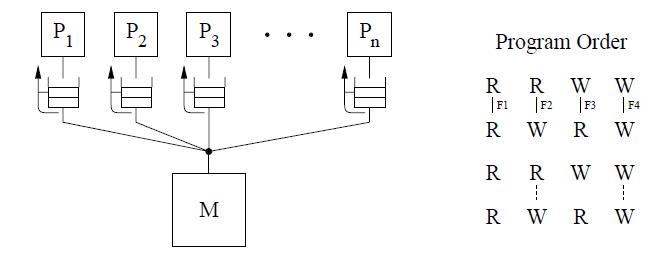

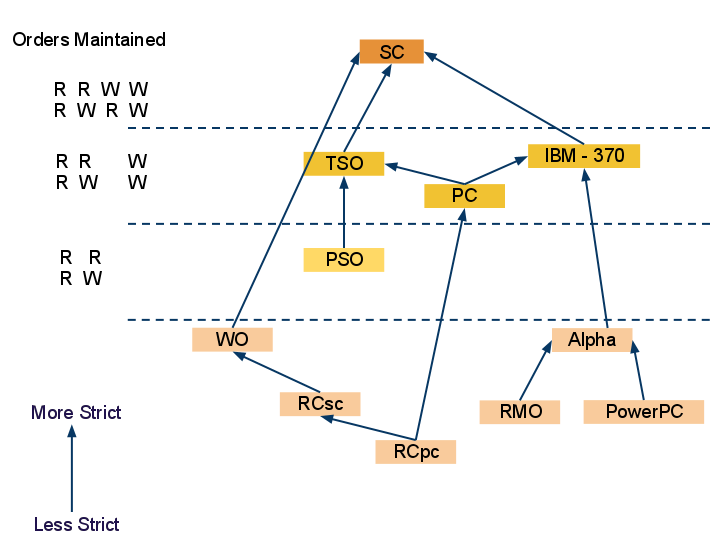

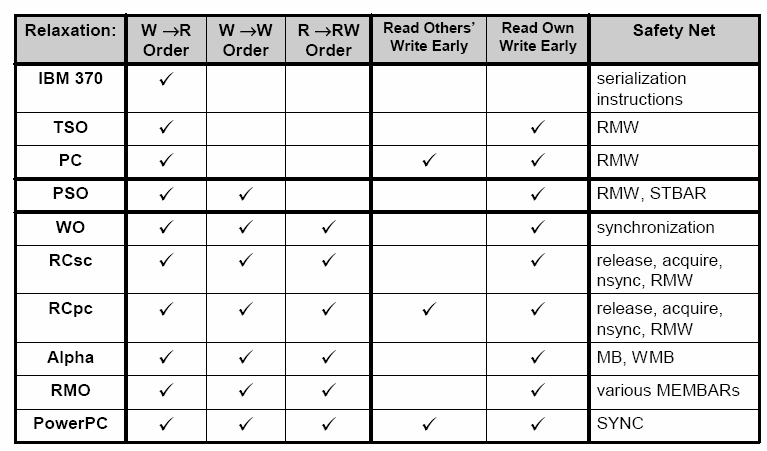

[[Image:rbdf.png]] | <center>[[Image:rbdf.png]]</center> | ||

In this diagram we can see the different memory consistency models and how they are related to each other. The strictest model SC, sequential consistency, is at the top level, which maintains all 4 orders of read-write. The first relaxation of write-read order is allowed in memory models TSO, PC and IBM-370 which can be seen at the second level from the top. The third level, which is comprised of the PSO model, also allows write - write order relaxation and hence is less strict than any of the TSO, PC or IBM-370 models. In the bottom level both read-read and read-write program orders are also relaxed and are thus the least strict of the models. Different flavors of the model in this level are discussed later in this chapter as well as in the Yan Solihin[9] text. A tabular representation of the differences in relaxation optimizations utilized by the different models can be seen in the table below[1]. | |||

<center>[[Image:Relax_consist_table.png]]</center> | |||

=='''Release Consistency Related Models'''== | =='''Release Consistency Related Models'''== | ||

'''Release consistency''' is one of the consistency models used in the domain of the concurrent programming (e.g. in distributed shared memory, distributed transactions etc.). | '''Release consistency''' is one of the consistency models used in the domain of the concurrent programming (e.g. in distributed shared memory, distributed transactions etc.). | ||

Systems of this kind are characterized by the existence of two special synchronization operations, release and acquire. Before issuing a write to a memory object a node must acquire the object via a special operation, and later release it. Therefore the application that runs within the | Systems of this kind are characterized by the existence of two special synchronization operations, release and acquire. Before issuing a write to a memory object, a node must acquire the object via a special operation, and after the operation is completed, the node must later release it. Therefore the application that runs within the operations acquire and release constitutes the critical region. The system is said to provide release consistency if all write operations by a certain node are seen by the other nodes after the former releases the object and before the latter acquires it. | ||

Release consistency provides | Release consistency provides two kinds of operations. '''Acquire''' operations are used to tell the memory system that a critical region is about to be entered. '''Release''' operations say that a critical region has just been exited. These operations can be implemented either as ordinary operations on special variables or as special operations. | ||

==='''Release Consistency Models'''=== | ==='''Release Consistency Models'''=== | ||

We can broadly divide the types of models based on their implementations into the following | We can broadly divide the types of models based on their implementations into the following two types: | ||

<br> | <br> | ||

'''Eager Release '''<br> | *'''Eager Release '''<br> | ||

'''Lazy Release''' <br> | *'''Lazy Release''' <br> | ||

===='''Eager Release Consistency Model'''==== | ===='''Eager Release Consistency Model'''==== | ||

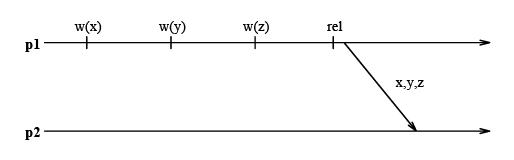

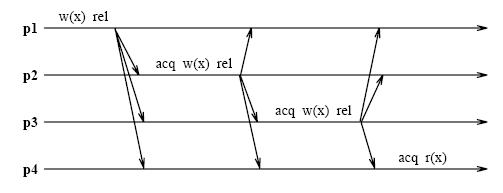

In Eager Release Consistency Model , the invalidation (or write notices) are propagated at release points. '''Munin's write shared protocol''' proposed by '''John K. Bennett, John B. Carter, and Willy Zwaenepoel''' of Rice University implemented this Eager Release Consistency Model. It is a software implementation of | In the Eager Release Consistency Model , the invalidation (or write notices) are propagated at release points. '''Munin's write shared protocol''' proposed by '''John K. Bennett, John B. Carter, and Willy Zwaenepoel''' of Rice University implemented this Eager Release Consistency Model. It is a software implementation of the release consistency model which buffers writes until a release, instead of pipelining them as in the DASH implementation. At the point of release all writes going to the same destination are merged into a single message. This is illustrated in the following diagram: | ||

<center> [[Image:munin.jpg]] </center> | <center> [[Image:munin.jpg]] </center> | ||

| Line 297: | Line 339: | ||

===='''Lazy Release Consistency Model'''==== | ===='''Lazy Release Consistency Model'''==== | ||

Lazy release consistency (LRC) is consistency model most frequently used in Software Distributed Shared Memory. It is used | Lazy release consistency (LRC) is the consistency model most frequently used in Software Distributed Shared Memory. It is used for implementing release consistency that lazily pulls modifications across the interconnect only when necessary. The basic concept behind the protocol is to allow processors to continue referencing cache blocks that have been written by other processors. Although write notices are sent as soon as a processor writes a shared block, invalidations occur only at acquire operations. This is sufficient to ensure that true sharing dependencies are observed. Lazy algorithms such as LRC do not make modifications globally visible at the time of a release. Instead, LRC guarantees only that a processor that acquires a lock will see all modifications that precede the lock acquire. | ||

<center> [[Image: LRC.jpg]] </center> | <center> [[Image: LRC.jpg]] </center> | ||

| Line 310: | Line 352: | ||

<br> | <br> | ||

If an update policy is used in conjunction with Munin's write shared protocol and x is present in all caches, then all of these cached copies are updated at every release. Logically, however it suffices to update each processor's copy only when it acquires l. This results in a single message exchange per acquire as in a message passing implementation. | If an update policy is used in conjunction with Munin's write shared protocol and x is present in all caches, then all of these cached copies are updated at every release. Logically, however, it suffices to update each processor's copy only when it acquires l. This results in a single message exchange per acquire as in a message passing implementation. | ||

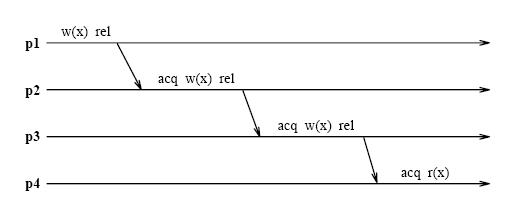

Unlike eager algorithms such as Munin's write shared protocol, lazy algorithms such as LRC (Lazy Release Consistency) do not make modications globally visible at the time of a release. Instead LRC guarantees only that a processor that acquires a lock will see all modications that precede the lock acquire. As indicated in the above figure , all | Unlike eager algorithms such as Munin's write shared protocol, lazy algorithms such as LRC (Lazy Release Consistency) do not make modications globally visible at the time of a release. Instead LRC guarantees only that a processor that acquires a lock will see all modications that precede the lock acquire. As indicated in the above figure, all modifications that occur in program order before any of the releases in p1 through p4 precede the lock acquisition in p4. With LRC, modifications are propagated at the time of an acquire. Only the modifications that precede the acquire are sent to the acquiring processor. The modifications can be piggybacked on the message that grants the lock, further reducing message traffic. The following figure shows the message traffic in LRC for the same shared data accesses as in the figure shown under lazy consistency model section. l and x are sent in a single message at each acquire. | ||

<center> [[Image:lazy2.jpg]] </center> | <center> [[Image:lazy2.jpg]] </center> | ||

| Line 320: | Line 362: | ||

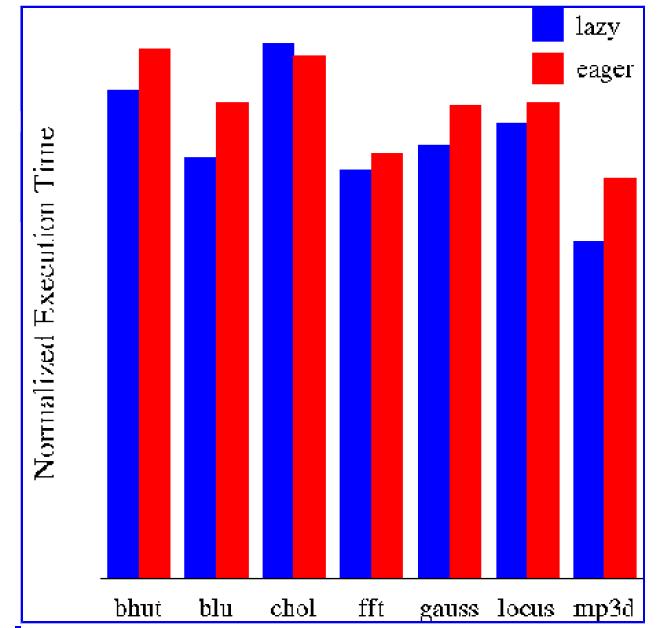

====='''Performance Analysis of Lazy and Eager Consistency Models'''===== | ====='''Performance Analysis of Lazy and Eager Consistency Models'''===== | ||

Leonidas I. Kontothanassis, Michael L. Scott, and Ricardo Bianchini from University of Rochester have performed | Leonidas I. Kontothanassis, Michael L. Scott, and Ricardo Bianchini from University of Rochester have performed experiments to evaluate a lazy release-consistent protocol suitable for machines with dedicated protocol processors. Their results indicate that the first protocol outperforms eager release consistency by as much as 20% across a variety of applications. The lazier protocol, on the other hand, is unable to recoup its high synchronization overhead. This represents a qualitative shift from the DSM world, where lazier protocols always yield performance improvements. Based on their results, they conclude that machines with flexible hardware support for coherence should use protocols based on lazy release consistency, but in a less ''aggressively lazy'' form than is appropriate for DSM. | ||

the other hand, is unable to recoup its high synchronization overhead. This represents a qualitative shift from the DSM world, where lazier protocols always yield performance improvements. Based on their results, they conclude that machines with flexible hardware | |||

support for coherence should use protocols based on lazy release consistency, but in a less ''aggressively lazy'' form than is appropriate for DSM. | |||

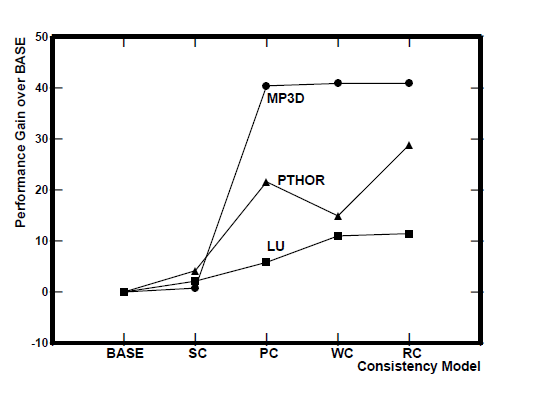

The following graph details the results Kontothanassis, et all. collected from their experiments to compare Lazy and Eager Consistency Models: | |||

<br> | <br> | ||

<center>[[Image:Perf.jpg|250px]]</center> | <center>[[Image:Perf.jpg|250px]]</center> | ||

<br> | <br> | ||

More information about this | More information about this experiment and the results can be obtained from their paper : '''[http://delivery.acm.org.www.lib.ncsu.edu:2048/10.1145/230000/224398/a61-kontothanassis.html?key1=224398&key2=6529911721&coll=ACM&dl=ACM&CFID=140829&CFTOKEN=60032635 Lazy Release Consistency for Hardware-Coherent Multiprocessors]''' | ||

='''Comparison Between Different Models'''= | ='''Comparison Between Different Models'''= | ||

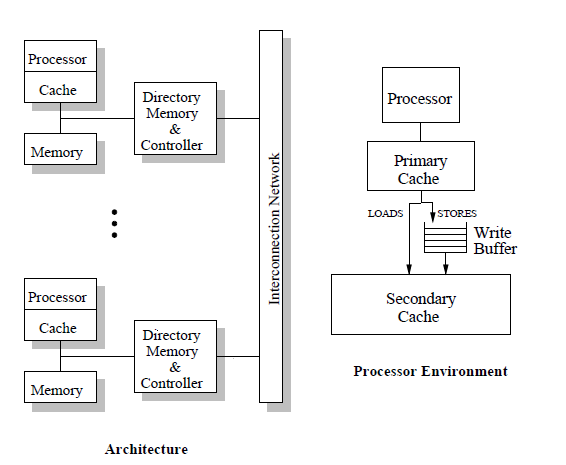

In this section we will consider the | In this section we will consider the comparisons made between the different consistency models in Performance Evaluation of Memory Consistency Models for Shared-Memory Multiprocessors by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy. | ||

The authors chose an architecture that resembles the DASH shared-memory multiprocessor [13], in which the physical memory is distributed among the nodes and cache coherence | |||

the DASH shared-memory multiprocessor [13], | |||

memory is distributed among the nodes and cache coherence | |||

is maintained using a distributed directory-based protocol. For | is maintained using a distributed directory-based protocol. For | ||

each memory block, the directory keeps track of remote nodes | each memory block, the directory keeps track of remote nodes | ||

| Line 342: | Line 378: | ||

remote copies. Acknowledgement messages are used to | remote copies. Acknowledgement messages are used to | ||

inform the originating processing node when an invalidation has | inform the originating processing node when an invalidation has | ||

been completed. | been completed. <br /> | ||

[[Image:archused.png]] | <center>[[Image:archused.png]]</center> | ||

Here performance is defined as the processor utilization achieved in execution. reason for using processor utilization as the figure of merit is that | Here, performance is defined as the processor utilization achieved in execution. The reason for using processor utilization as the figure of merit is that | ||

it provides reasonable results even when the program’s control | it provides reasonable results even when the program’s control | ||

path is not deterministic and depends on relative timing of synchronization | path is not deterministic and depends on relative timing of synchronization | ||

| Line 351: | Line 387: | ||

is normalized to the performance of the BASE model for that | is normalized to the performance of the BASE model for that | ||

program. The results show a wide range of performance gains | program. The results show a wide range of performance gains | ||

due to the less strict models. Moving from BASE to SC, the | due to the usage of less strict models. Moving from BASE to SC, the | ||

gains are minimal. The largest gains in performance arise when | gains are minimal. The largest gains in performance arise when | ||

moving from SC to PC. Surprisingly, WC does worse than PC | moving from SC to PC. Surprisingly, WC does worse than PC | ||

| Line 359: | Line 395: | ||

and 11% for LU. | and 11% for LU. | ||

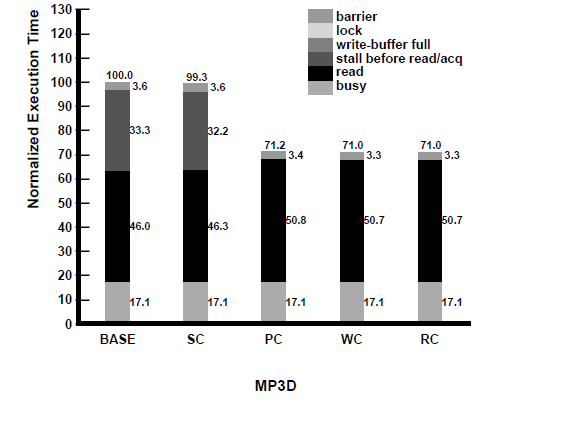

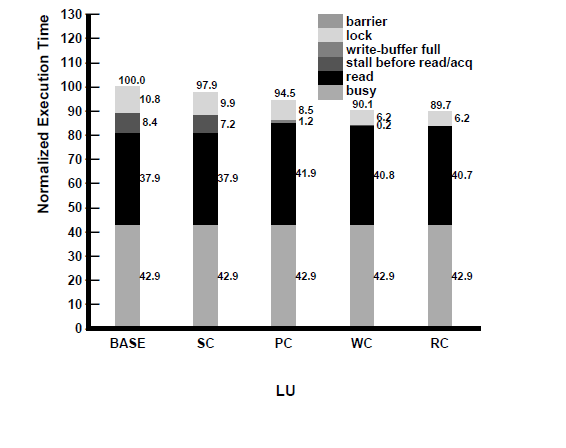

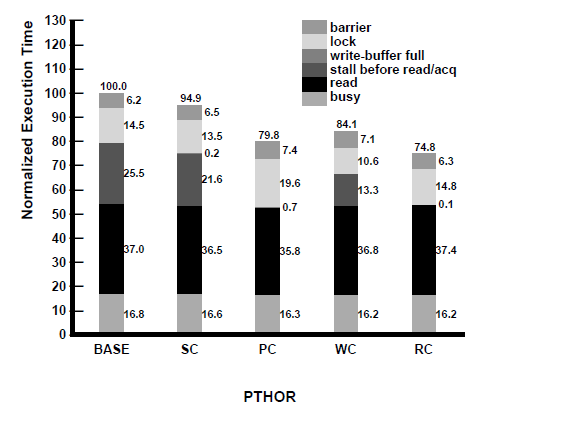

[[Image:perf1.png]] | <center>[[Image:perf1.png]]</center> | ||

To better understand the above results, in | To better understand the above results, in the following figures we present a | ||

breakdown of the execution time for the applications under each | breakdown of the execution time for the applications under each | ||

of the models. The execution time of the models are normalized | of the models. The execution time of the models are normalized | ||

| Line 371: | Line 407: | ||

be stalled waiting for previous writes to perform. This time is | be stalled waiting for previous writes to perform. This time is | ||

represented by the section above it. The three sections on top of | represented by the section above it. The three sections on top of | ||

that represent the stalls due to write buffer being full, time spent | that represent the stalls due to the write buffer being full, time spent | ||

spinning while waiting for acquires to complete, and time spent | spinning while waiting for acquires to complete, and time spent | ||

waiting at a barrier. | waiting at a barrier. Some general observations that can be made from the breakdown | ||

Some general observations that can be made from the breakdown | are: <br /> | ||

are: (i) the latency of read misses forms a large portion of | |||

the idle time, especially once we move to PC, WC, and RC; (ii) | (i) the latency of read misses forms a large portion of | ||

the major reason for BASE and SC to | the idle time, especially once we move to PC, WC, and RC; <br /> | ||

(ii)the major reason for BASE and SC to have worse performance than the other | |||

models is the stalling of the processor before reads (and acquires) | models is the stalling of the processor before reads (and acquires) | ||

for pending writes to complete; (iii) the write buffer being full | for pending writes to complete; <br /> | ||

does not seem to be a factor in hindering the performance of PC; | (iii) the write buffer being full does not seem to be a factor in hindering the performance of PC; | ||

and finally, (iv) the reason for WC performing worse than PC | and finally, <br /> | ||

(iv) the reason for WC performing worse than PC | |||

and RC is the extra processor stalls at acquires and the first read | and RC is the extra processor stalls at acquires and the first read | ||

after release accesses (as described in Section 4). The small variation | after release accesses (as described in Section 4). <br /> | ||

The small variation | |||

in busy times for PTHOR is due to the non-deterministic | in busy times for PTHOR is due to the non-deterministic | ||

behavior of the application for the same input. We now look at | behavior of the application for the same input. We now look at | ||

| Line 392: | Line 432: | ||

In another study Ronald Bos et al. investigated the performance benefits of relaxed consistency models on multiprocessors executing a process network application. They used a trace-driven simulator, developed using SystemC, to model the distributed shared memory system of a | In another study Ronald Bos et al. investigated the performance benefits of relaxed consistency models on multiprocessors executing a process network application. They used a trace-driven simulator, developed using SystemC, to model the distributed shared memory system of a | ||

prototype multiprocessor developed at Philips called Philips '''[http://www.es.ele.tue.nl/epicurus/files/report_jvrijnsen.pdf CAKE]''' (a nonuniform, distributed shared memory multiprocessor prototype). The simulator offers two consistency models: Sequential Consistency (SC) and a generalized Relaxed Consistency (RC) model. Input traces were generated by running a process network application in a cycle-accurate simulator of the prototype multiprocessor. The results showed that relaxed consistency has marginal performance benefits over sequential consistency. The advantage of relaxed memory consistency decreases for increasing network latency. More information about this study can be found in | prototype multiprocessor developed at Philips called Philips '''[http://www.es.ele.tue.nl/epicurus/files/report_jvrijnsen.pdf CAKE]''' (a nonuniform, distributed shared memory multiprocessor prototype). The simulator offers two consistency models: Sequential Consistency (SC) and a generalized Relaxed Consistency (RC) model. Input traces were generated by running a process network application in a cycle-accurate simulator of the prototype multiprocessor. The results showed that relaxed consistency has marginal performance benefits over sequential consistency. The advantage of relaxed memory consistency decreases for increasing network latency. More information about this study can be found in the paper '''[http://ce.et.tudelft.nl/publicationfiles/915_463_bos.pdf Performance Benefits of Relaxed Memory Consistency for Process Network Applications]''' | ||

='''Software based concurrency - C++ as an example'''= | |||

There are three different layers that reorder operations to improve performance while at the same time providing an illusion of sequential execution i.e maintaining program order. They are as follows: <br> | |||

'''1. Compilers''' : Reorder instructions at the software level.<br> | |||

'''2. Processors''': Instruction parallelism and out of order execution or re-ordering <br> | |||

'''3. Cache coherence protocols''': Write propagation and write serialization<br> | |||

In this section, we address how software based concurrency can be built upon the underlying hardware release consistency models to provide cleaner and faster synchronization primitives. Specifically, we use the concurrency model adopted in the latest C++11 standard library. A programming language such as C++ is an abstraction to support different platforms and hardware, which provide different abilities and interfaces according to their architecture. The C++ standard library provides high-level features like locks and mutexes as well as low-level features like atomics to deal with concurrent data accesses. We will not delve into locks and mutexes since it has already been covered in the text. We will describe how "lock-free" programming can be achieved using a low-level feature called atomics in C++. Atomics have lower latency and higher scalability and are the center piece of the new "lock-free" programming paradigm.<br> | |||

Consider the following example<br> | |||

long data; ---> data shared by multiple threads | |||

std::atomic<bool> readyFlag(false); ---> atomic variable instead of a lock | |||

void Thread1() | |||

{ | |||

data = 100; ---> setting the data | |||

readyFlag.store(true) ---> signalling readiness to the consumer (Thread 2) | |||

} | |||

void Thread2() | |||

{ | |||

while(!readyFlag.load()) { ---> wait for readiness | |||

cout <<data; ---> access shared data | |||

} | |||

} | |||

The store() operation in Thread 1 performs a "release" operation on the affected memory block, which ensures that all prior memory operations become visible to other threads before the effect of the store operation. The load() operation performs an "acquire" operation on the affected memory block which ensures that all following memory operations become visible to other threads after the load operation. As a consequence, since the setting of data happens before Thread1 stores true in the readyFlag and the processing of data happens after Thread2 has loaded true as value of the readyFlag, the processing of data is guaranteed to happen after the data was provided by Thread1. In this example, we have shown how synchronization can be achieved using atomics, without the use of expensive locks. | |||

='''Some Shortcomings of Relaxed Models'''= | ='''Some Shortcomings of Relaxed Models'''= | ||

| Line 416: | Line 486: | ||

With all their shortcomings, relaxed models are widely used in many commercial multiprocessor systems, | With all their shortcomings, relaxed models are widely used in many commercial multiprocessor systems, | ||

including systems designed by major computer manufacturers such as Digital Equipment, IBM, and Sun | including systems designed by major computer manufacturers such as Digital Equipment, IBM, and Sun | ||

Microsystems. The wide-spread use of these systems suggests that even though sequential consistency is | Microsystems (now Oracle). The wide-spread use of these systems suggests that even though sequential consistency is | ||

simpler to use for programmers, performance often plays an important role in the ultimate choice made by | simpler to use for programmers, performance often plays an important role in the ultimate choice made by | ||

system designers and programmers. Nevertheless, we would ideally like to provide the extra performance | system designers and programmers. Nevertheless, we would ideally like to provide the extra performance | ||

with as little programming complexity as possible. | with as little programming complexity as possible. | ||

= '''Relaxed Memory Consistency Model Concept Quiz''' = | |||

1. Sequential Consistency requires that | |||

:: a. all memory operations appear to execute at the same time | |||

:: b. all memory operations of a single processor appear to execute in the order described by that processor’s program | |||

:: c. both of the above | |||

:: d. none of the above <br /> | |||

2. The synchronizing model | |||

:: a. Assigns different consistency models to each group | |||

:: b. Assigns the same consistency model to each group | |||

:: c. Doesn’t differentiate between memory accesses | |||

:: d. None of the above <br /> | |||

3. In the Processor Consistency model, which requirement is relaxed? | |||

:: a. Write-after-write | |||

:: b. Read-after-read | |||

:: c. Write-after-read | |||

:: d. Read-after-write <br /> | |||

4. Weak ordering and Release consistency are examples of which model? | |||

:: a. Write-to-Write program order | |||

:: b. Write-to-Read program order | |||

:: c. Read-to-Read and Read-to-Write | |||

:: d. None of the above <br /> | |||

5. Eager Release and Lazy Release are examples of what model? | |||

:: a. Sequential consistency | |||

:: b. Release consistency | |||

:: c. Weak ordering | |||

:: d. Processor consistency <br /> | |||

6. Which of these is the strictest model? | |||

:: a. Sequential consistency | |||

:: b. Release consistency | |||

:: c. Weak ordering | |||

:: d. Processor consistency <br /> | |||

7. Which of these is the least strict model? | |||

:: a. Sequential consistency | |||

:: b. Release consistency | |||

:: c. Weak ordering | |||

:: d. Processor consistency | |||

8. In Release Consistency protocols, the lazier protocol always performs better. | |||

:: a. True | |||

:: b. False | |||

9. Increased programming complexity is a drawback of relaxed models. | |||

:: a. True | |||

:: b. False | |||

10. Partial Store Ordering (PSO) is an example of which model? | |||

:: a. Write-to-write | |||

:: b. Read-to-write and read-to-read | |||

:: c. Write-to-read | |||

:: d. None of the above | |||

Answers: 1. c 2. a 3. d 4. c 5. b 6. a 7. b 8. b 9. a 10. a | |||

='''References'''= | ='''References'''= | ||

# [http://www.cs.rochester.edu/u/sandhya/csc258/seminars/bhardwaj_Consistency_Models.pdf Consistency Models] <br /> | |||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.5.7132&rep=rep1&type=pdf Speculative Sequential Consistency with Little Custom Storage] <br /> | # [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.5.7132&rep=rep1&type=pdf Speculative Sequential Consistency with Little Custom Storage] <br /> | ||

# [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf Two techniques to enhance the performance of memory consistency models] <br /> | # [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.126.3128&rep=rep1&type=pdf Two techniques to enhance the performance of memory consistency models] <br /> | ||

| Line 457: | Line 579: | ||

#http://rsim.cs.illinois.edu/~sadve/JavaWorkshop00/talk.pdf | #http://rsim.cs.illinois.edu/~sadve/JavaWorkshop00/talk.pdf | ||

#[https://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=8&cad=rja&ved=0CGsQFjAH&url=http%3A%2F%2Ffaculty.kfupm.edu.sa%2FCOE%2Fmudawar%2Fcs282%2Flectures%2F09-Consistency.pps&ei=zZpbUaOkFozI9gTqzoDgCg&usg=AFQjCNF_vDcWurneM_hObF2iWZ-rxJKBHw&sig2=f9Qc1zCcwiosxiKKzyNO8Q&bvm=bv.44697112,d.eWU Consistency models by KFPM university ] | #[https://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=8&cad=rja&ved=0CGsQFjAH&url=http%3A%2F%2Ffaculty.kfupm.edu.sa%2FCOE%2Fmudawar%2Fcs282%2Flectures%2F09-Consistency.pps&ei=zZpbUaOkFozI9gTqzoDgCg&usg=AFQjCNF_vDcWurneM_hObF2iWZ-rxJKBHw&sig2=f9Qc1zCcwiosxiKKzyNO8Q&bvm=bv.44697112,d.eWU Consistency models by KFPM university ] | ||

#http://developer.android.com/training/articles/smp.html | |||

#The C++ Standard Library (second edition) by Nicolai Josuttis | |||

Latest revision as of 00:57, 24 April 2014

Memory Consistency models

Introduction

The memory consistency model of a shared-memory multiprocessor provides a formal specification of how the memory system will appear to the programmer, eliminating the gap between the behavior expected by the programmer and the actual behavior supported by a system. In other words, a memory consistency model is a set of rules that govern how memory systems will process memory access operations from multiple processors. In case of a uniprocessor, memory access operations occur in program order, so memory consistency may not be a significant issue. However, in the case of multiprocessor systems, the memory consistency model establishes the requirements for correct operation. These requirements then in turn govern the implementation of system optimizations that can have a direct impact on programming models. By this definition, it can be shown that, in effect, a low level memory consistency model can have a direct impact on algorithm design. Because of this dependency between consistency model and programming algorithm, a thorough understanding of memory consistency models is required while developing programs that run on distributed shared memory systems.

The following wiki chapter provides a brief discussion on the intuition behind using relaxed memory consistency models for scalable design of multiprocessors. This chapter also provides an introduction to the consistency models implemented in real multiprocessor systems such as Digital Alpha, Sparc V9 , IBM Power PC and processors from Sun Microsystems. Memory consistency models used in the Android platform, specifically using the ARM CPU architecture as an example, are also discussed in the following sections.

Sequential Consistency Model (SC)

Introduction to Sequential Consistency Model

In order to write correct and efficient shared memory programs, programmers need a precise notion of shared memory semantics. To ensure correct program execution, a programmer expects that the data value read should be the same as the latest value written to that variable in the system. However in many commercial shared memory systems,the processor may observe an older value, causing unexpected behavior. Intuitively, a read should return the value of the "last" write to the same memory location. In uniprocessors, "last" is precisely defined by the sequential order specified by the program, called program order. However, this is not the case in multiprocessors. A write and read of a particular variable are not related by program order because they originate on two different processors.

The uniprocessors model, however, can be extended to apply to multiprocessors in a natural way. The resulting model is called Sequential consistency. In brief, sequential consistency requires that

- all memory operations appear to execute one at a time, and

- all memory operations of a single processor appear to execute in the order described by that processor's program.