CSC 456 Fall 2013/4b cv: Difference between revisions

No edit summary |

No edit summary |

||

| (15 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

Non-Uniform Memory Access (NUMA) technology has become the optimal solution for more complex systems in terms of the increase of processors. NUMA provides the functionality to distribute memory to each processor, giving each processor local access to its own share, as well as giving each processor the ability to access remote memory located in other processors. NUMA is a very important processor feature and if it is ignored one can expect sub-par application memory performance. | |||

===Background=== | ===Background=== | ||

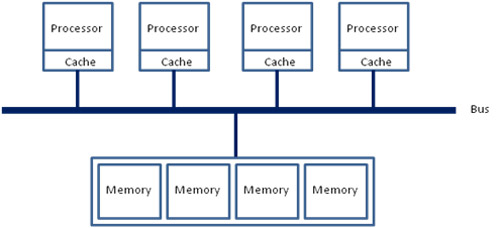

NUMA is often grouped together with Uniform Memory Access (UMA) because the two methods of memory management have similar features. The architecture of UMA (see figure 1.1)<ref name="ott11">David Ott. Optimizing Applications for NUMA. http://software.intel.com/en-us/articles/optimizing-applications-for-numa | |||

{{cite web | |||

| url = http://software.intel.com/en-us/articles/optimizing-applications-for-numa | |||

| title = Optimizing Applications for NUMA | |||

| last1 = Ott | |||

| first1 = David | |||

| location = Intel | |||

| date = November 02, 2011 | |||

| accessdate = November 18, 2013 | |||

| separator = , | |||

}} | |||

</ref>has a bus inbetween the processors/cache and the memory for each processor. NUMA (see figure 1.2)<ref name = "ott11"/> however has a directl connection between the processor/cache and the memory for the processor, the bus is then connected to the memory. The main trade off between UMA and NUMA is related to memory access time. Since the NUMA memory is directly linked to the processor/cache it provides faster access to local data but is slower when accessing remote data<ref name = "ott11"/>. | |||

[[File:UMA.jpg|center]] | |||

<div style="text-align:center">'''Figure 1.1 Diagram of the UMA memory configuration'''</div> | |||

[[File:NUMA.jpg|center]] | |||

<div style="text-align:center">'''Figure 1.2 Diagram of the NUMA memory configuration'''</div> | |||

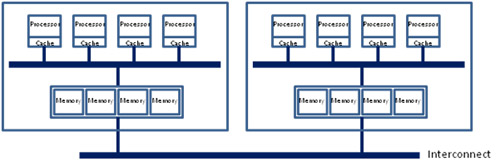

= | The NUMA system memory is managed in a node based model (see figure 1.3)<ref name = "ott11"/>. This design implements a mixture of UMA and NUMA by creating a node at encompasses a NUMA system and connects the nodes so the entire architecture resembles the UMA system. | ||

[[File:UMA&NUMA.jpg|center]] | |||

<div style="text-align:center">'''Figure 1.3 Diagram of the mixture of the UMA and NUMA memory configurations'''</div> | |||

====Issues with NUMA==== | |||

As previously stated one of the advantages from using the NUMA system is the fast local memory access due to the location of the memory in relation to the processor/cache. This is also a disadvantage, when comparing NUMA to UMA, when a thread tries to access memory not located locally. NUMA is slower and less efficient about obtaining the non-localized data from memory but this can be solved in two different ways<ref name = "ott11"/>. | |||

The first method of improving the performance of NUMA is using processor affinity<ref name ="ott11"/>. Processor affinity can be used when multiple threads are running on different processors. In order to reduce the amount of overhead from running these different threads processor affinity is used to manage them. Processor affinity is the practice of assigning threads that correspond with a certain application to certain cores because of the memory they are locally storing<ref name = "ott11"/>. This is designed the decrease the amount of memory requests that are not within the local memory. However this practice can also cause issues because it does not balance the work evenly so the resources can be under or over utilized<ref name ="ott11"/>. The second way of improving the performance of NUMA memory management is by using different page allocation strategies to utilize the local memory in more efficient ways. | |||

===Different Strategies=== | ===Different Strategies=== | ||

Page Allocation strategies are broken down to three categories when concerning with NUMA: | Page Allocation strategies are broken down to three categories when concerning with NUMA:<ref name="fasdf">http://www.sciencedirect.com/science/article/pii/074373159190117R</ref> | ||

* Fetch - determining which page to be brought to main memory | * Fetch - determining which page to be brought to main memory | ||

** demand fetching | ** demand fetching | ||

| Line 26: | Line 50: | ||

===Page Allocation Support in OpenMP=== | ===Page Allocation Support in OpenMP=== | ||

* has directives for allocating blocks a certain way | * has directives for allocating blocks a certain way (concerning NUMA) | ||

** !dec$ migrate_next_touch(v1,...,v2) - migrates selected pages to referencing thread for easy access | ** <code>!dec$ migrate_next_touch(v1,...,v2)</code> - migrates selected pages to referencing thread for easy access<ref name="craig00">http://www.sc2000.org/techpapr/papers/pap.pap226.pdf</ref> | ||

<ref name=" | ** <code>!dec$ memories</code> - interprets memories of a machine to be seen as an array | ||

** <code>!dec$ template</code> - defines virtual array | |||

===References=== | |||

<ref name="lee96">JongWoo Lee and Yookun Cho. An Effective Shared Memory Allocator for Reducing False Sharing in NUMA Processors. https://parasol.tamu.edu/~rwerger/Courses/689/spring2002/day-3-ParMemAlloc/papers/lee96effective.pdf | |||

{{cite web | {{cite web | ||

| url = | | url = https://parasol.tamu.edu/~rwerger/Courses/689/spring2002/day-3-ParMemAlloc/papers/lee96effective.pdf | ||

| title = | | title = An Effective Shared Memory Allocator for Reducing False Sharing in NUMA Processors | ||

| last1 = | | last1 = Lee | ||

| first1 = | | first1 = JongWoo | ||

| last2 = Cho | |||

| last2 = | | first2 = Yookun | ||

| first2 = | | location = Korea | ||

| date = 1996 | |||

| location = | | accessdate = November 19, 2013 | ||

| date = | |||

| accessdate = | |||

| separator = , | | separator = , | ||

}} | }} | ||

</ref> | </ref> | ||

<references/> | |||

Latest revision as of 00:23, 16 December 2013

Non-Uniform Memory Access (NUMA) has become the common solution for systems with many processors, because a single shared memory becomes a bottleneck as the processor count grows. NUMA distributes memory among the processors: each processor has local access to its own share and can also access the remote memory attached to other processors. Because local and remote accesses differ in cost, NUMA is an important processor feature, and applications that ignore it can expect sub-par memory performance.

Background

NUMA is often grouped together with Uniform Memory Access (UMA) because the two methods of memory management have similar features. In UMA (see figure 1.1)<ref name="ott11">David Ott. Optimizing Applications for NUMA. Intel, November 2, 2011. http://software.intel.com/en-us/articles/optimizing-applications-for-numa

</ref>, a bus sits between the processors/caches and memory, so every processor reaches memory the same way. In NUMA (see figure 1.2)<ref name = "ott11"/>, each processor/cache is connected directly to its own memory, and the bus then connects these memories together. The main trade-off between UMA and NUMA is therefore memory access time: because NUMA memory is directly linked to the processor/cache, local data is accessed faster, but remote data is accessed more slowly<ref name = "ott11"/>.

Figure 1.1 Diagram of the UMA memory configuration

Figure 1.2 Diagram of the NUMA memory configuration
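To make the trade-off concrete, the average memory access time can be modeled as a weighted mix of local and remote latencies. This is a minimal sketch; the 100 ns and 160 ns figures below are hypothetical placeholders, not measurements from the cited article.

```python
def average_access_time(local_fraction: float, local_ns: float, remote_ns: float) -> float:
    """Expected latency per access when `local_fraction` of accesses hit local memory.

    The latency values are assumptions supplied by the caller.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    # Local hits cost local_ns; every other access pays the remote latency.
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


if __name__ == "__main__":
    # Hypothetical latencies: 100 ns local, 160 ns remote.
    for f in (1.0, 0.9, 0.5):
        print(f"local fraction {f:.1f}: {average_access_time(f, 100, 160):.1f} ns")
```

With these assumed numbers, dropping from 90% to 50% local accesses raises the average from 106 ns to 130 ns, which is why keeping data local matters.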

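NUMA system memory is managed in a node-based model (see figure 1.3)<ref name = "ott11"/>. This design mixes UMA and NUMA. Each node groups processors with a local memory, and the nodes are connected to one another. Within a node, memory is shared much as in a UMA system, while memory on other nodes is reached across the connection between nodes, as in NUMA.

Figure 1.3 Diagram of the mixture of the UMA and NUMA memory configurations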
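The node-based model can be sketched as a table mapping each CPU to its node. An access is local when the CPU and the page's home share a node, and remote otherwise. The two-node, four-CPU layout and the helper names below are hypothetical, chosen only for illustration.

```python
from collections import Counter

# Hypothetical topology: node id -> CPUs that belong to that node.
TOPOLOGY = {0: {0, 1}, 1: {2, 3}}


def node_of_cpu(cpu: int, topology: dict[int, set[int]] = TOPOLOGY) -> int:
    """Return the node that contains the given CPU."""
    for node, cpus in topology.items():
        if cpu in cpus:
            return node
    raise ValueError(f"CPU {cpu} is not in the topology")


def classify_accesses(trace, page_home, topology=TOPOLOGY) -> Counter:
    """Count local vs. remote accesses.

    trace: iterable of (cpu, page) pairs.
    page_home: dict mapping each page to the node whose memory holds it.
    """
    counts = Counter()
    for cpu, page in trace:
        same_node = node_of_cpu(cpu, topology) == page_home[page]
        counts["local" if same_node else "remote"] += 1
    return counts


if __name__ == "__main__":
    homes = {"A": 0, "B": 1}
    trace = [(0, "A"), (1, "A"), (2, "A"), (3, "B")]
    print(classify_accesses(trace, homes))  # Counter({'local': 3, 'remote': 1})
```

CPUs 0 and 1 share node 0, so both reach page A locally (the UMA-like part). CPU 2 must cross to node 0 for the same page (the NUMA part).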

Issues with NUMA

As stated above, one advantage of NUMA is fast local memory access, which comes from placing the memory next to the processor/cache. The same placement is a disadvantage compared with UMA when a thread accesses memory that is not local. In that case, NUMA is slower and less efficient at fetching the non-local data. This cost can be reduced in two ways<ref name = "ott11"/>.

The first way to improve NUMA performance is processor affinity<ref name ="ott11"/>. It applies when an application runs multiple threads on different processors. Processor affinity is the practice of assigning an application's threads to particular cores because of the memory that is local to those cores<ref name = "ott11"/>. Keeping each thread near its data is designed to decrease the number of memory requests that fall outside local memory. However, this practice can also cause problems because it does not balance work evenly, so some resources may be over-utilized while others are under-utilized<ref name ="ott11"/>. The second way to improve NUMA memory performance is to use page allocation strategies that make more efficient use of local memory.
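Processor affinity can be tried from Python on Linux with `os.sched_getaffinity` and `os.sched_setaffinity`, which read and set the CPU mask of a process (pid 0 means the caller). This is a minimal sketch, not the method of the cited article. It is Linux-only, and the helper name `pin_to_cpu` is hypothetical.

```python
import os


def pin_to_cpu(cpu: int) -> set[int]:
    """Restrict the calling process (pid 0) to a single CPU. Linux only.

    Returns the previous affinity mask so the caller can restore it.
    """
    previous = os.sched_getaffinity(0)
    if cpu not in previous:
        raise ValueError(f"CPU {cpu} is not in the allowed set {sorted(previous)}")
    os.sched_setaffinity(0, {cpu})
    return previous


if __name__ == "__main__":
    allowed = os.sched_getaffinity(0)
    target = min(allowed)              # any CPU we are currently allowed to use
    old_mask = pin_to_cpu(target)
    print("now restricted to", os.sched_getaffinity(0))
    os.sched_setaffinity(0, old_mask)  # undo the pinning
```

On Linux, the default memory policy usually places a page on the node of the CPU that first touches it. Pinning a thread before it initializes its data therefore tends to keep that data local, at the cost of the load-balancing problem noted above.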

Different Strategies

Page allocation strategies for NUMA fall into three categories:<ref name="fasdf">http://www.sciencedirect.com/science/article/pii/074373159190117R</ref>

- Fetch - deciding which page to bring into main memory

  - demand fetching

  - prefetching

- Placement - deciding where (in which node's memory) to hold the page

  - Fixed-Node

  - Preferred-Node

  - Random-Node

- Replacement - deciding which page to remove to make room for new pages

  - Per-Task

  - Per-Computation

  - Global

Commonly used page placement policies include:

- first touch - allocates the page frame on the node that incurs the page fault, i.e. the node where the processor that first accesses the page resides.

- round robin - allocates pages on the memory nodes in turn, so consecutive pages land on different nodes and accesses are spread across all of them.

- random - allocates each page on a randomly chosen memory node.
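The first touch, round robin, and random placement policies listed above can be compared with a small model. Each function returns a mapping from page to home node. This is a simplified sketch with hypothetical function names. A real operating system makes these decisions per page fault, not over a precomputed list.

```python
import random


def first_touch(touches):
    """First-touch placement.

    touches: list of (node, page) pairs in program order, where `node` is the
    node of the processor making the access. The first access decides the home.
    """
    placement = {}
    for node, page in touches:
        placement.setdefault(page, node)  # later touches do not move the page
    return placement


def round_robin(pages, num_nodes):
    """Round-robin placement: pages go to nodes 0, 1, ..., num_nodes-1, 0, 1, ..."""
    return {page: i % num_nodes for i, page in enumerate(pages)}


def random_placement(pages, num_nodes, seed=None):
    """Random placement: each page goes to a uniformly chosen node."""
    rng = random.Random(seed)  # seeded generator so runs can be reproduced
    return {page: rng.randrange(num_nodes) for page in pages}


if __name__ == "__main__":
    # Hypothetical trace: a thread on node 1 touches p0 before node 0 reads it.
    trace = [(1, "p0"), (0, "p1"), (0, "p0"), (1, "p2")]
    print(first_touch(trace))                  # {'p0': 1, 'p1': 0, 'p2': 1}
    print(round_robin(["p0", "p1", "p2"], 2))  # {'p0': 0, 'p1': 1, 'p2': 0}
    print(random_placement(["p0", "p1", "p2"], 2, seed=42))
```

First touch rewards programs whose threads initialize the data they later use. Round robin and random give up that locality in exchange for spreading memory traffic evenly across the nodes.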

Page Allocation Support in OpenMP

- NUMA-oriented extensions to OpenMP provide directives that control how data is allocated across the memories of the machine:

  - `!dec$ migrate_next_touch(v1,...,v2)` - marks the pages holding the listed variables so that each page migrates to the next thread that references it, giving that thread local access<ref name="craig00">http://www.sc2000.org/techpapr/papers/pap.pap226.pdf</ref>

  - `!dec$ memories` - declares the memories of the machine as an array

  - `!dec$ template` - defines a virtual array
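The behavior described for `migrate_next_touch` can be modeled in a few lines. Pages are flagged, and the next access to a flagged page moves its home to the accessing thread's node; the flag is then cleared. This is a hypothetical Python model of that description, not the Fortran directive's actual implementation. `PageTable` and its methods are invented for illustration.

```python
class PageTable:
    """Toy model of page homes with a migrate-on-next-touch flag (hypothetical)."""

    def __init__(self, homes):
        self.homes = dict(homes)  # page -> node whose memory currently holds it
        self.pending = set()      # pages marked for migration on their next touch

    def migrate_next_touch(self, *pages):
        # Only mark the pages; nothing moves until a thread references them.
        self.pending.update(pages)

    def touch(self, node, page):
        """Access `page` from a thread on `node`; return True if the access is local."""
        if page in self.pending:
            self.homes[page] = node   # move the page to the referencing thread's node
            self.pending.discard(page)
        return self.homes[page] == node


if __name__ == "__main__":
    pt = PageTable({"v1": 0, "v2": 0})
    pt.migrate_next_touch("v1", "v2")
    print(pt.touch(1, "v1"))  # True: v1 migrated to node 1 on this touch
    print(pt.touch(0, "v1"))  # False: the flag was cleared, so v1 stays on node 1
```

Because the migration happens only once per marking, a later access from another node does not pull the page back. That avoids pages bouncing between nodes.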

References

<ref name="lee96">JongWoo Lee and Yookun Cho. An Effective Shared Memory Allocator for Reducing False Sharing in NUMA Processors. 1996. https://parasol.tamu.edu/~rwerger/Courses/689/spring2002/day-3-ParMemAlloc/papers/lee96effective.pdf

</ref>

<references/>