CSC/ECE 517 Fall 2013/ch1 1w46 ka


'''Data Mining in Rails Application'''

This wiki discusses how to implement data mining tasks in a Rails application. Data mining is the automatic or semi-automatic analysis of large quantities of data to extract previously unknown, interesting patterns such as groups of data records, unusual records and dependencies.

== Introduction to Data Mining in Rails Application ==

Data mining <ref name="mining">Usama Fayyad, Gregory Piatetsky-Shapiro and Padhraic Smyth. (2008, Dec 17). ''From Data Mining to Knowledge Discovery in Databases.'' Retrieved from http://www.kdnuggets.com/gpspubs/aimag-kdd-overview-1996-Fayyad.pdf</ref> (the analysis step of the "Knowledge Discovery in Databases" process, or KDD) is the computational process of discovering patterns in large data sets using methods at the intersection of artificial intelligence, machine learning, statistics and database systems. The overall goal of data mining is to extract information from a data set and transform it into an understandable structure for further use.

Ruby on Rails, often simply called Rails, is an open source web application framework written in the Ruby programming language. Data mining techniques such as <code>[http://en.wikipedia.org/wiki/K-means_clustering K-means clustering]</code> can be implemented in a Rails application with the help of the many gems available for Ruby.

This makes it easier for a data mining analyst to write mining code in a Ruby on Rails application.
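To make the technique concrete, the following is a minimal pure-Ruby sketch of K-means using Lloyd's algorithm: assign every point to its nearest centroid, move each centroid to the mean of its points, and repeat until the assignments stop changing. It needs no gem; the method names, the use of the first k points as starting centroids and the iteration cap are simplifying assumptions, not the behaviour of any particular library.

```ruby
# Minimal K-means (Lloyd's algorithm) over numeric points given as arrays.
# Assumption: the first k points serve as initial centroids (libraries
# usually choose them at random or with a smarter seeding scheme).

def squared_distance(a, b)
  a.zip(b).sum { |x, y| (x - y)**2 }
end

def nearest(point, centroids)
  (0...centroids.size).min_by { |j| squared_distance(point, centroids[j]) }
end

def kmeans(points, k, max_iterations: 100)
  centroids = points.first(k).map(&:dup)
  assignments = []
  max_iterations.times do
    # Assignment step: index of the closest centroid for every point.
    new_assignments = points.map { |p| nearest(p, centroids) }
    break if new_assignments == assignments   # converged
    assignments = new_assignments

    # Update step: move each centroid to the mean of its members.
    centroids = centroids.each_index.map do |j|
      members = points.select.with_index { |_, i| assignments[i] == j }
      next centroids[j] if members.empty?      # keep an empty cluster's centroid
      members.transpose.map { |values| values.sum.to_f / members.size }
    end
  end
  [centroids, assignments]
end

points = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 8.5], [1.2, 0.8], [8.5, 9.0]]
centroids, labels = kmeans(points, 2)
p labels   # => [0, 0, 1, 1, 0, 1]
```

Production implementations such as WEKA's SimpleKMeans, used later on this page, add random initialization and support for nominal attributes on top of this basic loop.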

==Weka and Ruby==

<code>[http://www.cs.waikato.ac.nz/ml/weka/ Weka]</code> is a collection of <code>[http://en.wikipedia.org/wiki/Machine_learning machine learning]</code> algorithms for data mining tasks. The algorithms can either be applied directly to a dataset or called from your own code. Weka contains tools for data pre-processing, classification, regression, clustering, association rules, and visualization.[http://www.ibm.com/developerworks/library/ba-data-mining-techniques/] It is also well suited for developing new machine learning schemes. Weka is written in Java, but its libraries can also be used from Ruby. One route is the Ruby Java Bridge, <code>[http://rubygems.org/gems/rjb Rjb]</code>, which requires a Java installation and the Weka library; another is <code>[https://github.com/jruby/jruby/wiki/AboutJRuby JRuby]</code>, a Ruby implementation that runs on the Java virtual machine and can call Java classes directly. The example below uses JRuby: <ref name="wekaruby">Peter Lane. (2009, Aug 15). ''Accessing Weka with JRuby.'' Retrieved from http://rubyforscientificresearch.blogspot.com/2009/08/accessing-weka-from-jruby.html</ref>

===Clustering Data using WEKA from JRuby===

JRuby provides easy access to Java classes and methods, and WEKA is no exception. The following program builds a simple k-means clustering of a supplied input file and then prints the cluster assigned to each data instance. The import statements (include_class in the original script; java_import in current JRuby releases) simplify references to classes in the API. When clustering each instance, we must watch for the exception thrown if no cluster can be assigned. Finally, note that the filename is passed as a command-line parameter: the parameters after the name of the JRuby program are collected into ARGV, as usual in Ruby. Assuming weka.jar, jruby.jar and your program are in the same folder, the script looks like this:

```ruby
# Weka scripting from JRuby
# Usage (hypothetical file name): jruby -I . kmeans.rb weather.arff
require "java"
require "weka"   # weka.jar, found on the load path

java_import "java.io.FileReader"
java_import "weka.clusterers.SimpleKMeans"
java_import "weka.core.Instances"

# load the data file named on the command line (ARFF format)
file = FileReader.new(ARGV[0])
data = Instances.new(file)
file.close

# create the model
kmeans = SimpleKMeans.new
kmeans.buildClusterer(data)

# print out the built model
puts kmeans

# display the cluster for each instance
data.numInstances.times do |i|
  cluster = "UNKNOWN"
  begin
    cluster = kmeans.clusterInstance(data.instance(i))
  rescue java.lang.Exception
    # keep "UNKNOWN" if WEKA cannot assign a cluster
  end
  puts "#{data.instance(i)},#{cluster}"
end
```

The WEKA API makes it easy to pass in a data file. WEKA reads several formats, including <code>[http://www.cs.waikato.ac.nz/ml/weka/arff.html ARFF]</code> and <code>[http://docs.python.org/2/library/csv.html CSV]</code>, although the Instances(Reader) constructor used above expects ARFF; CSV files are read through a converter class such as weka.core.converters.CSVLoader. When run on the weather.arff example (in WEKA's data folder), the script prints output like the following:

```
Number of iterations: 3
Within cluster sum of squared errors: 16.237456311387238
Missing values globally replaced with mean/mode

Cluster centroids:
                                        Cluster#
Attribute        Full Data          0          1
                      (14)        (9)        (5)
outlook              sunny      sunny   overcast
temperature        73.5714    75.8889       69.4
humidity           81.6429    84.1111       77.2
windy                FALSE      FALSE       TRUE
play                   yes        yes        yes
```
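The "Full Data" column is simply the mean of each numeric attribute and the mode (most frequent value) of each nominal attribute over all 14 instances. The pure-Ruby sketch below recomputes it. The rows are the weather data as we understand them to appear in WEKA's weather.arff; their temperature and humidity means reproduce the 73.5714 and 81.6429 shown above, but check them against your own copy. This sketch breaks ties in the mode in favour of the value declared first, which also yields sunny for outlook, where sunny and rainy each occur five times.

```ruby
# Recompute the "Full Data" centroid column: the mean of each numeric
# attribute and the mode of each nominal one (ties go to the first declared value).

ATTRIBUTES = [
  [:outlook,     %w[sunny overcast rainy]],
  [:temperature, :numeric],
  [:humidity,    :numeric],
  [:windy,       %w[TRUE FALSE]],
  [:play,        %w[yes no]]
].freeze

ROWS = [
  ["sunny",    85, 85, "FALSE", "no"],  ["sunny",    80, 90, "TRUE",  "no"],
  ["overcast", 83, 86, "FALSE", "yes"], ["rainy",    70, 96, "FALSE", "yes"],
  ["rainy",    68, 80, "FALSE", "yes"], ["rainy",    65, 70, "TRUE",  "no"],
  ["overcast", 64, 65, "TRUE",  "yes"], ["sunny",    72, 95, "FALSE", "no"],
  ["sunny",    69, 70, "FALSE", "yes"], ["rainy",    75, 80, "FALSE", "yes"],
  ["sunny",    75, 70, "TRUE",  "yes"], ["overcast", 72, 90, "TRUE",  "yes"],
  ["overcast", 81, 75, "FALSE", "yes"], ["rainy",    71, 91, "TRUE",  "no"]
].freeze

def centroid(rows, attributes)
  attributes.each_with_index.map do |(name, kind), col|
    column = rows.map { |row| row[col] }
    value =
      if kind == :numeric
        (column.sum.to_f / column.size).round(4)
      else
        counts = column.each_with_object(Hash.new(0)) { |v, h| h[v] += 1 }
        # walk the declared values in order; strict > keeps the earlier one on a tie
        kind.reduce { |best, v| counts[v] > counts[best] ? v : best }
      end
    [name, value]
  end.to_h
end

p centroid(ROWS, ATTRIBUTES)
```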

===Advantages of using Weka from JRuby===

One advantage of using a language like JRuby to talk to WEKA is greater control over how our data is constructed and passed to the machine learning algorithms. A good starting point is constructing our own set of instances rather than reading them from a file. WEKA's construction of a set of instances has some quirks. In particular, each attribute must be defined through an instance of the Attribute class, which gives the attribute a name and, for a nominal attribute, also holds a vector of its nominal values. Each instance can then be constructed and added to the growing set of instances.
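The same bookkeeping can be shown without WEKA. In the pure-Ruby sketch below, the Attribute struct and the build_instance helper are hypothetical stand-ins for WEKA's classes: each attribute has a name and, if it is nominal, a list of allowed values, and each instance stores a nominal value as its index in that list (WEKA likewise stores nominal values internally as numeric indices). In JRuby you would create weka.core.Attribute and weka.core.Instances objects instead; this sketch only mirrors the idea.

```ruby
# Pure-Ruby analogue of building a WEKA-style dataset by hand.
# Attribute and build_instance are hypothetical stand-ins, not WEKA classes.

Attribute = Struct.new(:name, :nominal_values) do
  def nominal?
    !nominal_values.nil?
  end

  # Encode a raw value as a number: nominal values become their index in
  # the declared list, numeric values are converted to Float.
  def encode(raw)
    return Float(raw) unless nominal?
    index = nominal_values.index(raw)
    raise ArgumentError, "#{raw.inspect} is not a value of #{name}" if index.nil?
    index.to_f
  end
end

def build_instance(attributes, raw_values)
  attributes.zip(raw_values).map { |attribute, raw| attribute.encode(raw) }
end

attributes = [
  Attribute.new("outlook", %w[sunny overcast rainy]),
  Attribute.new("temperature"),              # numeric: no nominal values
  Attribute.new("play", %w[yes no])
]

dataset = []                                  # the growing set of instances
dataset << build_instance(attributes, ["overcast", 83, "yes"])
dataset << build_instance(attributes, ["rainy", 70, "no"])
p dataset   # => [[1.0, 83.0, 0.0], [2.0, 70.0, 1.0]]
```

Rejecting unknown nominal values at construction time mirrors why WEKA insists that every nominal attribute declare its values up front.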

==Popular gems for Data Mining==

Data mining involves processing large amounts of data, and several gems exist to simplify this work in a Rails application. Popular examples include RVol, Rbbt and Ruby Band, which are described below.

===RVol===

RVol is a popular gem for building a database for data mining of stock markets, focusing on <code>[http://www.spindices.com/indices/equity/sp-500 S&P 500]</code> data. It lets investors study market volatility using free data from the internet. The resulting database can be used from <code>[https://www.stat.auckland.ac.nz/~ihaka/downloads/Interface98.pdf R]</code>, <code>[http://www.altiusdirectory.com/Computers/matlab-programming-language.php Matlab]</code> or similar tools for quantitative analysis. You can also script new data mining functions that extend the RVol library, load the data into a statistics package, or use it with your own quantitative framework.

Running <code>rvol -s</code> creates the database. It contains stocks (with their industries), options, implied volatilities and the standard deviations calculated for the day. Earnings dates are downloaded and listed together with the implied volatilities of front-month and back-month options. Several reports can be generated once the database has been downloaded; see <code>rvol -p</code>. RVol can also calculate correlations between stocks in the same industry group (<code>--correlationAll</code>, or <code>--correlation10</code> for a 10-day correlation); this takes a long time, and JRuby is recommended to make better use of system resources.

RVol downloads option chains, calculates their implied volatilities, and produces top-10 style lists of potential investment opportunities. Put/call ratios are calculated, and the total number of puts or calls for a particular company is available; these figures can be used to gauge market sentiment. Filters can find stocks or options with high volatility, or stocks with high option volume for the day, which can indicate forthcoming events. <ref name="rvol">Toni Karhu. (2012, Aug 18). ''The Ruby ToolBox: RVol.'' Retrieved from https://www.ruby-toolbox.com/projects/rvol</ref>
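Two of the figures mentioned above are easy to state precisely. The sketch below computes a Pearson correlation over the last 10 observations of two price series and a put/call ratio from option volumes. It is plain Ruby with made-up numbers, not RVol's code; whether RVol correlates prices or returns, and whether its ratio uses volume or open interest, are assumptions this page cannot confirm.

```ruby
# Pearson correlation over a trailing window, plus a put/call volume ratio.
# Illustrative only; not RVol's implementation.

def pearson(xs, ys)
  raise ArgumentError, "series must have equal length" unless xs.size == ys.size
  n = xs.size.to_f
  mean_x = xs.sum / n
  mean_y = ys.sum / n
  cov   = xs.zip(ys).sum { |x, y| (x - mean_x) * (y - mean_y) }
  var_x = xs.sum { |x| (x - mean_x)**2 }
  var_y = ys.sum { |y| (y - mean_y)**2 }
  cov / Math.sqrt(var_x * var_y)
end

# Correlation of the last `days` observations (e.g. a 10-day correlation).
def trailing_correlation(xs, ys, days = 10)
  pearson(xs.last(days), ys.last(days))
end

# Put/call ratio: total put volume divided by total call volume.
def put_call_ratio(put_volumes, call_volumes)
  put_volumes.sum.to_f / call_volumes.sum
end

prices_a = [10.0, 10.5, 10.2, 10.8, 11.0, 11.3, 11.1, 11.6, 11.9, 12.0, 12.4]
prices_b = prices_a.map { |v| 2 * v + 1 }                # moves in lockstep with prices_a
puts trailing_correlation(prices_a, prices_b).round(6)   # 1.0
puts put_call_ratio([1200, 800], [1500, 1000])           # 0.8
```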

====Installation of RVol====

RVol is distributed as a Ruby gem. It can be installed from the command line as shown below, or added to the Gemfile of a Rails application. Version 0.6.4 was the latest release by its author, Toni Karhu, when this page was written.

```shell
gem install rvol -v 0.6.4
```
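In a Rails application, the Gemfile entry pinning the same version would look like this, followed by running bundle install:

```ruby
# Gemfile
gem 'rvol', '0.6.4'
```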

====Example: Use of RVol to Generate Stock Market Databases====

RVol is used to populate databases for mining stock market data. The snippet below, which appears to come from the setup of RVol's tests, configures an in-memory SQLite database with DataMapper before the test database is generated from internet data. <ref name="rvolEg">Toni Karhu. (2012, Aug 19). ''A ruby gem for downloading and processing options chains and financial data.'' Retrieved from https://github.com/tonik/rvol</ref>

```ruby
require 'Rvol'
require 'test/unit'
require 'dm-core'
require 'dm-migrations'
require 'model/stock'
require 'model/chain'
require 'model/earning'
require 'model/stockcorrelation'

# set the test db to an in-memory SQLite db
DataMapper::Logger.new($stdout, :debug)
puts File.dirname(__FILE__)
DataMapper.setup(:default, "sqlite::memory:")
DataMapper.finalize        # finalize the model definitions
DataMapper.auto_migrate!   # create the tables (destroys any existing data)

# GENERATE THE TEST DATABASE FROM INTERNET DATA
puts 'GENERATING TEST DATABASE AND TESTING SCRAPERS'
# (the download and scraper calls are not shown in the original snippet)
```

===Rbbt===

Rbbt stands for Ruby Bio-Text. It started as an API for <code>[http://people.ischool.berkeley.edu/~hearst/text-mining.html text mining]</code> developed for <code>[http://sent.dacya.ucm.es/ SENT]</code>, but its functionality has been used in other applications as well, such as <code>[http://marq.dacya.ucm.es/ MARQ]</code>. Rbbt covers several areas of functionality: some work right away, while others require installing additional dependencies or downloading and processing data from the internet. Since most users will not need every feature, the gem's declared dependencies include only the basic requirements, so a missing dependency may only surface when you start using a new part of the API. <ref name="rbbt">Miguel Vazquez. (2013, June 10). ''Rbbt.'' Retrieved from http://rubydoc.info/gems/rbbt/frames</ref>

====Installation of Rbbt====

Install the gem as usual with <code>gem install rbbt</code>. The gem includes a configuration tool, <code>rbbt_config</code>. The first time you run it, it asks you to configure some paths; after that, you can use it to process the data needed for different tasks.

====Example: Use of Rbbt to translate identifiers====

1. Run <code>rbbt_config prepare identifiers</code> to deploy the configuration files and set up the gene identifier data. This needs to be done only once.

2. Run <code>rbbt_config install organisms</code> to process the data for all organisms, or restrict the installation to a particular organism such as yeast.

3. You can then use a script like the following to translate yeast gene identifiers read from standard input.

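```ruby
require 'rbbt/sources/organism'

# build an identifier index for yeast ('Sce', Saccharomyces cerevisiae)
index = Organism.id_index('Sce', :native => 'Enter Gene Id')

# read one identifier per line from standard input and print its translation
STDIN.each_line do |line|
  id = line.chomp
  puts "#{id} => #{index[id]}"
end
```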
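Conceptually, an identifier index of this kind is a lookup table from every known synonym of a gene to one chosen ("native") identifier. The pure-Ruby sketch below illustrates that idea with a hypothetical in-memory table; Rbbt builds its index from the organism files it downloads, and the identifiers and column names here are invented placeholders.

```ruby
# Hypothetical identifier-translation index: every identifier in a row maps
# to that row's native identifier. The rows below are invented placeholders.

def build_id_index(rows, native:)
  rows.each_with_object({}) do |row, index|
    target = row.fetch(native)
    row.each_value { |id| index[id] = target }
  end
end

table = [
  { "systematic" => "GENE_A1", "symbol" => "abc1", "numeric_id" => "1001" },
  { "systematic" => "GENE_B2", "symbol" => "xyz2", "numeric_id" => "1002" }
]
index = build_id_index(table, native: "numeric_id")

%w[abc1 GENE_B2 unknown].each do |id|
  puts "#{id} => #{index[id]}"   # unknown identifiers translate to an empty string
end
```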

===Ruby Band===

Ruby Band is a gem that makes selected Java libraries for data mining and machine learning available to JRuby users. It offers a collection of data preprocessing and modeling techniques and supports several standard data mining tasks: data pre-processing (filtering), clustering, classification, regression and <code>[http://jmlr.org/papers/v3/guyon03a.html feature selection]</code>. <ref name="rubyband">Alberto Arrigoni. (2013, Sept 24). ''Data Mining in JRuby with Ruby Band.'' Retrieved from http://sciruby.com/blog/2013/09/24/gsoc-2013-data-mining-in-jruby-with-ruby-band</ref>

====Installation of Ruby Band====

Install the jbundler and bundler gems for JRuby before installing the ruby-band gem.

If you want to use the ruby-band APIs without installing the gem, run <code>rake -T</code> once before requiring ruby-band in your script; this lets jbundler download the required .jar files and set the Java classpath. Otherwise, install the gem with:

```shell
gem install ruby-band
```

====Example: Use of Ruby Band for Data Parsing====

One central data type of ruby-band is derived from its Weka counterpart, the class weka.core.Instances. Instantiating this class gives a matrix-like structure that stores an entire dataset. Helper methods convert Instances objects to other data types (e.g. Apache Commons Math matrices) and back. There are several ways to import data into ruby-band; the most basic is to parse an ARFF or CSV file. <ref name="rubybandGit">Alberto Arrigoni. (2013, Sept 23). ''Ruby-Band Examples.'' Retrieved from https://github.com/arrigonialberto86/ruby-band</ref>

An external Weka ARFF or CSV file can be parsed as follows:

```ruby
require 'ruby-band'

# parse an ARFF file or a CSV file into a dataset (file names are placeholders)
arff_dataset = Core::Parser.parse_ARFF('my_file.arff')
csv_dataset  = Core::Parser.parse_CSV('my_file.csv')
```
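To show what such a parser has to handle, here is a deliberately simplified pure-Ruby ARFF reader. It is not ruby-band's parser, and parse_simple_arff is a hypothetical name: it assumes a dense @data section, no quoted values, no sparse rows, and comments only at the start of a line.

```ruby
# Simplified ARFF reader: returns attribute names/types and the data rows.
# Handles @attribute (numeric, {nominal,list} or other) and dense @data.

def parse_simple_arff(text)
  attributes = []
  rows = []
  in_data = false

  text.each_line do |raw|
    line = raw.strip
    next if line.empty? || line.start_with?("%")       # blank line or comment

    if in_data
      values = line.split(",").map(&:strip)
      row = values.each_with_index.map do |value, i|
        next nil if value == "?"                         # missing value
        attributes[i][:type] == :numeric ? Float(value) : value
      end
      rows << row
    elsif line =~ /\A@attribute\s+(\S+)\s+(.+)\z/i
      name, spec = Regexp.last_match(1), Regexp.last_match(2).strip
      type = if spec.start_with?("{")
               spec.delete("{}").split(",").map(&:strip)   # nominal values
             elsif spec =~ /\A(numeric|real|integer)\z/i
               :numeric
             else
               :string                                     # string/date kept as text
             end
      attributes << { name: name, type: type }
    elsif line =~ /\A@data\z/i
      in_data = true
    end
    # @relation and other header lines are ignored in this sketch.
  end

  { attributes: attributes, rows: rows }
end

sample = <<~ARFF
  @relation weather
  @attribute outlook {sunny, overcast, rainy}
  @attribute temperature real
  @attribute play {yes, no}
  @data
  sunny,85,no
  overcast,83,yes
  rainy,?,yes
ARFF

dataset = parse_simple_arff(sample)
p dataset[:rows]   # => [["sunny", 85.0, "no"], ["overcast", 83.0, "yes"], ["rainy", nil, "yes"]]
```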

====Example: Use of Ruby Band for Data Classification====

Classification and regression algorithms in WEKA are called “classifiers”; in ruby-band they are found under the Weka::Classifier module. At the time of writing, ruby-band supports only batch-trainable classifiers, i.e. classifiers that are trained on the whole dataset at once. Below is a quick example of training a classifier on a dataset parsed from an '''<code>[http://www.cs.waikato.ac.nz/ml/weka/arff.html ARFF]</code>''' (Attribute-Relation File Format) file. <ref name="rubybandGit" />

```ruby
require 'ruby-band'

# parse a dataset (the file name is a placeholder)
dataset = Core::Parser.parse_ARFF('my_file.arff')

# initialize and train a K* classifier
classifier = Weka::Classifier::Lazy::KStar::Base.new do
  set_options '-M d'      # K* missing-value treatment mode 'd'
  set_data dataset
  set_class_index 4       # the fifth attribute (index 4) is the class
end

# evaluate the classifier with 3-fold cross-validation
puts classifier.cross_validate(3)
```
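Cross-validation with 3 folds splits the data into three parts, trains on two of them and tests on the third, rotating until every part has been held out once. The pure-Ruby sketch below shows only that rotation, with a trivial majority-class predictor standing in for a real classifier; the method names are hypothetical, and WEKA additionally shuffles the data and, for nominal classes, stratifies the folds, which this sketch omits.

```ruby
# k-fold cross-validation skeleton: every instance is held out exactly once.
# The majority-class "model" is a placeholder for a real classifier.

def folds(instances, k)
  # Round-robin assignment: instance i goes to fold i % k (no shuffling here).
  groups = Array.new(k) { [] }
  instances.each_with_index { |inst, i| groups[i % k] << inst }
  groups
end

def train_majority(train)
  counts = Hash.new(0)
  train.each { |(_features, label)| counts[label] += 1 }
  counts.max_by { |_, c| c }.first
end

def cross_validate(instances, k)
  groups = folds(instances, k)
  correct = 0
  groups.each_index do |test_idx|
    test  = groups[test_idx]
    train = groups.reject.with_index { |_, j| j == test_idx }.flatten(1)
    prediction = train_majority(train)
    correct += test.count { |(_features, label)| label == prediction }
  end
  correct.to_f / instances.size   # accuracy pooled over all folds
end

labels = %w[yes yes no yes yes no yes yes yes]
data = labels.each_with_index.map { |label, i| [[i], label] }
puts cross_validate(data, 3).round(4)   # 0.7778 (7 of 9 held-out labels correct)
```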

====Example: Use of Ruby Band for Data Clustering====

Clustering is an unsupervised machine learning technique for finding patterns in data: clustering algorithms work without a class attribute, whereas classifiers are supervised and need one. The ruby-band examples cover clustering along the same lines as classification:

* Building a clusterer: batch learning (incremental learning was not yet implemented).
* Evaluating a clusterer: how to evaluate a built clusterer.
* Clustering instances: determining which clusters unknown instances belong to. <ref name="rubybandGit" />
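Once a centroid-based clusterer such as k-means has been built, the last two topics come down to simple computations. The pure-Ruby sketch below assigns unseen instances to their nearest centroid and scores the result by the within-cluster sum of squared errors, one common evaluation measure and the statistic SimpleKMeans reported earlier. The centroids are hypothetical, and WEKA normalizes attribute ranges before measuring distances, so its values are not directly comparable with this unnormalized version.

```ruby
# Assign instances to the nearest centroid and score a clustering by its
# within-cluster sum of squared errors (SSE). Plain Euclidean distance,
# no attribute normalization.

def squared_distance(a, b)
  a.zip(b).sum { |x, y| (x - y)**2 }
end

def cluster_instance(instance, centroids)
  (0...centroids.size).min_by { |j| squared_distance(instance, centroids[j]) }
end

def within_cluster_sse(instances, centroids)
  instances.sum { |inst| squared_distance(inst, centroids[cluster_instance(inst, centroids)]) }
end

centroids = [[1.0, 1.0], [8.0, 8.0]]              # hypothetical, from a built model
unseen    = [[0.5, 1.5], [7.0, 9.0], [9.0, 7.5]]

unseen.each { |inst| puts "#{inst.inspect} -> cluster #{cluster_instance(inst, centroids)}" }
puts within_cluster_sse(unseen, centroids)        # 3.75
```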

==Data Mining with Ruby and Twitter==

The following sections present a few scripts for collecting and presenting data available through the Twitter API. These scripts focus on simplicity, but you can extend and combine them to create new capabilities. <ref name="TwitterData">M. Tim Jones. (2011, Oct 11). ''Data Mining with Ruby and Twitter.'' Retrieved from http://www.ibm.com/developerworks/library/os-dataminingrubytwitter/os-dataminingrubytwitter-pdf.pdf</ref>

===Twitter User Information===

A large amount of information is available about each Twitter user, but only if the user's account is not protected. The script below retrieves a user's information (based on his or her screen name) and prints some of the more useful elements, using Ruby's to_s method to convert values to strings where needed. Note that it first checks that the user is not protected; otherwise, this data would not be accessible.

```ruby
#!/usr/bin/env ruby
# Written against the early twitter gem, which exposed class-level calls such as
# Twitter.user; version 5 and later use a configured Twitter::REST::Client instead.
require "rubygems"
require "twitter"

screen_name = ARGV[0] or abort "usage: #{$0} SCREEN_NAME"
a_user = Twitter.user(screen_name)

unless a_user.protected
  # to_s also turns nil fields into empty strings, avoiding TypeErrors
  puts "Username   : " + a_user.screen_name.to_s
  puts "Name       : " + a_user.name.to_s
  puts "Id         : " + a_user.id_str.to_s
  puts "Location   : " + a_user.location.to_s
  puts "User since : " + a_user.created_at.to_s
  puts "Bio        : " + a_user.description.to_s
  puts "Followers  : " + a_user.followers_count.to_s
  puts "Friends    : " + a_user.friends_count.to_s
  puts "Listed Cnt : " + a_user.listed_count.to_s
  puts "Tweet Cnt  : " + a_user.statuses_count.to_s
  puts "Geocoded   : " + a_user.geo_enabled.to_s
  puts "Language   : " + a_user.lang.to_s
  puts "URL        : " + a_user.url.to_s unless a_user.url.nil?
  puts "Time Zone  : " + a_user.time_zone.to_s unless a_user.time_zone.nil?
  puts "Verified   : " + a_user.verified.to_s
  puts

  # the user's most recent tweet
  tweet = Twitter.user_timeline(screen_name).first
  if tweet
    puts "Tweet time : " + tweet.created_at.to_s
    puts "Tweet ID   : " + tweet.id.to_s
    puts "Tweet text : " + tweet.text.to_s
  end
end
```
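The repeated to_s calls and nil checks in this script can be folded into one helper. The pure-Ruby sketch below prints label/value pairs aligned on the colon and skips nil fields such as an unset URL or time zone. The format_profile name and the profile hash are hypothetical stand-ins for the user object returned by the API, so the sketch runs without network access or credentials.

```ruby
# Format a profile as aligned "Label : value" lines, skipping nil fields.

def format_profile(fields)
  width = fields.keys.map(&:length).max
  fields.reject { |_, value| value.nil? }
        .map { |label, value| "#{label.ljust(width)} : #{value}" }
        .join("\n")
end

profile = {                                    # hypothetical sample values
  "Username"  => "example_user",
  "Followers" => 1234,
  "URL"       => nil,                          # unset fields are skipped
  "Time Zone" => "Eastern Time (US & Canada)",
  "Verified"  => false                         # false is a value, so it is kept
}

puts format_profile(profile)
```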

===Twitter User Behavior===

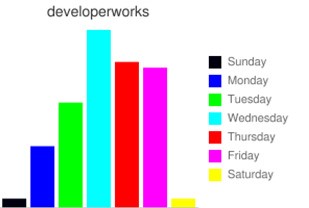

Twitter contains a large amount of data that you can mine to understand some aspects of user behavior. Two simple examples are analyzing when a user tweets and from which application. The script below iterates over a user's tweets (using the user_timeline method) and, for each tweet, extracts the day of the week on which it was posted. A simple hash accumulates the weekday counts, which are then plotted as a bar chart using Google Charts. Note the use of a default value for the hash, which specifies the value returned for keys that are not yet in the hash.

```ruby
#!/usr/bin/env ruby
require "rubygems"
require "time"
require "twitter"
require "google_chart"

screen_name = ARGV[0] or abort "usage: #{$0} SCREEN_NAME"

# Missing days default to 0, which also avoids a nil error in Google Charts
dayhash = Hash.new
dayhash.default = 0

timeline = Twitter.user_timeline(screen_name, :count => 200)
timeline.each do |t|
  # abbreviated weekday ("Mon", "Tue", ...); parsing copes with created_at
  # being either Twitter's text format or a Time object
  tweetday = Time.parse(t.created_at.to_s).utc.strftime("%a")
  dayhash[tweetday] += 1
end
```

The counts are then plotted as a bar chart of tweet activity per day:

```ruby
# one bar per weekday; to_url returns the URL of the chart image
GoogleChart::BarChart.new('300x200', screen_name, :vertical, false) do |bc|
  bc.data "Sunday",    [dayhash["Sun"]], '00000f'
  bc.data "Monday",    [dayhash["Mon"]], '0000ff'
  bc.data "Tuesday",   [dayhash["Tue"]], '00ff00'
  bc.data "Wednesday", [dayhash["Wed"]], '00ffff'
  bc.data "Thursday",  [dayhash["Thu"]], 'ff0000'
  bc.data "Friday",    [dayhash["Fri"]], 'ff00ff'
  bc.data "Saturday",  [dayhash["Sat"]], 'ffff00'
  puts bc.to_url
end
```

[[File:img2333.jpg]]

The figure above shows the result of running the tweet-days script for the developerWorks account. Wednesday tends to be the most active tweet day, with Saturday and Sunday the least active.
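The counting step of the tweet-days script can be exercised without Twitter access. The sketch below tallies weekdays from timestamps with Hash.new(0), whose default of 0 makes counts[day] += 1 safe for days not seen yet. The weekday_counts name and the timestamps are hypothetical; the timestamps follow the text format Twitter used for created_at.

```ruby
require "time"

# Count tweets per weekday. Hash.new(0) returns 0 for missing keys,
# so no has_key? check is needed before incrementing.
def weekday_counts(timestamps)
  timestamps.each_with_object(Hash.new(0)) do |ts, counts|
    counts[Time.parse(ts).utc.strftime("%a")] += 1   # weekday in UTC: "Mon", "Tue", ...
  end
end

stamps = [                                           # hypothetical tweet times
  "Wed Oct 05 14:02:11 +0000 2011",
  "Wed Oct 12 09:30:00 +0000 2011",
  "Sat Oct 08 18:45:27 +0000 2011"
]

counts = weekday_counts(stamps)
%w[Sun Mon Tue Wed Thu Fri Sat].each { |day| puts "#{day}: #{counts[day]}" }
```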

==References==

<references/>

==Further Reading==

# Rudi Cilibrasi. (2008, Oct 4). ''CompLearn: A compression-based data-mining toolkit.'' Retrieved from http://www.ruby-doc.org/gems/docs/c/complearn-0.6.2/doc/readme_txt.html
# Seamus Abshere and Andy Rossmeissl. (2012, May 14). ''Real-time example of data mining in Rails.'' [http://impact.brighterplanet.com/] Retrieved from http://www.rubydoc.info/gems/data_miner-ruby19/frames

Latest revision as of 00:36, 8 October 2013

Data Mining in Rails Application

This wiki discusses the implementation of Data Mining tasks i.e automatic or semi-automatic analysis of large quantities of data to extract previously unknown interesting patterns such as groups of data records, unusual records and dependencies in Rails Application.

Introduction to Data Mining in Rails Application

Data mining <ref name="mining> Usama Fayyad, Gregory Piatetsky-Shapiro and Padhraic Smyth. (2008, Dec 17). From Data Mining to Knowledge Discoveries in Databases. Retrieved from http://www.kdnuggets.com/gpspubs/aimag-kdd-overview-1996-Fayyad.pdf</ref> (the analysis step of the "Knowledge Discovery in Databases" process, or KDD) is the computational process of discovering patterns in large data sets involving methods at the intersection of artificial intelligence, machine learning, statistics, and database systems. The overall goal of the data mining process is to extract information from a data set and transform it into an understandable structure for further use.

Ruby on Rails, often simply Rails, is an open source web application framework which runs on the Ruby programming language. Data mining techniques like K-means clustering can be developed on ruby on rails by using various gems which ruby provides.

Thus it becomes easier for a data mining analyst to write mining code in a ruby on rails application.

Weka and Ruby

Weka is a collection of machine learning algorithms for data mining tasks. The algorithms can either be applied directly to a dataset or called from an embedded code. Weka contains tools for data pre-processing, classification, regression, clustering, association rules, and visualization.[1] It is also well-suited for developing new machine learning schemes. Weka is written in Java however it is possible to use Weka’s libraries inside Ruby. To do this, we must install Java, Rjb, and of course obtain the Weka source code. We use JRuby and this is illustrated as follows: <ref name="wekaruby">Peter Lane. (2009, Aug 15). Accessing Weka with JRuby. Retrieved from http://rubyforscientificresearch.blogspot.com/2009/08/accessing-weka-from-jruby.html</ref>

Clustering Data using WEKA from JRuby

JRuby provides easy access to Java classes and methods, and WEKA is no exception. The following program builds a simple k-means cluster on a supplied input file, and then prints out the assigned cluster for each data instance. The 'include_class' statements are there to simplify references to classes in the API. When classifying each instance, we must watch for the exception thrown in case a classification cannot be made. Finally, notice that the filename is passed as a command-line parameter: the parameters after the name of the JRuby program are packaged up into ARGV in the usual ruby style. Assuming weka.jar, jruby.jar, and your program are in the same folder, a sample Ruby example is shown bellow:

# Weka scripting from jruby

require "java"

require "weka"

include_class "java.io.FileReader"

include_class "weka.clusterers.SimpleKMeans"

include_class "weka.core.Instances"

# load data file

file = FileReader.new ARGV[0]

data = Instances.new file

# create the model

kmeans = SimpleKMeans.new

kmeans.buildClusterer data

# print out the built model

print kmeans

# Display the cluster for each instance

data.numInstances.times do |i|

cluster = "UNKNOWN"

begin

cluster = kmeans.clusterInstance(data.instance(i))

rescue java.lang.Exception

end

puts "#{data.instance(i)},#{cluster}"

end

We can see that the WEKA API makes it easy to pass in a data file. Data can be in a number of formats, including ARFF and CSV. When run on the weather.arff example (in WEKA's 'data' folder), the output looks like the following:

Number of iterations: 3

Within cluster sum of squared errors: 16.237456311387238

Missing values globally replaced with mean/mode

Cluster centroids:

Cluster#

Attribute Full Data 0 1

(14) (9) (5)

outlook sunny sunny overcast

temperature 73.5714 75.8889 69.4

humidity 81.6429 84.1111 77.2

windy FALSE FALSE TRUE

play yes yes yes

Advantages of using Weka from jRuby

One of the advantages of using a language like jruby to talk to WEKA is that we should have more control on how our data is constructed and passed to the machine-learning algorithms. A good start is how to construct our own set of instances, rather than reading them directly in from file. There are some quirks to WEKA's construction of a set of instances. In particular, each attribute must be defined through an instance of the Attribute class. This class gives a string name to the attribute and if the attribute is a nominal attribute, the class also holds a vector of the nominal values. Each instance can then be constructed and added to the growing set of instances.

Popular gems for Data Mining

Since data mining is a complex process of retrieving data from a huge set of databases, many gems are used to ease this procedure in a rails application. Some of the popular gems are RVol, Rbbt and RubyBand. They are explained below.

RVol

Gem for creating a database for data mining stock markets focusing on SP500 data. Rvol enables investors to study market volatility from free data on the internet. RVol is the most popular gem for data mining applications developed in Rails. The created database could be used for instance from R or Matlab or similar to do quantitative analysis. You could script new data mining functions extending the Rvol library, load the data into a statistics package, or use it with your own quantitative framework. Usage: rvol -s will create the database. The database contains stocks (with industries), options, implied volatility and calculated standard deviations for the day. Earnings are downloaded and listed with implied volatilities for front and back month options. There are some reports, which can be generated after the database is downloaded by looking at rvol -p. There is a function to calculate correlations between stocks in the same industry groups (--correlationAll, --correlation10 (10 day correlation),this will take a long time and jruby is recommended for a better use of system resources. Rvol downloads option chains, calculates implied volatilities for them and has features to list top 10 type of lists for potential investment opportunities. 'Put' call ratios are calculated , total amount of 'puts' or 'calls' for a particular company etc are available. These can be used to measure the market sentiment. Different filters can be used to find stocks/options with high volatilities, stocks with high options volume for the day etc which are indicators of forthcoming events. <ref name="rvol">Toni Karhu. (2012, Aug 18). The Ruby ToolBox: RVol. Retrieved from https://www.ruby-toolbox.com/projects/rvol</ref>

Installation of RVol

Since RVol is a Ruby Gem, we must install it by including it in the Gem file of our Rails application. Version 0.6.4 is the latest and the most stable version by Tonic.

gem install rvol -v 0.6.4

Example: Use of RVol to Generate stock market databases

RVol is commonly used to populate databases for Mining stock market data. A sample code snippet below shows the simple way to generate a test database from internet data. <ref name="rvolEg">Toni Karhu. (2012, Aug 19). A ruby gem for downloading and processing options chains and financial data. Retrieved from https://github.com/tonik/rvol</ref>

require 'Rvol' require 'test/unit' require 'dm-core' require 'dm-migrations' require 'model/stock' require 'model/chain' require 'model/earning' require 'model/stockcorrelation' #set the test db an in memory db DataMapper::Logger.new($stdout, :debug) puts File.dirname(__FILE__) DataMapper.setup(:default, "sqlite::memory:") DataMapper.finalize DataMapper.auto_migrate! # GENERATE THE TEST DATABASE FROM INTERNET DATA puts 'GENERATING TEST DATABASE AND TESTING SCRAPERS' end

Rbbt

Rbbt stands for Ruby Bio-Text, it started as an API for text mining developed for SENT , but its functionality has been used for other applications as well, such as MARQ . Rbbt covers several functionalities, some will work right away, some require to install dependencies or download and process data from the internet. Since not all users are likely to need all the functionalities, the dependencies of this gem include only the very basic requirements. Dependencies may appear unexpectedly when using new parts of the API. <ref name="rbbt">Miguel Vazquez. (2013, June 10). Rbbt. Retrieved from http://rubydoc.info/gems/rbbt/frames</ref>

Installation of Rbbt

Install the gem normally gem install rbbt. The gem includes a configuration tool rbbt_config. The first time you run it, it will ask you to configure some paths. After that you may use it to process the data for different tasks.

Example: Use of Rbbt to translate identifiers

1. Do rbbt_config prepare identifiers to do deploy the configuration files and enter 'gene' data, this needs to be done just once.

2. Now you may do rbbt_config install 'organisms' to process all the 'organisms', or rbbt_config install organisms such as 'yeast'.

3. You may now use a script like this to translate 'gene' identifiers from 'yeast' feed from the standard input.

require 'rbbt/sources/organism'

index = Organism.id_index('Sce', :native => 'Enter Gene Id')

STDIN.each_line{|l| puts "#{l.chomp} => #{index[l.chomp]}"}

Ruby Band

Ruby Band is a Ruby Gem that makes use of some selected Java software for data mining and machine learning applications available to the JRuby/Ruby users. Ruby Band features a comprehensive collection of data preprocessing and modeling techniques, and supports several standard data mining tasks, more specifically: data pre-processing (filtering), clustering, classification, regression, and feature selection . <ref name="rubyband">Alberto Arrigoni. (2013, Sept 24). Data Mining in JRuby with Ruby Band. Retrieved from http://sciruby.com/blog/2013/09/24/gsoc-2013-data-mining-in-jruby-with-ruby-band</ref>

Installation of Ruby Band

Install the 'jbundle' gem and 'bundle' for JRuby before trying to install the 'ruby-band' gem.

If you want to use 'ruby-band' APIs without installing the gem you need to run command 'rake -T' once before requiring the gem in your script (this is necessary for jbundler to download the '.jar' files and subsequently set the Java classpath). Otherwise use:

gem install ruby-band

Example: Use of Ruby Band for Data Parsing

One central datatype of ruby-band is derived from the Weka counterpart (the class Weka.core.Instances). By instantiating this class, we obtain a matrix-like structure for storing an entire dataset. Ad-hoc methods were created to guarantee that 'Instances' class objects can be converted to other datatypes (e.g. Apache matrix) and back. There are currently many ways to import data into ruby-band. But the basic way is to Parse data from ARFF/CSV files. <ref name="rubybandGit">Alberto Arrigoni. (2013, Sept 23). Ruby-Band Examples. Retrieved from https://github.com/arrigonialberto86/ruby-band</ref>

You can simply parse an external Weka ARFF/CSV file by doing:

require 'ruby-band' dataset = Core::Parser.parse_ARFF(my_file.arff) dataset = Core::Parser.parse_CSV(my_file.csv)

Example: Use of Ruby Band for Data Classification

Classification and regression algorithms in WEKA are called “classifiers” and are located below the Weka::Classifier:: module. Currently, ruby-band only supports batch-trainable classifiers: this means they get trained on the whole dataset at once. Below is a quick example of how to train a classifier on a dataset parsed from an ARFF(attribute relation file format). <ref name="rubybandGit">Alberto Arrigoni. (2013, Sept 23). Ruby-Band Examples. Retrieved from https://github.com/arrigonialberto86/ruby-band</ref>

require 'ruby-band' # parse a dataset dataset = Core::Parser.parse_ARFF(my_file.arff) # initialize and train a classifier classifier = Weka::Classifier::Lazy::KStar::Base.new do set_options '-M d' set_data dataset set_class_index 4 end # cross-validate the trained classifier puts classifier.cross_validate(3)

Example: Use of Ruby Band for Data Clustering

Clustering is an unsupervised machine learning technique for finding patterns in data: clustering algorithms work without a class attribute. Classifiers, on the other hand, are supervised and need a class attribute. Mirroring the classifier documentation, the ruby-band documentation covers the following clustering topics:

- Building a clusterer: batch learning (incremental learning is not yet implemented).

- Evaluating a clusterer: how to evaluate a built clusterer.

- Clustering instances: determining which clusters unknown instances belong to. <ref name="rubybandGit" />
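The sketch below maps each of the three steps onto a minimal pure-Ruby k-means implementation. It is not ruby-band's clusterer API, and `dist2`, `nearest`, `kmeans` and `sse` are hypothetical helpers:

- **Building** trains in batch on all points.
- **Evaluating** uses the within-cluster sum of squared errors.
- **Clustering** assigns an unseen point to its nearest centroid.

For reproducibility the sketch seeds the centroids with the first k points instead of choosing them at random. That choice is an assumption, not part of k-means itself.

```ruby
# Squared Euclidean distance between two numeric vectors.
def dist2(a, b)
  a.zip(b).sum { |x, y| (x - y)**2 }
end

# "Clustering instances": index of the centroid nearest to a (possibly unseen) point.
def nearest(centroids, point)
  centroids.each_index.min_by { |c| dist2(centroids[c], point) }
end

# "Building a clusterer" (batch): plain k-means over all points at once.
# Seeds with the first k points (an assumption, for reproducibility).
def kmeans(points, k, max_iter = 100)
  centroids = points.first(k).map(&:dup)
  assignments = nil
  max_iter.times do
    new_assignments = points.map { |p| nearest(centroids, p) }
    break if new_assignments == assignments        # no point moved: converged
    assignments = new_assignments
    centroids = centroids.each_index.map do |c|
      members = points.select.with_index { |_, i| assignments[i] == c }
      next centroids[c] if members.empty?          # keep an empty cluster's centroid
      members.transpose.map { |col| col.sum.fdiv(col.size) }   # per-dimension mean
    end
  end
  [centroids, assignments]
end

# "Evaluating a clusterer": within-cluster sum of squared errors (lower = tighter).
def sse(points, centroids, assignments)
  points.each_with_index.sum { |p, i| dist2(p, centroids[assignments[i]]) }
end

points = [[1.0, 1.0], [1.5, 2.0], [8.0, 8.0], [9.0, 8.5], [1.0, 0.5], [8.5, 9.0]]
centroids, assignments = kmeans(points, 2)
p assignments                       # => [0, 0, 1, 1, 0, 1]
p nearest(centroids, [7.5, 9.0])    # => 1
puts sse(points, centroids, assignments)   # about 2.33
```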

Data Mining with Ruby and Twitter

The following sections present a few scripts for collecting and presenting data available through the Twitter API. These scripts focus on simplicity, but you can extend and combine them to create new capabilities. <ref name="TwitterData">M. Tim Jones. (2011, Oct 11). Data Mining with Ruby and Twitter. Retrieved from http://www.ibm.com/developerworks/library/os-dataminingrubytwitter/os-dataminingrubytwitter-pdf.pdf</ref>

Twitter User Information

A large amount of information is available about each Twitter user, but it is accessible only if the user's account isn't protected. Let's look at how you can extract a user's data and present it in a more convenient way. The listing below presents a simple Ruby script that retrieves a user's information (based on his or her screen name) and then emits some of the more useful elements. It uses Ruby's 'to_s' method to convert values to strings as needed. Note that the script first checks that the user isn't protected; otherwise, this data wouldn't be accessible.

```ruby
#!/usr/bin/env ruby
require "rubygems"
require "twitter"

screen_name = String.new ARGV[0]

a_user = Twitter.user(screen_name)

# only unprotected accounts expose this data
if a_user.protected != true
  puts "Username : " + a_user.screen_name.to_s
  puts "Name : " + a_user.name.to_s
  puts "Id : " + a_user.id_str
  puts "Location : " + a_user.location.to_s
  puts "User since : " + a_user.created_at.to_s
  puts "Bio : " + a_user.description.to_s
  puts "Followers : " + a_user.followers_count.to_s
  puts "Friends : " + a_user.friends_count.to_s
  puts "Listed Cnt : " + a_user.listed_count.to_s
  puts "Tweet Cnt : " + a_user.statuses_count.to_s
  puts "Geocoded : " + a_user.geo_enabled.to_s
  puts "Language : " + a_user.lang.to_s
  # optional fields may be nil
  puts "URL : " + a_user.url.to_s if a_user.url != nil
  puts "Time Zone : " + a_user.time_zone if a_user.time_zone != nil
  puts "Verified : " + a_user.verified.to_s
  puts

  # most recent tweet (the timeline may be empty)
  tweet = Twitter.user_timeline(screen_name).first
  if tweet
    puts "Tweet time : " + tweet.created_at.to_s
    puts "Tweet ID : " + tweet.id.to_s
    puts "Tweet text : " + tweet.text
  end
end
```
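The presentation logic of the script can be sketched offline, without calling the Twitter API. The script does three things:

- It skips protected accounts.
- It converts each value to a string.
- It prints optional fields only when they are present.

In this sketch a plain Ruby Hash stands in for the twitter gem's user object. `FIELDS`, `OPTIONAL`, `profile_lines` and the sample user data are all hypothetical.

```ruby
# Hypothetical field list: Hash key => printed label.
FIELDS = {
  screen_name: "Username", name: "Name", location: "Location",
  followers_count: "Followers", friends_count: "Friends",
  statuses_count: "Tweet Cnt", url: "URL", time_zone: "Time Zone"
}.freeze
OPTIONAL = %i[url time_zone].freeze   # printed only when present

# Formats a user profile (a Hash standing in for the gem's user object)
# into aligned "Label : value" lines.
def profile_lines(user)
  return [] if user[:protected]       # protected accounts expose no data
  width = FIELDS.values.map(&:length).max
  lines = FIELDS.map do |key, label|
    value = user[key]
    next nil if value.nil? && OPTIONAL.include?(key)
    "#{label.ljust(width)} : #{value}"   # interpolation calls to_s (nil -> "")
  end
  lines.compact
end

user = { screen_name: "example_user", name: "Example", location: nil,
         followers_count: 42, friends_count: 7, statuses_count: 1000,
         url: nil, time_zone: "UTC", protected: false }
puts profile_lines(user)
```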

Twitter User Behavior

Twitter contains a large amount of data that you can mine to understand some elements of user behavior. Two simple examples are analyzing when a Twitter user tweets and from which application the user tweets. The script below covers the first of these. It iterates over the tweets of a particular user (using the user_timeline method, requesting up to 200 tweets) and, for each tweet, extracts the day on which the tweet was posted. A simple hash accumulates the weekday counts, and the script then generates a bar chart using Google Charts. Note the use of a default value for the hash: it specifies the value returned for undefined keys, so a day without tweets reads as 0 rather than nil.

```ruby
#!/usr/bin/env ruby
require "rubygems"
require "twitter"
require "google_chart"

screen_name = String.new ARGV[0]

dayhash = Hash.new
# Initialize to avoid a nil error with GoogleCharts (undefined is zero)
dayhash.default = 0

timeline = Twitter.user_timeline(screen_name, :count => 200)
timeline.each do |t|
  # first three characters of the timestamp, i.e. the day name (e.g. "Wed")
  tweetday = t.created_at.to_s[0..2]
  # the default of 0 makes an explicit has_key? check unnecessary
  dayhash[tweetday] += 1
end
```

Next, the script builds a bar chart of per-day tweet activity with GoogleChart::BarChart and prints the resulting chart URL:

```ruby
GoogleChart::BarChart.new('300x200', screen_name, :vertical, false) do |bc|
  bc.data "Sunday",    [dayhash["Sun"]], '00000f'
  bc.data "Monday",    [dayhash["Mon"]], '0000ff'
  bc.data "Tuesday",   [dayhash["Tue"]], '00ff00'
  bc.data "Wednesday", [dayhash["Wed"]], '00ffff'
  bc.data "Thursday",  [dayhash["Thu"]], 'ff0000'
  bc.data "Friday",    [dayhash["Fri"]], 'ff00ff'
  bc.data "Saturday",  [dayhash["Sat"]], 'ffff00'
  puts bc.to_url
end
```
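The chart URL above is rendered by an external Google service. The same per-weekday analysis can also be sketched offline: tally timestamps into a hash whose default is 0, then print a text bar chart. The sketch below is an illustration under stated assumptions:

- `weekday_counts`, `text_chart` and the sample timestamps are hypothetical.
- It reads the day with `Time#wday` instead of slicing the timestamp string, assuming `created_at` values are `Time` objects.

```ruby
# Day abbreviations indexed by Time#wday (0 = Sunday ... 6 = Saturday).
DAYS = %w[Sun Mon Tue Wed Thu Fri Sat].freeze

# Count timestamps per weekday; the hash default of 0 means a day with
# no tweets reads as 0 instead of nil.
def weekday_counts(times)
  counts = Hash.new(0)
  times.each { |t| counts[DAYS[t.wday]] += 1 }
  counts
end

# One text bar per day, Sunday through Saturday (same order as the chart series).
def text_chart(counts)
  DAYS.map { |d| format("%s %-10s %d", d, "#" * counts[d], counts[d]) }
end

# Made-up sample timestamps standing in for tweet created_at values.
times = [
  Time.utc(2011, 10, 11),      # a Tuesday
  Time.utc(2011, 10, 12, 9),   # a Wednesday
  Time.utc(2011, 10, 12, 15),  # the same Wednesday
  Time.utc(2011, 10, 16)       # a Sunday
]
puts text_chart(weekday_counts(times))
```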

Running this script against the developerWorks account (the example used in the source article) showed Wednesday to be the most active tweeting day, with Saturday and Sunday the least active. <ref name="TwitterData" />

References

<references/>

Further Reading

- Rudi Cilibrasi. (2008, Oct 4). ''CompLearn: A compression-based data-mining toolkit.'' Retrieved from http://www.ruby-doc.org/gems/docs/c/complearn-0.6.2/doc/readme_txt.html

- Seamus Abshere and Andy Rossmeissl. (2012, May 14). ''Real time example of Data Mining in Rails.'' Retrieved from http://www.rubydoc.info/gems/data_miner-ruby19/frames