CSC/ECE 506 Spring 2012/10a jp: Difference between revisions


| (97 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

='''Prefetching and | ='''Prefetching and Consistency Models'''= | ||

=='''Introduction'''== | =='''Introduction'''== | ||

Buffering and pipelining are attractive techniques for hiding the latency of memory accesses in large scale shared-memory multiprocessors. However, the unconstrained use of these techniques can result in an intractable programming model for the machine. Consistency models provide more tractable programming models by introducing various restrictions on the amount of buffering and pipelining allowed. | Buffering and pipelining are attractive techniques for hiding the latency of memory accesses in large scale shared-memory multiprocessors. However, the unconstrained use of these techniques can result in an intractable programming model for the machine. Consistency models provide more tractable programming models by introducing various restrictions on the amount of buffering and pipelining allowed. | ||

Several memory consistency models have been proposed in the literature. The strictest model is sequential consistency, which requires the execution of a parallel program to appear as some interleaving of the execution of the parallel processes on a sequential machine. Sequential consistency imposes severe restrictions on buffering and pipelining of memory accesses. | Several memory consistency models have been proposed in the literature. The strictest model is sequential consistency, which requires the execution of a parallel program to appear as some interleaving of the execution of the parallel processes on a sequential machine. Sequential consistency imposes severe restrictions on buffering and pipelining of memory accesses. One of the least strict models is release consistency (RC), which allows significant overlap of memory accesses provided that synchronization accesses are identified and classified into acquires and releases. Other relaxed models that have been discussed in the literature are processor consistency (PC), weak consistency (WC), and data-race-free-0 (DRF0). These models fall between the sequential and release consistency models in terms of strictness. | ||

=='''Prefetching'''== | =='''Prefetching'''== | ||

| Line 23: | Line 22: | ||

For a write operation, a read-exclusive prefetch can be used to acquire exclusive ownership of the line, enabling the write to that location to complete quickly once it is allowed to perform. A read-exclusive prefetch is only possible if the coherence scheme is invalidation-based. Similar to the read prefetch case, the line is invalidated if another processor writes to the location between the time the read-exclusive prefetch completes and the actual write operation is allowed to proceed. In addition, exclusive ownership is surrendered if another processor reads the location during that time. | For a write operation, a read-exclusive prefetch can be used to acquire exclusive ownership of the line, enabling the write to that location to complete quickly once it is allowed to perform. A read-exclusive prefetch is only possible if the coherence scheme is invalidation-based. Similar to the read prefetch case, the line is invalidated if another processor writes to the location between the time the read-exclusive prefetch completes and the actual write operation is allowed to proceed. In addition, exclusive ownership is surrendered if another processor reads the location during that time. | ||

==='''Software Implementation'''<ref>Fu, J.W.C. and J.H. Patel, “Data Prefetching in Multiprocessor Vector Cache Memories,” | |||

Proc. 18th International Symposium on Computer Architecture, Toronto, Ont., Canada, May | |||

1991, p. 54-63.</ref>=== | |||

[[Image:P4.PNG|thumbnail|right|x500px|Figure 1: Inner product calculation using a) no prefetching, b) simple prefetching, c) prefetching with loop unrolling and d) software pipelining.<sup><span id="3body"></span></sup>]] | |||

Most contemporary microprocessors support some form of fetch instruction which can be used to implement prefetching. The implementation of a fetch can be as simple as a load into a processor register that has been hardwired to zero. Slightly more sophisticated implementations provide hints to the memory system as to how the prefetched block will be used. Such information may be useful in multiprocessors where data can be prefetched in different sharing states, for example. Although particular implementations will vary, all fetch instructions share some common characteristics. Fetches are non-blocking memory operations and therefore require a lockup-free cache that allows prefetches to bypass other outstanding memory operations in the cache. | |||

Prefetches are typically implemented in such a way that fetch instructions cannot cause exceptions. Exceptions are suppressed for prefetches to ensure that they remain an optional optimization feature that does not affect program correctness or initiate large and potentially unnecessary overhead, such as page faults or other memory exceptions. The task of choosing where in the program to place a fetch instruction relative to the matching load or store instruction is known as prefetch scheduling. | |||

Fetch instructions may be added by the programmer or by the compiler during an optimization pass. Unlike many optimizations which occur too frequently in a program or are too tedious to implement by hand, prefetch scheduling can often be done effectively by the programmer. Whether hand-coded or automated by a compiler, prefetching is most often used within loops responsible for large array calculations. Such loops provide excellent prefetching opportunities because they are common in scientific codes, exhibit poor cache utilization and often have predictable array referencing patterns. By establishing these patterns at compile-time, fetch instructions can be placed inside loop bodies so that data for a future loop iteration can be prefetched during the current iteration. | |||

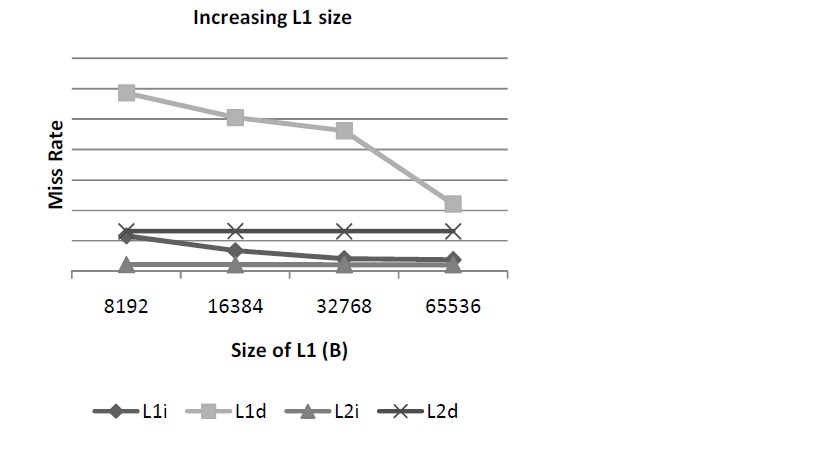

As an example of how loop-based prefetching may be used, consider the code segment shown in Figure 1a. This loop calculates the inner product of two vectors, a and b, in a manner similar to the innermost loop of a matrix multiplication calculation. If we assume a four-word cache block, this code segment will cause a cache miss every fourth iteration. We can attempt to avoid these cache misses by adding the prefetch directives shown in Figure 1b. Note that this figure is a source code representation of the assembly code that would be generated by the compiler. Issuing a fetch on every iteration is wasteful, however, because each fetch brings in a whole four-word block; unrolling the loop by a factor of four, as in Figure 1c, allows one fetch per array to be issued per cache block. The code segment given in Figure 1c removes most cache misses and unnecessary prefetches but further improvements are possible. Note that cache misses will occur during the first iteration of the loop since prefetches are never issued for the initial iteration. Unnecessary prefetches will occur in the last iteration of the unrolled loop where the fetch commands attempt to access data past the loop index boundary. Both of the above problems can be remedied by using software pipelining techniques as shown in Figure 1d.<ref>Luk, C-K. and T.C. Mowry, “Compiler-based Prefetching for Recursive Data Structures,” | |||

Proc. 7th Conf. on Architectural Support for Programming Languages and Operating | |||

Systems, Cambridge, MA, October 1996, p. 222-233. | |||

</ref> | |||
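The loop transformations described above can be sketched in C. This is a minimal illustration in the spirit of Figure 1d, not a copy of the figure: it assumes a GCC- or Clang-compatible compiler (for the <code>__builtin_prefetch</code> hint), and the function name and the <code>BLOCK</code> constant of four elements per cache block are hypothetical choices made for the example.

```c
#include <assert.h>
#include <stddef.h>

/* Assumption for illustration: one cache block holds four array elements,
   mirroring the four-word block used in the text. */
#define BLOCK 4

/* Inner product with software-pipelined prefetching.
   __builtin_prefetch is a GCC/Clang builtin that acts only as a hint. */
double inner_product_prefetch(const double *a, const double *b, size_t n)
{
    assert(n == 0 || (a != NULL && b != NULL));
    double ip = 0.0;
    size_t i = 0;

    /* Prologue: request the first block of each array before the loop starts. */
    if (n > 0) {
        __builtin_prefetch(&a[0], 0, 3);
        __builtin_prefetch(&b[0], 0, 3);
    }

    /* Main loop, unrolled by BLOCK: one fetch per array per cache block.
       The condition guarantees a[i + BLOCK] is inside the array, so no
       prefetch is issued past the end of the data. */
    for (; i + BLOCK < n; i += BLOCK) {
        __builtin_prefetch(&a[i + BLOCK], 0, 3);
        __builtin_prefetch(&b[i + BLOCK], 0, 3);
        ip += a[i]     * b[i];
        ip += a[i + 1] * b[i + 1];
        ip += a[i + 2] * b[i + 2];
        ip += a[i + 3] * b[i + 3];
    }

    /* Epilogue: remaining elements, with no further prefetches. */
    for (; i < n; i++)
        ip += a[i] * b[i];

    return ip;
}
```

The prologue covers the first iteration and the epilogue avoids fetches beyond the loop bound, which are the two problems the text attributes to the unrolled version. Whether this helps on a given machine depends on its cache block size and on any hardware prefetcher already present.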

==='''Hardware Implementation'''=== | ==='''Hardware Implementation'''=== | ||

| Line 29: | Line 46: | ||

Multiprocessors with Memory Hierarchies,” Proc. International Conference on | Multiprocessors with Memory Hierarchies,” Proc. International Conference on | ||

Supercomputing, Amsterdam, Netherlands, June 1990, p. 354-68.</ref>==== | Supercomputing, Amsterdam, Netherlands, June 1990, p. 354-68.</ref>==== | ||

[[Image:P1.PNG|thumbnail|right|x200px|Figure | [[Image:P1.PNG|thumbnail|right|x200px|Figure 2:Interfacing in Simulated Processing Node Architecture <sup><span id="5body"></span></sup>]] | ||

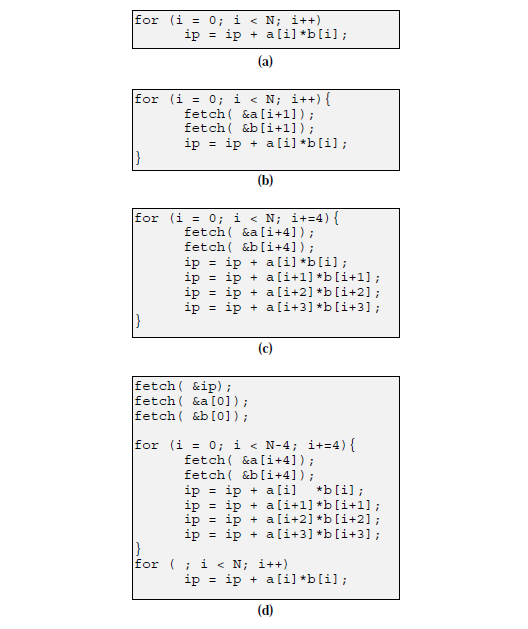

In contrast to software-controlled prefetching, the processors do not need to support specific prefetch instructions. The environment to which these processors are interfaced is shown in the figure below. According to the figure, the processing node consists of a processor, a first-level cache (FLC), a second-level cache (SLC), first- and second-level write buffers (FLWB and SLWB), a local bus, a network interface controller, and a memory module. The FLC is a direct-mapped write-through cache with no allocation of blocks on write misses and is blocking on read misses. The SLC is a direct-mapped write-back cache. For both prefetching techniques, we only prefetch into the SLC. In addition, the SLWB keeps track of the outstanding requests (SLC read misses, prefetches and write requests). The SLC controller deals with all the complexities of the cache coherence protocol and the prefetching mechanism. The SLC snoops on the local bus for consistency actions: if an invalidation to a block residing in the SLC is detected, the copy of the block is invalidated in both the SLC and the FLC. Requests from the local bus have priority over those from the FLWB. These interferences with the processor accesses are tolerable because most accesses hit in the FLC. | In contrast to software-controlled prefetching, the processors do not need to support specific prefetch instructions. The environment to which these processors are interfaced is shown in the figure below. According to the figure, the processing node consists of a processor, a first-level cache (FLC), a second-level cache (SLC), first- and second-level write buffers (FLWB and SLWB), a local bus, a network interface controller, and a memory module. The FLC is a direct-mapped write-through cache with no allocation of blocks on write misses and is blocking on read misses. The SLC is a direct-mapped write-back cache. For both prefetching techniques, we only prefetch into the SLC. 
In addition, the SLWB keeps track of the outstanding requests (SLC read misses, prefetches and write requests). The SLC controller deals with all the complexities of the cache coherence protocol and the prefetching mechanism. The SLC snoops on the local bus for consistency actions: if an invalidation to a block residing in the SLC is detected, the copy of the block is invalidated in both the SLC and the FLC. Requests from the local bus have priority over those from the FLWB. These interferences with the processor accesses are tolerable because most accesses hit in the FLC. | ||

<ref>Sequential Hardware Prefetching in Shared-Memory Multiprocessors by Fredrik Dahlgren and Michel Dubois</ref> | <ref>Sequential Hardware Prefetching in Shared-Memory Multiprocessors by Fredrik Dahlgren and Michel Dubois</ref> | ||

===='''Sequential Prefetching'''<ref>Data Prefetch Mechanisms by Steven P. VanderWiel and David J. Lilja | ===='''Sequential Prefetching'''<ref>Data Prefetch Mechanisms by Steven P. VanderWiel and David J. Lilja | ||

</ref>==== | </ref>==== | ||

[[Image:P2.PNG|thumbnail|right|x300px|Figure | [[Image:P2.PNG|thumbnail|right|x300px|Figure 3:Three forms of sequential prefetching: a) Prefetch on miss, b) tagged prefetch and | ||

c) sequential prefetching with K = 2<sup><span id="3body"></span></sup>]] | c) sequential prefetching with K = 2<sup><span id="3body"></span></sup>]] | ||

Increasing the cache block size increases the likelihood of two processors sharing data from the same block and hence false sharing is more likely to arise. Sequential prefetching can take advantage of spatial locality without introducing some of the problems associated with large cache blocks. The simplest sequential prefetching schemes are variations upon the one block lookahead (OBL) approach which initiates a prefetch for block b+1 when block b is accessed. For example, a large block may contain one word which is frequently referenced and several other words which are not in use. Assuming an LRU replacement policy, the entire block will be retained even though only a portion of the block’s data is actually in use. If this large block were replaced with two smaller blocks, one of them could be evicted to make room for more active data. The reason prefetch-on-miss is less effective is illustrated in the adjacent figure, where the behavior of each algorithm when accessing three contiguous blocks is shown. Here, it can be seen that a strictly sequential access pattern will result in a cache miss for every other cache block when the prefetch-on-miss algorithm is used but this same access pattern results in only one cache miss when employing a tagged prefetch algorithm. | Most (but not all) prefetching schemes are designed to fetch data from main memory into the processor cache in units of cache blocks. It should be noted, however, that multiple word cache blocks are themselves a form of data prefetching. By grouping consecutive memory words into single units, caches exploit the principle of spatial locality to implicitly prefetch data that is likely to be referenced in the near future. The degree to which large cache blocks can be effective in prefetching data is limited by the ensuing cache pollution effects. That is, as the cache block size increases, so does the amount of potentially useful data displaced from the cache to make room for the new block. 
In shared memory multiprocessors with private caches, large cache blocks may also cause false sharing which occurs when two or more processors wish to access different words within the same cache block and at least one of the accesses is a store. Although the accesses are logically applied to separate words, the cache hardware is unable to make this distinction since it operates only on whole cache blocks. The accesses are therefore treated as operations applied to a single object and cache coherence traffic is generated to ensure that the changes made to a block by a store operation are seen by all processors caching the block. In the case of false sharing, this traffic is unnecessary since only the processor executing the store references the word being written. | ||

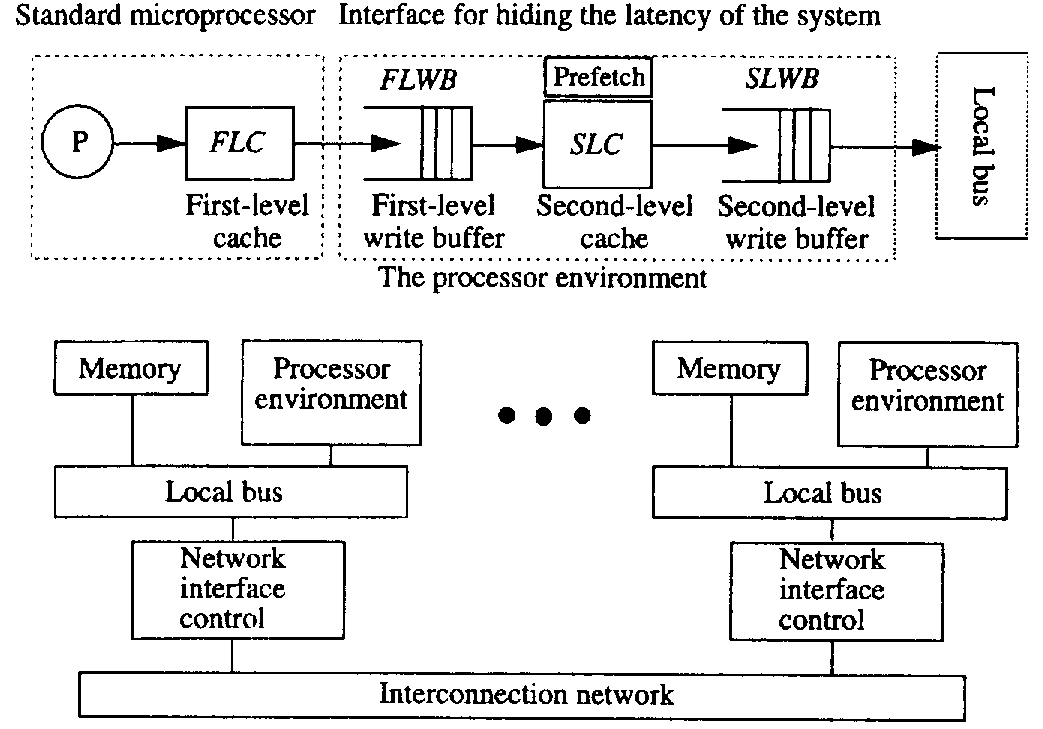

Increasing the cache block size increases the likelihood of two processors sharing data from the same block and hence false sharing is more likely to arise. Large blocks can also waste cache capacity. For example, a large block may contain one word which is frequently referenced and several other words which are not in use. Assuming a least recently used (LRU) replacement policy, the entire block will be retained even though only a portion of the block’s data is actually in use. If this large block were replaced with two smaller blocks, one of them could be evicted to make room for more active data. Sequential prefetching can take advantage of spatial locality without introducing some of the problems associated with large cache blocks. The simplest sequential prefetching schemes are variations upon the one block lookahead (OBL) approach which initiates a prefetch for block b+1 when block b is accessed. Prefetch-on-miss initiates the prefetch for block b+1 only when an access to block b misses, whereas tagged prefetch keeps a tag bit with each block and also initiates a prefetch when a prefetched block is referenced for the first time. The reason prefetch-on-miss is less effective is illustrated in Figure 3, where the behavior of each algorithm when accessing three contiguous blocks is shown. Here, it can be seen that a strictly sequential access pattern will result in a cache miss for every other cache block when the prefetch-on-miss algorithm is used but this same access pattern results in only one cache miss when employing a tagged prefetch algorithm. | |||
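The difference between the two one block lookahead variants can be checked with a small simulation. The following C sketch is an idealized toy model, not a description of any real cache: it assumes unlimited cache capacity and that every prefetch completes before the next access, and the names <code>count_misses</code>, <code>obl_policy</code> and <code>MAX_BLOCKS</code> are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_BLOCKS 64   /* hypothetical bound for this toy model */

typedef enum { PREFETCH_ON_MISS, TAGGED_PREFETCH } obl_policy;

/* Counts demand misses for a strictly sequential walk over blocks 0..n-1.
   Idealized: unlimited capacity, and each prefetch arrives before the
   next access is made. */
int count_misses(obl_policy policy, int n)
{
    assert(n >= 0 && n < MAX_BLOCKS);
    bool present[MAX_BLOCKS + 1];
    bool tag[MAX_BLOCKS + 1];   /* false = prefetched, not yet referenced */
    memset(present, 0, sizeof present);
    memset(tag, 0, sizeof tag);
    int misses = 0;

    for (int b = 0; b < n; b++) {
        bool trigger = false;
        if (!present[b]) {
            /* Demand miss: fetch block b; both policies prefetch b+1. */
            misses++;
            present[b] = true;
            tag[b] = true;
            trigger = true;
        } else if (policy == TAGGED_PREFETCH && !tag[b]) {
            /* First reference to a prefetched block: tagged prefetch
               sets the tag bit and prefetches again. */
            tag[b] = true;
            trigger = true;
        }
        if (trigger && !present[b + 1]) {
            present[b + 1] = true;   /* prefetch block b+1 */
            tag[b + 1] = false;
        }
    }
    return misses;
}
```

For a sequential walk over eight blocks, this model reports a miss on every other block under prefetch-on-miss and a single miss under tagged prefetch, which matches the behavior described in the text.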

==='''Prefetching on Multiprocessors'''=== | ==='''Prefetching on Multiprocessors'''=== | ||

| Line 50: | Line 69: | ||

A prefetch request first checks the cache to see whether the line is already present. If so, the prefetch is discarded. Otherwise the prefetch is issued to the memory system. When the prefetch response returns to the processor, it is placed in the cache. If a processor references a location it has prefetched before the result has returned, the reference request is combined with the prefetch request so that a duplicate request is not sent out and the reference completes as soon as the prefetch result returns. The prefetch technique discussed imposes several requirements on the memory system. Most importantly, the architecture requires hardware coherent caches. In addition, the location to be prefetched needs to be cacheable. Also, to be effective for writes, prefetching requires an invalidation-based coherence scheme. In update-based schemes, it is difficult to partially service a write operation without making the new value available to other processors, which results in the write being performed. The strengths and weaknesses of hardware-controlled non-binding prefetching are discussed in the next subsection. | A prefetch request first checks the cache to see whether the line is already present. If so, the prefetch is discarded. Otherwise the prefetch is issued to the memory system. When the prefetch response returns to the processor, it is placed in the cache. If a processor references a location it has prefetched before the result has returned, the reference request is combined with the prefetch request so that a duplicate request is not sent out and the reference completes as soon as the prefetch result returns. The prefetch technique discussed imposes several requirements on the memory system. Most importantly, the architecture requires hardware coherent caches. In addition, the location to be prefetched needs to be cacheable. Also, to be effective for writes, prefetching requires an invalidation-based coherence scheme. 
In update-based schemes, it is difficult to partially service a write operation without making the new value available to other processors, which results in the write being performed. The strengths and weaknesses of hardware-controlled non-binding prefetching are discussed in the next subsection. | ||

=='''Evolution of Prefetching'''== | |||

During the 1990s, one of the major developments in the computer industry was the very rapid increase in the speed of processors.<ref>http://www.multicoreinfo.com/prefetching-multicore-processors/</ref> Unfortunately, main memory access times did not improve at nearly the same rate, which widened the gap between processor speed and memory speed and made a cache hit far cheaper than an access to RAM. Suffering a cache miss and accessing memory during this period threatened to undermine the gains that were being achieved with these new processors. Thus, new techniques were required to keep data as close to the local processor as possible. Prefetching helped significantly with this issue. With a good algorithm in place to identify blocks of memory that are likely to be needed in the near future but are not currently stored in the cache, much of the latency of fetching data from main memory can be hidden and performance improved. We will explore some of the architectures utilizing prefetching and also discuss the pitfalls and future of this cache performance technique. | |||

==='''Prefetching Results and Analysis'''=== | |||

Numerous studies have been conducted to create benchmarks for various prefetching designs. One such study, conducted on the Intel Pentium III processor, reported encouraging results. | |||

===='''Intel Pentium III'''==== | |||

The Laboratory of Computer Architecture at the University of Texas<ref>http://lca.ece.utexas.edu/sponsors/dell_project1.html</ref> ran an analysis of the Pentium III processor and arrived at quite positive conclusions. Specifically, media instructions were isolated and analyzed in terms of their effectiveness. It was found that the prefetch instructions added to the media portion of the Intel chip improved execution speed by 13-15%, even without compiler optimizations. They found that the more an application becomes memory bound, as it often does with media-intensive applications, the more benefit can be derived from prefetching. They also found, however, that prefetches needed to be inserted very carefully and that it was often better to include prefetching only in memory-intensive applications. Indiscriminate prefetching may actually do more harm than good according to their study. | |||

They also discovered that many of the applications did not really benefit from prefetching, because a number of them already had relatively high cache hit ratios. If the cache hit ratio is fairly high, the overhead of prefetching can even degrade performance slightly. The best approach is to focus on situations with high cache miss rates. | |||

In summary, it seems that, while effective, prefetching needs to be used judiciously and that compiler optimizations are often more significant in terms of performance improvement. | |||

=='''Prefetching Disadvantages'''== | |||

In time, however, with the turn of the century, a number of problems and challenges began to emerge with prefetching. | |||

One common issue with prefetching has always been the increased complexity and overhead of handling the prefetching algorithms. There is great risk that this overhead can outweigh any benefits if the prefetching algorithm is not accurate, either fetching too early or fetching too late to be effective (as discussed in prior sections). Performance must be improved significantly to counterbalance this overhead and complexity or the efforts are not worthwhile. | |||

Additional issues, for example, came about with the increasing popularity of multicore architectures. In a single core architecture, prefetching requests are able to originate simply from one core. With multiple cores, prefetching requests can originate from a variety of different cores. This puts additional stress on memory to not only deal with regular prefetch requests but also to handle prefetch from different sources, thus greatly increasing the overhead and complexity of logic. Additionally, coherence algorithms must account not only for typical sequential consistency issues, but also account for the possibility of data in yet another location, the location of prefetched data. Flushing data becomes significantly more complicated and cumbersome. | |||

Lastly, and most importantly, there is the following ongoing conflict: if prefetched data is stored in the data cache, then cache conflicts (or cache pollution; see [[#Definitions]]) can become a significant burden. This is because the current and predicted sets of data must exist in the cache at the same time. Without prefetching, this space could be used for the working data itself, effectively increasing the cache size. One solution is to add a separate hardware buffer for prefetched data so that it does not occupy cache space. This, however, consumes precious chip area at a time when cost pressures in the PC world were becoming more acute. | |||

=='''Prefetching Improvements'''== | |||

Fortunately a number of techniques have evolved to address many of the concerns about prefetching, thus making it significantly more effective. | |||

==='''Cache Sizing'''=== | |||

Increasing the dimensions of the cache greatly improves the performance of prefetching. This can be done either through increasing the cache size itself or increasing set associativity. A small cache size is a significant problem with prefetching because conflict misses increase dramatically. Any benefit you might derive from having fewer cache lookup misses is negated by this higher incidence of conflict misses. The following study assembled some benchmarks for a given SPARC (see [[#Definitions]]) processor that utilized prefetching. The following graph shows various levels of cache misses based on various cache sizes. With 16K and 32K cache sizes, prefetching reduces cache misses to fairly close to 0, while the number of misses without prefetching is rather high. | |||

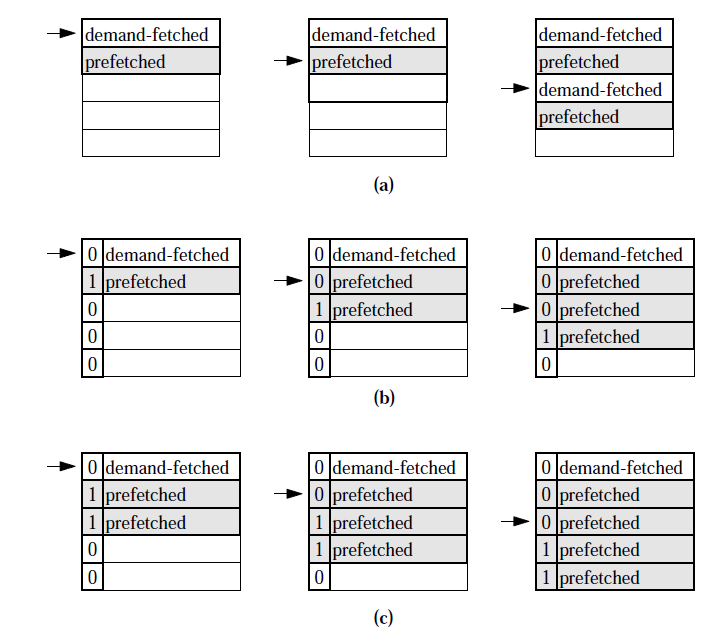

For example, the following study of a BioInformatics application showed the effects of prefetching cache misses based on the size of the L1 cache. As can be seen, cache size has a major impact on the miss rate and, subsequently, performance in the system.<ref>http://www.nikolaylaptev.com/master/classes/cs254.pdf</ref> | |||

[[File:Cachesize.jpg]] | |||

==='''Improved Prefetch Timing'''=== | |||

Improving prefetch timing algorithms can also significantly improve performance and prevent data from being prefetched too late or too early to be useful. One possibility in this case is to implement a technique called Aggressive Prefetching<ref>http://static.usenix.org/events/hotos05/prelim_papers/papathanasiou/papathanasiou_html/paper.html</ref>. Traditionally, prefetch algorithms have fetched only incremental amounts of data for which confidence is high. The more aggressive approach, however, seeks to search more deeply in the reference stream to bring in a wider array of data that may be used in the near future. This does, however, require a machine with more resources to avoid negatively impacting performance. | |||

Greater amounts of processing power and storage have made this more aggressive approach possible. While older architectures with slower processors and less memory might have had issues with larger volumes of data being prefetched, this is much less of a concern now. Varying performance from a variety of devices also changes the need to be conservative in prefetching. Aggressive prefetching can take advantage of some latencies being higher than others, whereas older, more conservative architectures had more consistent levels of bandwidth throughout. | |||

==='''Memory Pattern Recognition'''=== | |||

Effective memory pattern recognition algorithms can go a long way towards resolving issues with prefetching values unnecessarily (i.e., ones that might not be used within a useful timeframe). One example is the stride technique. For example, memory addresses that are sequential in nature, like the elements of an array, would all be brought in together as a unit since more than likely most or all of these elements will be utilized. This is an improvement over a more conservative prefetcher that may just assume memory located together will be used. The more conservative version has been known to have a much higher incidence of bringing in unnecessary blocks of data. | |||

= | Another variation of this is the linked memory reference pattern. Linked lists (see [[#Definitions]]) and tree structures are most often associated with this type. Generally, occurrences of code similar to ptr = ptr->next are considered as one structure and subsequently brought into memory together.<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref> | ||

==''' | For example, instead of: | ||

while (p) { | |||

work(p) | |||

p = next(p) | |||

} | |||

the linked memory reference pattern may work in the following way: | |||

while (p) { | |||

prefetch(next(p)) | |||

work(p) | |||

p = next(p) | |||

} | |||

Here, the node that follows p is requested ahead of time, while work(p) is still executing, so it is closer to the processor when the loop reaches it. This can be more efficient than the initial loop. | |||
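The same idea can be written as compilable C. This is a minimal sketch assuming a GCC- or Clang-compatible compiler for <code>__builtin_prefetch</code>; the <code>node</code> type and the function <code>sum_list_prefetch</code> are hypothetical, and adding up the values stands in for <code>work(p)</code>.

```c
#include <stddef.h>

/* Hypothetical singly linked list node used only for this example. */
typedef struct node {
    int value;
    struct node *next;
} node;

/* Traverses the list, hinting the next node before working on the current
   one. __builtin_prefetch is only a hint and does not fault, so passing a
   NULL next pointer at the end of the list is harmless. */
long sum_list_prefetch(const node *p)
{
    long total = 0;
    while (p) {
        __builtin_prefetch(p->next, 0, 3);  /* request the following node */
        total += p->value;                  /* stands in for work(p) */
        p = p->next;
    }
    return total;
}
```

The benefit depends on how much work is done per node: if <code>work(p)</code> is very short, the prefetch has little time to complete before the next node is needed.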

==='''Markov Prefetcher'''=== | |||

This particular prefetch technique<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref> is built around the idea of remembering past sequences of cache misses and, using this history, bringing in the subsequent misses in the sequence ahead of time. Generally, a rather large table containing previous miss addresses is used. This table is maintained in a manner similar to a cache. Sequences stored in this table are then used to predict future misses that prefetching can cover. | |||

==='''Stealth Prefetching'''=== | |||

A more modern and innovative technique, stealth prefetching<ref>http://arnetminer.org/publication/stealth-prefetching-53889.html</ref>, attempts to deal with issues surrounding the increase of interconnection bandwidth and increased memory latency. It also helps to avoid the problem of using bandwidth to prematurely access shared data that will later result in state downgrades. Basically, this technique tracks regions of memory at a coarse granularity to identify which segments are heavily used by multiple processors and which ones are not. Lines that are not being shared by multiple processors are more aggressively brought nearer to the local processor, thus improving performance by as much as 20%. With the increased use of fast multiprocessors, this alleviates some of the bandwidth bottlenecks. | |||

=='''Definitions'''== | |||

1) '''Linked List''' - In computer science, a linked list is a data structure consisting of a group of nodes which together represent a sequence. Under the simplest form, each node is composed of a datum and a reference (in other words, a link) to the next node in the sequence; more complex variants add additional links. This structure allows for efficient insertion or removal of elements from any position in the sequence. | |||

2) '''Cache Pollution''' - Cache pollution describes situations where an executing computer program loads data into CPU cache unnecessarily, thus causing other needed data to be evicted from the cache into lower levels of the memory hierarchy, potentially all the way down to main memory, thus causing a performance hit. | |||

3) '''SPARC''' - SPARC (from Scalable Processor Architecture) is a RISC instruction set architecture (ISA) developed by Sun Microsystems and introduced in mid-1987. | |||

=='''References'''== | =='''References'''== | ||

| Line 59: | Line 156: | ||

<references></references> | <references></references> | ||

==See Also== | |||

http://cdmetcalf.home.comcast.net/~cdmetcalf/papers/prefetch/node6.html | |||

http://www.multicoreinfo.com/prefetching-multicore-processors/ | http://www.multicoreinfo.com/prefetching-multicore-processors/ | ||

http:// | http://www.cs.cmu.edu/~tcm/thesis/subsubsection2_10_1_4_1.html | ||

http:// | http://software.intel.com/en-us/articles/how-to-choose-between-hardware-and-software-prefetch-on-32-bit-intel-architecture/ | ||

http://www. | http://www.futurechips.org/chip-design-for-all/prefetching.html | ||

Latest revision as of 00:46, 2 April 2013

Prefetching and Consistency Models

Introduction

Buffering and pipelining are attractive techniques for hiding the latency of memory accesses in large scale shared-memory multiprocessors. However, the unconstrained use of these techniques can result in an intractable programming model for the machine. Consistency models provide more tractable programming models by introducing various restrictions on the amount of buffering and pipelining allowed. Several memory consistency models have been proposed in the literature. The strictest model is sequential consistency, which requires the execution of a parallel program to appear as some interleaving of the execution of the parallel processes on a sequential machine. Sequential consistency imposes severe restrictions on buffering and pipelining of memory accesses. One of the least strict models is release consistency (RC), which allows significant overlap of memory accesses provided that synchronization accesses are identified and classified into acquires and releases. Other relaxed models that have been discussed in the literature are processor consistency (PC), weak consistency (WC), and data-race-free-0 (DRF0). These models fall between the sequential and release consistency models in terms of strictness.

Prefetching

The delay constraints imposed by a consistency model limit the amount of buffering and pipelining among memory accesses. Prefetching provides one method for increasing performance by partially proceeding with an access that is delayed due to consistency model constraints. Prefetches can be initiated either by an explicit fetch operation within a program, by logic that monitors the processor’s referencing pattern to infer prefetching, or by a combination of these approaches. However they are initiated, prefetches must be issued in a timely manner. If a prefetch is issued too early there is a chance that the prefetched data will displace other useful data from the higher levels of the memory hierarchy or be displaced itself before use. If the prefetch is issued too late, it may not arrive before the actual memory reference and thereby introduce processor stall cycles. Software prefetching issues fetches only for data that is likely to be used, while hardware schemes tend to fetch data in a more speculative manner.

The decision of where to place prefetched data in the memory hierarchy is a fundamental design decision. Clearly, data must be moved into a higher level of the memory hierarchy to provide a performance benefit. The majority of schemes place prefetched data in some type of cache memory. Other schemes place prefetched data in dedicated buffers to protect the data from premature cache evictions and prevent cache pollution. When prefetched data are placed into named locations, such as processor registers or memory, the prefetch is said to be binding and additional constraints must be imposed on the use of the data. Finally, multiprocessor systems can introduce additional levels into the memory hierarchy which must be taken into consideration.

Data can be prefetched in units of single words, cache blocks, contiguous blocks of memory or program data objects. Often, the amount of data fetched is determined by the organization of the underlying cache and memory system. Cache blocks may be the most appropriate size for uniprocessors and Symmetric Multiprocessors (SMPs), while larger memory blocks may be used to amortize the cost of initiating a data transfer across an interconnection network of a large, distributed memory multiprocessor.

===Description<ref>Two Techniques to Enhance the Performance of Memory Consistency Models by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy</ref>===

Prefetching can be classified based on whether it is binding or non-binding, and whether it is controlled by hardware or software. With a binding prefetch, the value of a later reference (e.g., a register load) is bound at the time the prefetch completes. This places restrictions on when a binding prefetch can be issued, since the value will become stale if another processor modifies the location during the interval between prefetch and reference. Hardware cache-coherent architectures, such as the Stanford DASH multiprocessor, can provide prefetching that is non-binding. With a non-binding prefetch, the data is brought close to the processor (e.g., into the cache) and is kept coherent until the processor actually reads the value. Thus, non-binding prefetching does not affect correctness for any of the consistency models and can be used simply as a performance boosting technique. The technique described in this section assumes hardware-controlled non-binding prefetch.

Prefetching can enhance performance by partially servicing large latency accesses that are delayed due to consistency model constraints. For a read operation, a read prefetch can be used to bring the data into the cache in a read-shared state while the operation is delayed due to consistency constraints. Since the prefetch is non-binding, we are guaranteed that the read operation will return a correct value once it is allowed to perform, regardless of when the prefetch completed. In the majority of cases, we expect the result returned by the prefetch to be the correct result. The only time the result may be different is if the location is written to between the time the prefetch returns the value and the time the read is allowed to perform. In this case, the prefetched location would either get invalidated or updated, depending on the coherence scheme. If invalidated, the read operation will miss in the cache and access the new value from the memory system, as if the prefetch never occurred. In the case of an update protocol, the location is kept up-to-date, thus providing the new value to the read operation.

For a write operation, a read-exclusive prefetch can be used to acquire exclusive ownership of the line, enabling the write to that location to complete quickly once it is allowed to perform. A read-exclusive prefetch is only possible if the coherence scheme is invalidation-based. Similar to the read prefetch case, the line is invalidated if another processor writes to the location between the time the read-exclusive prefetch completes and the actual write operation is allowed to proceed. In addition, exclusive ownership is surrendered if another processor reads the location during that time.
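The behaviour described above can be sketched with a toy model. This is only an illustration under stated assumptions, not the mechanism of any particular machine: the class `ToySystem`, its method names, the two line states and the write-through memory are all hypothetical simplifications chosen to keep the example short. It shows that a non-binding prefetch never changes the value a later read returns, and that a prefetched line is lost (or ownership surrendered) when another processor touches the location first.

```python
class ToySystem:
    """Toy invalidation-based coherence model (hypothetical, for illustration only)."""

    def __init__(self, num_procs):
        self.memory = {}                                  # address -> value
        self.caches = [dict() for _ in range(num_procs)]  # address -> (state, value)
        self.misses = [0] * num_procs                     # demand misses per processor

    def _invalidate_others(self, proc, addr):
        for other, cache in enumerate(self.caches):
            if other != proc:
                cache.pop(addr, None)

    def _downgrade_others(self, proc, addr):
        for other, cache in enumerate(self.caches):
            if other != proc and addr in cache:
                cache[addr] = ("shared", cache[addr][1])

    def read_prefetch(self, proc, addr):
        """Non-binding read prefetch: bring the line in shared state, return nothing."""
        if addr not in self.caches[proc]:
            self._downgrade_others(proc, addr)
            self.caches[proc][addr] = ("shared", self.memory.get(addr, 0))

    def read_exclusive_prefetch(self, proc, addr):
        """Non-binding read-exclusive prefetch: obtain ownership ahead of a delayed write."""
        self._invalidate_others(proc, addr)
        self.caches[proc][addr] = ("exclusive", self.memory.get(addr, 0))

    def read(self, proc, addr):
        if addr not in self.caches[proc]:
            self.misses[proc] += 1
            self.read_prefetch(proc, addr)   # a demand fetch takes the same path
        return self.caches[proc][addr][1]

    def write(self, proc, addr, value):
        line = self.caches[proc].get(addr)
        if line is None or line[0] != "exclusive":
            self.misses[proc] += 1           # ownership must still be acquired
            self._invalidate_others(proc, addr)
        self.memory[addr] = value            # write-through keeps the model simple
        self.caches[proc][addr] = ("exclusive", value)
```

For instance, if processor 0 issues `read_prefetch(0, 0x20)` and processor 1 then executes `write(1, 0x20, 7)`, the later `read(0, 0x20)` misses and returns 7, exactly as if the prefetch had never occurred.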

===Software Implementation<ref>Fu, J.W.C. and J.H. Patel, “Data Prefetching in Multiprocessor Vector Cache Memories,” Proc. 18th International Symposium on Computer Architecture, Toronto, Ont., Canada, May 1991, p. 54-63.</ref>===

Most contemporary microprocessors support some form of fetch instruction which can be used to implement prefetching. The implementation of a fetch can be as simple as a load into a processor register that has been hardwired to zero. Slightly more sophisticated implementations provide hints to the memory system as to how the prefetched block will be used. Such information may be useful, for example, in multiprocessors where data can be prefetched in different sharing states. Although particular implementations will vary, all fetch instructions share some common characteristics. Fetches are non-blocking memory operations and therefore require a lockup-free cache that allows prefetches to bypass other outstanding memory operations in the cache.

Prefetches are typically implemented in such a way that fetch instructions cannot cause exceptions. Exceptions are suppressed for prefetches to ensure that they remain an optional optimization feature that does not affect program correctness or initiate large and potentially unnecessary overhead, such as page faults or other memory exceptions. The task of choosing where in the program to place a fetch instruction relative to the matching load or store instruction is known as prefetch scheduling.

Fetch instructions may be added by the programmer or by the compiler during an optimization pass. Unlike many optimizations which occur too frequently in a program or are too tedious to implement by hand, prefetch scheduling can often be done effectively by the programmer. Whether hand-coded or automated by a compiler, prefetching is most often used within loops responsible for large array calculations. Such loops provide excellent prefetching opportunities because they are common in scientific codes, exhibit poor cache utilization and often have predictable array referencing patterns. By establishing these patterns at compile-time, fetch instructions can be placed inside loop bodies so that data for a future loop iteration can be prefetched during the current iteration.

As an example of how loop-based prefetching may be used, consider the code segment shown in Figure 1a. This loop calculates the inner product of two vectors, a and b, in a manner similar to the innermost loop of a matrix multiplication calculation. If we assume a four-word cache block, this code segment will cause a cache miss every fourth iteration. We can attempt to avoid these cache misses by adding the prefetch directives shown in Figure 1b. Note that this figure is a source code representation of the assembly code that would be generated by the compiler.

The code segment given in Figure 1c removes most cache misses and unnecessary prefetches but further improvements are possible. Note that cache misses will occur during the first iteration of the loop since prefetches are never issued for the initial iteration. Unnecessary prefetches will occur in the last iteration of the unrolled loop where the fetch commands attempt to access data past the loop index boundary. Both of the above problems can be remedied by using software pipelining techniques as shown in Figure 1d.<ref>Luk, C-K. and T.C. Mowry, “Compiler-based Prefetching for Recursive Data Structures,” Proc. 7th Conf. on Architectural Support for Programming Languages and Operating Systems, Cambridge, MA, October 1996, p. 222-233. </ref>
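The effect of loop-based prefetching on the inner product loop can be approximated with a small simulation. The sketch below is not a reproduction of Figures 1a to 1d; it is a toy model that assumes an unbounded cache, prefetches that always arrive in time, block-aligned vectors, and one fetch per four-word block (the names `inner_product`, `touch`, `fetch`, `base_a` and `base_b` are hypothetical). It counts demand misses and issued prefetches so the two problems noted above, the cold misses in the first iteration and the fetches past the end of the vectors, become visible.

```python
WORDS_PER_BLOCK = 4  # four-word cache block, as assumed in the text


def inner_product(a, b, prefetch=False, base_a=0, base_b=1 << 20):
    """Return (result, demand_misses, prefetches_issued) for a toy unbounded cache.

    base_a and base_b are made-up word addresses that keep the two vectors
    in disjoint, block-aligned address ranges.
    """
    cached_blocks = set()
    misses = 0
    prefetches = 0

    def touch(addr):
        # Demand access: count a miss if the block is not cached yet.
        nonlocal misses
        block = addr // WORDS_PER_BLOCK
        if block not in cached_blocks:
            misses += 1
            cached_blocks.add(block)

    def fetch(addr):
        # Prefetch: assumed to complete before the data is referenced.
        nonlocal prefetches
        prefetches += 1
        cached_blocks.add(addr // WORDS_PER_BLOCK)

    ip = 0
    for i in range(len(a)):
        if prefetch and i % WORDS_PER_BLOCK == 0:
            # Once per block, fetch the block needed four iterations from now.
            fetch(base_a + i + WORDS_PER_BLOCK)
            fetch(base_b + i + WORDS_PER_BLOCK)
        touch(base_a + i)
        touch(base_b + i)
        ip += a[i] * b[i]
    return ip, misses, prefetches
```

With two 16-element vectors, the loop without prefetching misses on every block of both vectors (8 misses). With prefetching, only the two cold misses of the first iteration remain, and 8 prefetches are issued, the last two of which reach past the end of the vectors.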

Hardware Implementation

====Simulated Processing Node Architecture<ref>Gornish, E.H., E.D. Granston and A.V. Veidenbaum, “Compiler-directed Data Prefetching in Multiprocessors with Memory Hierarchies,” Proc. International Conference on Supercomputing, Amsterdam, Netherlands, June 1990, p. 354-68.</ref>====

In contrast to software-controlled prefetching, the processors do not need to support specific prefetch instructions. The environment to which these processors are interfaced is shown in the figure below. According to the figure, the processing node consists of a processor, a first-level cache (FLC), a second-level cache (SLC), a first- and second-level write buffer (FLWB and SLWB), a local bus, a network interface controller, and a memory module. The FLC is a direct-mapped write-through cache with no allocation of blocks on write misses and is blocking on read misses. The SLC is a direct-mapped write-back cache. For both prefetching techniques, we only prefetch into the SLC. In addition, the SLWB keeps track of the outstanding requests (SLC read miss, prefetch and write requests). The SLC controller deals with all the complexities of the cache coherence protocol and the prefetching mechanism. The SLC snoops on the local bus for consistency actions: if an invalidation to a block residing in the SLC is detected, the copy of the block is invalidated in both the SLC and the FLC. Requests from the local bus have priority over those from the FLWB. These interferences with the processor accesses are tolerable because most accesses hit in the FLC.

<ref>Sequential Hardware Prefetching in Shared-Memory Multiprocessors by Fredrik Dahlgren and Michel Dubois</ref>

====Sequential Prefetching<ref>Data Prefetch Mechanisms by Steven P. VanderWiel and David J. Lilja</ref>====

Most (but not all) prefetching schemes are designed to fetch data from main memory into the processor cache in units of cache blocks. It should be noted, however, that multiple word cache blocks are themselves a form of data prefetching. By grouping consecutive memory words into single units, caches exploit the principle of spatial locality to implicitly prefetch data that is likely to be referenced in the near future. The degree to which large cache blocks can be effective in prefetching data is limited by the ensuing cache pollution effects. That is, as the cache block size increases, so does the amount of potentially useful data displaced from the cache to make room for the new block. In shared memory multiprocessors with private caches, large cache blocks may also cause false sharing which occurs when two or more processors wish to access different words within the same cache block and at least one of the accesses is a store. Although the accesses are logically applied to separate words, the cache hardware is unable to make this distinction since it operates only on whole cache blocks. The accesses are therefore treated as operations applied to a single object and cache coherence traffic is generated to ensure that the changes made to a block by a store operation are seen by all processors caching the block. In the case of false sharing, this traffic is unnecessary since only the processor executing the store references the word being written.

Increasing the cache block size increases the likelihood of two processors sharing data from the same block and hence false sharing is more likely to arise. Sequential prefetching can take advantage of spatial locality without introducing some of the problems associated with large cache blocks. The simplest sequential prefetching schemes are variations upon the one block lookahead (OBL) approach, which initiates a prefetch for block b+1 when block b is accessed. This differs from simply doubling the block size in that the prefetched block is treated separately with regard to cache replacement and coherence. For example, a large block may contain one word which is frequently referenced and several other words which are not in use. Assuming a least recently used (LRU) replacement policy, the entire block will be retained even though only a portion of the block’s data is actually in use. If this large block were replaced with two smaller blocks, one of them could be evicted to make room for more active data.

OBL implementations differ in which accesses to block b trigger the prefetch of block b+1. The prefetch-on-miss algorithm initiates the prefetch only when the access to block b misses in the cache, whereas the tagged prefetch algorithm also initiates it the first time a previously prefetched block is referenced. The reason prefetch-on-miss is less effective is illustrated in the accompanying figure, which shows the behavior of each algorithm when accessing three contiguous blocks. A strictly sequential access pattern results in a cache miss for every other cache block when the prefetch-on-miss algorithm is used, but the same access pattern results in only one cache miss when a tagged prefetch algorithm is employed.
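The difference between the two OBL variants can be checked with a few lines of simulation. In this sketch, prefetch-on-miss fetches block b+1 only when block b misses, while tagged prefetch also fetches b+1 on the first reference to a block that was itself prefetched. The function name `sequential_misses` and the policy strings are hypothetical, and the model assumes an unbounded cache and prefetches that always arrive before the next access.

```python
def sequential_misses(num_blocks, policy):
    """Count demand misses for one sequential pass over blocks 0..num_blocks-1.

    policy is "prefetch-on-miss" or "tagged" (labels chosen for this sketch).
    """
    cache = set()    # blocks currently in the cache
    tagged = set()   # prefetched blocks that have not been referenced yet
    misses = 0
    for b in range(num_blocks):
        if b not in cache:
            misses += 1
            cache.add(b)
            trigger = True                 # both policies prefetch on a demand miss
        else:
            # Only tagged prefetch reacts to the first hit on a prefetched block.
            trigger = (policy == "tagged") and (b in tagged)
            tagged.discard(b)
        if trigger and (b + 1) not in cache:
            cache.add(b + 1)               # one block lookahead
            tagged.add(b + 1)
    return misses
```

For three contiguous blocks, `sequential_misses(3, "prefetch-on-miss")` gives 2 and `sequential_misses(3, "tagged")` gives 1, which matches the miss-every-other-block versus single-miss behaviour described above.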

Prefetching on Multiprocessors

This subsection discusses the requirements that the prefetch technique imposes on a multiprocessor architecture. Let us first consider how the proposed prefetch technique can be incorporated into the processor environment. Assume the general case where the processor has a load and a store buffer. The usual way to enforce a consistency model is to delay the issue of accesses in the buffer until certain previous accesses complete. Prefetching can be incorporated in this framework by having the hardware automatically issue a prefetch (read prefetch for reads and read-exclusive prefetch for writes and atomic read-modify-writes) for accesses that are in the load or store buffer, but are delayed due to consistency constraints. A prefetch buffer may be used to buffer multiple prefetch requests. Prefetches can be retired from this buffer as fast as the cache and memory system allow.

A prefetch request first checks the cache to see whether the line is already present. If so, the prefetch is discarded. Otherwise the prefetch is issued to the memory system. When the prefetch response returns to the processor, it is placed in the cache. If a processor references a location it has prefetched before the result has returned, the reference request is combined with the prefetch request so that a duplicate request is not sent out and the reference completes as soon as the prefetch result returns.

The prefetch technique discussed imposes several requirements on the memory system. Most importantly, the architecture requires hardware coherent caches. In addition, the location to be prefetched needs to be cacheable. Also, to be effective for writes, prefetching requires an invalidation-based coherence scheme. In update-based schemes, it is difficult to partially service a write operation without making the new value available to other processors, which results in the write being performed. The strengths and weaknesses of hardware controlled non-binding prefetching are discussed in the next subsection.
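The handling of a prefetch request described above (discard on a cache hit, issue otherwise, and combine a later reference with an outstanding prefetch) can be written down as a small state model. This is a minimal sketch with hypothetical names (`PrefetchUnit`, `prefetch`, `reference`, `response`); the handling of a second prefetch to a line that is already outstanding is an assumption, since the text does not describe that case.

```python
class PrefetchUnit:
    """Toy model of prefetch issue and request combining (illustrative only)."""

    def __init__(self):
        self.cache = set()         # lines present in the cache
        self.outstanding = set()   # lines requested from memory, not yet returned
        self.memory_requests = 0   # requests actually sent to the memory system

    def prefetch(self, line):
        if line in self.cache:
            return "discarded"             # line already present
        if line in self.outstanding:
            return "already outstanding"   # assumption: no second request is sent
        self.outstanding.add(line)
        self.memory_requests += 1
        return "issued"

    def reference(self, line):
        if line in self.cache:
            return "hit"
        if line in self.outstanding:
            return "combined"              # waits for the prefetch; no duplicate request
        self.outstanding.add(line)
        self.memory_requests += 1
        return "miss"

    def response(self, line):
        # The memory system returns the line; it is placed in the cache.
        self.outstanding.discard(line)
        self.cache.add(line)
```

A reference that arrives while the prefetch is still in flight returns `"combined"` and leaves `memory_requests` unchanged, so only one request per line ever reaches the memory system.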

Evolution of Prefetching

During the 1990s, one of the major developments in the computer industry was the very rapid increase in the speed of processors.<ref>http://www.multicoreinfo.com/prefetching-multicore-processors/</ref> Unfortunately, main memory access speed did not increase at nearly the same rate, widening the gap between the processor and its cache on one side and RAM on the other. Having to suffer a cache miss and access memory during this period threatened to undermine all the gains that were being achieved with these new processors. Thus, new techniques were required to keep data as close to the local processor as possible. Prefetching helped significantly with this issue. With a good algorithm in place to identify blocks of memory that are likely to be needed in the near future but are not currently stored in the cache, much of the latency of retrieving data from main memory can be hidden and performance improved. We will explore some of the architectures utilizing prefetching and also give a discussion of the pitfalls and future of this cache performance technique.

Prefetching Results and Analysis

Numerous studies have been conducted to benchmark various prefetching designs. One such study, conducted on the Intel Pentium III processor, showed quite a bit of progress.

Intel Pentium III

The Laboratory of Computer Architecture at the University of Texas<ref>http://lca.ece.utexas.edu/sponsors/dell_project1.html</ref> ran an analysis of the Pentium III processor and arrived at quite positive conclusions. Specifically, media instructions were isolated and analyzed in terms of their effectiveness. It was found that the prefetch instructions added to the media portion of the Intel chip improved execution speed by 13-15%, even without compiler optimizations. They found that the more an application becomes memory bound, as it often does with media-intensive applications, the more benefit can be derived from prefetching. They also found, however, that prefetches needed to be inserted very carefully and that it was often better to include prefetching strategies only for memory-intensive applications. Indiscriminate prefetching may actually do more harm than good according to their study.

They also discovered that many of the applications did not really benefit from prefetching, because a number of applications already had relatively high cache hit ratios. If the cache hit ratio is fairly high, the overhead of prefetching can even degrade performance slightly. The best solution is to focus on situations with high cache miss rates.

In summary, it seems that, while effective, prefetching needs to be used judiciously and that compiler optimizations are often more significant in terms of performance improvement.

Prefetching Disadvantages

In time, however, with the turn of the century, a number of problems and challenges began to emerge with prefetching.

One common issue with prefetching has always been the increased complexity and overhead of handling the prefetching algorithms. There is great risk that this overhead can outweigh any benefits if the prefetching algorithm is not accurate, either fetching too early or fetching too late to be effective (as discussed in prior sections). Performance must be improved significantly to counterbalance this overhead and complexity or the efforts are not worthwhile.

Additional issues came about with the increasing popularity of multicore architectures. In a single core architecture, prefetching requests originate from only one core. With multiple cores, prefetching requests can originate from a variety of different cores. This puts additional stress on memory to not only deal with regular prefetch requests but also to handle prefetches from different sources, thus greatly increasing the overhead and complexity of the logic. Additionally, coherence algorithms must account not only for typical sequential consistency issues, but also for the possibility of data in yet another location, the location of prefetched data. Flushing data becomes significantly more complicated and cumbersome.

Lastly, and most importantly, there is the following ongoing conflict: if prefetched data is stored in the data cache, then cache conflict (or cache pollution (See #Definitions)) can become a significant burden. This is because the current and predictive sets of data must exist in the cache at the same time. Without prefetching, some of this additional space could be used simply to increase the effective cache size itself. One solution is to add a separate buffer for prefetched data so that it does not occupy cache space. This, however, requires extra hardware and utilizes precious CPU space at a time when cost pressures in the PC world were becoming more acute.

Prefetching Improvements

Fortunately a number of techniques have evolved to address many of the concerns about prefetching, thus making it significantly more effective.

Cache Sizing

Increasing the dimensions of the cache greatly improves the performance of prefetching. This can be done either by increasing the cache size itself or by increasing set associativity. A small cache size is a significant problem with prefetching because conflict misses increase dramatically. Any benefit that might be derived from having fewer cache lookup misses is negated by this higher incidence of conflict misses. The following study assembled some benchmarks for a given SPARC (See #Definitions) processor that utilized prefetching. The following graph shows the number of cache misses for various cache sizes. With 16K and 32K cache sizes, prefetching reduces cache misses to fairly close to 0, whereas the number of misses without prefetching is rather high.

For example, the following study of a bioinformatics application showed the effect of prefetching on cache misses as a function of the size of the L1 cache. As can be seen, cache size has a major impact on the miss rate and, subsequently, on the performance of the system.<ref>http://www.nikolaylaptev.com/master/classes/cs254.pdf</ref>

Improved Prefetch Timing

Improving prefetch timing algorithms can also significantly improve performance and prevent data from being prefetched too late or too early to be useful. One possibility in this case is to implement a technique called Aggressive Prefetching<ref>http://static.usenix.org/events/hotos05/prelim_papers/papathanasiou/papathanasiou_html/paper.html</ref>. Traditionally, prefetch algorithms have fetched only incremental amounts of data for which there is a high level of confidence. The more aggressive approach, however, seeks to search more deeply in the reference stream to bring in a wider array of data that may be used in the near future. This does, however, require a more resource-intensive machine to avoid negatively impacting performance.

Greater amounts of processing power and storage have made this more aggressive approach possible. While older architectures with slower processors and less memory might have had issues with larger volumes of data being prefetched, this is much less of a concern now. Varying performance across a variety of devices also changes the need to be conservative in prefetching. Aggressive prefetching can take advantage of some latencies being higher than others, whereas older, more conservative architectures had more consistent levels of bandwidth throughout.

Memory Pattern Recognition

Effective memory pattern recognition algorithms can go a long way towards resolving issues with prefetching values unnecessarily (i.e., ones that might not be used within a useful timeframe). One example is the stride technique. For example, memory addresses that are accessed at regular intervals, like the elements of an array, would be brought in together since more than likely most or all of these elements will be utilized. This is an improvement over a more conservative prefetcher that may just assume memory located together will be used. The more conservative version has been known to have a much higher incidence of bringing in unnecessary blocks of data.
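One simple way to recognise a stride pattern is to remember the previous address and the previous stride, and to predict the next address only once the same non-zero stride has been seen twice in a row. The sketch below follows that idea; it is a hypothetical minimal detector (the class `StrideDetector` and its single-stream design are assumptions for illustration) rather than the scheme of any specific processor.

```python
class StrideDetector:
    """Minimal single-stream stride detector (illustrative sketch)."""

    def __init__(self):
        self.last_addr = None     # address of the previous access
        self.last_stride = None   # difference between the two previous accesses

    def access(self, addr):
        """Record an access and return the address to prefetch, or None."""
        prediction = None
        if self.last_addr is not None:
            stride = addr - self.last_addr
            # Predict only when the same non-zero stride repeats.
            if stride != 0 and stride == self.last_stride:
                prediction = addr + stride
            self.last_stride = stride
        self.last_addr = addr
        return prediction
```

Walking an array of 8-byte elements at addresses 100, 108, 116 and 124 produces no prediction for the first two accesses and then predicts 124 and 132; an unrelated access such as 500 breaks the pattern and yields no prefetch.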

Another variation of this is the linked memory reference pattern. Linked lists (See #Definitions) and tree structures are most often associated with this type. Generally, occurrences of code similar to ptr = ptr->next are recognized as traversals of one structure, and the nodes that will be visited next are brought in ahead of time.<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref>

For example, instead of:

 while (p) {
     work(p)
     p = next(p)
 }

the linked memory reference pattern may work in the following way:

 while (p) {
     prefetch(next(p))
     work(p)
     p = next(p)
 }

Here, the node that follows p is prefetched while work(p) executes, so it is already closer to the processor when the next iteration needs it. This is more efficient than the initial loop.

Markov Prefetcher

This particular prefetch technique<ref>http://www.bergs.com/stefan/general/papers/general.pdf</ref> is built around the idea of remembering past sequences of cache misses and, using this history, bringing in the subsequent misses in the sequence. Generally, a rather large table containing previous miss addresses is used. This table is maintained in a similar manner to a cache. Sequences stored in this table are then used to help predict future sequences that may need to be prefetched.
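A rough sketch of the table-based idea follows. Each miss address is mapped to the addresses that missed immediately after it in the past, and the most frequent successors are returned as prefetch candidates. The class name `MarkovPrefetcher`, the `capacity` and `width` parameters, and the least-recently-updated eviction rule are all assumptions made for illustration; the source only states that the table is large and maintained much like a cache.

```python
from collections import Counter, OrderedDict


class MarkovPrefetcher:
    """Toy Markov-style miss predictor (illustrative sketch)."""

    def __init__(self, capacity=1024, width=2):
        self.table = OrderedDict()   # miss address -> Counter of following misses
        self.capacity = capacity     # maximum number of table entries
        self.width = width           # maximum predictions returned per miss
        self.prev_miss = None

    def on_miss(self, addr):
        """Record a miss and return the addresses predicted to miss next."""
        if self.prev_miss is not None:
            successors = self.table.setdefault(self.prev_miss, Counter())
            successors[addr] += 1
            self.table.move_to_end(self.prev_miss)
            if len(self.table) > self.capacity:
                self.table.popitem(last=False)   # evict the least recently updated entry
        self.prev_miss = addr
        entry = self.table.get(addr)
        if entry is None:
            return []
        return [a for a, _ in entry.most_common(self.width)]
```

After the miss sequence 1, 2, 3 has been observed once, a second miss on address 1 returns `[2]`, and the following miss on address 2 returns `[3]`.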

Stealth Prefetching

A more modern and innovative technique, stealth prefetching<ref>http://arnetminer.org/publication/stealth-prefetching-53889.html</ref>, attempts to deal with issues surrounding the increase of interconnection bandwidth and increased memory latency. It also helps avoid the problem of using bandwidth to prematurely access shared data that will later result in state downgrades. Basically, this technique tracks regions of memory at a coarse granularity to identify which segments are heavily used by multiple processors and which ones are not. Lines that are not being shared by multiple processors are more aggressively brought nearer to the local processor, thus improving performance by as much as 20%. With the increased use of fast multiprocessors, this alleviates some of the bandwidth bottlenecks.

Definitions

1) Linked List - In computer science, a linked list is a data structure consisting of a group of nodes which together represent a sequence. In the simplest form, each node is composed of a datum and a reference (in other words, a link) to the next node in the sequence; more complex variants add additional links. This structure allows for efficient insertion or removal of elements from any position in the sequence.

2) Cache Pollution - Cache pollution describes situations where an executing computer program loads data into CPU cache unnecessarily, thus causing other needed data to be evicted from the cache into lower levels of the memory hierarchy, potentially all the way down to main memory, thus causing a performance hit.

3) SPARC - SPARC (from Scalable Processor Architecture) is a RISC instruction set architecture (ISA) developed by Sun Microsystems and introduced in mid-1987.

References

<references></references>

See Also

http://cdmetcalf.home.comcast.net/~cdmetcalf/papers/prefetch/node6.html

http://www.multicoreinfo.com/prefetching-multicore-processors/

http://www.cs.cmu.edu/~tcm/thesis/subsubsection2_10_1_4_1.html

http://www.futurechips.org/chip-design-for-all/prefetching.html