CSC/ECE 506 Spring 2013/8b ap: Difference between revisions

(Created page with "=Update and adaptive coherence protocols on real architectures, and power considerations= ===Introduction=== In a [http://en.wikipedia.org/wiki/Shared_memory shared memory] -...") |

|||

| (56 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= | =Cache Coherence Protocols on Real Architectures= | ||

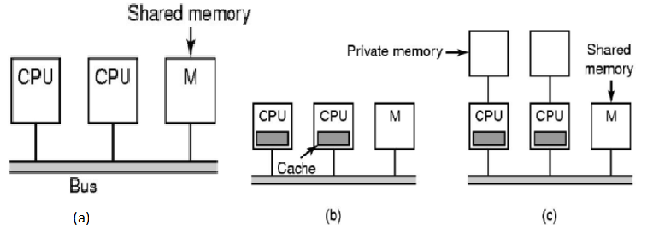

In parallel computer architectures, [http://en.wikipedia.org/wiki/Cache_coherence '''cache coherence'''] refers to the consistency of data that is stored throughout the caches on individual processors or throughout the shared memory. The problem here is that we have multiple caches on multiple processors. When an update to a single cache makes changes to a shared memory, you will need to have all the caches be coherent on the value change. This is better shown below. | |||

[[File:Shared memory.png]]<br /> | |||

'''Figure 1. Multiple Caches of Shared Resource''' | |||

< | There are two ways to maintain cache consistency<ref name="glasco">Glasco, D.B.; Delagi, B.A.; Flynn, M.J.; , "Update-based cache coherence protocols for scalable shared-memory multiprocessors," System Sciences, 1994. Proceedings of the Twenty-Seventh Hawaii International Conference on , vol.1, no., pp.534-545, 4-7 Jan. 1994 doi: 10.1109/HICSS.1994.323135 | ||

[http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=323135&isnumber=7709 Paper]</ref>, invalidation based and update based. | |||

Invalidation based protocol will purge the copies of the line from the other caches which results in a single copy of the line whereas updating forwards the write value to the other caches, after which all caches are consistent.One of the drawbacks of an invalidation-based protocol is that it incurs high number of coherence misses. To solve this, one can use a update coherence protocol, or a new type of protocol called adaptive coherence protocol. We shall discuss all three below. | |||

== Invalidation Coherence Protocols == | |||

Invalidate all remote copies of cache when a local cache block is updated. Under the invalidation scheme, updates are only propagated when data are read, and several updates can take place before communication is necessary. In the multiple-reader-single-write scheme described, this is potentially expensive. But, if the read/write ratio is sufficiently high, then the parallelism obtained by allowing multiple simultaneous readers offsets this cost. However, for multiple read after write command, there would be continuous misses and subsequent fetches for those misses. | |||

=== Write-Through Write Invalidate Caches === | |||

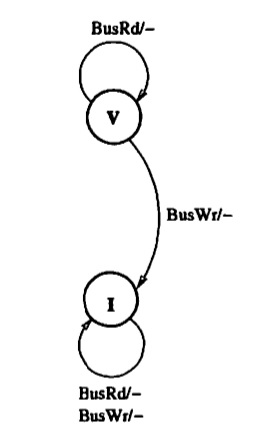

The state of a cache block copy of local processor can take one of the two | |||

states : | |||

Valid State: | |||

All processors can read safely. The cache block is valid and clean, i.e. the cached value is the same as that at a lower memory level | |||

Local processor can also write | |||

Invalid State: (not in cache) | |||

Block being invalidated. | |||

Block being replaced | |||

Any requests to the cache block will result into cache misses. | |||

The requests are: | |||

PrRd (Processor-Read) - processor-side request to read data from a cache block | |||

PrWr (Processor-Write) - request sent when processor wants to write into a cache block | |||

The Snooped Bus requests are: | |||

BusRd (Bus-Read) - When request that indicates there is a read request to a block made by a processor | |||

BusWr (Bus-Write) - Request that indicates there is a write request to a block made by a processor. In case of a write-through cache, the BusWr is a write-through to the main memory performed by another processor. | |||

In the beginning the state of the cache block is Invalid state. If a | |||

When a remote processor writes to its cache copy, all other cache copies become invalidated. When there is a processor-side read request, the processor suffers a cache miss. This results in a BusRd request on the bus. The memory block is fetched from main memory. The block goes to the valid state. When there is a PrWr request, then a BusWr command is sent on bus. Other caches that have the block invalidate their copy. The main memory (write-through) loads the correct value into the requested cache. Therefore, the state remains invalid. | |||

For the case, when the block is the valid state, on a PrRd, it is a cache hit since the block resides in the cache. The state remains valid. On a PrWr, if there is a cache hit, that means, no other cache block resides. Thus no bus snooping protocol has to be sent. | |||

On a BusRd, a cache block in the valid state remains in the valid state. While a block in the invalid state remains in the invalid state. | |||

On a BusWr, a cache block in the valid state gets into the invalid state and an invalid block remain in the invalid state. | |||

[[File:Wiki_invalidate_bus.jpg]]<br /> | |||

'''Figure 1. Write-through Write Invalidate''' | |||

==== Advantages ==== | |||

A processor can modify a cache block by invalidating other copies in other caches. Thus cache block that is being modifies resides only in one cache giving exclusive membership to that processor. | |||

=== Write Back Write Invalidate caches === | |||

• Processor / Cache Operations | |||

PrRd, PrWr, block Replace | |||

States | |||

Invalid, Valid (clean) - same as above | |||

Modified (dirty) - A block that is valid and has value written into; thus, is different from the one in main memory | |||

• Bus Transactions | |||

Bus Read (BusRd), Write-Back (BusWB) | |||

[[File:wiki_invalidate_states.jpg]]<br /> | |||

< | '''Figure 1. Write-through Write Invalidate States Bus Snooping Protocol''' | ||

==== Write-through Advantages : ==== | |||

When a processor continuously writes into the same cache block, only a single command is used to invalidate the cache blocks | |||

==== Disadvantage : ==== | |||

requires a high amount of bandwidth. | |||

==Update Coherence Protocol== | |||

===Introduction=== | |||

Update-based cache coherence protocols work by directly updating all the cache values in the system. This differs from the invalidation-based protocols because it achieves write propagation without having to invalidate data and thus not resulting in a cache miss. This saves on numerous coherence misses, time spent to correct the miss, and bandwidth usage. The update-based protocols we will be discussing in this section are | |||

* [http://en.wikipedia.org/wiki/Dragon_protocol Dragon Protocol] | |||

* [http://en.wikipedia.org/wiki/Firefly_protocol Firefly protocol]. | |||

===Dragon Protocol=== | ===Dragon Protocol=== | ||

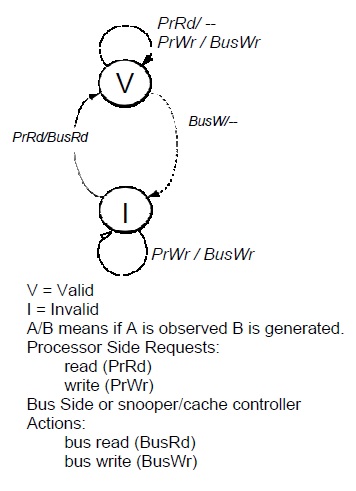

Dragon protocol saves on bandwidth by updating the specific words within the cache instead of the entire block. The caches use write allocate and write update policies. The Dragon Protocol is made up of four states and does not include an invalidation state. | |||

The | |||

* '''Modified (M)''' - cache block is exclusively owned, however it can be different from main memory | |||

* '''Exclusive (E)''' - cache block is clean and is only in one cache | |||

* '''Shared Modified (Sm)''' - cache block resides in multiple caches and is possible dirty | |||

* '''Shared Clean (Sc)''' - cache block resides in multiple caches and is clean | |||

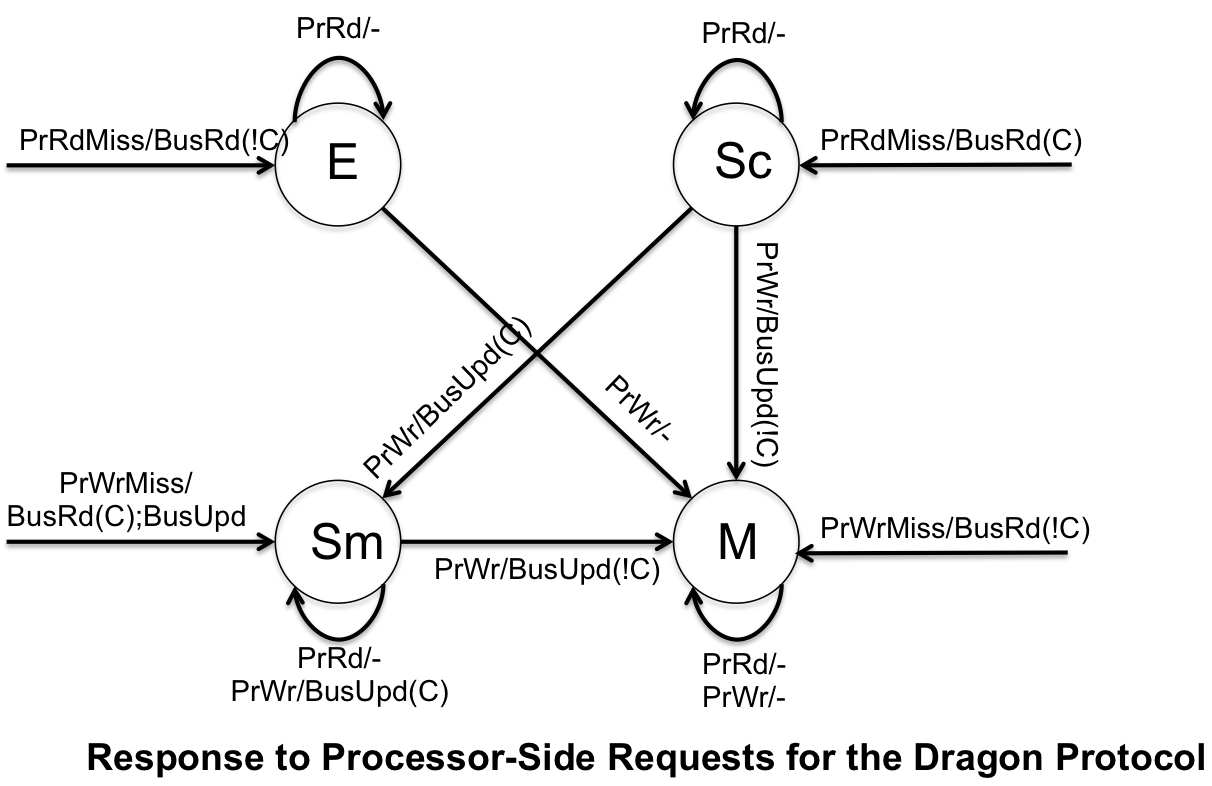

< | There is not an invalidation state, because if a block is cached then it assumed to be valid. However,it can differ from main memory. Below are the finite state machines for the processor-side calls and bus-side calls. Dragon protocol utilizes snoopy caches to appear as if it as a uniform memory space even though there are multiple processors. | ||

[[File:Dragon Protocol Processor-Side.png|550px|center]] | |||

<center> '''Figure 2. Dragon Protocol Processor-Side''' </center> | |||

< | [[File:Dragon Protocol Bus-Side.png|500px|center]] | ||

<center> '''Figure 3. Dragon Protocol Bus-Side''' </center> | |||

The Dragon Protocol is implemented in the [http://en.wikipedia.org/wiki/Cray_CS6400 Cray CS6400] (also know as the Xerox Dragon multiprocessor workstation). It was available with either 60Mhz or 85Mhz processors. The Xerox Dragon was designed to be a research numerous processors. | |||

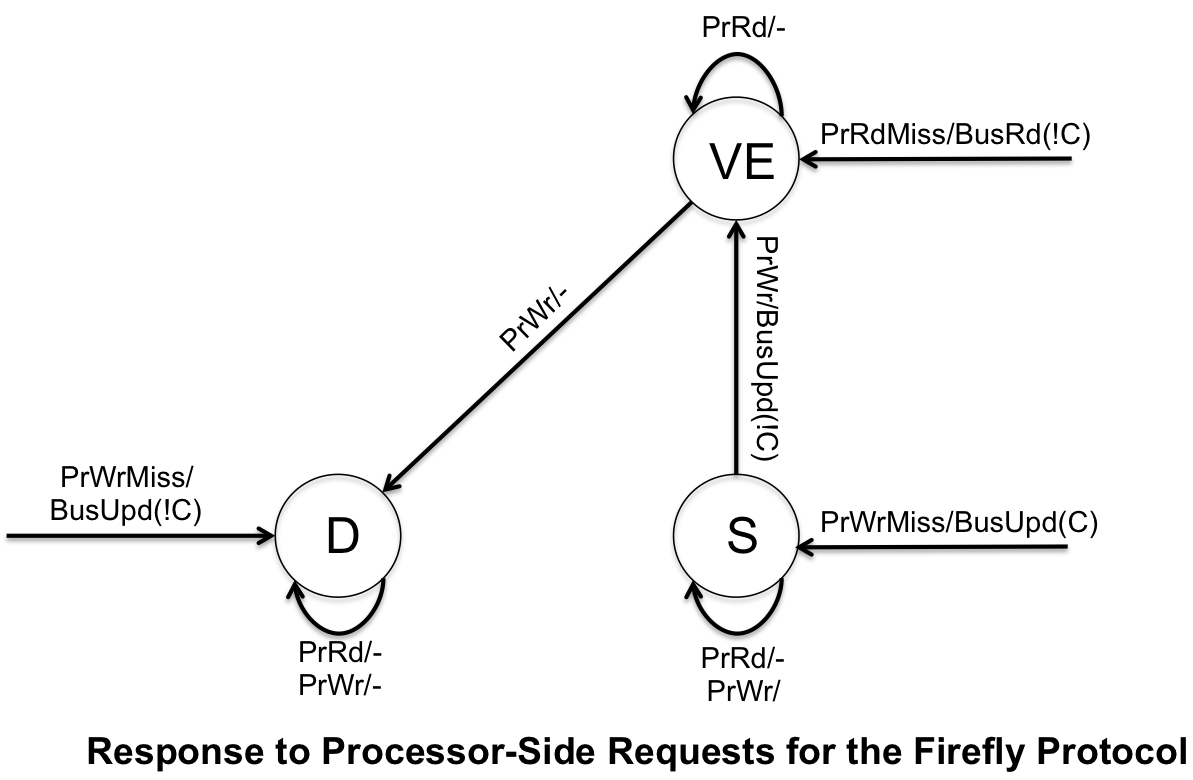

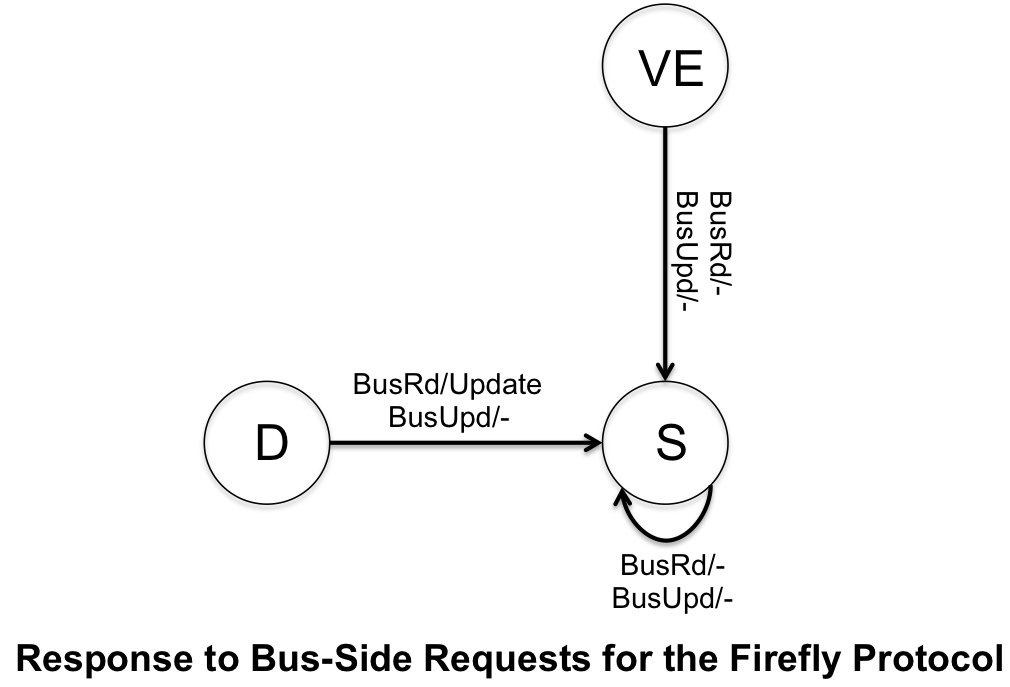

===Firefly Protocol=== | |||

Firefly protocol is another example of update coherence cache protocols. However, unlike the Dragon Protocol, it uses write-through policy (which writes all changes back to memory). The following states can be assigned to a block in this protocol. | |||

* '''Valid Exclusive (VE)''' - cache block is exclusively owned, cache block is clean | |||

* '''Dirty (D)''' - exclusive rights to the cache block, cache block is dirty | |||

* '''Shared (S)''' - cache block is shared but is not modified | |||

The Firefly Protocol uses a special bus technique called SharedLine to allow quick detection to copies of the block in other caches. It is similar to the COPIES_EXIST (C) and !COPIES_EXIST, and is shown that way in the finite state machines below. Similar to the Dragon protocol, there is no invalidation state because no cache blocks are ever invalidated. | |||

[[File:Firefly Protocol Processor-Side.png|550px|center]] | |||

<center> '''Figure 4. Firefly Protocol Processor-Side''' </center> | |||

[[File: | [[File:Firefly Protocol Bus-Side.png|550px|center]] | ||

< | <center> '''Figure 5. Firefly Protocol Bus-Side''' </center> | ||

< | |||

The Firefly protocol is used in the [http://en.wikipedia.org/wiki/DEC_Firefly DEC Firefly] multiprocessor workstation, developed by [http://en.wikipedia.org/wiki/Digital_Equipment_Corporation Digital Equipment Corporation]. The system is asymmetic and the cache is direct-mapped to support multiprocessing. The cache capicity was 16KB for the original [http://en.wikipedia.org/wiki/MicroVAX_78032 MicroVAX 78032] microprocessor were latter increased to 64KB when upgraded to the [http://en.wikipedia.org/wiki/CVAX#CVAX_78034 CVAX_78034] microprocessor. | |||

==== Advantages of Write Update Protocol ==== | |||

The simplest, most obvious and fastest. | |||

Also, for a bandwidth-restricted architecture, using write back caches does not prevent scalability. | |||

==== | ==== Disadvantages ==== | ||

• Multiple writes to the same word (no intervening read) need only one invalidate message but would require an update for each | |||

• Writes to same block in (usual) multi-word cache block require only one invalidate but would require multiple updates. Due to both spatial and temporal locality, previous cases occur often. | |||

• Bus bandwidth is a precious commodity in shared memory multi-processors | |||

• Experience has shown that invalidate protocols use significantly less bandwidth | |||

==Adaptive Coherence Protocols== | |||

=== | ===Introduction=== | ||

Even though there are clear advantages to using either update protocols or invalidate protocols, there are still disadvantages for each. In a write invalidate protocol, any update/write operation in a processor invalidates the shared cache blocks of other processors. This will force the other caches to do read requests such that the new data is retrieved. This tends to cause a high bus bandwidth and can be especially bad if there is few processors that frequently update the cache. Fortunately, write update protocols mitigate this issue. It will update all other caches at the same time it propagates an update itself. Unfortunately, this creates a different problem, there will sometime be unnecessary update to the cache on the other processors. This tends to increase conflict and capacity cache misses. | |||

Adaptive protocols tries to mitigate these problems. It will both tend to have some high bus traffic as well as some unnecessary updates. But, these can be mitigated based on how the adaptive algorithm switches between write-invalidate and write-update. There is also adaptive directory-based protocols, but these are not discussed here. | |||

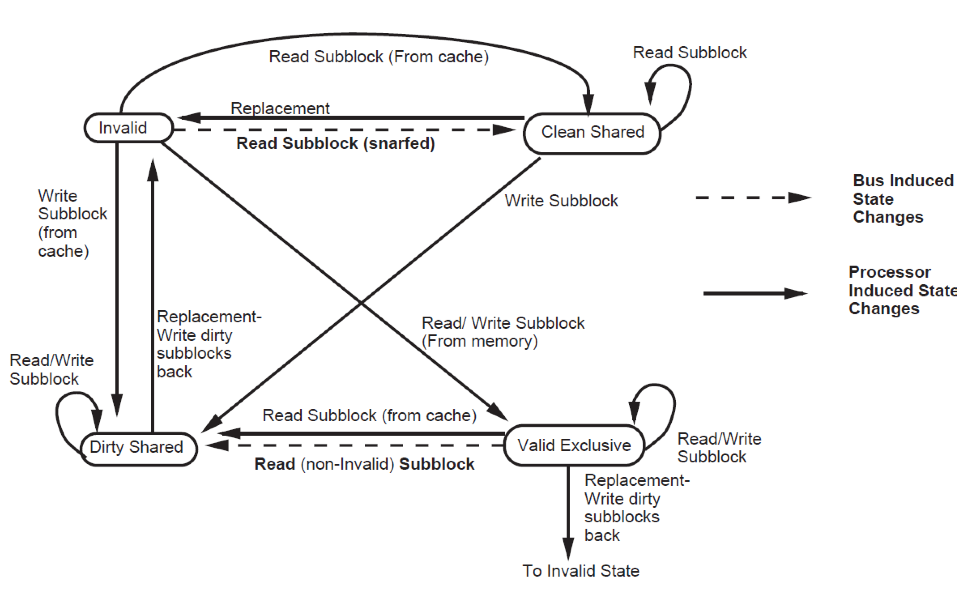

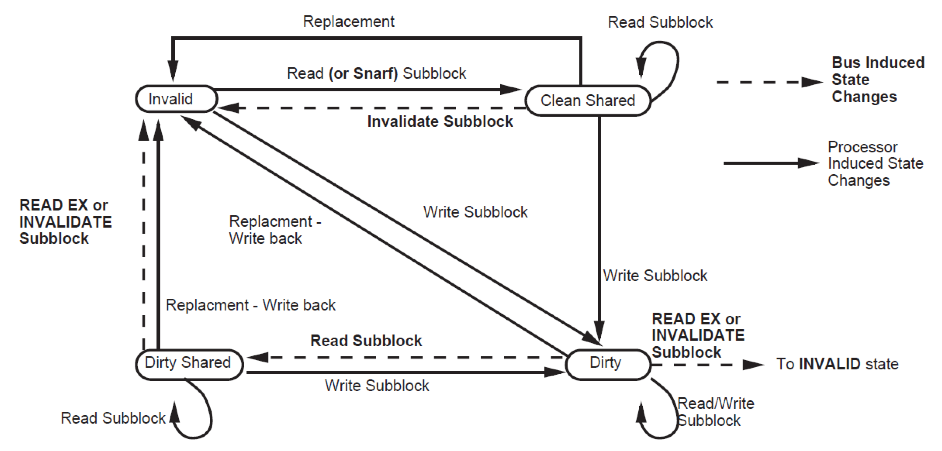

===Subblock protocol=== | ===Subblock protocol=== | ||

This snoopy-based protocol mitigate the features of [http://en.wikipedia.org/wiki/MESI_protocol Illinois MESI protocol] and write policies with subblock validation to take the advantages of both small and large cache block size by using subblock. <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | This snoopy-based protocol mitigate the features of [http://en.wikipedia.org/wiki/MESI_protocol Illinois MESI protocol] and write policies with subblock validation to take the advantages of both small and large cache block size by using subblock. <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | ||

'''Block states''' | |||

'''Invalid''': All subblocks are invalid | |||

'''Valid Exclusive''': All valid subblocks in this block are not present in any other caches. All subblocks that are clean shared may be written without a bus transaction. Any subblocks in the block may be invalid. There also may be Dirty blocks which must be written back upon replacement. | |||

'''Clean Shared''': The block contains subblocks that are either Clean Shares or Invalid. The block can be replaced without a bus transaction. | |||

'''Dirty Shared''': Subblocks in this block may be in any state. There may be Dirty blocks which must be written back on replacement.<ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | |||

| Line 122: | Line 147: | ||

<center> '''Figure 4. Finite state diagram of block''' </center> | <center> '''Figure 4. Finite state diagram of block''' </center> | ||

'''Subblock states''' | |||

'''Invalid''': The subblock is invalid | |||

'''Clean Shared''': A read access to the block will succeed. Unless the block the subblock is a part of is in the Valid Exclusive state, a write to the subblock will force an invalidation transaction on the bus. | |||

'''Dirty Shared''': The subblock is treated like a Clean Shared sbublock, except that it must be written back on replacement. At most one cache will have a given subblock in either the Dirty Shared or Dirty state. | |||

'''Dirty''': The subblocks is exclusive to this cache. It must be written back on replacement. Read and write access to this subblock hit with no bus transaction. | |||

| Line 143: | Line 168: | ||

<b>In contrast to the Illinois MESI Protocol, which requires extra power cycle to maintain the extra states and additional logic, the subblock protocol reduces number of cache blocks, compared to any cache update protocols, and thus reduces power consumption.. </b> <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | <b>In contrast to the Illinois MESI Protocol, which requires extra power cycle to maintain the extra states and additional logic, the subblock protocol reduces number of cache blocks, compared to any cache update protocols, and thus reduces power consumption.. </b> <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | ||

===Read-snarfing protocol=== | ===Read-snarfing protocol=== | ||

| Line 179: | Line 203: | ||

Block will be invalidated immediately with no wasted updates when Threshold reduces to 0. | Block will be invalidated immediately with no wasted updates when Threshold reduces to 0. | ||

When block is actively shared, block is not invalidated by adjusting the Tb upward. <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | When block is actively shared, block is not invalidated by adjusting the Tb upward. <ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CCIQFjAA&url=ftp%3A%2F%2Fftp.cs.washington.edu%2Ftr%2F1994%2F05%2FUW-CSE-94-05-02.PS.Z&ei=2n9kT8gjhPDSAaO2nb4P&usg=AFQjCNFFRgsJiBWjKAMOHcGcRL_vkkSqLg&sig2=aYWddXJdXsNNIFQ5U4zoqg Two techniques for improving performance on bus-based mu1tiprocessors]</ref> | ||

=====Example 1 ===== | =====Example 1 ===== | ||

Suppose invalidation ratio (R) = 5 | |||

Current threshold block (Tb) = 3 | |||

If the processor writes 4 times before it is accessed by other processor, according to the above logic, Tb will be 4. | |||

This means Tb is at the best possible value and only update can be issues. | |||

=====Example 2 ===== | =====Example 2 ===== | ||

Consider, R= 5 and Tb = 3 for a particular block | |||

If the processor writes 10 times before it is accessed by other processor | |||

Tb will be 2. (Decreased) | |||

So the protocol can incur a cost of 2 updates, 1 invalidate and 1 reread. | |||

After 2 more write, Tb will be 0 and invalidation will occur immediately. | |||

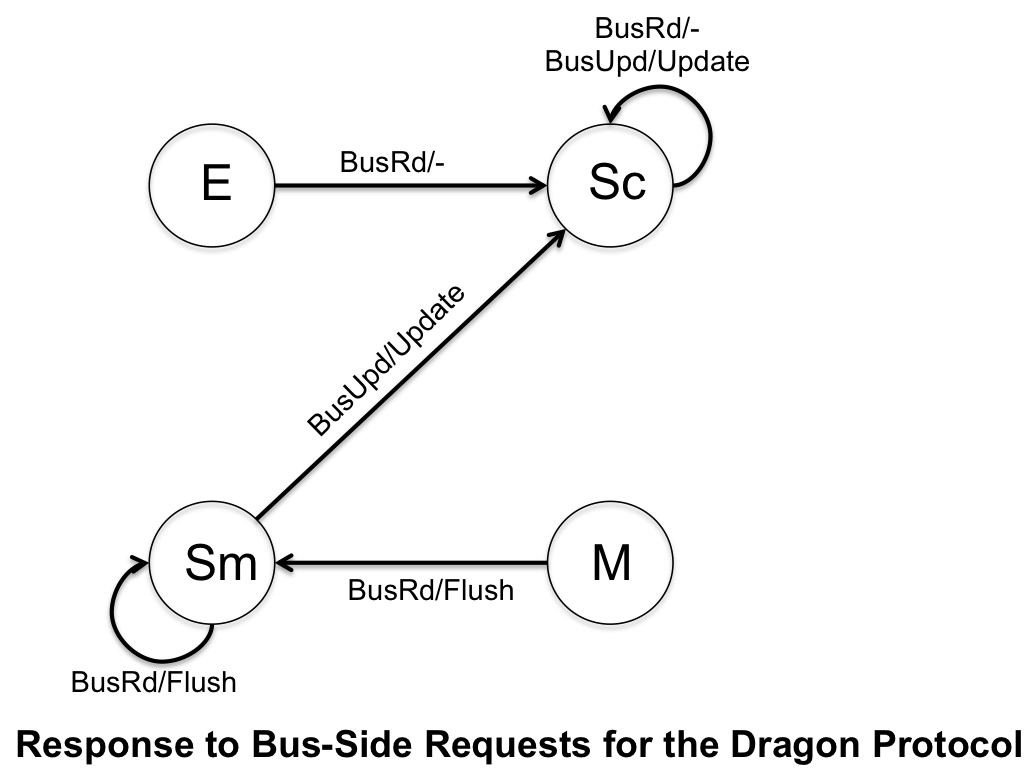

===Competitive Update Protocol=== | |||

A competitive-update protocol is a "..hybrid protocols between write-invalidate and write-update.."<ref name="nilsson"> | |||

H. Nilsson, P. Stenström "An adaptive update-based cache coherence protocol for reduction of miss rate and traffic" | |||

Proc. Parallel Architectures and Languages Europe (PARLE) Conf., Lecture Notes in Computer Science, Athens, Greece, 817, Springer-Verlag, Berlin (Jul. 1994), pp. 363–374 | |||

[http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.26.7116&rep=rep1&type=pdf Paper]</ref> | |||

These hybrid protocols are used to reduce the coherence miss rate caused by invalidation or update alone. The sole issue here is that there can be high traffic peeks and these peeks can offset the performance gain<ref name="nilsson"></ref> | |||

According to Nilsson in <ref name="nilsson2">H. Nilsson, P. Stenström, and M. Dubois, “Implementation and Evaluation of Update- | |||

Based Cache Protocols Under Relaxed Memory Consistency Models”, Technical Report, | |||

Dept. of Computer Engineering, Lund University, Sweden, July 1993</ref>, competitive-update protocols will outperform write-invalidate protocols under relaxed memory consistency. The concept presented is very simple. The first write to a block causes an update to the copy of the block. If instead the local processor does not access it, it will then propagate an invalidate. What this effectively does is make regularly accessed copies of the memory block be updated. The limitation here is that migratory data makes this protocol sub-optimal. The latest research done in this area is Competitive Update Protocol with Migratory Detection<ref name="nilsson"></ref>. This recognizes when there is migratory data and compensates. | |||

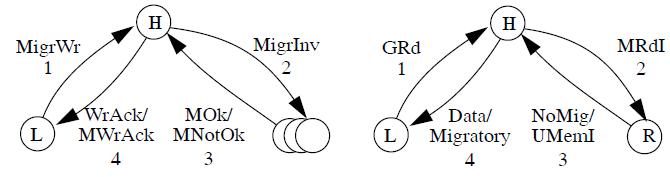

<center>[[ | <center>[[Image:CompetitiveUpdateProtocolWithMigratoryDetection.jpg|800px]]</center> | ||

<center> '''Figure 6. Competitive Update Protocol With Migratory Detection<ref name="nilsson"></ref>''' </center> | |||

<center> '''Coherence actions for detection of migratory blocks (left) and coherence actions for read misses to migratory blocks (right).''' </center> | |||

This is only one of many ways to deal with migratory data. For further reading, a Google Scholar search on "Adaptive Protocols and Migratory" will return many papers published on different ways to deal with migratory data issue that arises when using adaptive protocols. | |||

===Cachet=== | |||

Cachet is an adaptive cache coherence protocol that uses micro-protocols <ref name="shen">Xiaowei Shen, Arvind, and Larry Rudolph. 1999. CACHET: an adaptive cache coherence protocol for distributed shared-memory systems. In Proceedings of the 13th international conference on Supercomputing (ICS '99). ACM, New York, NY, USA, 135-144. DOI=10.1145/305138.305187[http://doi.acm.org/10.1145/305138.305187 Paper]</ref> Cachet recognizes that shared-memory programs have various access patterns and no fixed cache coherence protocol works well for all access patterns.<ref name="bennet">J. K. Bennett, J. B. Carter, and W. Zwaenepoel. Adaptive Software Cache Management for Distributed Shared Memory Architectures. In Proceedings of the 17th Annual International Symposium on Computer Architecture, May 1990</ref><ref name="eggers">S. Eggers and R. H. Katz. Evaluating the Performance for Four Snooping Cache Coherency Protocols. In Proceedings of the 16th Annual International Symposium on Computer Architecture, May 1989</ref><ref name="falsafi">B. Falsafi, A. R. Lebeck, S. K. Reinhardt, I. Schoinas, M. D. Hill, J. R. Larus, A. Rogers, and D. A. Wood. Application specific protocols for user-level shared memory. In Supercomputing, Nov. 1994</ref><ref name="weber">W. D. Weber and A. Gupta. Analysis of Cache Invalidation Patterns in Multiprocessors. In Proceedings of the 3rd International Conference on Architectural Support for Programming Languages and Operating Systems, 1989</ref>. What cachet attempts to do is either take in the access pattern through program annotations from the programmer or recognition by the compiler. | |||

So how does it work? | |||

< | <blockquote>"Cachet-Base: The most straightforward implementation simply uses the memory as the rendezvous. When a Commit instruction is executed for an address that is cached in the Dirty state, the data must be written back to the memory before the instruction can complete. A Reconcile instruction for an address cached in the Clean state requires the data be purged from the cache before the instruction can complete. An attractive characteristic of Cachet-Base is its simplicity; no state needs to be maintained at the memory side."<ref name=shen></ref></blockquote> | ||

< | <blockquote>Cachet-WriterPush: Since load operations are usually more frequent than store operations, it is desirable to allow a Reconcile instruction to complete even when the address is cached in the Clean state. Thus, the following load access to the address causes no cache miss. Correspondingly, when a Commit instruction is performed on a dirty cell, it cannot complete before clean copies of the address are purged from all other caches. Therefore, it can be a lengthy process to commit an address that is cached in the Dirty state."<ref name=shen></ref></blockquote> | ||

<blockquote>"Cachet-Migratory: When an address is exclusively accessed by one processor for a reasonable time period, it makes sense to give the cache the exclusive ownership so that all instructions on the address become local operations. This is reminiscent of the exclusive state in conventional MESI like protocols. The protocol ensures that an address can be cached in at most one cache at any time. Therefore, a Commit instruction can complete even when the address is cached in the Dirty state, and a Reconcile instruction can complete even when the address is cached in the Clean state. The exclusive ownership can migrate among different caches whenever necessary."<ref name=shen></ref></blockquote> | |||

<blockquote>"Different micro-protocols are optimized for different access patterns. Cachet-Base is ideal when the location is randomly accessed by multiple processors, and only necessary commit and reconcile operations are invoked. A conventional implementation of release consistency usually requires that all addresses be indistinguishably committed before a release, and reconciled after an acquire. Such excessive use of commit and reconcile operations can result in performance degradation under Cachet-Base."<ref name=shen></ref></blockquote> | |||

< | <blockquote>"Cachet-WriterPush is appropriate when certain processors are likely to read an address many times before another processor writes the address. A reconcile operation performed on a clean copy causes no purge operation, regardless of whether the reconcile is necessary. Thus, subsequent load operations to the address can continually use the cached data without causing any cache miss. Cachet Migratory fits well when one processor is likely to read and write an address many times before another processor accesses the address."<ref name=shen></ref></blockquote> | ||

What is so interesting about Cachet is its ability to switch between these mirco-protocols. This excerpt from the paper does the best of explaining it. | |||

==Power Considerations== | |||

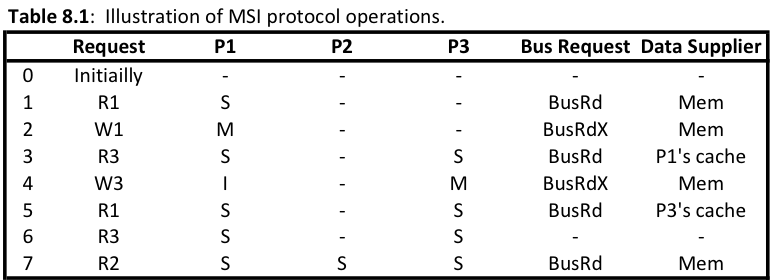

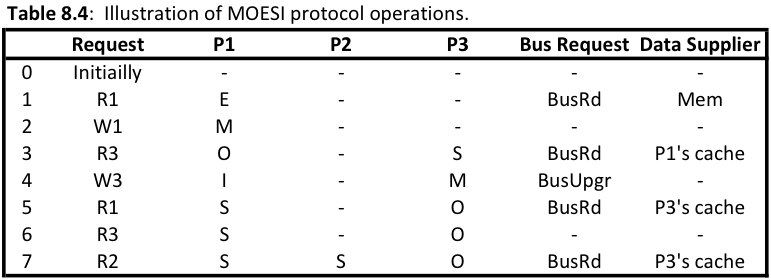

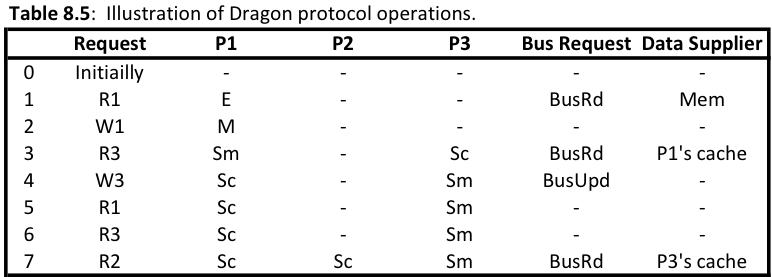

A major issue when considering power is how many bus transaction are incurring over the bus. Different protocols require different bus transactions, so we are able to loosely demonstrate how much power is being utilized by each of the different techniques by comparing there bus transactions over the same read/write pattern. We will use the patterns found in '''Ch 8 of Solihin'''. | |||

[[File:MSI_Protocol_operation.png|600px]] | |||

[[File:MOESI_Protocol_operation.png|600px]] | |||

[[File:Dragon_Protocol_operation.png|600px]] | |||

As you can see from the above spreadsheets the update-based protocols demonstrate beter power consumption in terms of bus transactions and memory access. The invalidation protocols require 6 bus transactions plus 4 memory access for the MSI protocol, as well as 5 bus transactions and 1 memory access for the MOESI protocol. This is higher compared to the Dragon protocol which only used 4 bus transactions and 1 memory access. By counting the number of bus transactions and memory access over the same procedure of processor calls we are able to put the different protocols on the same field and compare them. The Dragon protocol also requires less time on the bus because it is only passing modified words instead of whole cache blocks. | |||

The | |||

Unfortunately for adaptive protocols, we are unable to provide rough estimates. Most cases with competitive update and cachet, it depends on the problem itself. For example, bus traffic can vary in a competitive update. Competitive update does invalidates on non-regularly used cache blocks. This can vary between program to program. What make power estimates hard for adaptive protocols is the nature of being adaptive. Depending on the program involved, the performance/power used can vary drastically. | |||

==References== | ==References== | ||

<references /> | |||

<references | |||

Latest revision as of 18:43, 27 March 2013

Cache Coherence Protocols on Real Architectures

In parallel computer architectures, cache coherence refers to the consistency of data that is stored throughout the caches on individual processors or throughout the shared memory. The problem here is that we have multiple caches on multiple processors. When an update to a single cache makes changes to a shared memory, you will need to have all the caches be coherent on the value change. This is better shown below.

Figure 1. Multiple Caches of Shared Resource

There are two ways to maintain cache consistency<ref name="glasco">Glasco, D.B.; Delagi, B.A.; Flynn, M.J.; , "Update-based cache coherence protocols for scalable shared-memory multiprocessors," System Sciences, 1994. Proceedings of the Twenty-Seventh Hawaii International Conference on , vol.1, no., pp.534-545, 4-7 Jan. 1994 doi: 10.1109/HICSS.1994.323135 Paper</ref>, invalidation based and update based.

Invalidation based protocol will purge the copies of the line from the other caches which results in a single copy of the line whereas updating forwards the write value to the other caches, after which all caches are consistent.One of the drawbacks of an invalidation-based protocol is that it incurs high number of coherence misses. To solve this, one can use a update coherence protocol, or a new type of protocol called adaptive coherence protocol. We shall discuss all three below.

Invalidation Coherence Protocols

Invalidate all remote copies of cache when a local cache block is updated. Under the invalidation scheme, updates are only propagated when data are read, and several updates can take place before communication is necessary. In the multiple-reader-single-write scheme described, this is potentially expensive. But, if the read/write ratio is sufficiently high, then the parallelism obtained by allowing multiple simultaneous readers offsets this cost. However, for multiple read after write command, there would be continuous misses and subsequent fetches for those misses.

Write-Through Write Invalidate Caches

The state of a cache block copy of local processor can take one of the two states : Valid State: All processors can read safely. The cache block is valid and clean, i.e. the cached value is the same as that at a lower memory level Local processor can also write Invalid State: (not in cache) Block being invalidated. Block being replaced Any requests to the cache block will result into cache misses. The requests are: PrRd (Processor-Read) - processor-side request to read data from a cache block PrWr (Processor-Write) - request sent when processor wants to write into a cache block The Snooped Bus requests are: BusRd (Bus-Read) - When request that indicates there is a read request to a block made by a processor BusWr (Bus-Write) - Request that indicates there is a write request to a block made by a processor. In case of a write-through cache, the BusWr is a write-through to the main memory performed by another processor. In the beginning the state of the cache block is Invalid state. If a When a remote processor writes to its cache copy, all other cache copies become invalidated. When there is a processor-side read request, the processor suffers a cache miss. This results in a BusRd request on the bus. The memory block is fetched from main memory. The block goes to the valid state. When there is a PrWr request, then a BusWr command is sent on bus. Other caches that have the block invalidate their copy. The main memory (write-through) loads the correct value into the requested cache. Therefore, the state remains invalid. For the case, when the block is the valid state, on a PrRd, it is a cache hit since the block resides in the cache. The state remains valid. On a PrWr, if there is a cache hit, that means, no other cache block resides. Thus no bus snooping protocol has to be sent. On a BusRd, a cache block in the valid state remains in the valid state. While a block in the invalid state remains in the invalid state. On a BusWr, a cache block in the valid state gets into the invalid state and an invalid block remain in the invalid state.

Figure 1. Write-through Write Invalidate

Advantages

A processor can modify a cache block by invalidating other copies in other caches. Thus cache block that is being modifies resides only in one cache giving exclusive membership to that processor.

Write Back Write Invalidate caches

• Processor / Cache Operations

PrRd, PrWr, block Replace

States

Invalid, Valid (clean) - same as above Modified (dirty) - A block that is valid and has value written into; thus, is different from the one in main memory

• Bus Transactions

Bus Read (BusRd), Write-Back (BusWB)

Figure 1. Write-through Write Invalidate States Bus Snooping Protocol

Write-through Advantages :

When a processor continuously writes into the same cache block, only a single command is used to invalidate the cache blocks

Disadvantage :

requires a high amount of bandwidth.

Update Coherence Protocol

Introduction

Update-based cache coherence protocols work by directly updating all the cache values in the system. This differs from the invalidation-based protocols because it achieves write propagation without having to invalidate data and thus not resulting in a cache miss. This saves on numerous coherence misses, time spent to correct the miss, and bandwidth usage. The update-based protocols we will be discussing in this section are

Dragon Protocol

Dragon protocol saves on bandwidth by updating the specific words within the cache instead of the entire block. The caches use write allocate and write update policies. The Dragon Protocol is made up of four states and does not include an invalidation state.

- Modified (M) - cache block is exclusively owned, however it can be different from main memory

- Exclusive (E) - cache block is clean and is only in one cache

- Shared Modified (Sm) - cache block resides in multiple caches and is possible dirty

- Shared Clean (Sc) - cache block resides in multiple caches and is clean

There is not an invalidation state, because if a block is cached then it assumed to be valid. However,it can differ from main memory. Below are the finite state machines for the processor-side calls and bus-side calls. Dragon protocol utilizes snoopy caches to appear as if it as a uniform memory space even though there are multiple processors.

The Dragon Protocol is implemented in the Cray CS6400 (also know as the Xerox Dragon multiprocessor workstation). It was available with either 60Mhz or 85Mhz processors. The Xerox Dragon was designed to be a research numerous processors.

Firefly Protocol

Firefly protocol is another example of update coherence cache protocols. However, unlike the Dragon Protocol, it uses write-through policy (which writes all changes back to memory). The following states can be assigned to a block in this protocol.

- Valid Exclusive (VE) - cache block is exclusively owned, cache block is clean

- Dirty (D) - exclusive rights to the cache block, cache block is dirty

- Shared (S) - cache block is shared but is not modified

The Firefly Protocol uses a special bus technique called SharedLine to allow quick detection to copies of the block in other caches. It is similar to the COPIES_EXIST (C) and !COPIES_EXIST, and is shown that way in the finite state machines below. Similar to the Dragon protocol, there is no invalidation state because no cache blocks are ever invalidated.

The Firefly protocol is used in the DEC Firefly multiprocessor workstation, developed by Digital Equipment Corporation. The system is asymmetic and the cache is direct-mapped to support multiprocessing. The cache capicity was 16KB for the original MicroVAX 78032 microprocessor were latter increased to 64KB when upgraded to the CVAX_78034 microprocessor.

Advantages of Write Update Protocol

The simplest, most obvious and fastest. Also, for a bandwidth-restricted architecture, using write back caches does not prevent scalability.

Disadvantages

• Multiple writes to the same word (no intervening read) need only one invalidate message but would require an update for each • Writes to same block in (usual) multi-word cache block require only one invalidate but would require multiple updates. Due to both spatial and temporal locality, previous cases occur often. • Bus bandwidth is a precious commodity in shared memory multi-processors • Experience has shown that invalidate protocols use significantly less bandwidth

Adaptive Coherence Protocols

Introduction

Even though there are clear advantages to using either update protocols or invalidate protocols, there are still disadvantages for each. In a write invalidate protocol, any update/write operation in a processor invalidates the shared cache blocks of other processors. This will force the other caches to do read requests such that the new data is retrieved. This tends to cause a high bus bandwidth and can be especially bad if there is few processors that frequently update the cache. Fortunately, write update protocols mitigate this issue. It will update all other caches at the same time it propagates an update itself. Unfortunately, this creates a different problem, there will sometime be unnecessary update to the cache on the other processors. This tends to increase conflict and capacity cache misses.

Adaptive protocols tries to mitigate these problems. It will both tend to have some high bus traffic as well as some unnecessary updates. But, these can be mitigated based on how the adaptive algorithm switches between write-invalidate and write-update. There is also adaptive directory-based protocols, but these are not discussed here.

Subblock protocol

This snoopy-based protocol mitigate the features of Illinois MESI protocol and write policies with subblock validation to take the advantages of both small and large cache block size by using subblock. <ref>Two techniques for improving performance on bus-based mu1tiprocessors</ref>

Block states

Invalid: All subblocks are invalid

Valid Exclusive: All valid subblocks in this block are not present in any other caches. All subblocks that are clean shared may be written without a bus transaction. Any subblocks in the block may be invalid. There also may be Dirty blocks which must be written back upon replacement.

Clean Shared: The block contains subblocks that are either Clean Shares or Invalid. The block can be replaced without a bus transaction.

Dirty Shared: Subblocks in this block may be in any state. There may be Dirty blocks which must be written back on replacement.<ref>Two techniques for improving performance on bus-based mu1tiprocessors</ref>

Finite state diagram of block/ line states is as follows:

Subblock states

Invalid: The subblock is invalid

Clean Shared: A read access to the block will succeed. Unless the block the subblock is a part of is in the Valid Exclusive state, a write to the subblock will force an invalidation transaction on the bus.

Dirty Shared: The subblock is treated like a Clean Shared sbublock, except that it must be written back on replacement. At most one cache will have a given subblock in either the Dirty Shared or Dirty state.

Dirty: The subblocks is exclusive to this cache. It must be written back on replacement. Read and write access to this subblock hit with no bus transaction.

Finite state diagram of subblock is as follows:

Basic idea of this protocol is to do more data transfer between caches and less off-chip memory access. In contrast with Illinois protocol, on read misses, shared cache block sends cached sublock and also all other clean and dirty shared subblockes in that block. If the subblock is in the main memory, cache snooper pass the information of caches subblocks and memory will provide the requested subblock along with sublocks which are not currently cached. On write to clean subblockes and write misses, snooper invalidates only the subblock to be written to avoid the penalties associated with false sharing.

In contrast to the Illinois MESI Protocol, which requires extra power cycle to maintain the extra states and additional logic, the subblock protocol reduces number of cache blocks, compared to any cache update protocols, and thus reduces power consumption.. <ref>Two techniques for improving performance on bus-based mu1tiprocessors</ref>

Read-snarfing protocol

This is an enhancement to snoopy-cache coherence protocols that takes advantage of the inherent broadcast nature of the bus.

In contrast with MESI protocol, if one processor wants to reload data in its cache due cache miss, read-snarfing protocol will effectively supply the block to other processors whose blocks were invalidated in the past. Only one read is required to restore the block to all caches which are invalidated.

Protocol modifies the normal updated protocol by updating only those sub-blocks which are modified and update only need to broadcast when the data is actively shared. Read-snarfing protocol maintains a counter and invalidation threshold (Tb) for each cache block “b” to overcome the drawback of WI’s write after read problem. Protocol predicts the number of write operations happens on a single cache block before a read request to the same cache block. Invalidation request is being broadcasted when the write counter reaches the Tb and protocol dynamically adjust the value of Tb based on the nature of program execution.

Simple algorithm of Read-snarfing Random Walk protocol is as follows: Initially Tb of each cache block b is set to 0. <ref>Two techniques for improving performance on bus-based mu1tiprocessors</ref>

// Number of Write operation happens before being accessed by other processor

If (most recent write run > R) {

If(Tb > 1) {

Tb--;

}

} else {

If(R > Tb) {

Tb++;

}

}

R = Invalidation Ratio which is (Ci + Cr) / Cu

Ci: The cost in bus cycles of an invalidation transaction

Cu: The cost in bus cycles of an update transaction

Cr: The cost in bus cycles of reading a cache block

Block will be invalidated immediately with no wasted updates when Threshold reduces to 0. When block is actively shared, block is not invalidated by adjusting the Tb upward. <ref>Two techniques for improving performance on bus-based mu1tiprocessors</ref>

Example 1

Suppose invalidation ratio (R) = 5 Current threshold block (Tb) = 3 If the processor writes 4 times before it is accessed by other processor, according to the above logic, Tb will be 4. This means Tb is at the best possible value and only update can be issues.

Example 2

Consider, R= 5 and Tb = 3 for a particular block If the processor writes 10 times before it is accessed by other processor Tb will be 2. (Decreased) So the protocol can incur a cost of 2 updates, 1 invalidate and 1 reread. After 2 more write, Tb will be 0 and invalidation will occur immediately.

Competitive Update Protocol

A competitive-update protocol is a "..hybrid protocols between write-invalidate and write-update.."<ref name="nilsson"> H. Nilsson, P. Stenström "An adaptive update-based cache coherence protocol for reduction of miss rate and traffic" Proc. Parallel Architectures and Languages Europe (PARLE) Conf., Lecture Notes in Computer Science, Athens, Greece, 817, Springer-Verlag, Berlin (Jul. 1994), pp. 363–374 Paper</ref> These hybrid protocols are used to reduce the coherence miss rate caused by invalidation or update alone. The sole issue here is that there can be high traffic peeks and these peeks can offset the performance gain<ref name="nilsson"></ref> According to Nilsson in <ref name="nilsson2">H. Nilsson, P. Stenström, and M. Dubois, “Implementation and Evaluation of Update- Based Cache Protocols Under Relaxed Memory Consistency Models”, Technical Report, Dept. of Computer Engineering, Lund University, Sweden, July 1993</ref>, competitive-update protocols will outperform write-invalidate protocols under relaxed memory consistency. The concept presented is very simple. The first write to a block causes an update to the copy of the block. If instead the local processor does not access it, it will then propagate an invalidate. What this effectively does is make regularly accessed copies of the memory block be updated. The limitation here is that migratory data makes this protocol sub-optimal. The latest research done in this area is Competitive Update Protocol with Migratory Detection<ref name="nilsson"></ref>. This recognizes when there is migratory data and compensates.

This is only one of many ways to deal with migratory data. For further reading, a Google Scholar search on "Adaptive Protocols and Migratory" will return many papers published on different ways to deal with migratory data issue that arises when using adaptive protocols.

Cachet

Cachet is an adaptive cache coherence protocol that uses micro-protocols <ref name="shen">Xiaowei Shen, Arvind, and Larry Rudolph. 1999. CACHET: an adaptive cache coherence protocol for distributed shared-memory systems. In Proceedings of the 13th international conference on Supercomputing (ICS '99). ACM, New York, NY, USA, 135-144. DOI=10.1145/305138.305187Paper</ref> Cachet recognizes that shared-memory programs have various access patterns and no fixed cache coherence protocol works well for all access patterns.<ref name="bennet">J. K. Bennett, J. B. Carter, and W. Zwaenepoel. Adaptive Software Cache Management for Distributed Shared Memory Architectures. In Proceedings of the 17th Annual International Symposium on Computer Architecture, May 1990</ref><ref name="eggers">S. Eggers and R. H. Katz. Evaluating the Performance for Four Snooping Cache Coherency Protocols. In Proceedings of the 16th Annual International Symposium on Computer Architecture, May 1989</ref><ref name="falsafi">B. Falsafi, A. R. Lebeck, S. K. Reinhardt, I. Schoinas, M. D. Hill, J. R. Larus, A. Rogers, and D. A. Wood. Application specific protocols for user-level shared memory. In Supercomputing, Nov. 1994</ref><ref name="weber">W. D. Weber and A. Gupta. Analysis of Cache Invalidation Patterns in Multiprocessors. In Proceedings of the 3rd International Conference on Architectural Support for Programming Languages and Operating Systems, 1989</ref>. What cachet attempts to do is either take in the access pattern through program annotations from the programmer or recognition by the compiler.

So how does it work?

"Cachet-Base: The most straightforward implementation simply uses the memory as the rendezvous. When a Commit instruction is executed for an address that is cached in the Dirty state, the data must be written back to the memory before the instruction can complete. A Reconcile instruction for an address cached in the Clean state requires the data be purged from the cache before the instruction can complete. An attractive characteristic of Cachet-Base is its simplicity; no state needs to be maintained at the memory side."<ref name=shen></ref>

Cachet-WriterPush: Since load operations are usually more frequent than store operations, it is desirable to allow a Reconcile instruction to complete even when the address is cached in the Clean state. Thus, the following load access to the address causes no cache miss. Correspondingly, when a Commit instruction is performed on a dirty cell, it cannot complete before clean copies of the address are purged from all other caches. Therefore, it can be a lengthy process to commit an address that is cached in the Dirty state."<ref name=shen></ref>

"Cachet-Migratory: When an address is exclusively accessed by one processor for a reasonable time period, it makes sense to give the cache the exclusive ownership so that all instructions on the address become local operations. This is reminiscent of the exclusive state in conventional MESI like protocols. The protocol ensures that an address can be cached in at most one cache at any time. Therefore, a Commit instruction can complete even when the address is cached in the Dirty state, and a Reconcile instruction can complete even when the address is cached in the Clean state. The exclusive ownership can migrate among different caches whenever necessary."<ref name=shen></ref>

"Different micro-protocols are optimized for different access patterns. Cachet-Base is ideal when the location is randomly accessed by multiple processors, and only necessary commit and reconcile operations are invoked. A conventional implementation of release consistency usually requires that all addresses be indistinguishably committed before a release, and reconciled after an acquire. Such excessive use of commit and reconcile operations can result in performance degradation under Cachet-Base."<ref name=shen></ref>

"Cachet-WriterPush is appropriate when certain processors are likely to read an address many times before another processor writes the address. A reconcile operation performed on a clean copy causes no purge operation, regardless of whether the reconcile is necessary. Thus, subsequent load operations to the address can continually use the cached data without causing any cache miss. Cachet Migratory fits well when one processor is likely to read and write an address many times before another processor accesses the address."<ref name=shen></ref>

What is so interesting about Cachet is its ability to switch between these mirco-protocols. This excerpt from the paper does the best of explaining it.

Power Considerations

A major issue when considering power is how many bus transaction are incurring over the bus. Different protocols require different bus transactions, so we are able to loosely demonstrate how much power is being utilized by each of the different techniques by comparing there bus transactions over the same read/write pattern. We will use the patterns found in Ch 8 of Solihin.

As you can see from the above spreadsheets the update-based protocols demonstrate beter power consumption in terms of bus transactions and memory access. The invalidation protocols require 6 bus transactions plus 4 memory access for the MSI protocol, as well as 5 bus transactions and 1 memory access for the MOESI protocol. This is higher compared to the Dragon protocol which only used 4 bus transactions and 1 memory access. By counting the number of bus transactions and memory access over the same procedure of processor calls we are able to put the different protocols on the same field and compare them. The Dragon protocol also requires less time on the bus because it is only passing modified words instead of whole cache blocks.

Unfortunately for adaptive protocols, we are unable to provide rough estimates. Most cases with competitive update and cachet, it depends on the problem itself. For example, bus traffic can vary in a competitive update. Competitive update does invalidates on non-regularly used cache blocks. This can vary between program to program. What make power estimates hard for adaptive protocols is the nature of being adaptive. Depending on the program involved, the performance/power used can vary drastically.

References

<references />