CSC/ECE 506 Spring 2012/10a vm: Difference between revisions


=== Introduction to prefetching ===

Almost all processors today use [http://en.wikipedia.org/wiki/Instruction_prefetch prefetching] as a means to speed up execution. Primarily, prefetching shortens the time a processor spends in the wait state by predicting which cache block will be accessed next, so that when a [http://en.wikipedia.org/wiki/CPU_cache#Cache_miss cache miss] occurs, the old block in the cache can be immediately replaced with the prefetched block, thereby decreasing the idle time of the processor. Prefetching performs best when it follows the [http://en.wikipedia.org/wiki/Out-of-order_execution program order]. However, prefetching need not always be done in program order; a processor trying to guess the result of a calculation during a complex [http://en.wikipedia.org/wiki/Branch_prediction branch prediction] algorithm will need to anticipate the result and fetch the right set of instructions for execution. Things get more complex when it comes to graphics processing units (GPUs). There, prefetching can take advantage of [http://en.wikipedia.org/wiki/Coherence_(physics)#Spatial_coherence spatial coherence], and the data that is prefetched is not a set of instructions but texture elements that can be mapped to a polygon.<ref>[http://en.wikipedia.org/wiki/Instruction_prefetch Instruction prefetch wiki article.]</ref>

=== Types of prefetching ===

[http://en.wikipedia.org/wiki/Instruction_prefetch Prefetching] can be primarily classified based on whether it is binding or non-binding, and whether it is hardware or software controlled. With a binding prefetch, the value of a later reference, like a register load, is bound at the time the prefetch completes. However, this comes with added restrictions because the prefetched value might not be accurate: another processor may cause an invalidation in the time frame between the prefetch and the reference by the current processor. With a non-binding prefetch, the data is brought into the processor cache and is kept coherent until the processor reads or writes the value. This approach, unlike binding prefetch, does not affect the correctness of any consistency model, and it can deliver a serious improvement in performance.

The prefetch that is interesting to us is the hardware-controlled, non-binding prefetch. Prefetching done in software involves significant effort from the programmer's side, and it may not scale as the length of the program increases. The non-binding prefetch also yields significantly more performance than the binding prefetch. The major performance gain from prefetching comes from reducing the memory latencies that all consistency models incur.

In the case of a read operation, the read prefetch is issued to get the data in a read-only shared state in the cache. Since we are considering the non-binding prefetch, there is a guarantee that the read operation will return a correct value when it is allowed to run, irrespective of the actual prefetch completion time. However, there is a catch: the prefetched copy may become stale before it is used. Consider a case where another processor writes to the memory location that was just read-prefetched. What happens next depends on whether an invalidation-based or an update-based coherence scheme is being used. If an [http://en.wikipedia.org/wiki/Cache_coherence update-based coherence scheme] is being used, the other processor sends a bus update and the current processor picks it up to update its copy. However, if an invalidation-based coherence scheme is used, the later read suffers a coherence miss and the value is read again from system memory, as if the prefetch had never occurred.

In the case of a write operation, a read-exclusive prefetch can be used to acquire exclusive ownership of the line. Since the line is cached in exclusive mode, the write operation can proceed without incurring an invalidate or an update on other caches that hold the same line or block. The write operation finishes quickly because the value is already cached and there is no coherence miss, thus reducing the idle time of the processor. However, note that the read-exclusive prefetch is only possible if the coherence scheme is invalidation-based and not update-based. The same caveat that we saw in the read case applies here: if another processor asks to write to the same block, the exclusive ownership is no longer maintained and the cache block is invalidated.

=== How does prefetching work? ===

In a multiprocessor environment, each processor has a [http://en.wikipedia.org/wiki/Memory_disambiguation load] and a [http://en.wikipedia.org/wiki/Memory_disambiguation store buffer]. Consistency models work by delaying accesses to memory locations until the previous requests have finished. Prefetching can be implemented by making the hardware automatically issue a prefetch for those requests in the load or store buffer that cannot be honored right away because of the delays imposed by the consistency model.

Let us trace through the steps a prefetch request would follow. First, it looks up the cache to see whether the block is already present. If it is, the request is terminated. If it is not, the prefetch is issued to memory. Depending on the type of prefetch technique we are using, the response to the prefetch may or may not be immediate. The response is then placed in the cache. This process can be optimized by detecting duplicate requests to the same location and merging them with the outstanding prefetch request, thereby preventing duplicate requests from being issued to memory. This mechanism also places requirements on the system. First, it requires hardware-coherent caches, and the prefetched block must fit into the cache. Second, for the write prefetch to work efficiently, an invalidation-based coherence scheme should be in place. Additionally, the bus bandwidth should be high enough to handle several prefetch requests at the same time. The caches will also be busier with read/write transactions when prefetching is implemented.<ref>[http://www.opensparc.net/pubs/papers/InstrPfHPCA05.pdf Effective Instruction Prefetching in Chip Multiprocessors for Modern Commercial Applications]</ref>
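The steps a prefetch request follows can be sketched as a small software model. This is only an illustration under assumed names (<code>PrefetchUnit</code>, <code>prefetch</code>, <code>memory_response</code> are hypothetical); a real implementation lives in hardware and also has to handle coherence states and replacement.

```python
class PrefetchUnit:
    """Toy model of the prefetch request path (hypothetical names)."""

    def __init__(self):
        self.cache = {}            # block address -> data
        self.pending = {}          # block address -> ids of requests waiting on it
        self.memory_requests = []  # addresses actually sent to memory

    def prefetch(self, addr, request_id):
        # Step 1: look up the cache; if the block is present, drop the request.
        if addr in self.cache:
            return "hit"
        # Step 2: merge with an outstanding prefetch to the same block.
        if addr in self.pending:
            self.pending[addr].append(request_id)
            return "merged"
        # Step 3: otherwise issue the prefetch to memory.
        self.pending[addr] = [request_id]
        self.memory_requests.append(addr)
        return "issued"

    def memory_response(self, addr, data):
        # The response is placed in the cache; every merged request is satisfied.
        self.cache[addr] = data
        return self.pending.pop(addr, [])


unit = PrefetchUnit()
first = unit.prefetch(0x40, request_id=1)   # goes to memory
second = unit.prefetch(0x40, request_id=2)  # merged, no second memory request
waiters = unit.memory_response(0x40, "block data")
third = unit.prefetch(0x40, request_id=3)   # now present in the cache
```

Merging in <code>prefetch</code> is what keeps duplicate requests off the bus, which matters because bus bandwidth is one of the stated requirements of the scheme.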

The amount of lookahead we do for a prefetch needs to be controlled: if more blocks are prefetched than are required, the processor might never use them, and they may displace blocks that are actually required, increasing cache misses. Thus the extent of lookahead should adjust dynamically depending on whether the processor actually uses the prefetched blocks or just evicts them without a read or a write reference to them.
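One way to picture such dynamic adjustment is a feedback rule driven by how many prefetched blocks were used versus evicted unused. The sketch below is an assumption made for illustration: the function name, the thresholds (0.5 and 0.9) and the depth limits are not taken from the text or from any particular processor.

```python
def adjust_lookahead(depth, used, evicted_unused, min_depth=1, max_depth=8):
    """Return a new lookahead depth from usage feedback over one interval.

    used:            prefetched blocks that were later read or written
    evicted_unused:  prefetched blocks evicted without any reference
    Thresholds and limits are illustrative assumptions.
    """
    total = used + evicted_unused
    if total == 0:
        return depth                       # no feedback, keep current depth
    accuracy = used / total
    if accuracy < 0.5:
        return max(min_depth, depth // 2)  # mostly wasted: back off
    if accuracy > 0.9:
        return min(max_depth, depth + 1)   # mostly useful: look further ahead
    return depth
```

For instance, a depth of 4 with 1 used block and 9 unused evictions would be halved to 2 under these assumed thresholds.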

== Prefetching with Sequential Consistency ==

Sequential Consistency (SC) might cause a reader to assume that its implementation needs a single memory module. However, this is not true. Both SC and the relaxed memory models allow many optimizations in order to deliver high performance. In particular, both allow non-binding prefetching along with coherent caching.<ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&sqi=2&ved=0CCkQFjAA&url=ftp%3A%2F%2Fftp.cs.wisc.edu%2Fpub%2Ftechreports%2F1991%2FTR1006.pdf&ei=cZ57T-j7DNCbtwenqIG1CA&usg=AFQjCNHtHiuYi2hYaVbTkSuELNg7PNQuXA&sig2=VFMR7Hq3ofiDnH8tzbFNUg Cache consistency and sequential consistency]</ref>

'''Let us take a look at an example and see how SC vs prefetching with SC performs'''

The assumptions being made are that the processor has non-blocking reads with branch prediction machinery, that an invalidation-based cache coherence scheme is in place, that a cache hit has a latency of 1 clock cycle, and that a cache miss incurs a latency of 100 clock cycles.

The following code segment represents a common producer scenario:


[[Measuring how the code performs under SC only]]

Since we are considering a system with SC, we know that an access to memory cannot be issued until the previous access has completed. The first three accesses are misses and they occur one after the other without any overlap, which gives us a total latency of 100 x 3 = 300 clock cycles. The fourth operation, however, is a cache hit, adding only 1 clock cycle. The four accesses therefore take 301 clock cycles to complete.

[[Measuring how the same code performs under prefetching with SC]]

With prefetching we can get a significant improvement in performance. Since the system can prefetch and the processor has branch prediction machinery, let us assume that the branch predictor takes the path in which the lock L has been successfully acquired. The lookahead operates and A and B are prefetched into the cache in read-exclusive mode. Once the lock L access completes, the write accesses to A and B are served quickly because the blocks have already been prefetched. Thus the total so far is 102 clock cycles (1 miss plus 2 hits on the prefetched blocks). The fourth memory access is a hit and takes only 1 clock cycle to finish, giving a total of 103 clock cycles.

Comparing the 103 clock cycles for prefetching under SC with the 301 clock cycles for SC only, we see a difference of nearly 200 clock cycles, which is a significant improvement. As the number of accesses increases, the difference only gets larger, up to a point where "SC only" would no longer be a viable option.
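The cycle counts above can be reproduced with a small calculation. The sketch uses the latencies assumed in the text (1 cycle per hit, 100 per miss) and a deliberately simplified model of prefetching in which all misses overlap with the first one; the function names are hypothetical.

```python
HIT_CYCLES = 1     # cache-hit latency assumed in the text
MISS_CYCLES = 100  # cache-miss latency assumed in the text


def cycles_sc_only(accesses):
    """Under SC alone, each access issues only after the previous one completes."""
    return sum(MISS_CYCLES if a == "miss" else HIT_CYCLES for a in accesses)


def cycles_sc_with_prefetch(accesses):
    """Simplified model: every miss is prefetched while the first miss is
    outstanding, so one full miss latency is paid and each remaining access
    then performs as a hit."""
    if "miss" not in accesses:
        return HIT_CYCLES * len(accesses)
    return MISS_CYCLES + HIT_CYCLES * (len(accesses) - 1)


# Access pattern of the first example: three misses followed by one hit.
pattern = ["miss", "miss", "miss", "hit"]
sc_cycles = cycles_sc_only(pattern)                 # 100 * 3 + 1
prefetch_cycles = cycles_sc_with_prefetch(pattern)  # 100 + 1 + 1 + 1
print(sc_cycles, prefetch_cycles, sc_cycles - prefetch_cycles)
```

The model ignores bus contention and mispredicted lookahead, so it should be read as a best-case bound for the prefetching case.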

'''Another example showing how SC vs prefetching with SC performs'''


Thus again, we see that prefetching with SC performs better than "SC only".

== Performance of prefetching with SC and why the idea has not been widely adopted ==

We have seen two examples where prefetching with SC does a better job than "SC only". However, what we need to look at is whether this is the maximum performance we can extract. Consider the second example, where we had 3 reads in a critical section. The address that E[D] accesses depends on the value of D. Read D was a cache hit, yet E[D] is not allowed to perform under SC until read C finishes. Thus, even though prefetching increases performance by reducing the delay caused by consistency constraints, it does not help in situations where out-of-order use of the values returned by memory accesses is critical for the processor to proceed efficiently. In other words, prefetching fails to do a good job when out-of-order consumption of values is important.<ref>[http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=223075&url=http%3A%2F%2Fieeexplore.ieee.org%2Fxpls%2Fabs_all.jsp%3Farnumber%3D223075 Memory access dependencies in shared-memory multiprocessors]</ref><ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=1&ved=0CDIQFjAA&url=http%3A%2F%2Fciteseerx.ist.psu.edu%2Fviewdoc%2Fdownload%3Fdoi%3D10.1.1.52.9935%26rep%3Drep1%26type%3Dpdf&ei=wp17T_vaKsrEtwe9yvSpCA&usg=AFQjCNGQXk84yrSUHkZU-mDw0D8KMVb6WQ&sig2=rhKqMuWKvTBHdFgK3rOthw Performance evaluation of memory consistency models for shared-memory multiprocessors]</ref>

Even though the main use of prefetching is to service requests faster than the delay constraints imposed by the memory consistency models would otherwise allow, correctness needs to be maintained at all times. The number of times a prefetched value is incorrect should be small, and luckily this is indeed true in real-world systems for several reasons. The invalidation of the prefetched values is loosely coupled with the delay needed to obtain a correct execution sequence. These delays are imposed by the memory consistency models, and SC, which is the strictest of all models, imposes many of them. Usually, one process releases a synchronization variable much earlier than another process tries to acquire it. Thus the delays introduced by SC are often unnecessary and tax the system's performance.<ref>[http://dl.acm.org/citation.cfm?id=285991 Memory access buffering in multiprocessors]</ref>

Such cases are not rare in real applications, and hence prefetching cannot help all the time. Therefore, prefetching under SC was not widely adopted, and new schemes had to be developed to overcome its drawbacks.

== Solution#1 Prefetching with Release Consistency ==

Prefetching under release consistency (RC) performs at least as well as prefetching under SC. Let us evaluate its performance in the two examples we looked at previously. We make the same assumptions about the system as before.

'''The first example'''


[[Measuring how the same code performs under prefetching with RC]]

With prefetching, we can get a significant improvement in performance, at least compared to SC. Since the system can now prefetch and the processor has branch prediction machinery, let us assume that the branch predictor takes the path in which the lock L has been successfully acquired. The lookahead operates and A and B are prefetched into the cache in read-exclusive mode. Once the lock L access completes, the write accesses to A and B are served quickly because the blocks have already been prefetched. Thus the total so far is 102 clock cycles (1 miss plus 2 hits on the prefetched blocks). The fourth memory access is a hit and takes only 1 clock cycle to finish, giving a total of 103 clock cycles.

In this example, we found that prefetching with SC and prefetching with RC take the same amount of time.


Thus again, we see that prefetching with RC performs better than "RC only".

However, we did not get a significant performance gain in the second example. Thus, even though RC allows more relaxation of the order of operations issued to memory, the gain is not large. Relaxed models offer more options from the hardware implementation perspective than SC, so we might be tempted to conclude that hardware must use the relaxed models instead of SC. On the other hand, prefetching with RC does not deliver the performance gain we expect, so it cannot be justified as a good approach either, even though it performs better than prefetching under SC.

Also, the argument we used against SC, namely that it taxes the system by causing unwanted delays to ensure correctness, applies to the RC model as well. Unlike SC, RC may not tax the system's performance much, because in RC the delays are only inserted at synchronization points. Even so, this adds to the argument that prefetching under RC is not a very good method.

== Solution#2 Speculative Execution ==

Speculative execution does not impose many of the constraints that relaxed and SC implementations do; rather, it allows them to be more aggressive. Speculative execution allows the processor to execute instructions eagerly. However, the same strictness applies when it comes to being correct. That is, the instructions must be undone when speculations prove incorrect. This scenario can be found in wrongly predicted branches. When the processor knows that an instruction cannot be wrong, it commits the instruction, which frees the resources that were being held for it. There are two strict rules about instructions being committed:<ref>[http://en.wikipedia.org/wiki/Speculative_execution Speculative Execution wiki article]</ref>

- All instructions prior to the current instruction must be committed, and

- The current instruction's operation commits.
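The first rule amounts to in-order commit: an instruction whose operation has finished still waits behind any older instruction that has not. A minimal sketch, assuming a window of instructions represented as a list of completion flags in program order (the function name is hypothetical):

```python
def commit_ready(completed):
    """Return how many instructions at the head of the window can commit.

    completed: booleans in program order, True if that instruction's
    operation has finished. Commit stops at the first unfinished one.
    """
    count = 0
    for done in completed:
        if not done:
            break
        count += 1
    return count


# An older access still outstanding blocks a younger one that already finished.
blocked = commit_ready([False, True])
# Two finished instructions at the head commit; the rest wait.
partial = commit_ready([True, True, False, True])
```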

For example, when we consider a load or store operation, we can be assured that it commits when it does a read or a write on a memory value. With speculative execution, both relaxed and SC implementations can perform operations out of order and in a speculative fashion. Consider the following comparison of relaxed vs. SC:

read A (miss)

read B (hit)

When dealing with SC, read A occurs first and gets committed, and then read B gets committed. However, with relaxed models, read B can get committed before read A gets committed. The difference in performance between relaxed and SC is very narrow. However, the measured difference depends upon benchmarks, latencies, etc.

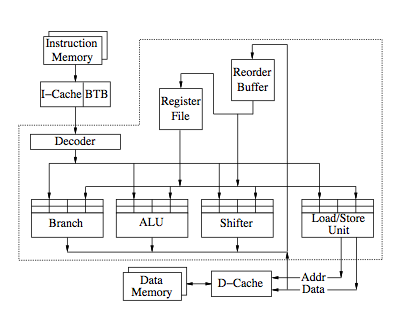

The figure below shows an example implementation of an SE system.<ref>[http://www.csl.cornell.edu/courses/ee572/gharachorloo.icpp91.pdf Two Techniques to Enhance the Performance of Memory Consistency Models by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy]</ref>


=== Idea behind speculative execution ===

The core idea of speculative execution is simple and easy to understand. For example, let us consider two accesses a and b in program order. Access a has a large latency, whereas b is simply a load access. Also, the consistency model needs a to complete before b can finish. Speculative execution for load accesses proceeds as follows. The processor obtains the value returned by access b before a completes, and proceeds using it. When a completes, the processor checks whether the speculated return value of b is the same as the actual value obtained after a completes. If they match, the speculation was correct and the processor proceeds without undoing any instructions. However, if the values do not match, we need to redo access b to get the correct value.<ref>[http://www.google.com/url?sa=t&rct=j&q=&esrc=s&source=web&cd=2&ved=0CDUQFjAB&url=http%3A%2F%2Fciteseerx.ist.psu.edu%2Fviewdoc%2Fdownload%3Fdoi%3D10.1.1.57.1560%26rep%3Drep1%26type%3Dpdf&ei=lbB8T8DZKtKCtgeJ7v3mDA&usg=AFQjCNElr6qsvxH41INvR6tY12S4mDwLeQ&sig2=dk_gxOC4J7VEPz3I5EOgXA Speculative Execution in Real-Time Systems]</ref> These steps in speculative execution can be summarized by 3 different mechanisms, namely:

a) The speculative mechanism

b) The detection mechanism

c) The correction mechanism

'''a) The speculative mechanism -''' In the speculative mechanism, the most appropriate thing to do is to perform the access, get the returned value and proceed to use it. If there is a cache hit, the value is returned instantly; if it is a miss, the access is pipelined with other accesses, just as we have seen in prefetching.

'''b) The detection mechanism -''' One way to detect whether the speculated value is correct is to redo the access at the point where the consistency model would have allowed it to be done without speculation. This, however, is not the only way. If we monitor the coherence transactions on that particular location in the cache to see if there is an invalidation, the detection process can be faster. This is another improvement over the prefetch technique: in speculative execution, the cache is accessed only once rather than twice, which is the norm in the prefetch technique. Going back to our example of two accesses a and b, the consistency model requires that access b be deferred until access a is complete. However, under speculative execution, b can proceed without waiting for a to complete. The detection mechanism works by monitoring whether there is an update or invalidation at location b, which is an indication that the speculation is incorrect. If there are no update or invalidation messages, we can be assured that the speculated value was correct.

'''c) The correction mechanism -''' Once the detection mechanism determines that the speculated value is incorrect, the correction mechanism comes into play. As its first step, the correction mechanism discards the computation that used the speculated value. The load accesses and the computations after the speculated value can be discarded too, just like the correction mechanism used in processors when a branch prediction has gone wrong.
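The three mechanisms can be tied together in a toy model of a speculative-load buffer. The class and method names below are hypothetical and the model is a sketch only: it tracks loads in program order, treats any invalidation or update to a tracked address as a failed speculation, and squashes that load together with everything after it.

```python
class SpeculativeLoadBuffer:
    """Toy model of speculation, detection and correction (hypothetical names)."""

    def __init__(self, cache):
        self.cache = cache   # address -> value currently cached
        self.entries = []    # speculative loads in program order

    def speculative_load(self, addr):
        # Speculative mechanism: perform the access and use the value at once.
        value = self.cache[addr]
        self.entries.append({"addr": addr, "value": value})
        return value

    def on_coherence_message(self, addr):
        # Detection mechanism: an invalidation or update to a tracked address
        # means the speculated value may be wrong.
        for i, entry in enumerate(self.entries):
            if entry["addr"] == addr:
                # Correction mechanism: discard this load and all later ones;
                # the caller is expected to reissue them.
                squashed = [e["addr"] for e in self.entries[i:]]
                del self.entries[i:]
                return squashed
        return []

    def retire_oldest(self):
        # No message arrived before the consistency model allowed the load,
        # so the speculated value stands and the entry is released.
        return self.entries.pop(0)["addr"]


buf = SpeculativeLoadBuffer({"C": 1, "D": 5, "E": 7})
buf.speculative_load("C")
buf.speculative_load("D")
buf.speculative_load("E")
to_redo = buf.on_coherence_message("D")  # invalidation for D arrives
retired = buf.retire_oldest()            # C was never disturbed
```

Monitoring coherence messages rather than repeating the access is what allows the cache to be accessed only once per load in this scheme.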


5) unlock L (hit)

The pipelining in both prefetching and speculative execution is the same, and thus we might expect the same level of performance. But in this case, read D does not cause a bottleneck, because under speculative execution its value can be consumed immediately, before the previous accesses commit. Thus we expect both the SC and RC implementations to finish in 104 clock cycles (analogous to 4 hits and 1 miss).

As soon as we know the address for the access, the load access can be issued under speculative execution, irrespective of the consistency model being supported. Speculative execution also imposes requirements on the system. All three mechanisms discussed need hardware support; implemented in software, they might not deliver the performance gain we expect.<ref>[http://impact.crhc.illinois.edu/ftp/conference/micro-93-suppression.pdf Speculative Execution Exception Recovery using Write-back Suppression]</ref>

=== Illustrative example ===

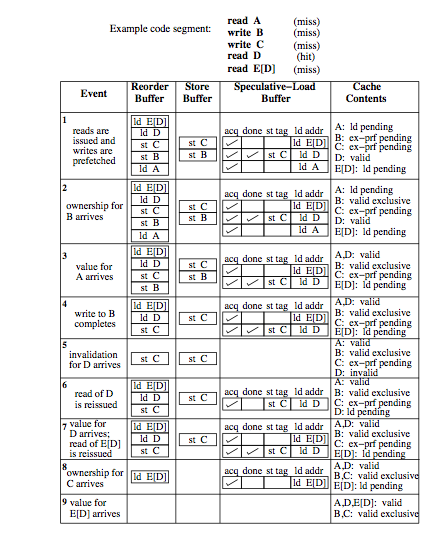

This is the example which we will be taking up for discussion to see how SE works | This is the example which we will be taking up for discussion to see how SE works: | ||

[[File:SE_example.png|600px|Organization of a load store functional unit]] | [[File:SE_example.png|600px|Organization of a load store functional unit]] | ||

In the above figure, the contents of various buffers are showed along with the cache content for each | In the above figure, the contents of various buffers are showed along with the cache content for each event that occurs. All three mechanisms which we have discussed will be traced in the example. The [http://en.wikipedia.org/wiki/Instruction_prefetch prefetching reorder] buffer holds the instructions that are fetched and decoded. Tracing through the set of events, initially the loads and prefetches (exclusive mode) are issued for the corresponding stores. In the meantime, while the store buffer is working on the store operations, the [http://www.csl.cornell.edu/courses/ee572/gharachorloo.icpp91.pdf speculative load buffer] has three loads. The returned value of read D has already been used by the processor. The second event indicates an ownership for B, however it is not immediately completed. Its completion has been reordered and delayed by the reorder buffer. The third event indicates that the value of A arrives and the entries for A are purged from both the speculative-load and reorder buffers. Once the load A completes, the reorder buffer signals the store buffer to allow the store B to complete, and this is a hit because the value is already cached and thus finishes quickly. Store C is next to be served in the reorder buffer and its access is merged with the previous prefetch request for the same location.<ref>[http://www.cs.waikato.ac.nz/timewarp/wengine/papers/gc99_1/ Data and Control Speculative Execution]</ref> | ||

Now to make things interesting, let us assume that there is an invalidation for D. This would cause a mismatch in the value speculated for D and hence the load D and the instructions following it are purged, indicated by event 5. The 6th event shows the correction mechanism fetching the two instructions again; load D will once again be a speculative event because store C has not finished. The 7th event shows the new value in location D, and since it is cached and its value known, E[D] proceeds. The 8th event shows that store C has finished, and since it does not affect D, the speculative value is correct and is retired from the reorder and speculative-load buffers. The last event signifies the completion of E[D].

== Future directions and ambitious implementations ==

The paper by Adve and Hill <ref>[ftp://ftp.cs.wisc.edu/markhill/Papers/icpp90_seqcon.pdf Implementing Sequential Consistency In Cache-Based Systems]</ref> proposes an implementation for sequential consistency that is more efficient than conventional implementations. Their scheme requires an invalidation-based cache coherence protocol. At points where a conventional implementation stalls for the full latency of pending writes, their implementation stalls only until ownership is gained. To make the implementation satisfy sequential consistency, the new value written is not made visible to other processors until all previous writes by this processor have completed. The gains from this are expected to be limited, however, since the latency of obtaining ownership is often only slightly smaller than the latency for the write to complete. In addition, the proposed scheme has no provision for hiding the latency of read accesses. Since the visibility-control mechanism reduces the stall time for writes only slightly and does not affect the stall time for reads, its performance will not be much better than that of conventional implementations. In contrast, the prefetch and speculative load techniques provide much greater opportunity for buffering and pipelining of read and write accesses.

The paper by Stenstrom <ref>[http://ieeexplore.ieee.org/xpl/login.jsp?tp=&arnumber=223075&url=http%3A%2F%2Fieeexplore.ieee.org%2Fxpls%2Fabs_all.jsp%3Farnumber%3D223075 A latency-hiding scheme for multiprocessors with buffered multistage networks]</ref> proposes a mechanism for guaranteeing access order at the memory instead of at the processor. Each request contains a processor identification and a sequence number. Consecutive requests from the same processor get consecutive sequence numbers. Each memory module has access to a common data structure called next sequence-number table (NST). The NST contains P entries, one entry per processor. Each entry contains the sequence number of the next request to be performed by the corresponding processor. This allows the mechanism to guarantee that accesses from each processor are kept in program order. Theoretically, this scheme can enhance the performance of sequential consistency. However, the major disadvantage is that caches are not allowed. This can severely hinder the performance when compared to implementations that allow shared locations to be cached.

A number of relaxed memory consistency models have been proposed to gain significant improvements in performance. One disadvantage of the relaxed memory consistency models is that they present a more complex programming model to the user. The cost of this added performance benefit, however, is the extra hardware complexity that comes with it. The prefetch technique is simple and can easily be absorbed into modern multiprocessor architectures, whereas the speculative execution technique is harder to incorporate. Things get more complicated when the speculative execution technique is to be incorporated into multiprocessor systems rather than uniprocessor systems. In the future, we might expect better implementations of the speculative execution technique which will make this transition easier.

== Glossary ==

'''Prefetching:''' In computer architecture, instruction prefetch is a technique used in microprocessors to speed up the execution of a program by reducing wait states. Modern microprocessors are much faster than the memory where the program is kept, meaning that the program's instructions cannot be read fast enough to keep the microprocessor busy. Adding a cache can provide faster access to needed instructions.

'''Branch prediction:''' In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g. an if-then-else structure) will go before this is known for sure. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors are crucial in today's pipelined microprocessors for achieving high performance.

'''Speculative execution:''' Speculative execution in computer systems is doing work, the result of which may not be needed. This performance optimization technique is used in pipelined processors and other systems.

'''Pipeline processing:''' Pipeline processing divides the handling of an instruction into stages (such as fetch, decode, and execute) so that several instructions can be in progress at the same time, each occupying a different stage.

'''Store buffer:''' The cache controller includes a store buffer to hold data before it is written to the cache.

'''GPU:''' Like the CPU, the GPU is a single-chip processor. However, the GPU is used primarily for computing 3D functions.

== Quiz ==

1) How does SC with prefetching perform?

a) Very bad.

b) Good for some cases and bad for other cases.

c) Can be adopted widely, has good performance.

d) There are better alternatives available.

2) Which of the following are types of prefetching?

a) Sequential.

b) Binding.

c) Non-binding.

d) Release.

3) What effect does prefetching have on speed of execution?

a) Speeds up.

b) Does not have any effect.

c) Degrades performance.

d) Does not speed up always.

4) Which of the following perform better than prefetching under SC?

a) Prefetching with Release Consistency.

b) Prefetching with Weak Ordering.

c) Speculative Execution.

d) All of the above give more or less the same performance as prefetching under SC.

5) Which of the following are true about prefetching?

a) The amount of lookahead we do for a prefetch needs to be controlled.

b) Prefetching in most cases boosts performance.

c) Prefetching scales considerably well with increasing program size.

d) None of the above are true.

== References ==

<references />

Latest revision as of 03:32, 25 April 2012

Prefetching and consistency models.

Prefetching

Introduction to prefetching

Almost all processors today use prefetching as a means to speed up execution. Primarily, prefetching is used to shorten the amount of time a processor is in the wait state by predicting which cache block would be accessed next, so that when a cache miss occurs, the old block in the cache can be immediately replaced with the block that is prefetched and hence decreasing the idle time of the processor. Performance in prefetching is best when it is done by following the program order. However, it need not always be that prefetching is done in program order; a processor trying to guess the result of a calculation during a complex branch prediction algorithm will need to anticipate the result and fetch the right set of instructions for execution. Things get more complex when it comes to graphical processing units or GPUs. Prefetching can take advantage of spatial coherence and the data that is prefetched are not a set of instructions, but instead they are texture elements that can be mapped to a polygon.<ref>Instruction prefetch wiki article.</ref>

Types of prefetching

Prefetching can be primarily classified based on whether it is binding or non-binding, and whether it is hardware or software controlled. Using a binding prefetch, the value of a later reference, like a register load, is bound at the time the prefetch completes. However, this comes with added restrictions because the value in the prefetch might not be accurate - there might be invalidations that can be caused by another processor in the time frame between prefetch and reference by the current processor. Coming to the non-binding prefetch case, the data is stored into the processor cache, and the coherence is maintained till the processor reads or writes the value. This approach, unlike binding prefetch, will not have an effect on the correctness of any consistency model. It can serve as a serious improvement in terms of performance.

The prefetch that is interesting to us is the hardware controlled and non-binding prefetch. Prefetching done in software involves significant effort from the programmer's side, and it may not be scalable as the length of the program increases. In comparison, the non-binding prefetch yields significantly more performance when compared to the binding prefetch. The major performance enhancement from prefetching comes by decreasing the memory latencies that are caused by all consistency models.

In the case of a read operation, the read prefetch is issued to bring the data into the cache in a read-only shared state. Since we are considering the non-binding prefetch, the read operation is guaranteed to return a correct value when it is finally allowed to perform, irrespective of when the prefetch actually completes. There is a catch, however: the prefetched copy may not stay valid until then. Consider a case where a write operation is performed on the memory location that was just read-prefetched. What happens next depends on whether an invalidation-based or an update-based coherence scheme is being used. If an update-based coherence scheme is being used, the other processor will send a bus update and the current processor can pick it up to update its value. If an invalidation-based coherence scheme is used, there will be a coherence miss and the value will be read again from the system memory, giving the illusion that the prefetch did not occur.

In the case of a write operation, to acquire the exclusive ownership of the line, a read-exclusive prefetch can be used. Since it is cached in the exclusive mode, a write operation can proceed without incurring an invalidate or an update on other caches which have cached the same line or block. The write operation finishes quickly because the value is already cached and there is no coherence miss, thus reducing the idle time of the processor. However, it is to be noted that the read-exclusive prefetch is only possible if the coherence scheme is invalidation-based and not update-based. The same logic of incorrectness applies here that we have seen in the read case: suppose that another processor asks for a write to the same block; in this case, the exclusive ownership will no longer be maintained. Instead the cache block will be invalidated.

How does prefetching work?

In a multiprocessor environment, each processor has a load and a store buffer. Consistency models work by delaying accesses to memory locations until the previous requests have finished. We can implement the prefetch by making the hardware automatically issue a prefetch for those read or write requests that are waiting in the load or store buffer and cannot be honored right away because of the delays imposed by the consistency model.

Let us trace through the steps a prefetch request would follow. First, it looks up the cache to see whether the block is already present. If it is indeed available, the request is discarded. If it is not present, the prefetch is issued to memory. Depending on the type of prefetch technique we are using, the response to the prefetch may or may not be immediate. The response is then placed in the cache. This process can be optimized by detecting duplicate requests to the same location and combining them with the outstanding prefetch request, thereby preventing duplicate requests from being issued to memory. This mechanism also places requirements on the system. First, it requires hardware-coherent caches, and the block that is prefetched should fit into the cache. Second, for the write prefetch to work efficiently, there should be an invalidation-based coherence scheme in place. Additionally, the bus bandwidth should be high in order to handle several prefetch requests at the same time. The caches will also be busier with read/write transactions when prefetching is implemented.<ref>Effective Instruction Prefetching in Chip Multiprocessors for Modern Commercial Applications</ref>
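The steps above can be sketched in a few lines of Python. This is only an illustrative model under stated assumptions, not the hardware described in the cited work: the class name `PrefetchUnit`, its methods, and the use of a set and a dictionary to stand in for the cache and the table of outstanding requests are all hypothetical.

```python
class PrefetchUnit:
    """Toy model of the prefetch request path (all names are hypothetical)."""

    def __init__(self):
        self.cache = set()          # block addresses currently in the cache
        self.pending = {}           # block address -> number of merged requests
        self.issued_to_memory = []  # requests that actually went to memory

    def request(self, block):
        # Step 1: look up the cache; a block that is present needs no prefetch.
        if block in self.cache:
            return "discarded"
        # Optimization: merge with an outstanding prefetch for the same block.
        if block in self.pending:
            self.pending[block] += 1
            return "merged"
        # Step 2: otherwise issue the prefetch to memory.
        self.pending[block] = 1
        self.issued_to_memory.append(block)
        return "issued"

    def fill(self, block):
        # Step 3: the response arrives and is placed in the cache.
        # Returns how many requests this single response satisfies.
        satisfied = self.pending.pop(block)
        self.cache.add(block)
        return satisfied


unit = PrefetchUnit()
first = unit.request(0x40)    # not cached, goes to memory
second = unit.request(0x40)   # duplicate, merged with the outstanding request
satisfied = unit.fill(0x40)   # one memory response serves both requests
third = unit.request(0x40)    # now cached, so the prefetch is discarded
```

Merging keeps the number of memory requests at one per block, which matters because the text notes that prefetching already increases the load on the bus and the caches.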

The amount of lookahead we do for a prefetch needs to be controlled, in the sense that if a higher number of blocks are prefetched than are required, then the processor might not ever use them and it would also result in increased cache misses of actually required blocks. Thus the extent to which lookahead is done should dynamically adjust depending on whether the processor actually uses the blocks or just evicts them without a read or a write reference to it.
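One possible way to adjust the lookahead dynamically is sketched below. The policy (grow by one when a prefetched block is used, halve when a prefetched block is evicted unused), the bounds, and the name `LookaheadController` are assumptions chosen for illustration; the text does not prescribe a particular policy.

```python
class LookaheadController:
    """Hypothetical feedback controller for the prefetch lookahead depth."""

    def __init__(self, depth=2, min_depth=1, max_depth=8):
        self.depth = depth
        self.min_depth = min_depth
        self.max_depth = max_depth

    def on_prefetched_block_used(self):
        # The processor referenced a prefetched block: look further ahead.
        self.depth = min(self.max_depth, self.depth + 1)

    def on_prefetched_block_evicted_unused(self):
        # A prefetched block was evicted without being read or written:
        # the lookahead was too aggressive, so back off quickly.
        self.depth = max(self.min_depth, self.depth // 2)


ctrl = LookaheadController()
ctrl.on_prefetched_block_used()            # depth 2 -> 3
ctrl.on_prefetched_block_used()            # depth 3 -> 4
ctrl.on_prefetched_block_evicted_unused()  # depth 4 -> 2
```

Backing off faster than growing is a design choice in this sketch: useless prefetches cost both bandwidth and cache space, so they are penalized more heavily than useful ones are rewarded.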

Prefetching with Sequential Consistency

Sequential Consistency (SC) might cause a reader to assume that its implementation will need a single memory module. However, this is not true. Both SC and the relaxed memory models allow many optimizations within them in order to deliver high performance. SC and the relaxed models allow non-binding prefetching along with coherent caching.<ref>Cache consistency and sequential consistency</ref>

Let us take a look at an example and see how SC compares with prefetching under SC.

The assumptions being made are that the processor has non-blocking reads with branch prediction machinery, an invalidation-based cache coherence scheme is in place, if there is a cache hit the latency is 1 clock cycle, and the cache miss incurs a latency of 100 clock cycles.

The following code segment represents a common producer scenario:

1) lock L (miss)
2) write A (miss)
3) write B (miss)
4) unlock L (hit)

Measuring how the code performs under SC only

Since we are considering a system with SC, we know that an access to memory cannot be issued until the previous access has completed. The first three accesses are misses and they occur one after the other without any interleaving, which gives us a total latency of 100 x 3 = 300 clock cycles. The fourth operation however is a cache hit, thus adding only 1 clock cycle. The four accesses take up 301 clock cycles to complete.

Measuring how the same code performs under prefetching with SC

With prefetching we can get significant improvement in terms of performance. Since the system has the ability to do prefetching and the processor has branch prediction machinery, let us assume that the branch predictor follows the path in which the lock L has been successfully acquired. The lookahead operates and A and B are prefetched into the cache in the read-exclusive mode. While the lock L access is being serviced, the prefetches for A and B are serviced in parallel with it, so the write accesses to A and B later hit in the cache. Thus the total so far is 102 clock cycles (1 miss for the lock followed by 2 hits for the writes). The fourth memory access is a hit and takes only 1 clock cycle to finish, giving a total of 103 clock cycles.

Comparing the 103 clock cycles for prefetching under SC with the 301 clock cycles for SC only, we see that there is a difference of nearly 200 clock cycles, which is a significant improvement. As the number of accesses increases, the difference is only going to get larger until a certain point where "SC only" would no longer be a viable option.

Another example showing how SC vs prefetching with SC performs

The same assumptions are being made which we made for the first example.

The following code segment represents a common consumer scenario:

1) lock L (miss)
2) read C (miss)
3) read D (hit)
4) read E[D] (miss)
5) unlock L (hit)

Measuring how the code performs under SC only

Under the critical section we see that there are three read accesses. Read D is assumed to be a hit and read E[D] may be a hit or a miss depending on the value of D. Doing the same math that we have done above, under SC only the total is 100 + 100 + 1 + 100 + 1 = 302 clock cycles.

Measuring how the same code performs under prefetching with SC

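With prefetching, read C is prefetched while lock L is outstanding and therefore becomes a hit, but the address of E[D] is not known until D has been read, so E[D] still misses. The total time taken is therefore 203 clock cycles (2 misses and 3 hits).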

Thus again, we see that prefetching with SC performs better than "SC only".
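The cycle counts in the two examples above can be reproduced with a small model. This is a sketch under simplifying assumptions: the hit and miss latencies are the 1 and 100 cycles assumed in the text, accesses under SC only are fully serialized, and with prefetching any miss whose address is known early is overlapped with an earlier outstanding miss. The `known_early` flag and the function names are illustrative, not part of the original description.

```python
HIT, MISS = 1, 100  # latencies in clock cycles, as assumed in the text

# Each access is (name, is_miss, known_early). `known_early` is a modelling
# assumption: it is False when the address depends on an earlier load (E[D]).
EXAMPLE_1 = [("lock L", True, True), ("write A", True, True),
             ("write B", True, True), ("unlock L", False, True)]
EXAMPLE_2 = [("lock L", True, True), ("read C", True, True),
             ("read D", False, True), ("read E[D]", True, False),
             ("unlock L", False, True)]


def sc_only_cycles(accesses):
    """SC without prefetching: every access waits for the previous one."""
    return sum(MISS if is_miss else HIT for _, is_miss, _ in accesses)


def sc_prefetch_cycles(accesses):
    """SC with prefetching: a miss whose address is known early is overlapped
    with an earlier miss and is then serviced as a hit."""
    total = 0
    earlier_miss = False
    for _, is_miss, known_early in accesses:
        if is_miss and not (earlier_miss and known_early):
            total += MISS        # pays the full miss latency
            earlier_miss = True
        else:
            total += HIT         # a real hit, or a miss hidden by prefetching
    return total


print(sc_only_cycles(EXAMPLE_1), sc_prefetch_cycles(EXAMPLE_1))  # 301 103
print(sc_only_cycles(EXAMPLE_2), sc_prefetch_cycles(EXAMPLE_2))  # 302 203
```

The second example shows the limit of prefetching: because the address of E[D] is only known after D is read, its miss cannot be overlapped with the miss on the lock.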

Performance of prefetching with SC and why the idea has not been widely adopted

We have seen two examples where prefetching with SC does a better job than "SC only". However, what we need to look at is whether this is the maximum performance which we can extract. Consider the second example where we had 3 reads in a critical section. The address which E[D] is trying to access will depend on the value of D. Read D was a cache hit, and E[D] is not allowed to perform under SC until the read C finishes. Thus, even though prefetching increases performance by reducing the time delay caused due to consistency constraints, it does not deal with situations where out of order usage of the values returned by memory access is critical for the processor to proceed efficiently. In other words, prefetching fails to do a good job when out of order consumption of values is important.<ref>Memory access dependencies in shared-memory multiprocessors</ref><ref>Performance evaluation of memory consistency models for shared-memory multiprocessors</ref>

Even though the main use of prefetching is to service requests faster than the delay constraints imposed by the memory consistency models would otherwise allow, correctness needs to be maintained at all times. The number of times a prefetched value is incorrect should be small, and luckily this is indeed true in real world systems for several reasons. The invalidation of the prefetched values is loosely coupled with the delay to obtain a correct execution sequence. These delays are imposed by the memory consistency models, and in SC, which is the strictest of all models, many delays are imposed. Usually, the time when one process releases a synchronization is much earlier than the time when another process tries to acquire it. Thus the delays introduced by SC are unnecessary and therefore tax the system's performance.<ref>Memory access buffering in multiprocessors</ref>

Such cases are not rare to find in real applications and hence prefetching cannot be used all the time. Therefore, prefetching under SC was not widely adopted and hence new schemes had to be developed in order to overcome the drawbacks of the current method.

Solution#1 Prefetching with Release Consistency

Prefetching under release consistency (RC) performs at least as well as prefetching under SC. Let us evaluate its performance in the two examples we looked at previously. We follow the same assumptions about the system which we have followed previously.

The first example

The following code segment represents a common producer scenario:

1) lock L (miss)
2) write A (miss)
3) write B (miss)
4) unlock L (hit)

Measuring how the code performs under RC only

Since we are considering a system with RC, we know that write accesses to memory are delayed until the lock access has been performed. The lock L operation takes 100 clock cycles to finish, followed by another 100 + 1 cycles for write A and write B to finish (the two misses are pipelined), and then finally the fourth operation takes 1 clock cycle to finish because it is a hit. Thus the total is 202 clock cycles under the "RC only" approach.

Measuring how the same code performs under prefetching with RC

With prefetching, we can get significant improvement in terms of performance, at least compared to SC. Since the system now has the ability to do prefetching and the processor has branch prediction machinery, let us assume that the branch predictor follows the path in which the lock L has been successfully acquired. The lookahead operates and A and B are prefetched into the cache in the read-exclusive mode. While the lock L access is being serviced, the prefetches for A and B are serviced in parallel with it, so the write accesses to A and B later hit in the cache. Thus the total so far is 102 clock cycles (1 miss for the lock followed by 2 hits for the writes). The fourth memory access is a hit and takes only 1 clock cycle to finish, giving a total of 103 clock cycles.

In this example, we found that prefetching with SC and prefetching with RC take the same amount of time.

The second example

The following code segment represents a common consumer scenario:

1) lock L (miss)
2) read C (miss)
3) read D (hit)
4) read E[D] (miss)
5) unlock L (hit)

Measuring how the code performs under RC only

Under the critical section we see that there are three read accesses. Read D is assumed to be a hit and read E[D] may be a hit or a miss depending on the value of D. Doing the same math that we have done above, under "RC only" the total is 203 clock cycles.

Measuring how the same code performs under prefetching with RC

With prefetching, we see that the total time taken will be 202 (2 misses & 2 hits pipelined).

Thus again, we see that prefetching with RC performs better than "RC only".

However, we have not got a significant performance gain in the second example. Thus even though RC allows more relaxation on the order of operations issued to memory, the gain is not much. Relaxed models offer more options on the hardware implementation perspective than SC, so we might get tempted to conclude that hardware must use the relaxed models instead of SC. On the other hand, the performance gain we expect to get is not being delivered under prefetching with RC, thus it cannot be justified to be a good approach either, even though it performs better than prefetching under SC.

Also, the same argument that we used for SC by mentioning that it taxes the system by causing unwanted delays to ensure correctness can be applied to the RC model as well. Though unlike SC, it may not tax the system's performance much, because in RC the delays are only inserted at synchronization points. Even then it forms a part of the argument that prefetching under RC is not a very good method.

Solution#2 Speculative Execution

Speculative execution does not remove the constraints that relaxed and SC implementations impose; rather, it allows those implementations to be more aggressive. Speculative execution allows the processor to execute instructions eagerly. However, the same strictness applies when it comes to being correct. That is, the instructions must be undone when speculations prove incorrect. This scenario can be found in wrongly predicted branches. When the processor knows that an instruction cannot be wrong, it commits the instruction, which frees up the resources that were being used for it. There are two strict rules about instructions being committed: <ref>Speculative Execution wiki article</ref>

- All instructions prior to the current instruction must be committed, and
- The current instruction operation commits.

For example, when we consider a load or store operation, we can be assured that it commits when it does a read or a write on a memory value. With speculative execution, both relaxed and SC implementations can perform operations out-of-order and in a speculative fashion. Consider the following comparison of relaxed vs. SC performance:

read A (miss)
read B (hit)

When dealing with SC, read A occurs first, gets committed and then read B gets committed. However, with relaxed models, read B can get committed before read A gets committed. The difference in performance between relaxed and SC is very narrow. However, the measurement of the difference in performance depends upon benchmarks, latencies, etc.

The figure below shows an example implementation of a SE system.<ref>Two Techniques to Enhance the Performance of Memory Consistency Models by Kourosh Gharachorloo, Anoop Gupta, and John Hennessy</ref>

Idea behind speculative execution

The core idea which forms the crux of speculative execution is simple and easy to understand. For example, let us consider there are two accesses a and b in the program order. a is considered to have a large latency whereas b is simply a load access. Also, the consistency model needs a to complete before b can finish. The speculative execution for load accesses proceeds as follows. The processor obtains the value returned by access b before a can complete and it proceeds. Now when a completes, the processor checks to see if the speculated return value of b before the completion of a is same as the actual value obtained after a completes. If they both match then the speculation was correct and the processor proceeds without any undo of instructions. However, if the values do not match then we need to redo access b to get the correct value <ref>Speculative Execution in Real-Time Systems</ref>. These steps in speculative execution can be summarized by 3 different mechanisms. Namely,

a) The speculative mechanism
b) The detection mechanism
c) The correction mechanism

a) The speculative mechanism - In the speculative mechanism, the most appropriate thing to do would be to perform the access, get the returned value and proceed to use the returned value. If there is a cache hit, the value will be returned instantly, otherwise if it is a miss the access is pipelined with other accesses just like we have seen in prefetching.

b) The detection mechanism - The way to detect whether the speculated value is correct is to redo the access at the point where the consistency model would have allowed it to be done without speculation. This, however, is not the only way. If we monitor the coherence transactions on that particular location in the cache to see if there is an invalidation, the detection process can be faster. Thus another improvement over the prefetch technique is that, in speculative execution, the cache is accessed only once rather than twice, which is the norm in the prefetch technique. Going back to our example of two accesses a and b, the consistency model requires that access b is deferred until access a is complete. However, under speculative execution, b can proceed without waiting for a to complete. The detection mechanism works by monitoring whether there is an update or invalidation at location b, which is an indication that the speculation is incorrect. If there are no update or invalidation messages, we can be assured that the speculated value was correct.

c) The correction mechanism - Once the detection mechanism determines that the speculated value is incorrect, the correction mechanism comes into play. The first step that the correction mechanism does is that it discards the computation of the speculated value. The load accesses and the computations after the speculated value can be discarded too, just like the correction mechanism used in processors when there is a branch prediction that has gone wrong.
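The three mechanisms can be illustrated with a toy model of a speculative-load buffer. This is a minimal sketch, assuming a dictionary stands in for the coherent cache and that an invalidation is delivered as a method call; the class and method names are hypothetical and do not come from the cited papers.

```python
class SpeculativeLoadBuffer:
    """Toy model of speculation, detection and correction for loads."""

    def __init__(self, memory):
        self.memory = memory  # dict: address -> value (stands in for the cache)
        self.entries = []     # (address, speculated value), in program order

    def speculative_load(self, addr):
        # Speculative mechanism: perform the load early and use its value.
        value = self.memory[addr]
        self.entries.append((addr, value))
        return value

    def on_invalidation(self, addr):
        # Detection mechanism: an invalidation (or update) for a location that
        # still has a speculative entry means the speculated value may be wrong.
        for index, (entry_addr, _) in enumerate(self.entries):
            if entry_addr == addr:
                # Correction mechanism: discard that load and everything
                # after it in program order; they must be re-executed.
                squashed = [a for a, _ in self.entries[index:]]
                del self.entries[index:]
                return squashed
        return []  # no speculative load affected, nothing to undo

    def retire_oldest(self):
        # Called once the consistency model would have allowed the load.
        return self.entries.pop(0)


memory = {"A": 1, "D": 5, "E": 7}
buf = SpeculativeLoadBuffer(memory)
for addr in ("A", "D", "E"):
    buf.speculative_load(addr)

memory["D"] = 6                      # another processor writes D
squashed = buf.on_invalidation("D")  # load D and the later load E are purged
reloaded = buf.speculative_load("D") # the replayed load sees the new value
```

Squashing every load after the mismatched one mirrors the recovery used for a mispredicted branch, as the text describes; a real design could be more selective, at the cost of tracking dependences.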

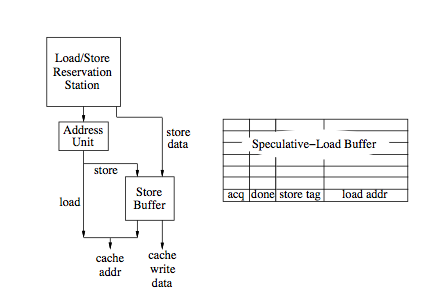

The figure below shows the organization of a load/store functional unit of an SE system.

The speculative mechanism is superior to the prefetch mechanism (both under SC and RC) by giving an opportunity to use out of order speculated values.

Referring back to the example we discussed while talking about performance of prefetching with RC/SC

The following code segment represents a common consumer scenario:

1) lock L (miss)
2) read C (miss)
3) read D (hit)
4) read E[D] (miss)
5) unlock L (hit)

The pipelining in both prefetching and speculative execution will be the same, and thus we might expect the same level of performance. In this case, however, read D does not cause a bottleneck, because under speculative execution its value can be consumed immediately, before the previous accesses commit. Thus we expect both the SC and RC implementations to finish in 104 clock cycles (analogous to 4 hits and 1 miss).

As soon as we know the address for the access, the load access can be issued under speculative execution irrespective of the consistency model that is being supported. Even speculative execution will impose requirements on the system. For all the three mechanisms discussed, we expect hardware support, else we might not get the performance gain that we expect if we implement the same in software.<ref>Speculative Execution Exception Recovery using Write-back Suppression</ref>

Illustrative example

This is the example which we will be taking up for discussion to see how SE works:

In the above figure, the contents of various buffers are shown along with the cache content for each event that occurs. All three mechanisms which we have discussed will be traced in the example. The prefetching reorder buffer holds the instructions that are fetched and decoded. Tracing through the set of events, initially the loads and prefetches (exclusive mode) are issued for the corresponding stores. In the meantime, while the store buffer is working on the store operations, the speculative load buffer has three loads. The returned value of read D has already been used by the processor. The second event indicates an ownership for B; however, it is not immediately completed. Its completion has been reordered and delayed by the reorder buffer. The third event indicates that the value of A arrives and the entries for A are purged from both the speculative-load and reorder buffers. Once the load A completes, the reorder buffer signals the store buffer to allow the store B to complete, and this is a hit because the value is already cached and thus finishes quickly. Store C is next to be served in the reorder buffer and its access is merged with the previous prefetch request for the same location.<ref>Data and Control Speculative Execution</ref>

Now to make things interesting, let us assume that there is an invalidation for D. This would cause a mismatch in the value speculated for D and hence the load D and the instructions following it are purged, indicated by event 5. The 6th event shows the correction mechanism fetching the two instructions again; load D will once again be a speculative event because store C has not finished. The 7th event shows the new value in location D, and since it is cached and its value known, E[D] proceeds. The 8th event shows that store C has finished, and since it does not affect D, the speculative value is correct and is retired from the reorder and speculative-load buffers. The last event signifies the completion of E[D].

Future directions and ambitious implementations

The paper by Adve and Hill <ref>Implementing Sequential Consistency In Cache-Based Systems</ref> proposes an implementation of sequential consistency that is more efficient than conventional implementations. Their scheme requires an invalidation-based cache coherence protocol. At points where a conventional implementation stalls for the full latency of pending writes, their implementation stalls only until ownership is gained. To make the implementation satisfy sequential consistency, the new value written is not made visible to other processors until all previous writes by this processor have completed. The gains from this are expected to be limited, however, since the latency of obtaining ownership is often only slightly smaller than the latency for the write to complete. In addition, the proposed scheme has no provision for hiding the latency of read accesses. Since the visibility-control mechanism reduces the stall time for writes only slightly and does not affect the stall time for reads, its performance will not be much better than that of conventional implementations. In contrast, the prefetch and speculative load techniques provide much greater opportunity for buffering and pipelining of read and write accesses.
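The argument that the gain is limited can be made concrete with a small back-of-the-envelope model. The cycle counts below are arbitrary illustrative numbers, not measurements from either paper, and the helper `write_stall` is hypothetical; the model simply assumes the processor stalls once per write, either until the write completes or until ownership is gained.

```python
def write_stall(writes, wait_for):
    """Total cycles stalled if the processor waits for `wait_for` on each write."""
    return sum(w[wait_for] for w in writes)


# Hypothetical latencies in cycles: ownership arrives only slightly
# earlier than the write completes.
writes = [
    {"ownership": 90, "completion": 100},
    {"ownership": 85, "completion": 100},
    {"ownership": 95, "completion": 100},
]

conventional = write_stall(writes, "completion")       # stall for full write latency
visibility_control = write_stall(writes, "ownership")  # stall only until ownership
saving = 1 - visibility_control / conventional         # fraction of stall removed
```

With these assumed numbers the write stall drops by only about a tenth, and read stalls are untouched, which is the point the paragraph above makes.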

The paper by Stenstrom <ref>A latency-hiding scheme for multiprocessors with buffered multistage networks</ref> proposes a mechanism for guaranteeing access order at the memory instead of at the processor. Each request contains a processor identification and a sequence number. Consecutive requests from the same processor get consecutive sequence numbers. Each memory module has access to a common data structure called next sequence-number table (NST). The NST contains P entries, one entry per processor. Each entry contains the sequence number of the next request to be performed by the corresponding processor. This allows the mechanism to guarantee that accesses from each processor are kept in program order. Theoretically, this scheme can enhance the performance of sequential consistency. However, the major disadvantage is that caches are not allowed. This can severely hinder the performance when compared to implementations that allow shared locations to be cached.
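The sequence-number check described above can be sketched as follows. This is a simplified model under stated assumptions: a single `OrderedMemory` object (a hypothetical name) stands in for all memory modules sharing one NST, sequence numbers are assumed to start at 0, and only writes are modelled.

```python
class OrderedMemory:
    """Hypothetical model of memory-side ordering with a next sequence-number table."""

    def __init__(self, num_processors):
        self.nst = [0] * num_processors  # one entry per processor
        self.pending = {}                # (pid, seq) -> (addr, value), held back
        self.cells = {}                  # memory contents
        self.performed = []              # (pid, seq) in the order performed

    def receive(self, pid, seq, addr, value):
        # Buffer the request, then perform every request from this
        # processor whose sequence number is the next one expected.
        self.pending[(pid, seq)] = (addr, value)
        while (pid, self.nst[pid]) in self.pending:
            a, v = self.pending.pop((pid, self.nst[pid]))
            self.cells[a] = v
            self.performed.append((pid, self.nst[pid]))
            self.nst[pid] += 1


mem = OrderedMemory(num_processors=2)
mem.receive(pid=0, seq=1, addr="y", value=2)  # arrives early, so it is held
held_back = len(mem.performed) == 0
mem.receive(pid=0, seq=0, addr="x", value=1)  # unblocks both requests
```

Even though the second request from processor 0 reaches memory first, it is performed only after the first one, so accesses from each processor stay in program order.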

A number of relaxed memory consistency models have been proposed to gain significant improvements in performance. One disadvantage of the relaxed memory consistency models is that they present a more complex programming model to the user. The cost of the added performance benefit, however, is extra hardware complexity. The prefetch technique is simple and can easily be absorbed into modern multiprocessor architectures, whereas the speculative execution technique is harder to adopt. Incorporating speculative execution is also more complicated in multiprocessor systems than in uniprocessor systems. In the future, we might expect better implementations of the speculative execution technique that make this transition easier.

Glossary

Prefetching: In computer architecture, instruction prefetch is a technique used in microprocessors to speed up the execution of a program by reducing wait states. Modern microprocessors are much faster than the memory where the program is kept, meaning that the program's instructions cannot be read fast enough to keep the microprocessor busy. Adding a cache can provide faster access to needed instructions.

Branch prediction: In computer architecture, a branch predictor is a digital circuit that tries to guess which way a branch (e.g. an if-then-else structure) will go before this is known for sure. The purpose of the branch predictor is to improve the flow in the instruction pipeline. Branch predictors are crucial in today's pipelined microprocessors for achieving high performance.

Speculative execution: Speculative execution in computer systems is doing work, the result of which may not be needed. This performance optimization technique is used in pipelined processors and other systems.

Pipeline processing: Pipeline processing divides the execution of an instruction into a sequence of stages, so that several instructions can be in different stages of execution at the same time.

Store buffer: The cache controller includes a store buffer to hold data before it is written to the cache.

GPU: Like the CPU, the GPU is a single-chip processor. However, the GPU is used primarily for computing 3D graphics functions.

Quiz

1) How does SC with prefetching perform?

a) Very badly. b) Well in some cases and badly in others. c) Can be adopted widely, has good performance. d) There are better alternatives available.

2) Which of the following are types of prefetching?

a) Sequential. b) Binding. c) Non-binding. d) Release.

3) What effect does prefetching have on speed of execution?

a) Speeds it up. b) Does not have any effect. c) Degrades performance. d) Does not always speed it up.

4) Which of the following perform better than prefetching under SC?

a) Prefetching with Release Consistency. b) Prefetching with Weak Ordering. c) Speculative Execution. d) All of the above give more or less the same performance as prefetching under SC.

5) Which of the following are true about prefetching?

a) The amount of lookahead we do for a prefetch needs to be controlled. b) Prefetching in most cases boosts performance. c) Prefetching scales considerably well with increasing program size. d) None of the above are true.

References

<references />