CSC/ECE 506 Fall 2007/wiki3 8 38: Difference between revisions

No edit summary |

|||

| (41 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

Wiki: SCI. The IEEE Scalable Coherent Interface is a superset of the SSCI protocol we have been considering in class. A lot has been written about it, but it is still difficult to comprehend. Using SSCI as a starting point, explain why additional states are necessary, and give (or cite) examples that demonstrate how they work. Ideally, this would still be an overview of the working of the protocol, referencing more detailed documentation on the Web. | ''Wiki: SCI. The IEEE Scalable Coherent Interface is a superset of the SSCI protocol we have been considering in class. A lot has been written about it, but it is still difficult to comprehend. Using SSCI as a starting point, explain why additional states are necessary, and give (or cite) examples that demonstrate how they work. Ideally, this would still be an overview of the working of the protocol, referencing more detailed documentation on the Web.'' | ||

__TOC__ | __TOC__ | ||

| Line 5: | Line 5: | ||

= Introduction = | = Introduction = | ||

In parallel systems, data can be obtained from a variety of sources, which includes local or remote memory modules, secondary storage devices and network interfaces. It would be beneficial if certain aspects of mechanisms that are required to gain access to data are standardized such that it allows easily expandable and flexible designs. Various portions of a parallel system may be modified or even upgraded without any effect on the rest of the design if the system adheres to such standards. Also, utilization of standard interfaces forwards the integration of components and subsystems from several different vendors into efficient and usable parallel systems. The Scalable Coherent Interface is one such standard which facilitates the implementation of large-scale cache-coherent parallel systems. | |||

= SSCI = | = Scalable Coherent Interface (SCI) = | ||

The scalable coherent interface has now become a chief hardware based approach to the cache coherence problem in shared memory multiprocessors. SCI is a directory based invalidate coherence protocol. The state of a cache block is distributed to the sharers of that block. Limits that are inherent in bus technology are easily avoided by SCI. The SCI protocol is to provide scalability, coherence and an interface. Scalability is to guarantee that the same mechanisms can be used in single processor systems and large highly parallel multiprocessors. Coherence is to guarantee efficient and integral use of cache memories in distributed shared memory. An interface provides a communication architecture that has multiple values to be brought into a single system and provide smooth inter-operation. | |||

===Goals of SCI=== | |||

*High Performance – For distributed or parallel applications, high communication performance must be obtained when using SCI. Usual aspects of such performance would be a low latency, low CPU overhead for operations dealing with communication and high sustained throughput. | |||

*Scalability – This is addressed in many ways. Some are | |||

**Performance scalability – as the number of nodes being added to the system increases. | |||

**Interconnect Distance scalability | |||

**Memory System scalability – chiefly of cache coherence protocols, which should not have limitations to the number of processors or modules in memory that it can handle. | |||

**Technological scalability – utilization of similar mechanisms in large, small-scale, loosely-coupled or tightly-coupled systems. Also, when new advances are made in technology, it must have the ability to readily make use of such advances. | |||

**Economic scalability – use of similar components and mechanisms in high-end, low-volume systems as well as low-end, high-volume systems. | |||

**Addressing capability has no short term practical limits. | |||

*Coherent Memory System – For the purpose of reduction of Average Access Time to data, caches are becoming more important each day for microprocessors. | |||

*Interface Characteristics – This specifies a standard interface to an interconnect that makes several devices to be connected or attached together and to interoperate. | |||

===Operation=== | |||

In SCI, every interface does not wait for the signal to propagate before it begins to send the next signal. Also, SCI utilizes multiple links so that, concurrently, several transfers can take place. | |||

Usually, a directory entry is in either of the two states : ‘home’ or ‘gone’. If the state is in ‘home’, then memory can immediately satisfy requests to a block as the block has not been cached by any processor. If the state is ‘gone’, then the block has been cached by a processor and might even be modified. Now, the directory contains a pointer to the first processor in the sharing list for this particular block. Hence, on requesting the data, memory returns the pointer to the first processor on the shared list rather than the data itself. The processor asking for the block now forwards its request to the processor on the top of the shared list. Now, the requesting processor adds itself into the shared list as the new head of the list. | |||

In the SCI protocol, any coherent transaction has three phases. | |||

Memory read – When a processor misses in its cache, It asks for the block in the home directory in memory. If the state of the memory is ‘home’, then the main memory replies with the block to the requesting processor. If the state is ‘gone’, then the main memory returns the head of the shared list of processors for that particular block. Then, memory updates its pointer and puts the requesting processor as the new head. | |||

Cache read – When memory returns a pointer toe the requesting processor instead of data, the processor forwards its request for the block to the head of the doubly linked list. When the cache receives, the head of the list returns the data which might have been modified. The head of the list changes its backward pointer to the requesting processor’s node. Now, the requesting processor becomes the head of the list and it has the cache block. | |||

Cleanup – If the cache miss from the requesting processor is a store, the processor has to first invalidate all other cached copies and then only proceed with the store. The new head gives an invalidate request to the next address on the list i.e. to its next processor. This processor invalidates and gives back a pointer to the next processor on its list. The head of the list uses this new pointer and sends it an invalidate request. This goes on until a NULL pointer is returned. This is the cleanup process for invalidation of other cache copies of the block. | |||

= Simple Scalable Coherent Interface (SSCI) = | |||

In directory-based approach, Every memory block has associated directory information; it keeps track of copies of cached blocks and their states. On a miss, it finds the directory entry, looks it up, and communicates only with the nodes that have copies (if necessary). | |||

There are mainly two approaches: Full-bit vector: For k processors, it maintains k presence bit and 1 dirty bit at the home node. Cache state is represented the same way as in bus-based designs (MSI, MESI, etc.). It has three cache states: EM (exclusive or modified), S (shared), U (unowned). Limitation is - Number of presence bits needed grows as the number of processors. | |||

Memory-based schemes store the information about all cached copies at the home node of the block. Cache-based schemes distribute information about copies among the copies themselves. The home contains a pointer to one cached copy of the block. Each copy contains the identity of the next node that has a copy of the block. The location of the copies is therefore determined through network transactions. | |||

Simple SCI (SSCI) retains similarity with full-bit vector protocol: MESI states in the cache; U, S, EM states in the memory directory; It replaces the presence bits with a pointer. | |||

== Why additional states are necessary? == | == Why additional states are necessary? == | ||

Performance & Correctness (Coherence & Consistency) requirement for the protocol necessitates the additional states in the protocol. | |||

=== Need for Busy state === | |||

On a scalable multiprocessors without coherent caches, the main-memory module determined the ordering of writes. The order that writes become visible to all processors is the order in which they reached memory. | |||

e.g. If two processors issue read-exclusive requests for a particular word, the home will provide the requestors with the location of | |||

the dirty node. But which request will reach the dirty node first cannot be guaranteed. This creates the need for additional busy state in the directory. | |||

This can be solved by | |||

# holding requests at home or requestor node and serve them in the order of their arrival | |||

# If the block is busy, reject any further request to it & that request will be retried later | |||

# If directory is busy forward request to dirty node & dirty node will serialize the request execution | |||

In non-coherent scalable multiprocessors, | |||

# For write atomicity in invalidation based protocol, current owner of block has to wait until it recieve all invalidation acks and then only it can read/write new value. | |||

# For write completion, current owner of the block need to wait for ack from memory | |||

In Origin, there are 3 busy states indicates that the directory has received the request but is waiting for completion hence another request for the same block won't be accepted. | |||

# Busy read | |||

# Busy read exclusive or upgrade | |||

# Busy uncached read: In case of DMA, if the memory block is being read, no other processor should be allowed to get write access to it. | |||

=== Poison state === | |||

To improve locality, origin relies on page migration. Hardware keeps reference counts on each page. On every access of memory count is incremented and compared with home node. If count is more than programmable threshold, hardware interrupts one of the local processors to copy the page. Block transfer engine “poison” the source page. Subsequent accesses by other processors receive a bus error and that removes the TLB entry for that location, read the location from the page table & update the TLB with new location. This approach is called - “Lazy TLB shootdown” which reduces the overall cost of migrating memory and changing the virtual-to-physical address mappings. | |||

=== Additional states === | |||

Additional states can be introduced into the sharing list. The SSCI protocol has only one kind of sharing list state : dirty. More options can be introduced into the list to improve performance of the overall shared multiprocessors. Although additional states do increment the complexity of the protocol being utilized and implemented, it would be very much beneficial to the system when the overall performance of the system is increased. The question “Why Additional States are necessary” is answered alongside the introduction of each new state i.e. explanation for the necessity of each and every state has been provided. | |||

Having the following states implemented in the protocol can improve performance. | |||

1. “fresh” and “gone” – Main memory may still be able to respond to a requesting processor if the memory can differentiate between ‘cached and unmodified’ and ‘cached and modified’. Even if the cached value is elsewhere, memory may still be able to respond to the request if the data remains unmodified. | |||

*The “fresh” state shows that memory still has an up-to-date copy of the block, even if the block has been cached and is being shared between processors. As long as the value remains unmodified by any of the processors sharing the data, the memory can keep responding by returning the requested data to the requesting processor. | |||

*The “gone” state indicates that the block has been cached and has been modified. This shows that main memory cant return the data anymore, but has to return the pointer that points to the head of the list. | |||

2. “clean lists” – Although the introduction of these states increase the complexity of handling each access, it does help with the overall performance. In this case, it may be helpful to differentiate the types of read accesses to the cache. One type of read access would be a ‘normal read-only’. Another type of read would be a ‘read with intent to modify later’. The memory in the state is ‘fresh’ when a read-fresh takes place. A read-clean leaves the memory in the ‘gone’ state. Just as with the dirty state, the read-clean allows a write to the block to proceed immediately. When a block is replaced in the cache and the cache is in the ‘clean’ state, no data needs to be written to memory. But with the dirty state, a write-back would be required even if the data had not been modified. | |||

== Examples == | == Examples == | ||

=== 1 DASH Cache Coherence Protocol === | |||

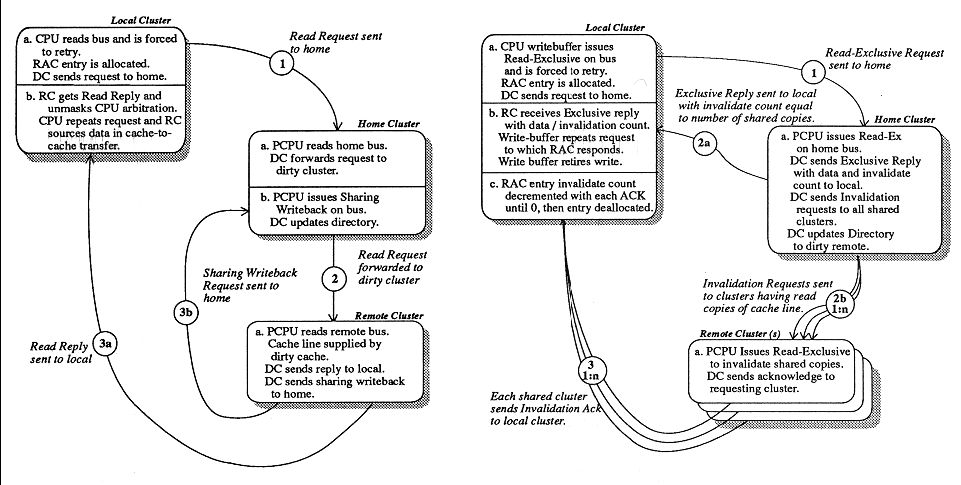

DASH (Directory Architecture for SHared memory) is a scalable shared-memory multiprocessor currently being developed at Stanford’s Computer Systems Laboratory. DASH protocol uses point-to-point messages sent between the processors and memories to keep caches consistent. | |||

The DASH coherence protocol is an invalidation-based ownership protocol. A memory block can be in one of three states as indicated by the associated directory entry: (i) uncached-remote, that is not cached by any remote cluster; (ii) shared-remote, that is cached in an unmodified state by one or more remote clusters; or (iii) dirty-remote, that is cached in a modified state by a single remote cluster. | |||

Please see in the figures below: Left - Flow of Read Request to remote memory with directory in dirty-remote state. Right - Flow of Read-Exclusive Request to remote memory with directory in shared-remote state. | |||

[[Image:DASH.jpg]] | |||

Write back request: A dirty cache line that is replaced must be written back to memory. If the home of the memory block is the local cluster, then the data is simply written back to main memory. If the home cluster is remote, then a message is sent to the remote | |||

home which updates the main memory and marks the block uncached-remote. | |||

= References = | = References = | ||

1 The DASH Cache Coherence Protocol | |||

Daniel Lenoski, James Laudon, Kourosh Gharachorloo, Anoop Gupta, John Hennessy | |||

May 1990 | |||

ACM SIGARCH Computer Architecture News , Proceedings of the 17th annual international symposium on Computer Architecture ISCA '90, | |||

Volume 18 Issue 3a | |||

Publisher: ACM Press | |||

2. 1596-1992 IEEE standard for scalable coherent interface (SCI). | |||

E-ISBN: 0-7381-1204-6 | |||

Year: 1993 | |||

Sponsored by: IEEE Computer Society | |||

3. The SGI Origin: A ccnuma Highly Scalable Server | |||

Laudon, J.; Lenoski, D.; | |||

Computer Architecture, 1997. Conference Proceedings. The 24th Annual International Symposium on | |||

June 2-4, 1997 Page(s):241 - 251 | |||

4. The Scalable Coherence Interface | |||

David.B.Gustavson | |||

September 1991 | |||

"The Scalable Coherence Interface and related standard projects" | |||

5. An analysis of the Scalable Coherent Interface | |||

Eric Rotenberg | |||

June 1995 | |||

An Analytical model of the SCI Coherence protocol | |||

6. Interfaces and Standards : SCI | |||

Behrooz. Parhami | |||

1999 | |||

Introduction to Parallel Processing: Algorithms and Architectures | |||

7. SCI: Scalable Coherent Interface | |||

Hermann Hellwagner | |||

1999 | |||

SCI: Scalable Coherent Interface - Architecture and software for high-performance Compute Clusters | |||

= Further reading = | = Further reading = | ||

1. The Stanford Dash multiprocessor | |||

Lenoski, D.; Laudon, J.; Gharachorloo, K.; Weber, W.-D.; Gupta, A.; Hennessy, J.; Horowitz, M.; Lam, M.S.; | |||

Computer | |||

IEEE Volume 25, Issue 3, March 1992 Page(s):63 - 79 | |||

Digital Object Identifier 10.1109/2.121510 | |||

= External links = | = External links = | ||

Latest revision as of 23:15, 18 October 2007

Wiki: SCI. The IEEE Scalable Coherent Interface is a superset of the SSCI protocol we have been considering in class. A lot has been written about it, but it is still difficult to comprehend. Using SSCI as a starting point, explain why additional states are necessary, and give (or cite) examples that demonstrate how they work. Ideally, this would still be an overview of the working of the protocol, referencing more detailed documentation on the Web.

Introduction

In parallel systems, data can be obtained from a variety of sources, including local or remote memory modules, secondary storage devices, and network interfaces. It is beneficial to standardize certain aspects of the mechanisms used to access data, so that designs are flexible and easily expandable. If a system adheres to such standards, portions of it can be modified or upgraded without affecting the rest of the design. Standard interfaces also ease the integration of components and subsystems from different vendors into efficient, usable parallel systems. The Scalable Coherent Interface is one such standard; it facilitates the implementation of large-scale cache-coherent parallel systems.

Scalable Coherent Interface (SCI)

The Scalable Coherent Interface (SCI) has become a leading hardware-based approach to the cache coherence problem in shared-memory multiprocessors. SCI is a directory-based invalidation coherence protocol in which the state of a cache block is distributed among the sharers of that block. SCI avoids the limits inherent in bus technology. The protocol aims to provide scalability, coherence, and an interface. Scalability means that the same mechanisms can be used in single-processor systems and in large, highly parallel multiprocessors. Coherence means efficient and consistent use of cache memories in distributed shared memory. The interface is a communication architecture that allows multiple devices to be brought into a single system and to interoperate smoothly.

Goals of SCI

- High Performance – Distributed or parallel applications must obtain high communication performance when using SCI. The usual measures are low latency, low CPU overhead for communication operations, and high sustained throughput.

- Scalability – This is addressed in several ways:

  - Performance scalability – performance should hold up as the number of nodes added to the system increases.

  - Interconnect distance scalability.

  - Memory system scalability – chiefly of the cache coherence protocol, which should not limit the number of processors or memory modules it can handle.

  - Technological scalability – use of the same mechanisms in large or small-scale, loosely coupled or tightly coupled systems. The interface must also be able to take advantage of new advances in technology readily.

  - Economic scalability – use of the same components and mechanisms in high-end, low-volume systems as well as low-end, high-volume systems.

  - Addressing capability with no short-term practical limits.

- Coherent Memory System – Caches are becoming more important each day for microprocessors as a way to reduce the average access time to data, so the memory system must keep them coherent.

- Interface Characteristics – SCI specifies a standard interface to an interconnect that allows several devices to be attached together and to interoperate.

Operation

In SCI, an interface does not wait for a signal to propagate before it begins to send the next signal. SCI also uses multiple links so that several transfers can take place concurrently.

A directory entry is usually in one of two states: ‘home’ or ‘gone’. In the ‘home’ state, the block has not been cached by any processor, so memory can satisfy requests to it immediately. In the ‘gone’ state, the block has been cached by a processor and may have been modified; the directory then holds a pointer to the first processor in the sharing list for the block. On a request, memory returns this pointer rather than the data itself. The requesting processor forwards its request to the processor at the head of the sharing list and adds itself to the list as the new head.

In the SCI protocol, any coherent transaction has three phases.

Memory read – When a processor misses in its cache, it requests the block from the home directory in memory. If the memory state is ‘home’, main memory replies with the block. If the state is ‘gone’, main memory returns a pointer to the head of the sharing list for that block. Memory then updates its pointer so that the requesting processor is the new head.

Cache read – When memory returns a pointer to the requesting processor instead of data, the processor forwards its request for the block to the head of the doubly linked list. On receiving the request, the head of the list returns the data, which may have been modified, and sets its backward pointer to the requesting processor’s node. The requesting processor is now the head of the list and holds the cache block.

Cleanup – If the miss was caused by a store, the processor must invalidate all other cached copies before proceeding with the store. The new head sends an invalidate request to the next processor on the list. That processor invalidates its copy and returns a pointer to the processor after it. The head then sends an invalidate request to that processor, and so on until a NULL pointer is returned. At that point all other cached copies of the block have been invalidated.
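The three phases can be illustrated with a small simulation. This is only a sketch under simplifying assumptions: the names `Block`, `CacheLine`, `memory_read`, `cache_read`, `cleanup` and `access` are invented for illustration, messages are modelled as plain method calls, and the requester is assumed not to hold a copy already. It is not the state machine defined in the IEEE standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CacheLine:
    valid: bool = False
    data: Optional[int] = None
    forward: Optional[int] = None   # next node toward the tail of the list
    backward: Optional[int] = None  # previous node toward the head


class Block:
    """One memory block, its directory entry, and every node's cache line."""

    def __init__(self, value: int, num_nodes: int):
        self.state = "home"                 # directory state: 'home' or 'gone'
        self.head: Optional[int] = None     # pointer to head of sharing list
        self.memory = value
        self.lines = {n: CacheLine() for n in range(num_nodes)}

    def memory_read(self, requester: int):
        """Phase 1: memory returns data ('home') or the old head ('gone')."""
        old_head = self.head
        data = self.memory if self.state == "home" else None
        self.state, self.head = "gone", requester
        return data, old_head

    def cache_read(self, requester: int, old_head: int) -> Optional[int]:
        """Phase 2: old head supplies data and points back at the requester."""
        old = self.lines[old_head]
        old.backward = requester
        return old.data

    def cleanup(self, head: int):
        """Phase 3: invalidate the rest of the list, one node at a time."""
        purged = []
        nxt = self.lines[head].forward
        while nxt is not None:              # stop when a NULL pointer comes back
            victim = self.lines[nxt]
            following = victim.forward      # pointer returned to the head
            self.lines[nxt] = CacheLine()   # invalidate the copy
            purged.append(nxt)
            nxt = following
        self.lines[head].forward = None
        return purged

    def access(self, requester: int, store_value: Optional[int] = None):
        data, old_head = self.memory_read(requester)
        if old_head is not None:
            data = self.cache_read(requester, old_head)
        line = self.lines[requester]
        line.valid, line.data = True, data
        line.forward, line.backward = old_head, None
        purged = []
        if store_value is not None:         # a store needs the cleanup phase
            purged = self.cleanup(requester)
            line.data = store_value
        return line.data, purged
```

For example, after nodes 0 and 1 read a block and node 2 then stores to it, `access(2, store_value=9)` walks the list 2 -> 1 -> 0 and reports nodes 1 and 0 as invalidated.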

Simple Scalable Coherent Interface (SSCI)

In a directory-based approach, every memory block has associated directory information that keeps track of the cached copies of the block and their states. On a miss, the requester finds the directory entry, looks it up, and communicates only with the nodes that have copies (if necessary).

Directory schemes fall into two main groups. Memory-based schemes store the information about all cached copies at the home node of the block. The full-bit vector scheme is an example: for k processors, it maintains k presence bits and 1 dirty bit at the home node. Cache state is represented the same way as in bus-based designs (MSI, MESI, etc.). The directory has three states: EM (exclusive or modified), S (shared), and U (unowned). Its limitation is that the number of presence bits needed grows linearly with the number of processors.

Cache-based schemes distribute the information about copies among the copies themselves. The home contains a pointer to one cached copy of the block, and each copy contains the identity of the next node that has a copy of the block. The location of the copies is therefore determined through network transactions.

Simple SCI (SSCI) stays close to the full-bit vector protocol: it uses MESI states in the cache and U, S, and EM states in the memory directory, but it replaces the presence bits with a pointer.
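A rough calculation shows why replacing the presence bits with a pointer matters. The sketch below counts directory bits per memory block at the home node only. The 2-bit encoding of the U, S, and EM states and the function names are assumptions made for illustration, and the per-cache-line pointers that a cache-based scheme keeps at the sharers are not counted.

```python
def full_bit_vector_bits(k: int) -> int:
    """k presence bits plus 1 dirty bit per memory block."""
    return k + 1


def ssci_home_bits(k: int, state_bits: int = 2) -> int:
    """One pointer wide enough to name any of k nodes, plus state bits.

    state_bits=2 is an assumed encoding for the U, S, and EM states.
    """
    pointer_bits = max(1, (k - 1).bit_length())
    return pointer_bits + state_bits


for k in (16, 64, 1024):
    print(k, full_bit_vector_bits(k), ssci_home_bits(k))
```

The full-bit vector cost grows linearly with k, while the pointer grows only logarithmically.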

Why additional states are necessary?

The additional states are needed to meet the protocol's performance and correctness (coherence and consistency) requirements.

Need for Busy state

In a scalable multiprocessor without coherent caches, the main-memory module determines the ordering of writes: writes become visible to all processors in the order in which they reach memory.

With coherent caches this is no longer enough. For example, if two processors issue read-exclusive requests for a particular word, the home provides both requestors with the location of the dirty node, but there is no guarantee which request reaches the dirty node first. This creates the need for an additional busy state in the directory.

The requests can be serialized in one of the following ways:

- Hold requests at the home or requestor node and serve them in the order of their arrival.

- If the block is busy, reject any further request to it; the rejected request is retried later.

- If the directory is busy, forward the request to the dirty node, which serializes the requests.
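The second option (reject and retry) can be sketched as follows. This is an illustrative model only: `HomeDirectory`, `serialize`, and the "rounds" in which every outstanding request arrives at the home are assumptions, not part of any real protocol specification.

```python
from collections import deque


class HomeDirectory:
    """Directory entry with a single busy flag for one block."""

    def __init__(self):
        self.busy = False
        self.owner = None
        self.log = []

    def request(self, node: int) -> bool:
        if self.busy:                       # block is busy: reject (NACK)
            self.log.append(("NACK", node))
            return False
        self.busy = True                    # accept and enter the busy state
        self.log.append(("ACCEPT", node))
        return True

    def complete(self, node: int) -> None:
        self.owner = node                   # transaction finished
        self.busy = False
        self.log.append(("DONE", node))


def serialize(requesters):
    """Serve read-exclusive requests one at a time; NACKed nodes retry."""
    home = HomeDirectory()
    retry = deque(requesters)
    order = []
    while retry:
        arrivals = list(retry)
        retry.clear()
        in_flight = None
        for node in arrivals:
            if home.request(node):
                in_flight = node
            else:
                retry.append(node)          # will be retried in a later round
        home.complete(in_flight)
        order.append(in_flight)
    return order, home
```

With two competing requesters, the first to arrive at the home is accepted, the second is rejected and retried after the first completes, so the home rather than the dirty node decides the order.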

In non-coherent scalable multiprocessors,

- For write atomicity in an invalidation-based protocol, the current owner of the block has to wait until it receives all invalidation acknowledgments; only then can the new value be read or written.

- For write completion, the current owner of the block needs to wait for an acknowledgment from memory.

In the SGI Origin, there are three busy states. Each indicates that the directory has received a request but is waiting for it to complete, so another request for the same block will not be accepted.

- Busy read

- Busy read exclusive or upgrade

- Busy uncached read: In case of DMA, if the memory block is being read, no other processor should be allowed to get write access to it.

Poison state

To improve locality, Origin relies on page migration. Hardware keeps reference counts on each page. On every memory access the count is incremented and compared with that of the home node. If the count exceeds a programmable threshold, hardware interrupts one of the local processors to copy the page. The block transfer engine “poisons” the source page. A subsequent access by another processor receives a bus error; the handler removes the TLB entry for that location, reads the new location from the page table, and updates the TLB with it. This approach is called “lazy TLB shootdown”, and it reduces the overall cost of migrating memory and changing the virtual-to-physical address mappings.
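The lazy TLB shootdown idea can be sketched in a few lines. Everything here is a hypothetical model for illustration (`Machine`, `BusError`, `migrate`, and `access` are invented names, and pages are plain integers); it does not describe the actual Origin hardware or operating system interfaces.

```python
class BusError(Exception):
    """Raised when a poisoned physical page is touched."""


class Machine:
    def __init__(self, page_table):
        self.page_table = dict(page_table)  # virtual page -> physical page
        self.poisoned = set()               # physical pages marked poisoned
        self.tlbs = {}                      # cpu -> {virtual page: physical page}

    def migrate(self, vpage: int, new_ppage: int) -> None:
        """Copy the page elsewhere; poison the source, touch no remote TLB."""
        self.poisoned.add(self.page_table[vpage])
        self.page_table[vpage] = new_ppage

    def _touch(self, ppage: int) -> int:
        if ppage in self.poisoned:
            raise BusError(ppage)
        return ppage

    def access(self, cpu: int, vpage: int) -> int:
        tlb = self.tlbs.setdefault(cpu, {})
        if vpage not in tlb:                # ordinary TLB miss
            tlb[vpage] = self.page_table[vpage]
        try:
            return self._touch(tlb[vpage])
        except BusError:
            # Stale mapping: drop the entry and reload from the page table.
            del tlb[vpage]
            tlb[vpage] = self.page_table[vpage]
            return self._touch(tlb[vpage])
```

The point of the design is that `migrate` does not have to interrupt every processor: each stale TLB entry is repaired only when, and if, its processor next touches the page.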

Additional states

Additional states can be introduced into the sharing list. The SSCI protocol has only one kind of sharing-list state: dirty. Adding more states increases the complexity of the protocol and its implementation, but it is worthwhile when it improves overall system performance. The reason each additional state is needed is explained below as the state is introduced.

Having the following states implemented in the protocol can improve performance.

1. “fresh” and “gone” – If memory can differentiate between ‘cached and unmodified’ and ‘cached and modified’, it can still respond to a requesting processor with data when the block is cached elsewhere, as long as the data remains unmodified.

- The “fresh” state shows that memory still has an up-to-date copy of the block, even though the block has been cached and is being shared between processors. As long as none of the sharing processors modifies the value, memory can keep returning the requested data to requesting processors.

- The “gone” state indicates that the block has been cached and has been modified. Main memory can no longer return the data; it has to return the pointer to the head of the list instead.

2. “clean lists” – These states make each access more complex to handle, but they help overall performance. It is useful to distinguish two types of read access to the cache: a normal read-only access (read-fresh) and a read with intent to modify later (read-clean). A read-fresh leaves memory in the ‘fresh’ state, while a read-clean leaves memory in the ‘gone’ state. As with the dirty state, the clean state allows a write to the block to proceed immediately. When a block in the ‘clean’ state is replaced in the cache, no data needs to be written to memory; with the dirty state, a write-back would be required even if the data had not been modified.
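The effect of these states on the memory side can be sketched as a small state machine. This is a simplified illustration: the class and method names are invented, only the memory directory is modelled, and the assumption that a read-clean to a ‘home’ or ‘fresh’ block is answered with data from memory is ours, not a statement of the standard.

```python
class MemoryBlock:
    """Memory-side directory entry with 'home', 'fresh' and 'gone' states."""

    def __init__(self, data: int):
        self.state = "home"
        self.head = None        # head of the sharing list, if any
        self.data = data

    def _swap_head(self, node: int):
        old, self.head = self.head, node
        return old

    def read_fresh(self, node: int):
        """Normal read-only access. Returns (data or None, old head)."""
        old_head = self._swap_head(node)
        if self.state == "gone":
            return None, old_head           # memory can only return a pointer
        self.state = "fresh"                # memory copy stays up to date
        return self.data, old_head

    def read_clean(self, node: int):
        """Read with intent to modify later; memory ends up 'gone'."""
        old_head = self._swap_head(node)
        data = None if self.state == "gone" else self.data
        self.state = "gone"
        return data, old_head


def needs_writeback(cache_state: str) -> bool:
    """On replacement, only a 'dirty' line must be written back."""
    return cache_state == "dirty"
```

While the block stays ‘fresh’, every read is answered from memory in a single round trip; only after a read-clean does a later reader have to go to the head of the list for the data.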

Examples

1 DASH Cache Coherence Protocol

DASH (Directory Architecture for SHared memory) is a scalable shared-memory multiprocessor developed at Stanford’s Computer Systems Laboratory. The DASH protocol uses point-to-point messages sent between the processors and memories to keep caches consistent.

The DASH coherence protocol is an invalidation-based ownership protocol. A memory block can be in one of three states as indicated by the associated directory entry: (i) uncached-remote, that is not cached by any remote cluster; (ii) shared-remote, that is cached in an unmodified state by one or more remote clusters; or (iii) dirty-remote, that is cached in a modified state by a single remote cluster.

The accompanying figures (DASH.jpg) show two flows. Left: a read request to remote memory with the directory in the dirty-remote state. Right: a read-exclusive request to remote memory with the directory in the shared-remote state.

Write back request: A dirty cache line that is replaced must be written back to memory. If the home of the memory block is the local cluster, then the data is simply written back to main memory. If the home cluster is remote, then a message is sent to the remote home which updates the main memory and marks the block uncached-remote.
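The directory transitions described above can be sketched as follows. This is a simplified model, not the protocol as specified: the class name, the message strings, and the exact message sequences for the read and read-exclusive cases are assumptions based on the general description of DASH, and local-cluster accesses are ignored.

```python
class DashDirectoryEntry:
    """Directory entry for one block, seen from its home cluster."""

    def __init__(self):
        self.state = "uncached-remote"
        self.sharers = set()    # remote clusters holding unmodified copies
        self.owner = None       # remote cluster holding the modified copy

    def read(self, cluster: int):
        msgs = []
        if self.state == "dirty-remote":
            # Home forwards the request; the owner supplies the data.
            msgs.append(f"home -> {self.owner}: forward read from {cluster}")
            msgs.append(f"{self.owner} -> {cluster}: read reply")
            msgs.append(f"{self.owner} -> home: sharing write-back")
            self.sharers = {self.owner, cluster}
            self.owner = None
        else:
            msgs.append(f"home -> {cluster}: read reply")
            self.sharers.add(cluster)
        self.state = "shared-remote"
        return msgs

    def read_exclusive(self, cluster: int):
        msgs = []
        if self.state == "dirty-remote":
            msgs.append(f"home -> {self.owner}: forward read-exclusive")
            msgs.append(f"{self.owner} -> {cluster}: read-exclusive reply")
        else:
            msgs.append(f"home -> {cluster}: read-exclusive reply")
            for s in sorted(self.sharers - {cluster}):
                msgs.append(f"home -> {s}: invalidate")
                msgs.append(f"{s} -> {cluster}: invalidation ack")
        self.sharers = set()
        self.owner = cluster
        self.state = "dirty-remote"
        return msgs

    def write_back(self, cluster: int):
        if self.state != "dirty-remote" or self.owner != cluster:
            raise ValueError("only the dirty owner can write back")
        self.owner = None
        self.state = "uncached-remote"      # as described for remote homes
        return [f"{cluster} -> home: write-back"]
```

Calling `read(1)`, `read(2)` and then `read_exclusive(3)` moves the entry from uncached-remote to shared-remote to dirty-remote, and a later `write_back(3)` returns it to uncached-remote.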

References

1. The DASH Cache Coherence Protocol

Daniel Lenoski, James Laudon, Kourosh Gharachorloo, Anoop Gupta, John Hennessy. May 1990. ACM SIGARCH Computer Architecture News, Proceedings of the 17th Annual International Symposium on Computer Architecture (ISCA '90), Volume 18, Issue 3a. Publisher: ACM Press.

2. 1596-1992 IEEE standard for scalable coherent interface (SCI).

E-ISBN: 0-7381-1204-6. Year: 1993. Sponsored by: IEEE Computer Society.

3. The SGI Origin: A ccNUMA Highly Scalable Server

Laudon, J.; Lenoski, D. Computer Architecture, 1997. Conference Proceedings. The 24th Annual International Symposium on. June 2-4, 1997. Page(s): 241 - 251.

4. The Scalable Coherence Interface

David B. Gustavson. September 1991. "The Scalable Coherence Interface and related standard projects".

5. An analysis of the Scalable Coherent Interface

Eric Rotenberg. June 1995. An Analytical model of the SCI Coherence protocol.

6. Interfaces and Standards: SCI

Behrooz Parhami. 1999. Introduction to Parallel Processing: Algorithms and Architectures.

7. SCI: Scalable Coherent Interface

Hermann Hellwagner. 1999. SCI: Scalable Coherent Interface - Architecture and software for high-performance Compute Clusters.

Further reading

1. The Stanford Dash multiprocessor

Lenoski, D.; Laudon, J.; Gharachorloo, K.; Weber, W.-D.; Gupta, A.; Hennessy, J.; Horowitz, M.; Lam, M.S. IEEE Computer, Volume 25, Issue 3, March 1992. Page(s): 63 - 79. Digital Object Identifier 10.1109/2.121510.