CSC/ECE 506 Spring 2012/1a mw: Difference between revisions

No edit summary |

|||

| (41 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

<p><b>Comparisons Between Supercomputers</b></p> | <p><b>Comparisons Between Supercomputers</b></p> | ||

<p>[http://dictionary.reference.com/browse/supercomputer Supercomputers] are extremely capable computers, able to solve complex tasks in a relatively small amount of time. Their ability to massively outperform typical home and office computers is made possible normally either by an abundance of processor cores or smaller computers working in conjunction together. Supercomputers are generally specialized computers that tend to be very expensive, not available for general-purpose use and are used in computations where large amounts of numerical processing is required. They are used in scientific, military, graphics applications and for other number or data intensive computations <ref>http://dictionary.reference.com/browse/supercomputer Definition of supercomputer</ref>, <ref>http://www.webopedia.com/TERM/S/supercomputer.html Definition of supercomputer</ref>.</p> | |||

<p>Since supercomputers have existed, as technology has advanced, they have continued to be surpassed by one another. This has lead to a drive for engineers and scientists to design and create supercomputers that continue to outperform others<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. This article compares current supercomputers as well as supercomputer architectures.</p> | |||

<p>For the history, development and current state of supercomputing, including a fairly recent, detailed top 10 list, please see [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1a_ry a fellow student's wiki article on Supercomputers].</p> | |||

= | = Benchmarking Supercomputers = | ||

<p>Supercomputers are generally compared qualitatively using floating point operations per second, or [http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html FLOPS]. Using standard prefixes, higher levels of FLOPS can be specified as the computing power of supercomputers increases. For example, KiloFLOPS for thousands of FLOPS and MegaFLOPS for millions of FLOPS <ref>http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html Doran, Kevin (April 2011) Comparing the performance of supercomputers</ref>. Often you'll see just the first letter of the prefix with FLOPS. For example, for GigaFLOPS or billions of FLOPS, you'll see [http://top500.org/faq/what_gflop_s GFLOPS] <ref>http://top500.org/faq/what_gflop_s Definition of GFLOPS</ref>.</p> | <p>Supercomputers are generally compared qualitatively using floating point operations per second, or [http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html FLOPS]. Using standard prefixes, higher levels of FLOPS can be specified as the computing power of supercomputers increases. For example, KiloFLOPS for thousands of FLOPS and MegaFLOPS for millions of FLOPS <ref>http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html Doran, Kevin (April 2011) Comparing the performance of supercomputers</ref>. Often you'll see just the first letter of the prefix with FLOPS. For example, for GigaFLOPS or billions of FLOPS, you'll see [http://top500.org/faq/what_gflop_s GFLOPS] <ref>http://top500.org/faq/what_gflop_s Definition of GFLOPS</ref>.</p> | ||

<p>A software package called [http://www.top500.org/project/linpack LINPACK] is a standard approach to testing or benchmarking supercomputers by solving a dense system of linear equations using the Gauss method. <ref>http://www.top500.org/project/linpack LINPACK defined</ref>. However, LINPACK benchmarking software | <p>A software package called [http://www.top500.org/project/linpack LINPACK] is a standard approach to testing or benchmarking supercomputers by solving a dense system of linear equations using the Gauss method. <ref>http://www.top500.org/project/linpack LINPACK defined</ref>. However, LINPACK benchmarking software is not only used to benchmark supercomputers, it can also be used to benchmark a typical user computer <ref>http://www.xtremesystems.org/forums/showthread.php?197835-IntelBurnTest-The-new-stress-testing-program Intel Benchmark Software</ref>.</p> | ||

= Finding Supercomputer Comparison Data = | = Finding Supercomputer Comparison Data = | ||

<p>Starting in 1993, [http://www.TOP500.org TOP500.org] began collecting performance data on computers and update their list every six months <ref>http://top500.org/faq/what_top500 What is the TOP500</ref>. This appears to be an excellent online source of information that collects benchmark data submitted by users of computers and readily provides performance statistics by Vendor, Application, Architecture and nine (9) other areas <ref name="t500stats">http://i.top500.org/stats TOP500 Stats</ref>.</p> | <p>Starting in 1993, [http://www.TOP500.org TOP500.org] began collecting performance data on computers and update their list every six months <ref>http://top500.org/faq/what_top500 What is the TOP500</ref>. This appears to be an excellent online source of information that collects benchmark data submitted by users of computers and readily provides performance statistics by Vendor, Application, Architecture and nine (9) other areas <ref name="t500stats">http://i.top500.org/stats TOP500 Stats</ref>. This article, in order to be vendor neutral, is providing the comparison by architecture. However, there are many ways to compare supercomputers and the user interface at [http://www.TOP500.org TOP500.org] makes these comparisons easy to do.</p> | ||

= Comparison of Supercomputers by Architecture = | = Comparison of Supercomputers by Architecture = | ||

<p> | [[File:2011nov-top500-architecture.png|thumb|right|upright|300px|Current distribution of architecture types among the top 500 supercomputers. Image from i.TOP500.org/stats]] | ||

<p>Traditional supercomputers of today are composed of three (3) types of parallel processing architectures. These architectures are Cluster, Massively Parallel Processing or MPP, and Constellation <ref name="t500stats" />. A non-traditional, or disruptive approach, to supercomputers is [http://searchdatacenter.techtarget.com/definition/grid-computing Grid Computing]<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>.</p> | |||

< | |||

[ | <p>The graphic generated at [http://www.TOP500.org/ TOP500.org] shows the distribution of supercomputers by architecture. The Massively Parallel Processing section of the graph makes up 17.8% of the total number of current supercomputers. The bulk of supercomputers is made up of clustered systems, which Constellation architectures make up a fraction of a percent of supercomputers. Each architecture is further discussed, below.</p> | ||

== Cluster == | == Cluster == | ||

[[File:2011nov-top500-cluster-count.png|thumb|right|upright|325px|Number of cluster systems among the top 500 supercomputers since before 2000. Image from i.TOP500.org/stats]] | |||

<p>A [http://searchdatacenter.techtarget.com/definition/cluster-computing Cluster] is a group of computers connected together that appear as a single system to the outside world and provide load balancing and resource sharing <ref>http://searchdatacenter.techtarget.com/definition/cluster-computing Definition of Cluster Computing</ref>. Invented by Digital Equipment Corporation in the 1980's, clusters of computers form the largest number of supercomputers available today <ref>http://books.google.com/books?id=Hd_JlxD7x3oC&pg=PA90&lpg=PA90&dq=what+is+a+constellation+in+parallel+computing?&source=bl&ots=Rf9nxSqOgL&sig=-xleas5wXvNpvkgYYxguvP1tSLA&hl=en&sa=X&ei=aDcnT-XRNqHX0QHymbjrAg&ved=0CGMQ6AEwBw#v=onepage&q=what%20is%20a%20constellation%20in%20parallel%20computing%3F&f=false Applied Parallel Computing</ref>, <ref name="t500stats" />.</p> | <p>A [http://searchdatacenter.techtarget.com/definition/cluster-computing Cluster] is a group of computers connected together that appear as a single system to the outside world and provide load balancing and resource sharing <ref>http://searchdatacenter.techtarget.com/definition/cluster-computing Definition of Cluster Computing</ref>. Invented by Digital Equipment Corporation in the 1980's, clusters of computers form the largest number of supercomputers available today <ref>http://books.google.com/books?id=Hd_JlxD7x3oC&pg=PA90&lpg=PA90&dq=what+is+a+constellation+in+parallel+computing?&source=bl&ots=Rf9nxSqOgL&sig=-xleas5wXvNpvkgYYxguvP1tSLA&hl=en&sa=X&ei=aDcnT-XRNqHX0QHymbjrAg&ved=0CGMQ6AEwBw#v=onepage&q=what%20is%20a%20constellation%20in%20parallel%20computing%3F&f=false Applied Parallel Computing</ref>, <ref name="t500stats" />.</p> | ||

<p>[http://www.TOP500.org TOP500.org] data as of November 2011 shows that Cluster computing makes up the largest subset of supercomputers at eight-two percent (82%). The | <p>[http://www.TOP500.org TOP500.org] data as of November 2011 shows that Cluster computing makes up the largest subset of supercomputers at eight-two percent (82%). The chart to the right shows the growth of cluster supercomputer systems with the oldest data on the right. Teh number of clustered supercomputer systems grew rapidly during the 21st century and started leveling off after about 7.5 years.</p> | ||

<p>The total processing power of the top 500 cluster supercomputers is reported at 50,192.82 TFLOPS and the trend for growth of cluster based supercomputers has leveled off<ref name="t500stats" />.</p> | |||

== Massively Parallel Processing, MPP == | == Massively Parallel Processing, MPP == | ||

[[File:2011nov-top500-mpp-count.png|thumb|right|upright|325px|Number of MPP systems among the top 500 supercomputers since before 1995. Image from i.TOP500.org/stats]] | |||

<p>[http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html Massively Parallel Processing] or MPP supercomputers are made up of hundreds of computing nodes and process data in a coordinated fashion <ref name="ttmppdef">http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html</ref>. Each node of the MPP generally has its own memory and operating system and can be made up of nodes that have multiple processors and/or multiple cores <ref name="ttmppdef" />.</p> | <p>[http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html Massively Parallel Processing] or MPP supercomputers are made up of hundreds of computing nodes and process data in a coordinated fashion <ref name="ttmppdef">http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html</ref>. Each node of the MPP generally has its own memory and operating system and can be made up of nodes that have multiple processors and/or multiple cores <ref name="ttmppdef" />.</p> | ||

<p>[http://i.TOP500.org/stats TOP500.org/stats] for the MPP architecture of supercomputers shows that as of November 2011, MPP makes up approximately 17.8% of all supercomputers reported. A graph of the growth and subsequent decline of the MPP architecture from data displayed at [http://i.TOP500.org/stats TOP500.org/stats] is shown | <p>[http://i.TOP500.org/stats TOP500.org/stats] for the MPP architecture of supercomputers shows that as of November 2011, MPP makes up approximately 17.8% of all supercomputers reported. A graph of the growth and subsequent decline of the MPP architecture from data displayed at [http://i.TOP500.org/stats TOP500.org/stats] is shown on the right. MPP supercomputer systems grew from the early 90's until the early part of the 21st century and have since declined in total number.</p> | ||

<p>The total processing power of the top 500 MPP supercomputers is 23,823.97 TFLOPS. The trend of MPP supercomputers, like cluster based supercomputers, has leveled off<ref name="t500stats" />.</p> | |||

<p><ref name="t500stats" /></p> | |||

== Constellation == | == Constellation == | ||

[[File:2011nov-top500-constellation-count.png|thumb|right|upright|350px|Number of constellation systems among the top 500 supercomputers since before 2000. Image from i.TOP500.org/stats]] | |||

<p>A [http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf Constellation] is a cluster of supercomputers <ref>http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf</ref>. [http://www.TOP500.org TOP500.org] shows only one constellation supercomputer as of November 2011. The graph shows rapid growth and decline in the first 5 years of the 21st century.</p> | |||

<p>This author's speculation about the decline of constellations is based on several factors: Multiple processor and/or multiple core computers have been getting faster and less expensive. Combine these less expensive computers into very large clusters and you can get computing power that rivals a constellation. Alternatively, more and more computers have symmetric multiprocessing, SMP, and the concept of constellations and clusters is converging.</p> | |||

<p>The total processing power of the constellation supercomputer is: 52.84 TFLOPS<ref name="t500stats" />.</p> | |||

== Grid Computing == | |||

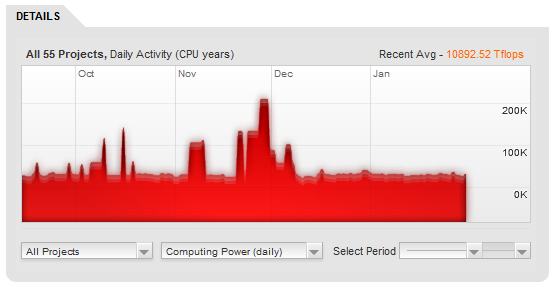

[[File:20120130-grid-computing-graph.png|thumb|right|upright|350px|Daily computing power (TFLOPS) of grid supercomputers over a several month period. Image from http://www.gridrepublic.org/index.php?page=stats]] | |||

<p>Grid Computing is defined as applying many networked computers to solving a single problem simultaneously<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>. It is also defined as a network of computers used by a single company or organization to solve a problem<ref>http://boinc.berkeley.edu/trac/wiki/DesktopGrid</ref>. Yet another definition as implemented by GridRepublic.org creates a supercomputing grid by using volunteer computers from across the globe<ref>http://www.gridrepublic.org/index.php?page=about</ref>. All of these definitions have something in common, and that is using parallel processing to attack a problem that can be broken up into many pieces.</p> | |||

<p>The graph generated by data at [http://www.GridRepublic.org GridRepublic.org] shows the average processing power of this supercomputer created by volunteers from around the world.</p> | |||

<p>The GridRepublic.org statistics is for 55 applications running using a total of 10,979,114 GFLOPS or 10,979.114 TFLOPS<ref name="grstats">http://www.gridrepublic.org/index.php?page=stats</ref>.</p> | |||

= Top 10 Supercomputers = | |||

<p>According to [http://www.top500.org/ Top500.org], the top 10 supercomputers in the world, as of November 2011, are listed below:</p> | |||

{|class="wikitable" | |||

!Number | |||

!Name | |||

!System | |||

|- | |||

|1 | |||

|K computer | |||

|[http://www.top500.org/system/177232 SPARC64 VIIIfx 2.0GHz, Tofu interconnect] | |||

|- | |||

|2 | |||

|Tianhe-1A | |||

|[http://www.top500.org/system/176929 NUDT YH MPP, Xeon X5670 6C 2.93 GHz, NVIDIA 2050] | |||

|- | |||

|3 | |||

|Jaguar | |||

|[http://www.top500.org/system/176544 Cray XT5-HE Opteron 6-core 2.6 GHz] | |||

|- | |||

|4 | |||

|Nebulae | |||

|[http://www.top500.org/system/176819 Dawning TC3600 Blade, Intel X5650, NVidia Tesla C2050 GPU] | |||

|- | |||

|5 | |||

|TSUBAME 2.0 | |||

|[http://www.top500.org/system/176927 HP ProLiant SL390s G7 Xeon 6C X5670, Nvidia GPU, Linux/Windows] | |||

|- | |||

|6 | |||

|Cielo | |||

|[http://www.top500.org/system/177170 Cray XE6, Opteron 6136 8C 2.40GHz, Custom] | |||

|- | |||

|7 | |||

|Pleiades | |||

|[http://www.top500.org/system/177259 SGI Altix ICE 8200EX/8400EX, Xeon HT QC 3.0/Xeon 5570/5670 2.93 Ghz, Infiniband] | |||

|- | |||

|8 | |||

|Hopper | |||

|[http://www.top500.org/system/176952 Cray XE6, Opteron 6172 12C 2.10GHz, Custom] | |||

|- | |||

|9 | |||

|Tera-100 | |||

|[http://www.top500.org/system/176928 Bull bullx super-node S6010/S6030] | |||

|- | |||

|10 | |||

|Roadrunner | |||

|[http://www.top500.org/system/176027 BladeCenter QS22/LS21 Cluster, PowerXCell 8i 3.2 Ghz / Opteron DC 1.8 GHz, Voltaire Infiniband] | |||

|} | |||

<p>For a more detailed version of this list, see [http://expertiza.csc.ncsu.edu/wiki/index.php/CSC/ECE_506_Spring_2012/1a_ry#Top_10_supercomputers_of_today.5B9.5D a fellow student's wiki on supercomputers].</p> | |||

= Advantages & Disadvantages of Supercomputers = | |||

<p>An excellent way to compare the advantages and disadvantages of supercomputers is to use a table. Although this list is not exhaustive, it generally sums up the advantages as being the ability solve large number crunching problems quickly but at a high cost due to the specialty of the hardware, the physical scale of the system and power requirements.</p> | |||

{|class="wikitable" | |||

!Advantage | |||

!Disadvantage | |||

|- | |||

|Ability to process large amounts of data. Examples include atmospheric modeling and oceanic modeling. Processing large matrices and weapons simulation<ref name="paulmurphy">http://www.zdnet.com/blog/murphy/uses-for-supercomputers/746 Murphy, Paul (December 2006) Uses for supercomputers</ref>. | |||

|Limited scope of applications, or in general, they're not general purpose computers. Supercomputers are usually engaged in scientific, military or mathematical applications<ref name="paulmurphy" />. | |||

|- | |||

|The ability to process large amounts of data quickly and in parallel, when compared to the ability of low end commercial systems or user computers<ref>http://nickeger.blogspot.com/2011/11/supercomputers-advantages-and.html Eger, Nick (November 2011) Supercomputer advantages adn disadvantages</ref>. | |||

|Cost, power and cooling. Commercial supercomputers costs hundreds of millions of dollars. They have on-going energy and cooling requirements that are expensive<ref name="robertharris">http://www.zdnet.com/blog/storage/build-an-8-ps3-supercomputer/220?tag=rbxccnbzd1 Harris, Robert (October 2007) Build an 8 PS3 supercomputer</ref>. | |||

|} | |||

<p>Although these are advantages and disadvantages of the traditional supercomputer, there is movement towards the consumerization of supercomputers which could result in supercomputers being affordable to the average person<ref name="robertharris" />. | |||

= Summary of the Comparison of Supercomputers = | |||

Cluster supercomputers account for about twice as much processing in TFLOPS as MPP based supercomputers. The statistics tracked by [http://www.GridRepublic.org GridRepublic.org] for 55 applications shows that grid computing is using about the same amount of processing power as the fastest individual supercomputer listed on the [http://www.TOP500.org TOP500.org] list of supercomputers. The fastest computer listed is the | |||

RIKEN located at the Advanced Institute for Computational Science (AICS) in Japan, which is a K computer, SPARC64 VIIIfx 2.0GHz, and Tofu interconnect that operates at 10510.00 TFLOPS<ref>http://www.top500.org/list/2011/11/100</ref>.</p> | |||

<p>Grid Computing as an alternative to individually defined supercomputers seems to be growing and the expense of operating it is fully distributed across the volunteers that are apart of it. However, with any system where you don't have complete control of its parts, you can't rely on all of those parts being there all the time.</p> | |||

<p> | |||

= References = | = References = | ||

<references /> | <references /> | ||

Latest revision as of 04:32, 7 February 2012

Comparisons Between Supercomputers

Supercomputers are extremely capable computers, able to solve complex tasks in a relatively small amount of time. Their ability to massively outperform typical home and office computers normally comes from either an abundance of processor cores or many smaller computers working in conjunction. Supercomputers are generally specialized, very expensive machines that are not available for general-purpose use and are used where large amounts of numerical processing are required. They are used in scientific, military, and graphics applications and for other number- or data-intensive computations <ref>http://dictionary.reference.com/browse/supercomputer Definition of supercomputer</ref>, <ref>http://www.webopedia.com/TERM/S/supercomputer.html Definition of supercomputer</ref>.

Throughout the history of supercomputers, each machine has eventually been surpassed by another as technology has advanced. This has led to a drive among engineers and scientists to design and create supercomputers that continue to outperform others<ref>http://en.wikipedia.org/wiki/Supercomputer#History</ref>. This article compares current supercomputers as well as supercomputer architectures.

For the history, development and current state of supercomputing, including a fairly recent, detailed top 10 list, please see a fellow student's wiki article on Supercomputers.

Benchmarking Supercomputers

Supercomputers are generally compared quantitatively using floating point operations per second, or FLOPS. Using standard prefixes, higher levels of FLOPS can be specified as the computing power of supercomputers increases. For example, KiloFLOPS denotes thousands of FLOPS and MegaFLOPS denotes millions of FLOPS <ref>http://kevindoran.blogspot.com/2011/04/comparing-performance-of-supercomputers.html Doran, Kevin (April 2011) Comparing the performance of supercomputers</ref>. Often you'll see just the first letter of the prefix with FLOPS. For example, for GigaFLOPS or billions of FLOPS, you'll see GFLOPS <ref>http://top500.org/faq/what_gflop_s Definition of GFLOPS</ref>.
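The prefix convention can be illustrated with a short Python sketch. The function name `format_flops` and the prefix table are illustrative choices, not part of any benchmark tool; the sketch assumes decimal (powers of 1000) prefixes.

```python
# Decimal prefixes commonly attached to FLOPS, largest first.
PREFIXES = [
    ("Peta", 10**15),
    ("Tera", 10**12),
    ("Giga", 10**9),
    ("Mega", 10**6),
    ("Kilo", 10**3),
]


def format_flops(flops: float) -> str:
    """Format a raw FLOPS figure with the largest prefix that fits."""
    for name, factor in PREFIXES:
        if flops >= factor:
            # Only the first letter of the prefix is kept, as in "GFLOPS".
            return f"{flops / factor:.2f} {name[0]}FLOPS"
    return f"{flops:.2f} FLOPS"


print(format_flops(2.5e9))   # 2.50 GFLOPS
print(format_flops(1_500))   # 1.50 KFLOPS
```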

A software package called LINPACK is a standard approach to testing or benchmarking supercomputers by solving a dense system of linear equations using Gaussian elimination <ref>http://www.top500.org/project/linpack LINPACK defined</ref>. However, LINPACK benchmarking software is not used only to benchmark supercomputers; it can also be used to benchmark a typical user computer <ref>http://www.xtremesystems.org/forums/showthread.php?197835-IntelBurnTest-The-new-stress-testing-program Intel Benchmark Software</ref>.
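To give a feel for what such a benchmark measures, here is a minimal pure-Python sketch, not the LINPACK code itself. It solves a random dense system by Gaussian elimination with partial pivoting and divides a nominal operation count by the elapsed time. The function names, the matrix size, and the nominal count of 2/3·n³ + 2·n² operations are assumptions made for this illustration; a real benchmark run relies on highly tuned numerical libraries and would report far higher rates.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the caller's data is left untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Partial pivoting: bring the largest remaining entry to the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(col + 1, n):
            factor = m[row][col] / m[col][col]
            for k in range(col, n + 1):
                m[row][k] -= factor * m[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        s = sum(m[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (m[row][n] - s) / m[row][row]
    return x


def estimate_flops(n=100, seed=0):
    """Time one solve of a random n x n system and return (rate, x, a, b)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    # Nominal operation count assumed for this sketch.
    operations = (2.0 / 3.0) * n**3 + 2.0 * n**2
    return operations / elapsed, x, a, b


rate, _, _, _ = estimate_flops()
print(f"Estimated rate: {rate / 1e6:.2f} MFLOPS")
```

The printed figure varies from machine to machine and from run to run, which is exactly why published results are produced under agreed benchmark rules.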

Finding Supercomputer Comparison Data

Starting in 1993, TOP500.org began collecting performance data on computers, and it updates its list every six months <ref>http://top500.org/faq/what_top500 What is the TOP500</ref>. This appears to be an excellent online source of information: it collects benchmark data submitted by users of computers and readily provides performance statistics by Vendor, Application, Architecture and nine other areas <ref name="t500stats">http://i.top500.org/stats TOP500 Stats</ref>. To remain vendor neutral, this article provides the comparison by architecture. However, there are many ways to compare supercomputers, and the user interface at TOP500.org makes these comparisons easy to do.

Comparison of Supercomputers by Architecture

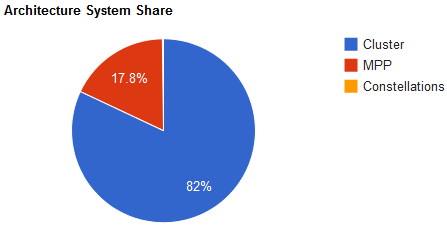

Traditional supercomputers of today are composed of three types of parallel processing architectures: Cluster, Massively Parallel Processing (MPP), and Constellation <ref name="t500stats" />. A non-traditional, or disruptive, approach to supercomputing is Grid Computing<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>.

The graphic generated at TOP500.org shows the distribution of supercomputers by architecture. The Massively Parallel Processing section of the graph makes up 17.8% of the total number of current supercomputers. The bulk of supercomputers is made up of clustered systems, while Constellation architectures make up a fraction of a percent. Each architecture is discussed further below.

Cluster

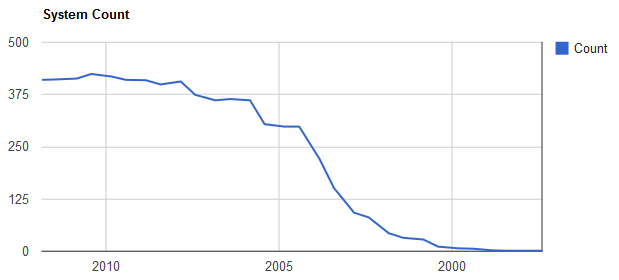

A Cluster is a group of computers connected together that appears as a single system to the outside world and provides load balancing and resource sharing <ref>http://searchdatacenter.techtarget.com/definition/cluster-computing Definition of Cluster Computing</ref>. Invented by Digital Equipment Corporation in the 1980s, clusters of computers form the largest number of supercomputers available today <ref>http://books.google.com/books?id=Hd_JlxD7x3oC&pg=PA90&lpg=PA90&dq=what+is+a+constellation+in+parallel+computing?&source=bl&ots=Rf9nxSqOgL&sig=-xleas5wXvNpvkgYYxguvP1tSLA&hl=en&sa=X&ei=aDcnT-XRNqHX0QHymbjrAg&ved=0CGMQ6AEwBw#v=onepage&q=what%20is%20a%20constellation%20in%20parallel%20computing%3F&f=false Applied Parallel Computing</ref>, <ref name="t500stats" />.

TOP500.org data as of November 2011 shows that Cluster computing makes up the largest subset of supercomputers at eighty-two percent (82%). The chart to the right shows the growth of cluster supercomputer systems with the oldest data on the right. The number of clustered supercomputer systems grew rapidly from the start of the 21st century and began leveling off after about 7.5 years.

The total processing power of the cluster systems among the top 500 supercomputers is reported at 50,192.82 TFLOPS, and the growth trend for cluster-based supercomputers has leveled off<ref name="t500stats" />.

Massively Parallel Processing, MPP

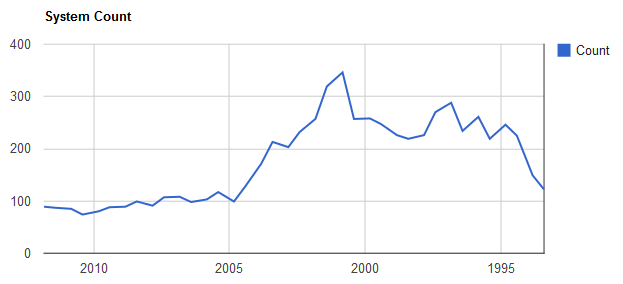

Massively Parallel Processing (MPP) supercomputers are made up of hundreds of computing nodes that process data in a coordinated fashion <ref name="ttmppdef">http://whatis.techtarget.com/definition/0,,sid9_gci214085,00.html</ref>. Each node generally has its own memory and operating system, and may contain multiple processors and/or multiple cores <ref name="ttmppdef" />.

TOP500.org/stats for the MPP architecture of supercomputers shows that as of November 2011, MPP makes up approximately 17.8% of all supercomputers reported. A graph of the growth and subsequent decline of the MPP architecture, from data displayed at TOP500.org/stats, is shown on the right. MPP supercomputer systems grew from the early 1990s until the early part of the 21st century and have since declined in total number.

The total processing power of the MPP systems among the top 500 supercomputers is 23,823.97 TFLOPS. The trend for MPP supercomputers, like that for cluster-based supercomputers, has leveled off<ref name="t500stats" />.

Constellation

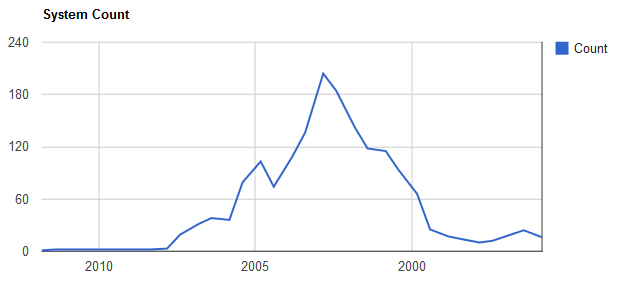

A Constellation is a cluster of supercomputers <ref>http://www.mimuw.edu.pl/~mbiskup/presentations/Parallel%20Computing.pdf</ref>. TOP500.org shows only one constellation supercomputer as of November 2011. The graph shows rapid growth and decline in the first 5 years of the 21st century.

This author's speculation about the decline of constellations is based on several factors. Computers with multiple processors and/or multiple cores have been getting faster and less expensive; combine these less expensive computers into very large clusters and you can get computing power that rivals a constellation. Alternatively, as more and more computers use symmetric multiprocessing (SMP), the concepts of constellations and clusters are converging.

The total processing power of the constellation supercomputer is 52.84 TFLOPS<ref name="t500stats" />.
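As a rough consistency check on the shares quoted in this section, the percentages can be converted back into system counts. The sketch below assumes that the quoted percentages are rounded shares of exactly 500 listed systems; the variable names are illustrative.

```python
TOTAL_SYSTEMS = 500          # size of the TOP500 list

mpp_share = 17.8             # percent, as quoted above
constellation_count = 1      # single constellation system reported

# Convert the MPP share into a whole number of systems.
mpp_count = round(TOTAL_SYSTEMS * mpp_share / 100.0)

# Whatever remains after MPP and constellation systems must be clusters.
cluster_count = TOTAL_SYSTEMS - mpp_count - constellation_count

cluster_share = 100.0 * cluster_count / TOTAL_SYSTEMS
constellation_share = 100.0 * constellation_count / TOTAL_SYSTEMS

print(f"MPP:           {mpp_count} systems ({mpp_share}%)")
print(f"Cluster:       {cluster_count} systems ({cluster_share}%)")
print(f"Constellation: {constellation_count} system ({constellation_share}%)")
```

Under that assumption the figures are mutually consistent: 89 MPP systems, 410 cluster systems (82%), and one constellation (0.2%, the "fraction of a percent" mentioned earlier).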

Grid Computing

Grid Computing is defined as applying many networked computers to solving a single problem simultaneously<ref>http://searchdatacenter.techtarget.com/definition/grid-computing</ref>. It is also defined as a network of computers used by a single company or organization to solve a problem<ref>http://boinc.berkeley.edu/trac/wiki/DesktopGrid</ref>. Yet another definition, as implemented by GridRepublic.org, is a supercomputing grid built from volunteer computers across the globe<ref>http://www.gridrepublic.org/index.php?page=about</ref>. What all of these definitions have in common is the use of parallel processing to attack a problem that can be broken up into many pieces.
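The idea of breaking a problem into independent pieces can be sketched in a few lines of Python. This is only an illustration: local worker threads stand in for networked or volunteer machines, and the helper names (`split_range`, `sum_of_squares`, `grid_sum_of_squares`) are hypothetical. A real grid would also have to distribute the work over a network and cope with nodes that disappear.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(start, stop, pieces):
    """Cut [start, stop) into at most `pieces` contiguous work units."""
    if stop <= start:
        return []
    size = -(-(stop - start) // pieces)  # ceiling division
    return [(lo, min(lo + size, stop)) for lo in range(start, stop, size)]


def sum_of_squares(bounds):
    """One work unit: the kind of task a single node would run on its own."""
    lo, hi = bounds
    return sum(i * i for i in range(lo, hi))


def grid_sum_of_squares(n, workers=4):
    """Sum i*i for i in [0, n) by farming work units out to workers."""
    units = split_range(0, n, workers * 4)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, units))
    # Combining the partial results is the only step that needs all pieces.
    return sum(partials)


print(grid_sum_of_squares(1000))
```

The work units share no data, so each can run on a different machine, and only the small partial results need to travel back to be combined.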

![Daily computing power (TFLOPS) of grid supercomputers over a several month period. Image from http://www.gridrepublic.org/index.php?page=stats](image-1.png)

The graph, generated from data at GridRepublic.org, shows the average processing power of this supercomputer created by volunteers from around the world.

The GridRepublic.org statistics cover 55 running applications that together use 10,979,114 GFLOPS, or 10,979.114 TFLOPS<ref name="grstats">http://www.gridrepublic.org/index.php?page=stats</ref>.

Top 10 Supercomputers

According to Top500.org, the top 10 supercomputers in the world, as of November 2011, are listed below:

| Number | Name | System |
|---|---|---|
| 1 | K computer | SPARC64 VIIIfx 2.0GHz, Tofu interconnect |
| 2 | Tianhe-1A | NUDT YH MPP, Xeon X5670 6C 2.93 GHz, NVIDIA 2050 |
| 3 | Jaguar | Cray XT5-HE Opteron 6-core 2.6 GHz |
| 4 | Nebulae | Dawning TC3600 Blade, Intel X5650, NVidia Tesla C2050 GPU |
| 5 | TSUBAME 2.0 | HP ProLiant SL390s G7 Xeon 6C X5670, Nvidia GPU, Linux/Windows |
| 6 | Cielo | Cray XE6, Opteron 6136 8C 2.40GHz, Custom |
| 7 | Pleiades | SGI Altix ICE 8200EX/8400EX, Xeon HT QC 3.0/Xeon 5570/5670 2.93 GHz, Infiniband |
| 8 | Hopper | Cray XE6, Opteron 6172 12C 2.10GHz, Custom |
| 9 | Tera-100 | Bull bullx super-node S6010/S6030 |
| 10 | Roadrunner | BladeCenter QS22/LS21 Cluster, PowerXCell 8i 3.2 GHz / Opteron DC 1.8 GHz, Voltaire Infiniband |

For a more detailed version of this list, see a fellow student's wiki on supercomputers.

Advantages & Disadvantages of Supercomputers

An excellent way to compare the advantages and disadvantages of supercomputers is to use a table. Although this list is not exhaustive, it generally sums up the advantages as being the ability to solve large number-crunching problems quickly, but at a high cost due to the specialty of the hardware, the physical scale of the system and power requirements.

| Advantage | Disadvantage |
|---|---|
| Ability to process large amounts of data. Examples include atmospheric modeling, oceanic modeling, processing large matrices and weapons simulation<ref name="paulmurphy">http://www.zdnet.com/blog/murphy/uses-for-supercomputers/746 Murphy, Paul (December 2006) Uses for supercomputers</ref>. | Limited scope of applications; in general, they are not general-purpose computers. Supercomputers are usually engaged in scientific, military or mathematical applications<ref name="paulmurphy" />. |
| The ability to process large amounts of data quickly and in parallel, when compared to the ability of low-end commercial systems or user computers<ref>http://nickeger.blogspot.com/2011/11/supercomputers-advantages-and.html Eger, Nick (November 2011) Supercomputer advantages and disadvantages</ref>. | Cost, power and cooling. Commercial supercomputers cost hundreds of millions of dollars. They have ongoing energy and cooling requirements that are expensive<ref name="robertharris">http://www.zdnet.com/blog/storage/build-an-8-ps3-supercomputer/220?tag=rbxccnbzd1 Harris, Robert (October 2007) Build an 8 PS3 supercomputer</ref>. |

Although these are the advantages and disadvantages of the traditional supercomputer, there is a movement towards the consumerization of supercomputers, which could result in supercomputers being affordable to the average person<ref name="robertharris" />.

Summary of the Comparison of Supercomputers

Cluster supercomputers account for about twice as much processing power in TFLOPS as MPP-based supercomputers. The statistics tracked by GridRepublic.org for 55 applications show that grid computing is using about the same amount of processing power as the fastest individual supercomputer on the TOP500.org list. That fastest computer is the K computer at the RIKEN Advanced Institute for Computational Science (AICS) in Japan, built on SPARC64 VIIIfx 2.0GHz processors and the Tofu interconnect, which operates at 10,510.00 TFLOPS<ref>http://www.top500.org/list/2011/11/100</ref>.
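The comparisons in this summary follow directly from the totals quoted earlier in the article. A short Python sketch of the arithmetic, with variable names chosen for illustration only:

```python
# Totals quoted in the article (November 2011 TOP500 data and
# GridRepublic.org statistics).
cluster_tflops = 50_192.82
mpp_tflops = 23_823.97
grid_gflops = 10_979_114
k_computer_tflops = 10_510.00

# 1 TFLOPS = 1,000 GFLOPS.
grid_tflops = grid_gflops / 1_000

cluster_to_mpp = cluster_tflops / mpp_tflops
grid_to_k = grid_tflops / k_computer_tflops

print(f"Cluster / MPP ratio:     {cluster_to_mpp:.2f}")
print(f"Grid / K computer ratio: {grid_to_k:.2f}")
```

The cluster total comes out at roughly 2.1 times the MPP total, and the grid figure is within about 5% of the K computer's, which is what "about twice" and "about the same" refer to.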

Grid Computing, as an alternative to individually defined supercomputers, seems to be growing, and the expense of operating it is fully distributed across the volunteers who are a part of it. However, as with any system where you don't have complete control of its parts, you can't rely on all of those parts being there all the time.

References

<references />