CSC/ECE 506 Fall 2007/wiki2 4 BV: Difference between revisions

No edit summary |

No edit summary |

||

| (27 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= | =MapReduce= | ||

One of the major problems faced in the network world, is the need to classify huge amounts of data into easily understandable smaller data sets. It might be the problem of classifying hits for a website from different sources or classifying the frequency of occurrence of words in text, the problem involves analyzing large amounts of data into small understandable data sets. | |||

The | ''MapReduce'' is a programming model for processing large data sets. This was developed as a scalable, multiprocessing model by [http://www.google.com/about.html Google]. This model boasts of high scalability and high fault tolerance. ''MapReduce'' helps in ''mapping'' large data sets to smaller number of keys, thus ''reducing'' the data set itself to a more manageable proportion. More specifically, users specify a ''map'' function to process a key/value pair and generate a set of intermediate key/value pairs. The ''reduce'' function merges these intermediate key/values and generates the consolidated output. | ||

Some of the examples, where ''MapReduce'' can be employed are: | |||

*Distributed Grep | |||

*Count of URL Access Frequency | |||

*ReverseWeb-Link Graph | |||

*Term-Vector per Host | |||

=Steps of Parallelization= | |||

The programs written based on this model can be parallelized and executed concurrently on a number of systems. The model provides the capability for partitioning of data, assignment to different processes and merging the results.<br> | |||

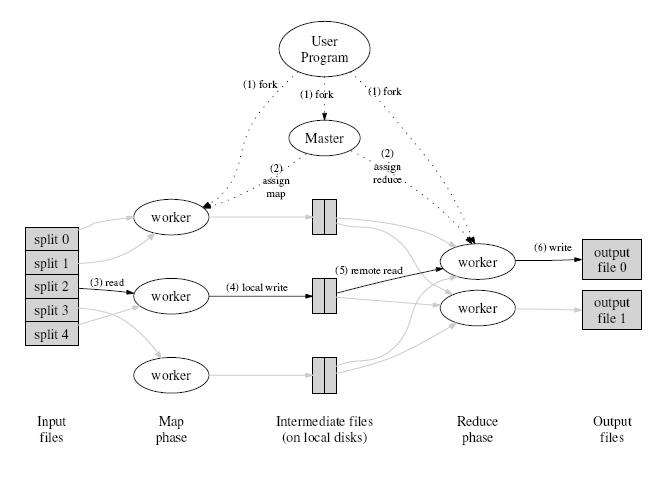

[[Image:MapReduce03.JPG]]<br>[http://209.85.163.132/papers/mapreduce-osdi04.pdf Execution Overview] | |||

===Decomposition=== | |||

''Decomposition'' is a step that divides the problem into manageable tasks. In ''MapReduce'' the input data set is divided into M splits. Typically a master process takes care of the decomposition. The ''MapReduce'' algorithm tries to map a large dataset into a smaller number of key/value pairs. | |||

The number of key/value pairs to be arrived at, will determine the size of the split. For example, the ''grep'' problem, which counts the number of occurrences of a specific word or a group of words in a document, the document is split into M equal parts. The size of each part is based on the number of distinct word(s)to be searched. Hence searching for larger group of words requires the document to be divided into smaller parts. <br> | |||

Thus ''MapReduce'' provides an adaptive method for decomposing a problem. | |||

===Assignment=== | |||

''Assignment'' is a step that assigns the related decomposed tasks to processes. | |||

In ''MapReduce'' splits are assigned to idle map workers that generate R partial/complete outputs. These partial outputs are consolidated into a final result. In the ''grep'' problem, each worker is assigned a part of the document along with the word(s) to be searched. Each worker searches the part of the document assigned to it and generates a partial count of the occurrences. These partial counts from map workers are reduced into a final count by the reduce workers. The master is responsible for assigning M parts of the document and the R intermediate counts to idle workers. <br> | |||

Thus ''MapReduce'' provides for load based task assignment which is beneficial in a scalable multiprocessor architecture. | |||

===Orchestration=== | |||

''Orchestration'' is a step which groups the processes based on their interdependencies. The main purpose of this step is to reduce the synchronization and communication overhead between the processes. In the ''grep'' problem, the intermediate counts generated by the map workers represent an unsorted data set. By alphabetically sorting the intermediate word counts the reduce workers can be assigned with a coherent data set. This increases the probability that each reduce worker may work on a single or a small group of word counts.<br> | |||

By providing an intermediate step for reduce ''MapReduce'' algorithm ensures that data locality is maintained.<br> | |||

[[Image:MapReduce02.gif]]<br>[http://labs.google.com/papers/mapreduce-osdi04-slides/index-auto-0008.html Parallel Execution]<br> | |||

Mapping | ===Mapping=== | ||

''Mapping'' assigns the processes to different processors with an intent of minimizing the communication between them. Considering the ''grep'' problem, the master maps the document parts to processors executing map/reduce function. It is fair to assume that much of the time is spent in transferring the data across the network, from the master to the workers. One way of solving the problem is to, store replicas of the splits at different worker locations. The master can now assign the M splits in such a way that the replicas of the input document are local to the processor on which it is processed. This will eliminate the actual file transfer from the master to the map worker and thereby reducing the communication. | |||

=Summary= | |||

As seen from the ''grep'' example, ''MapReduce'' is a highly scalable and customizable algorithm for processing large data sets. In cases where, the data set is very large compared to the the number of available workers, the problem can be solved recursively. The final output can be fed back to the ''MapReduce'' engine by further refining the key/value pairs. Also, because of the user-customizable nature of the inputs, data and/or keys, any ''MapReduce'' solution is easily portable. | |||

=References= | |||

*[http://labs.google.com/papers/mapreduce.html Google Research publications] | |||

*[http://en.wikipedia.org/wiki/MapReduce Wikipage on MapReduce]] | |||

Latest revision as of 22:56, 27 September 2007

MapReduce

One of the major problems faced in the network world, is the need to classify huge amounts of data into easily understandable smaller data sets. It might be the problem of classifying hits for a website from different sources or classifying the frequency of occurrence of words in text, the problem involves analyzing large amounts of data into small understandable data sets.

MapReduce is a programming model for processing large data sets. This was developed as a scalable, multiprocessing model by Google. This model boasts of high scalability and high fault tolerance. MapReduce helps in mapping large data sets to smaller number of keys, thus reducing the data set itself to a more manageable proportion. More specifically, users specify a map function to process a key/value pair and generate a set of intermediate key/value pairs. The reduce function merges these intermediate key/values and generates the consolidated output.

Some of the examples, where MapReduce can be employed are:

- Distributed Grep

- Count of URL Access Frequency

- ReverseWeb-Link Graph

- Term-Vector per Host

Steps of Parallelization

The programs written based on this model can be parallelized and executed concurrently on a number of systems. The model provides the capability for partitioning of data, assignment to different processes and merging the results.

Execution Overview

Decomposition

Decomposition is a step that divides the problem into manageable tasks. In MapReduce the input data set is divided into M splits. Typically a master process takes care of the decomposition. The MapReduce algorithm tries to map a large dataset into a smaller number of key/value pairs.

The number of key/value pairs to be arrived at, will determine the size of the split. For example, the grep problem, which counts the number of occurrences of a specific word or a group of words in a document, the document is split into M equal parts. The size of each part is based on the number of distinct word(s)to be searched. Hence searching for larger group of words requires the document to be divided into smaller parts.

Thus MapReduce provides an adaptive method for decomposing a problem.

Assignment

Assignment is a step that assigns the related decomposed tasks to processes.

In MapReduce splits are assigned to idle map workers that generate R partial/complete outputs. These partial outputs are consolidated into a final result. In the grep problem, each worker is assigned a part of the document along with the word(s) to be searched. Each worker searches the part of the document assigned to it and generates a partial count of the occurrences. These partial counts from map workers are reduced into a final count by the reduce workers. The master is responsible for assigning M parts of the document and the R intermediate counts to idle workers.

Thus MapReduce provides for load based task assignment which is beneficial in a scalable multiprocessor architecture.

Orchestration

Orchestration is a step which groups the processes based on their interdependencies. The main purpose of this step is to reduce the synchronization and communication overhead between the processes. In the grep problem, the intermediate counts generated by the map workers represent an unsorted data set. By alphabetically sorting the intermediate word counts the reduce workers can be assigned with a coherent data set. This increases the probability that each reduce worker may work on a single or a small group of word counts.

By providing an intermediate step for reduce MapReduce algorithm ensures that data locality is maintained.

Parallel Execution

Mapping

Mapping assigns the processes to different processors with an intent of minimizing the communication between them. Considering the grep problem, the master maps the document parts to processors executing map/reduce function. It is fair to assume that much of the time is spent in transferring the data across the network, from the master to the workers. One way of solving the problem is to, store replicas of the splits at different worker locations. The master can now assign the M splits in such a way that the replicas of the input document are local to the processor on which it is processed. This will eliminate the actual file transfer from the master to the map worker and thereby reducing the communication.

Summary

As seen from the grep example, MapReduce is a highly scalable and customizable algorithm for processing large data sets. In cases where, the data set is very large compared to the the number of available workers, the problem can be solved recursively. The final output can be fed back to the MapReduce engine by further refining the key/value pairs. Also, because of the user-customizable nature of the inputs, data and/or keys, any MapReduce solution is easily portable.