CSC/ECE 506 Spring 2011/ch11 DJ: Difference between revisions

No edit summary |

No edit summary |

||

| (10 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= '''Supplemental to Chapter 11: SCI Cache Coherence''' = | == '''Supplemental to Chapter 11: SCI Cache Coherence''' == | ||

This is intended to be a supplement to Chapter 11 of [[#References | Solihin ]], which deals with Distributed Shared Memory (DSM) in Multiprocessors. In the book, the basic approaches to DSM are discussed, and a directory based cache coherence protocol is introduced. This protocol is in contrast to the bus-based cache coherence protocols that were introduced earlier. This supplemental focuses on a specific directory based cache coherence protocol called the Scalable Coherent Interface. | |||

== Introduction: What is the Scalable Coherent Interface? == | == Introduction: What is the Scalable Coherent Interface? == | ||

The Scalable Coherent Interface (SCI) is an ANSI/IEEE protocol to provide fast point-to-point connections for high-performance multiprocessor systems [ | The Scalable Coherent Interface (SCI) is an ANSI/IEEE protocol to provide fast point-to-point connections for high-performance multiprocessor systems [[#References | ("IEEE Standards Association") ]]. It works with both shared memory and message passing and was approved in 1992. In the 1980's, as processors were getting faster and faster, they were beginning to outpace the speed of the bus. SCI was created to provide a non-bus based interconnection protocol. It was intended to be scalable, meaning it can work with few or many multiprocessors. | ||

== SCI Cache Coherence == | == SCI Cache Coherence == | ||

SCI utilizes a directory based cache coherence protocol similar to what is describe in Chapter 11 of | SCI utilizes a directory based cache coherence protocol similar to what is describe in Chapter 11 of [[#References | Solihin (2008)]]. Instead of cache coherence being handled by caches snooping for interactions on a bus, a directory manages the coherence of each individual cache by creating a doubly-linked list of the caches that are sharing a particular block of memory. The directory maintains a state for the block in memory, and a pointer to the first cache that is on the shared list. The first cache in the list is the cache for the processor that most recently accessed the block of memory. When the "head" of the list modifies the block of memory, they have to notify main memory that they now have the only clean copy of the data. This head cache must also notify the next cache in the shared list so that the cache can invalidate their copy. The information then propagates from there. | ||

The structure for SCI is fairly complicated and can lead to a number of race conditions. | |||

== Coherence Race Conditions == | == Coherence Race Conditions == | ||

There are many different instances in the SCI cache coherence protocol where race conditions are handled in such a way they are a non-issue. For instance, in SCI only the head node in the link list of sharers is able to write to the block. All sharers, including the head, can read. | There are many different instances in the SCI cache coherence protocol where race conditions are handled in such a way they are a non-issue. For instance, in SCI only the head node in the link list of sharers is able to write to the block. All sharers, including the head, can read. Suppose we have two nodes, N1 and N2, with both nodes sharing a cached block. N1 is the head of the linked list while N2 is the tail of the linked list. If N2 wants to write a value to the cached block, the node must do it in a series of steps. First N2 detaches itself from the linked list by updating N1's NEXT pointer. Since N2 is no longer a sharer, the node must invalidate its cached copy to prevent a race condition (N1 writes to block while N2 holds an out-of-date version of the block). Then N2 has to re-request the block from the directory with intent to write data. The directory then processes N2's request and N2 swaps out the current head's pointer (N1) with its own, making it the new head. N2 then uses the pointer to N1 to invalidate all sharers. In this case N1 is the only sharer [[#References | (Gustavson, and Li 56) ]]. This allows the SCI protocol to keep write exclusivity. Write exclusivity mean only one write can be performed to a give block at a time. Without write exclusivity, each node sharing the block would be able to write to the block, allowing for multiple writes to happen simultaneously. If each cache wrote simultaneously, every cache sharing the block would get invalidated, including the head. Also the block in memory might not posses the correct value. In order for this to be implemented, there needs to be special states for the head, middle, and tail of the linked list. Also there needs to be states that determine the read/write capabilities of the nodes. Table 1 shows how this scenario would look using a simplistic version of SCI discussed later. | ||

{| border="1" cellspacing="0" cellpadding="2" | |||

!colspan="7"| Table 1: Maintaining Write Exclusivity | |||

|---- | |||

!Action | |||

!State N1 | |||

!State N2 | |||

!State Directory | |||

!Head Node | |||

!Comments | |||

|---- | |||

| - | |||

|Sh | |||

|St | |||

|S | |||

|N1 | |||

|Initial State | |||

|---- | |||

|W2 | |||

|Sh | |||

|I | |||

|S | |||

|N1 | |||

|Node 2 wants to write | |||

|---- | |||

| | |||

|I | |||

|M | |||

|EM | |||

|N2 | |||

|N2 becomes new head invalidating N1 | |||

|---- | |||

|} | |||

Another scenario described in 11.4.1 of the Solihin textbook is when a cache is in state M and the directory is in state EM for a given block. If the cache holding the block flushes the value and the value does not make it to the directory before a read request is made, then there can be a potential race condition. The race condition is handled by the use of pending states. When the cache flushes a block, the line transitions from state M to a pending state. The pending state transitions into the invalid state only when the directory has acknowledged receiving the flush. This allows the cache to stall any read request made to the block until the block enters the steady state of I [[#References | (Solihin)]]. | |||

== SCI States == | == SCI States == | ||

=== Directory States === | |||

In SCI, the directory and each cache maintain a series of states. The directory can be in one of three states: | In SCI, the directory and each cache maintain a series of states. The directory can be in one of three states: | ||

| Line 18: | Line 61: | ||

# GONE or EXCLUSIVE/MODIFIED (EM) – The memory block in main memory is not updated, but a cache has the updated memory block | # GONE or EXCLUSIVE/MODIFIED (EM) – The memory block in main memory is not updated, but a cache has the updated memory block | ||

For a cache, there are 29 stable states described in the SCI standard and many pending states. Each state consists of two parts. The first part of the state tells where the state is in the linked list. There are four possibilities: | If the directory has the block of memory in the GONE or EM state, then the directory must forward any incoming read/write requests for the block to the last cache that modified the block. That cache is responsible for updating main memory and the block that requested the data. This is why the directory must maintain a pointer to the first cache in the cache list. | ||

=== Cache States : Complicated === | |||

For a cache, there are 29 stable states described in the SCI standard and many pending states. Each state consists of two parts. The first part of the state tells where the state is in the linked list. There are four possibilities [[#References | (Cutler (1999))]]: | |||

# ONLY – if the cache is the only state to have cached the memory block | # ONLY – if the cache is the only state to have cached the memory block | ||

| Line 25: | Line 71: | ||

# MID – if the cache is not the first or last in the list | # MID – if the cache is not the first or last in the list | ||

In addition to these four location designations for the states, there are other states to describe the actual state of the memory on the cache. Some of these include: | |||

# CLEAN – if the data in the memory block has not been modified, is the same as main memory, but can be modified. | # CLEAN – if the data in the memory block has not been modified, is the same as main memory, but can be modified. | ||

| Line 32: | Line 78: | ||

# COPY – if the data in the memory has not been modified, is the same as main memory, but cannot be modified, only read. | # COPY – if the data in the memory has not been modified, is the same as main memory, but cannot be modified, only read. | ||

A more simplistic way to envision the SCI coherence protocol states is to limit the system to just two processors and expand the MESI protocol | Each designation for the location can match with a designation for the state which creates additional states like: | ||

* ONLY_DIRTY - if the cache is the only state to have the cached memory block, and the cache has modified the memory block so it is different from main memory | |||

* MID_FRESH - if the cache is neither the first nor last cache in the list, and the data it has is the same as what is in main memory. | |||

As implied in the [[#Coherence Race Conditions | Race Conditions ]] section, some states are impossible, like: MID_DIRTY or TAIL_CLEAN. | |||

=== Cache States : Simplified === | |||

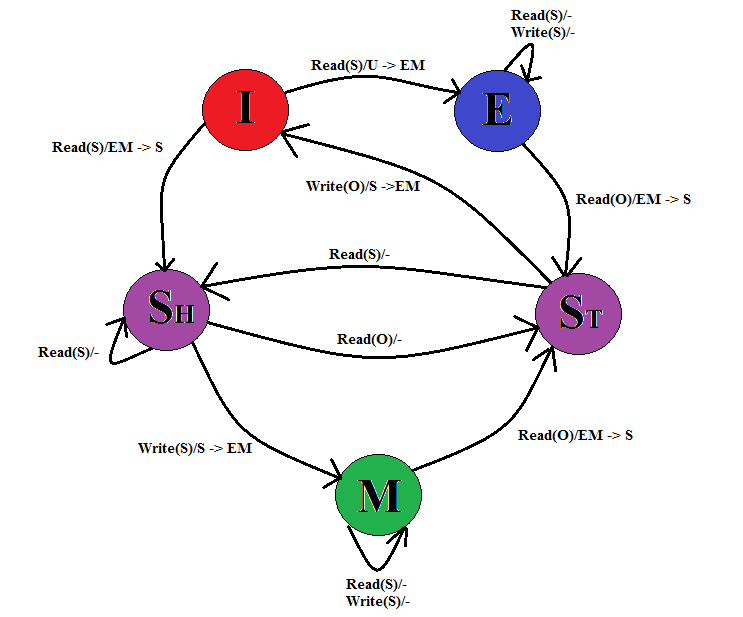

A more simplistic way to envision the SCI coherence protocol states is to limit the system to just two processors and expand the [http://en.wikipedia.org/wiki/MESI_protocol MESI protocol] states. This results in a more compact state diagram, which still gives the general sense of how the protocol causes transitions. It also illustrates how the directory states influence the cache states. In this case, we maintain the three states in the directory (U, S, EM) and the caches have the following states: | |||

# Invalid (I) – if the block in the cache's memory is invalid. | # Invalid (I) – if the block in the cache's memory is invalid. | ||

| Line 40: | Line 94: | ||

# Shared-Tail (St) – if the block in the cache's memory is shared with the other processor's cache and the other processor was the last to read the memory. | # Shared-Tail (St) – if the block in the cache's memory is shared with the other processor's cache and the other processor was the last to read the memory. | ||

To further simplify this scenario, only the following operations are possible: | |||

# Read(O/S) – a read of the data by either the processor attached to the cache (S for Self) or the other processor (O). | # Read(O/S) – a read of the data by either the processor attached to the cache (S for Self) or the other processor (O). | ||

# Write(O/S) – a write of the memory block by either the processor attached to the cache (S) or the other processor (O). | # Write(O/S) – a write of the memory block by either the processor attached to the cache (S) or the other processor (O). | ||

As mentioned in the [[#Coherence Race Conditions | Race Conditions ]] section, in order for a processor to make a write, they have to be in the head of the list (or the Sh state in this scenario). | |||

The following state diagram illustrates the transitions: | |||

[[Image:SCIStatesC.png]] | [[Image:SCIStatesC.png]] | ||

| Line 52: | Line 108: | ||

Initially, the cache is in the "I" state and the directory is in the "U" state. If processor 1 (P1) makes a read, the processor's cache moves from state I to E and the directory moves from U to EM. The directory records that P1 is the head of the directory list. At this point, processor 2 (P2) is in state I. If P2 makes a read, the directory is in state EM, so the directory notifies P1 that P2 is making a read, P1 transitions from E to St, and sends the data to P2. P2 transitions from I to Sh, and creates a pointer to P1 to note that P1 is the next memory on the directory list. The directory transitions from EM to S and the directory updates its pointer from P1 to P2 to note that P2 is now the head of the directory cache list. | Initially, the cache is in the "I" state and the directory is in the "U" state. If processor 1 (P1) makes a read, the processor's cache moves from state I to E and the directory moves from U to EM. The directory records that P1 is the head of the directory list. At this point, processor 2 (P2) is in state I. If P2 makes a read, the directory is in state EM, so the directory notifies P1 that P2 is making a read, P1 transitions from E to St, and sends the data to P2. P2 transitions from I to Sh, and creates a pointer to P1 to note that P1 is the next memory on the directory list. The directory transitions from EM to S and the directory updates its pointer from P1 to P2 to note that P2 is now the head of the directory cache list. | ||

As you can see from the diagram, a Write(S) can only occur when a processor is in the "Sh" state. In the actual SCI protocol, a write can occur when the cache is in the HEAD state combined with CLEAN/FRESH/DIRTY or when the cache is in the ONLY state. In this simplified scenario, these states are combined into one, the Sh state. | |||

== Summary == | |||

The SCI protocol is a directory based protocol for Distributed Memory Management similar to the one described in [[#References | Solihin ]]. This is an extensive and complicated strategy that maintains coherence as it scales to multiple processors. | |||

== References == | |||

* Yan Solihin, ''Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems,'' Solihin Books, 2008. | |||

* Culler, David E. and Jaswinder Pal Singh, ''Parallel Computer Architecture,'' Morgan Kaufmann Publishers, Inc, 1999. | |||

* Gustavson, David, and Qiang Li. "The Scalable Coherent Interface (SCI)." IEEE Communications Magazine 1996: 56. Web. 25 Apr 2011. <http://www.cs.utexas.edu/users/dburger/teaching/cs395t-s08/papers/9_sci.pdf>. | |||

* "1596-1992 - IEEE Standard for Scalable Coherent Interface (SCI)." IEEE Standards Association. N.p., n.d. Web. <http://standards.ieee.org/findstds/standard/1596-1992.html>. | |||

Latest revision as of 23:54, 25 April 2011

Supplemental to Chapter 11: SCI Cache Coherence

This is intended to be a supplement to Chapter 11 of Solihin , which deals with Distributed Shared Memory (DSM) in Multiprocessors. In the book, the basic approaches to DSM are discussed, and a directory based cache coherence protocol is introduced. This protocol is in contrast to the bus-based cache coherence protocols that were introduced earlier. This supplemental focuses on a specific directory based cache coherence protocol called the Scalable Coherent Interface.

Introduction: What is the Scalable Coherent Interface?

The Scalable Coherent Interface (SCI) is an ANSI/IEEE protocol to provide fast point-to-point connections for high-performance multiprocessor systems ("IEEE Standards Association") . It works with both shared memory and message passing and was approved in 1992. In the 1980's, as processors were getting faster and faster, they were beginning to outpace the speed of the bus. SCI was created to provide a non-bus based interconnection protocol. It was intended to be scalable, meaning it can work with few or many multiprocessors.

SCI Cache Coherence

SCI utilizes a directory based cache coherence protocol similar to what is describe in Chapter 11 of Solihin (2008). Instead of cache coherence being handled by caches snooping for interactions on a bus, a directory manages the coherence of each individual cache by creating a doubly-linked list of the caches that are sharing a particular block of memory. The directory maintains a state for the block in memory, and a pointer to the first cache that is on the shared list. The first cache in the list is the cache for the processor that most recently accessed the block of memory. When the "head" of the list modifies the block of memory, they have to notify main memory that they now have the only clean copy of the data. This head cache must also notify the next cache in the shared list so that the cache can invalidate their copy. The information then propagates from there.

The structure for SCI is fairly complicated and can lead to a number of race conditions.

Coherence Race Conditions

There are many different instances in the SCI cache coherence protocol where race conditions are handled in such a way they are a non-issue. For instance, in SCI only the head node in the link list of sharers is able to write to the block. All sharers, including the head, can read. Suppose we have two nodes, N1 and N2, with both nodes sharing a cached block. N1 is the head of the linked list while N2 is the tail of the linked list. If N2 wants to write a value to the cached block, the node must do it in a series of steps. First N2 detaches itself from the linked list by updating N1's NEXT pointer. Since N2 is no longer a sharer, the node must invalidate its cached copy to prevent a race condition (N1 writes to block while N2 holds an out-of-date version of the block). Then N2 has to re-request the block from the directory with intent to write data. The directory then processes N2's request and N2 swaps out the current head's pointer (N1) with its own, making it the new head. N2 then uses the pointer to N1 to invalidate all sharers. In this case N1 is the only sharer (Gustavson, and Li 56) . This allows the SCI protocol to keep write exclusivity. Write exclusivity mean only one write can be performed to a give block at a time. Without write exclusivity, each node sharing the block would be able to write to the block, allowing for multiple writes to happen simultaneously. If each cache wrote simultaneously, every cache sharing the block would get invalidated, including the head. Also the block in memory might not posses the correct value. In order for this to be implemented, there needs to be special states for the head, middle, and tail of the linked list. Also there needs to be states that determine the read/write capabilities of the nodes. Table 1 shows how this scenario would look using a simplistic version of SCI discussed later.

| Table 1: Maintaining Write Exclusivity | ||||||

|---|---|---|---|---|---|---|

| Action | State N1 | State N2 | State Directory | Head Node | Comments | |

| - | Sh | St | S | N1 | Initial State | |

| W2 | Sh | I | S | N1 | Node 2 wants to write | |

| I | M | EM | N2 | N2 becomes new head invalidating N1 | ||

Another scenario described in 11.4.1 of the Solihin textbook is when a cache is in state M and the directory is in state EM for a given block. If the cache holding the block flushes the value and the value does not make it to the directory before a read request is made, then there can be a potential race condition. The race condition is handled by the use of pending states. When the cache flushes a block, the line transitions from state M to a pending state. The pending state transitions into the invalid state only when the directory has acknowledged receiving the flush. This allows the cache to stall any read request made to the block until the block enters the steady state of I (Solihin).

SCI States

Directory States

In SCI, the directory and each cache maintain a series of states. The directory can be in one of three states:

- HOME or UNCACHED (U) – The only clean copy of the memory block is in main memory

- FRESH or SHARED (S) – The memory block is shared, but the copy in main memory is updated

- GONE or EXCLUSIVE/MODIFIED (EM) – The memory block in main memory is not updated, but a cache has the updated memory block

If the directory has the block of memory in the GONE or EM state, then the directory must forward any incoming read/write requests for the block to the last cache that modified the block. That cache is responsible for updating main memory and the block that requested the data. This is why the directory must maintain a pointer to the first cache in the cache list.

Cache States : Complicated

For a cache, there are 29 stable states described in the SCI standard and many pending states. Each state consists of two parts. The first part of the state tells where the state is in the linked list. There are four possibilities (Cutler (1999)):

- ONLY – if the cache is the only state to have cached the memory block

- HEAD – if the cache is the most recent reader/writer of the block and so is the first in the list

- TAIL – if the cache is the least recent reader/writer of the block and so is the last in the list

- MID – if the cache is not the first or last in the list

In addition to these four location designations for the states, there are other states to describe the actual state of the memory on the cache. Some of these include:

- CLEAN – if the data in the memory block has not been modified, is the same as main memory, but can be modified.

- DIRTY – if the data in the memory block has been modified, is different from main memory, and can be written to again if needed.

- FRESH – if the data in the memory block has not been modified, is the same as main memory, but cannot be modified without notifying main memory.

- COPY – if the data in the memory has not been modified, is the same as main memory, but cannot be modified, only read.

Each designation for the location can match with a designation for the state which creates additional states like:

- ONLY_DIRTY - if the cache is the only state to have the cached memory block, and the cache has modified the memory block so it is different from main memory

- MID_FRESH - if the cache is neither the first nor last cache in the list, and the data it has is the same as what is in main memory.

As implied in the Race Conditions section, some states are impossible, like: MID_DIRTY or TAIL_CLEAN.

Cache States : Simplified

A more simplistic way to envision the SCI coherence protocol states is to limit the system to just two processors and expand the MESI protocol states. This results in a more compact state diagram, which still gives the general sense of how the protocol causes transitions. It also illustrates how the directory states influence the cache states. In this case, we maintain the three states in the directory (U, S, EM) and the caches have the following states:

- Invalid (I) – if the block in the cache's memory is invalid.

- Modified (M) – if the block in the cache's memory has been modified and no longer matches the block in main memory.

- Exclusive (E) – if the cache has the only copy of the memory block and it matches the block in main memory.

- Shared-Head (Sh) – if the block in the cache's memory is shared with the other processor's cache, but this processor was the last to read the memory.

- Shared-Tail (St) – if the block in the cache's memory is shared with the other processor's cache and the other processor was the last to read the memory.

To further simplify this scenario, only the following operations are possible:

- Read(O/S) – a read of the data by either the processor attached to the cache (S for Self) or the other processor (O).

- Write(O/S) – a write of the memory block by either the processor attached to the cache (S) or the other processor (O).

As mentioned in the Race Conditions section, in order for a processor to make a write, they have to be in the head of the list (or the Sh state in this scenario).

The following state diagram illustrates the transitions:

Figure 1: Simplified cache state diagram from SCI

Initially, the cache is in the "I" state and the directory is in the "U" state. If processor 1 (P1) makes a read, the processor's cache moves from state I to E and the directory moves from U to EM. The directory records that P1 is the head of the directory list. At this point, processor 2 (P2) is in state I. If P2 makes a read, the directory is in state EM, so the directory notifies P1 that P2 is making a read, P1 transitions from E to St, and sends the data to P2. P2 transitions from I to Sh, and creates a pointer to P1 to note that P1 is the next memory on the directory list. The directory transitions from EM to S and the directory updates its pointer from P1 to P2 to note that P2 is now the head of the directory cache list.

As you can see from the diagram, a Write(S) can only occur when a processor is in the "Sh" state. In the actual SCI protocol, a write can occur when the cache is in the HEAD state combined with CLEAN/FRESH/DIRTY or when the cache is in the ONLY state. In this simplified scenario, these states are combined into one, the Sh state.

Summary

The SCI protocol is a directory based protocol for Distributed Memory Management similar to the one described in Solihin . This is an extensive and complicated strategy that maintains coherence as it scales to multiple processors.

References

- Yan Solihin, Fundamentals of Parallel Computer Architecture: Multichip and Multicore Systems, Solihin Books, 2008.

- Culler, David E. and Jaswinder Pal Singh, Parallel Computer Architecture, Morgan Kaufmann Publishers, Inc, 1999.

- Gustavson, David, and Qiang Li. "The Scalable Coherent Interface (SCI)." IEEE Communications Magazine 1996: 56. Web. 25 Apr 2011. <http://www.cs.utexas.edu/users/dburger/teaching/cs395t-s08/papers/9_sci.pdf>.

- "1596-1992 - IEEE Standard for Scalable Coherent Interface (SCI)." IEEE Standards Association. N.p., n.d. Web. <http://standards.ieee.org/findstds/standard/1596-1992.html>.