CSC/ECE 506 Spring 2011/ch6b df

No edit summary |

No edit summary |

||

| (2 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

=Translation Lookaside Buffer and Cache Addressing= | =Translation Lookaside Buffer and Cache Addressing= | ||

[[Image:Virtual_Memory_address_space.gif|thumbnail|400px|Virtual Memory Addressing<sup><span id="1body">[[#1foot|[1]]]</span></sup>]] | [[Image:Virtual_Memory_address_space.gif|thumbnail|400px|Virtual Memory Addressing<sup><span id="1body">[[#1foot|[1]]]</span></sup>]] | ||

Programs | Programs use virtual addresses to access the memory and caches. Programs use a virtual address to either "increase" the size of the memory or to deal with multiple processes using the same memory/cache. If the program were to not use virtual addresses, two programs running at the same time would need their own section of the cache. For example, if the computer has a 512MB L1 cache and two programs are running at the same time, each program could only use 256MB of the cache. One problem with the programs accessing memory with virtual addresses is that caches and memory need to be accessed with physical addresses. The Operating System is usually used to translate these virtual addresses into physical addresses. The OS can be relatively slow at doing this so a cache is created that stores the most recently accessed addresses. This cache is called the Translation Lookaside Buffer or TLB. Virtual addresses are broken up into two parts: a virtual page number (VPN) and a virtual page offset (VPO). Physical Addresses are broken up into a physical page number (PPN) and a physical page offset (PPO). The page offset is used to determine which page a given value is in. The page number determines which page the data is in. These pages are handled by the OS through page tables. There are three different ways to use the virtual addresses and the physical addresses to access data. The three ways are physically addressed, virtually addressed, and physically tagged but virtually addressed<sup><span id="3body">[[#3foot|[3]]]</span></sup>. | ||

===Translation Lookaside Buffer=== | ===Translation Lookaside Buffer=== | ||

The TLB is a small cache that | The TLB is a small cache that holds the most recently used virtual address to physical address translations. When a virtual address is needing to be accessed, it looks up the address in the TLB to find its corresponding physical page address. If the virtual address is found, then there is a TLB hit and the physical address is obtained. If there is a TLB miss (virtual address is not found) then the OS is called to handle the miss. The OS maps the virtual address to the correct physical address and loads it into the TLB. The use of a TLB reduces the time needed to map a virtual address to a physical address by not having to go through the OS every time<sup><span id="4body">[[#4foot|[4]]]</span></sup>. | ||

===Physically Addressed=== | ===Physically Addressed=== | ||

Physically Addressed refers to using the whole physical address to access memory. To access memory, the computer must first translate the virtual address into a physical address using the TLB. After the computer has the physical address, it can index through the cache and pull out the block it needs. The computer then checks the tag to see if it is a hit or miss. This is not ideal because the time it takes to access the TLB is added onto the time it takes to access the cache<sup><span id="3body">[[#3foot|[3]]]</span></sup>. | Physically Addressed refers to using the whole physical address (PPN and PPO) to access memory. To access memory, the computer must first translate the virtual address into a physical address using the TLB. After the computer has the physical address, it can index through the cache and pull out the block it needs. The computer then checks the tag to see if it is a hit or miss. This is not ideal because the time it takes to access the TLB is added onto the time it takes to access the cache<sup><span id="3body">[[#3foot|[3]]]</span></sup>. | ||

===Virtually Addressed=== | ===Virtually Addressed=== | ||

| Line 14: | Line 14: | ||

===Physically Tagged but Virtually Addressed=== | ===Physically Tagged but Virtually Addressed=== | ||

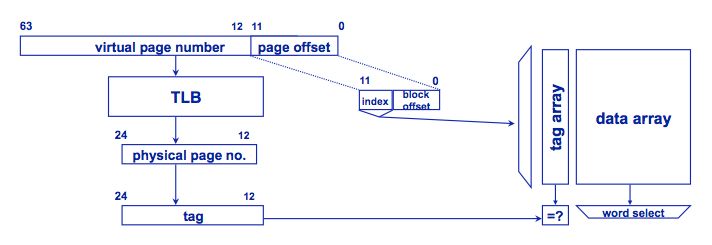

[[Image:VirtualtoPhysicalCacheAddress.jpg|thumbnail|600px|Virtual Memory Addressing<sup><span id="4body">[[#4foot|[4]]]</span></sup>]] | [[Image:VirtualtoPhysicalCacheAddress.jpg|thumbnail|600px|Virtual Memory Addressing<sup><span id="4body">[[#4foot|[4]]]</span></sup>]] | ||

This is where the VPO and the PPO are the exact same and the only thing needing to be translated is the virtual tag. With this model, the TLB only translates the VPN, not the whole virtual address. In this type of addressing, the VPO and VPN are split up. The VPO is used to access the cache while the VPN is sent to the TLB to be translated into the PPN. This method allows us to access the TLB and memory at the same time without the problem addressed with using the virtual address by itself since the PPO is known from the beginning and the cache index and byte offset bits are contained within the PPO. However, there is one problem with this approach. The bits used to specify the set and byte offset are stored within the page offset. Because of this the size of a page has to be greater than the number of sets multiplied by the block size. Given this we find there is a limit on the size of the cache. The maximum cache size is given by the following formula: | This is where the VPO and the PPO are the exact same and the only thing needing to be translated is the virtual tag. With this model, the TLB only translates the VPN, not the whole virtual address. In this type of addressing, the VPO and VPN are split up. The VPO is used to access the cache while the VPN is sent to the TLB to be translated into the PPN. This method allows us to access the TLB and memory at the same time without the problem addressed with using the virtual address by itself since the PPO is known from the beginning and the cache index and byte offset bits are contained within the PPO. However, there is one problem with this approach. The bits used to specify the set and byte offset are stored within the page offset. Because of this the size of a page has to be greater than the number of sets multiplied by the block size. Given this we find there is a limit on the size of the cache. The maximum cache size is given by the following formula<sup><span id="3body">[[#3foot|[3]]]</span></sup>: | ||

| Line 20: | Line 20: | ||

For example, | For example, a cache has the following parameters: page size is 4KB and cache is 2 way set associative with a block size of 32 bytes. A page size of 4KB means 12 bits are needed for the page offset. also, 5 bits are needed for the block offset. This leaves 7 bits to specify the cache set which results in 2^7 or 128 different sets. With the associativity being 2, the maximum cache size is 8KB. This turns out to be a lesser issue due to the fact that bigger caches take longer to access<sup><span id="3body">[[#3foot|[3]]]</span></sup>. | ||

From the above equation and knowing that cache size = (number of sets) X (associativity) X (block size). we can derive a formula relating associativity to cache size and page size: | From the above equation and knowing that cache size = (number of sets) X (associativity) X (block size). we can derive a formula relating associativity to cache size and page size: | ||

Latest revision as of 00:29, 21 March 2011

Translation Lookaside Buffer and Cache Addressing

Figure: Virtual Memory Addressing[1]

Programs use virtual addresses to access memory and caches. Virtual addresses let a program appear to have more memory than is physically present, and they let multiple processes share the same memory and cache. If programs did not use virtual addresses, two programs running at the same time would each need their own fixed section of the cache. For example, if the computer has a 512KB cache and two programs are running at the same time, each program could use only 256KB of it. One problem with accessing memory through virtual addresses is that memory itself must ultimately be accessed with physical addresses. The operating system maintains page tables that translate virtual addresses into physical addresses. Consulting those tables on every access would be slow, so a small cache that stores the most recently used translations is added. This cache is called the Translation Lookaside Buffer (TLB). Virtual addresses are broken up into two parts: a virtual page number (VPN) and a virtual page offset (VPO). Physical addresses are likewise broken up into a physical page number (PPN) and a physical page offset (PPO). The page number determines which page the data is in, and the page offset determines where the data is located within that page. Translation changes only the page number, so the offset is the same in the virtual and the physical address. There are three different ways to combine virtual and physical addresses when accessing the cache: physically addressed, virtually addressed, and physically tagged but virtually addressed[3].
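
To illustrate the VPN/VPO split, the short Python sketch below assumes 4KB pages (a 12-bit offset) and uses a hypothetical one-entry page table. Neither value comes from a specific machine. The sketch shows that translation replaces only the page number while the offset passes through unchanged.

```python
PAGE_SIZE = 4096                           # assumed 4KB pages
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 offset bits for a 4KB page

def split_virtual_address(va):
    """Split a virtual address into (VPN, VPO)."""
    vpn = va >> OFFSET_BITS
    vpo = va & (PAGE_SIZE - 1)
    return vpn, vpo

def make_physical_address(ppn, ppo):
    """Combine a PPN and a PPO into a physical address."""
    return (ppn << OFFSET_BITS) | ppo

vpn, vpo = split_virtual_address(0x12345ABC)
print(hex(vpn), hex(vpo))          # 0x12345 0xabc

page_table = {0x12345: 0x42}       # hypothetical single VPN -> PPN mapping
pa = make_physical_address(page_table[vpn], vpo)   # PPO == VPO
print(hex(pa))                     # 0x42abc
```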

Translation Lookaside Buffer

The TLB is a small cache that holds the most recently used virtual-to-physical address translations. When a virtual address needs to be accessed, the processor looks up its VPN in the TLB to find the corresponding physical page number. If the translation is found, there is a TLB hit and the physical address is obtained immediately. If there is a TLB miss (the translation is not found), the OS is called to handle the miss. It looks up the correct physical address in the page table and loads the translation into the TLB. On many processors, such as x86, a hardware page-table walker handles TLB misses instead of the OS. Using a TLB reduces the time needed to map a virtual address to a physical address because the page table does not have to be consulted on every access[4].
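
The hit/miss behavior described above can be modeled with a minimal sketch. It assumes a fully associative TLB with LRU replacement, and it uses a hypothetical dictionary as the page table. Real TLBs differ in size, associativity, and replacement policy. A missing page-table entry (a page fault) is not handled here.

```python
from collections import OrderedDict

class TLB:
    """Toy fully associative TLB with LRU replacement (an assumption)."""

    def __init__(self, capacity, page_table):
        self.capacity = capacity
        self.page_table = page_table   # hypothetical OS-maintained VPN -> PPN map
        self.entries = OrderedDict()   # VPN -> PPN, least recently used first
        self.hits = 0
        self.misses = 0

    def lookup(self, vpn):
        if vpn in self.entries:              # TLB hit
            self.hits += 1
            self.entries.move_to_end(vpn)    # mark as most recently used
            return self.entries[vpn]
        # TLB miss: consult the page table (done by the OS or a hardware walker)
        self.misses += 1
        ppn = self.page_table[vpn]           # KeyError here would be a page fault
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False) # evict the least recently used entry
        self.entries[vpn] = ppn
        return ppn

tlb = TLB(capacity=2, page_table={0: 7, 1: 3, 2: 9})
for vpn in [0, 1, 0, 2, 1]:
    tlb.lookup(vpn)
print(tlb.hits, tlb.misses)   # 1 4
```

The second access to VPN 1 misses because VPN 1 was evicted when VPN 2 was loaded. That access pattern is exactly the case the LRU policy chose to sacrifice.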

Physically Addressed

Physically addressed refers to using the whole physical address (PPN and PPO) to access the cache. The computer must first translate the virtual address into a physical address using the TLB. Once it has the physical address, it uses the index bits to select a set in the cache and reads out the block. It then compares the tag to determine whether the access is a hit or a miss. This is not ideal because the two lookups happen one after the other, so the time to access the TLB is added to the time to access the cache[3].
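
The serial order of the two steps can be sketched as follows. The cache geometry (32-byte blocks, 128 sets) and the 4KB page size are assumptions. `translate` is a hypothetical stand-in for the TLB.

```python
BLOCK_SIZE = 32                            # assumed block size in bytes
NUM_SETS = 128                             # assumed number of sets
PAGE_BITS = 12                             # assumed 4KB pages
BLOCK_BITS = BLOCK_SIZE.bit_length() - 1   # 5
INDEX_BITS = NUM_SETS.bit_length() - 1     # 7

def cache_fields(pa):
    """Split a physical address into (tag, set index, block offset)."""
    offset = pa & (BLOCK_SIZE - 1)
    index = (pa >> BLOCK_BITS) & (NUM_SETS - 1)
    tag = pa >> (BLOCK_BITS + INDEX_BITS)
    return tag, index, offset

def physically_addressed_access(va, translate):
    """Step 1 (TLB) must finish before step 2 (cache) can start."""
    vpn = va >> PAGE_BITS
    vpo = va & ((1 << PAGE_BITS) - 1)
    pa = (translate(vpn) << PAGE_BITS) | vpo   # step 1: translate VPN -> PPN
    return cache_fields(pa)                    # step 2: index the cache, check the tag

tlb = {0x12345: 0x42}   # hypothetical translation
print(physically_addressed_access(0x12345ABC, tlb.__getitem__))   # (66, 85, 28)
```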

Virtually Addressed

Virtually addressed refers to using the whole virtual address to index and tag the cache. The advantage is that the cache and the TLB can be accessed at the same time. The problem is that each process has its own virtual address space and page table, so the same virtual address in two processes generally refers to different data. When the processor switches from one process to another, the cache still holds the previous process's blocks. The new process cannot tell the difference between its own pages and the pages of the previous process. To solve this, the cache and TLB have to be flushed (re-initialized to empty) before the new process runs. If processes switch often, the time spent flushing, plus the misses that follow, will slow down the program[3].
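
The stale-data problem and the flush that fixes it can be illustrated with a toy model. The class name and the per-process dictionaries are hypothetical. To keep the sketch short, the cache stores whole values keyed by virtual address rather than blocks.

```python
class VirtuallyAddressedCache:
    """Toy cache indexed and tagged by the full virtual address (hypothetical)."""

    def __init__(self):
        self.lines = {}   # virtual address -> cached data

    def read(self, va, memory):
        """memory: the running process's virtual address -> data mapping."""
        if va in self.lines:
            return self.lines[va], "hit"
        data = memory[va]
        self.lines[va] = data
        return data, "miss"

    def flush(self):
        """Empty the cache, as required on a context switch."""
        self.lines.clear()

mem_a = {0x1000: "A's data"}   # process A's data at virtual address 0x1000
mem_b = {0x1000: "B's data"}   # process B maps the same address to other data

cache = VirtuallyAddressedCache()
print(cache.read(0x1000, mem_a))   # ("A's data", 'miss')
# Context switch to B without a flush: B wrongly hits on A's line.
print(cache.read(0x1000, mem_b))   # ("A's data", 'hit')
cache.flush()                      # flush on the context switch instead
print(cache.read(0x1000, mem_b))   # ("B's data", 'miss')
```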

Physically Tagged but Virtually Addressed

Figure: Virtual Memory Addressing[4]

In this scheme the VPO and the PPO are identical, so only the VPN needs to be translated. The TLB therefore translates only the VPN, not the whole virtual address. The virtual address is split into its VPO and VPN. The VPO is used to index the cache while, in parallel, the VPN is sent to the TLB to be translated into the PPN. The PPN is then compared against the physical tags stored in the indexed set. This allows the TLB and the cache to be accessed at the same time, without the flushing problem of purely virtual addressing. That works because the PPO is known from the start and the cache index and byte offset bits are contained within it. However, this approach has one limitation. Because the set index and byte offset bits must lie within the page offset, the page size has to be greater than or equal to the number of sets multiplied by the block size. This places a limit on the size of the cache. The maximum cache size is given by the following formula[3]:

MaxCacheSize = PageSize X Associativity
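
The parallel lookup can be sketched as below. It assumes 4KB pages, 32-byte blocks, and 128 sets, so the index and block offset exactly fill the page offset. The TLB contents and cache sets are hypothetical. The two independent steps are written sequentially here; hardware would overlap them.

```python
PAGE_BITS = 12    # assumed 4KB pages
BLOCK_BITS = 5    # assumed 32-byte blocks
INDEX_BITS = 7    # assumed 128 sets
# Requirement for this scheme: set index + block offset must fit in the page offset.
assert INDEX_BITS + BLOCK_BITS <= PAGE_BITS

def vipt_lookup(va, tlb, cache_sets):
    """Return the cached block for va, or None on a cache miss.

    tlb: dict VPN -> PPN (hypothetical); cache_sets: list of sets,
    each a list of (physical_tag, block) ways.
    """
    vpn = va >> PAGE_BITS
    vpo = va & ((1 << PAGE_BITS) - 1)
    # Cache side: the set index uses only VPO bits, which equal the PPO.
    ways = cache_sets[(vpo >> BLOCK_BITS) & ((1 << INDEX_BITS) - 1)]
    # TLB side: only the VPN is translated (independent of the step above).
    ppn = tlb[vpn]
    # Physical tag check: index + offset fill the page offset, so the tag is the PPN.
    for tag, block in ways:
        if tag == ppn:
            return block
    return None

tlb = {0x12345: 0x42, 0x11111: 0x99}
cache_sets = [[] for _ in range(1 << INDEX_BITS)]
cache_sets[85].append((0x42, "block for PPN 0x42"))

print(vipt_lookup(0x12345ABC, tlb, cache_sets))   # block for PPN 0x42
print(vipt_lookup(0x11111ABC, tlb, cache_sets))   # None (same set, different tag)
```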

For example, consider a cache with the following parameters: the page size is 4KB, and the cache is 2-way set associative with a block size of 32 bytes. A 4KB page needs 12 bits for the page offset, and a 32-byte block needs 5 bits for the block offset. This leaves 7 bits to specify the cache set, which gives 2^7 = 128 sets. With an associativity of 2, the maximum cache size is 128 X 2 X 32 bytes = 8KB, which equals 4KB X 2. In practice this limit is less of an issue than it may seem. Bigger caches take longer to access, so first-level caches are kept small anyway[3].
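
The arithmetic in this example can be checked with a small helper. The function name is hypothetical, and it assumes the page and block sizes are powers of two.

```python
def vipt_limits(page_size, block_size, associativity):
    """Bit counts and maximum cache size when the index must fit in the page offset."""
    page_offset_bits = page_size.bit_length() - 1
    block_offset_bits = block_size.bit_length() - 1
    index_bits = page_offset_bits - block_offset_bits   # bits left for the set index
    num_sets = 1 << index_bits
    max_cache_size = num_sets * associativity * block_size   # == page_size * associativity
    return page_offset_bits, block_offset_bits, index_bits, num_sets, max_cache_size

print(vipt_limits(4096, 32, 2))   # (12, 5, 7, 128, 8192)
```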

From the above equation, and knowing that cache size = (number of sets) X (associativity) X (block size), we can derive a formula relating associativity to cache size and page size:

Associativity ≥ (cache size) / (page size)

With a fixed page size, the only way to increase cache size is to increase associativity. For example, the VAX processor has a page size of 512 bytes. If the cache is 16KB, the associativity would have to be at least 16KB / 512 bytes = 32[2].
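
The minimum associativity follows directly from the inequality above. A minimal sketch (the helper name is hypothetical) shows that a 16KB cache needs associativity of at least 32 with 512-byte pages, but only 4 with 4KB pages.

```python
def min_associativity(cache_size, page_size):
    """Smallest associativity that keeps the cache index inside the page offset."""
    return -(-cache_size // page_size)   # ceiling of cache_size / page_size

print(min_associativity(16 * 1024, 512))    # 32
print(min_associativity(16 * 1024, 4096))   # 4
```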

TLB Coherency Problem

When a computer has multiple cores or processors, each with its own caches and TLB, a TLB coherence problem arises. When one processor changes a page table entry (for example, by remapping a page), the TLBs of other processors may still hold stale copies of the old translation. This problem cannot simply be solved by having the changing processor invalidate the corresponding entries in all the other TLBs. On most systems, a processor cannot directly invalidate another processor's TLB entries. One effective way to deal with the TLB coherence problem is the shootdown algorithm. When a processor realizes that the changes it is making might cause inconsistencies in the TLBs, it invokes the shootdown algorithm. The algorithm sends interrupts to the affected processors so that they perform the required TLB consistency actions, for example flushing a single entry or the whole buffer[5]. Each interrupt is thought of as "shooting" entries out of a TLB, and the entire process is called a shootdown. Once the shootdown completes, the inconsistent entries are guaranteed never to be used by any TLB again. On Intel processors, the INVLPG instruction invalidates the TLB entry for a single page[6]. INVLPG affects only the TLB of the processor that executes it. A shootdown algorithm therefore has each interrupted processor execute it locally, so that the entry is invalidated in multiple TLBs.
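
The shootdown idea can be illustrated with a sequential toy simulation. Everything in it is hypothetical: `Processor`, `shootdown`, and the `pending` list stand in for real inter-processor interrupts, and `invlpg` only mimics the local-only effect of the INVLPG instruction. It is not the algorithm from [5]. In particular, it omits the synchronization a real shootdown needs to keep other processors from using the old mapping while it is being changed.

```python
class Processor:
    """Toy processor with a private TLB (hypothetical model)."""

    def __init__(self, cpu_id):
        self.cpu_id = cpu_id
        self.tlb = {}        # VPN -> PPN translations cached by this processor
        self.pending = []    # VPNs delivered by "interrupts" from other processors

    def invlpg(self, vpn):
        """Local-only invalidation, analogous to INVLPG: touches this TLB only."""
        self.tlb.pop(vpn, None)

    def handle_shootdown_interrupt(self):
        """Invalidate every requested entry locally, then acknowledge."""
        while self.pending:
            self.invlpg(self.pending.pop())
        return True

def shootdown(initiator, processors, page_table, vpn, new_ppn):
    """Sequential simplification of a TLB shootdown; returns the number of acks."""
    page_table[vpn] = new_ppn     # change the mapping
    initiator.invlpg(vpn)         # the initiator can flush its own TLB directly
    # Only processors that may cache the translation are interrupted.
    targets = [p for p in processors if p is not initiator and vpn in p.tlb]
    for p in targets:
        p.pending.append(vpn)     # "shoot" an interrupt at each affected processor
    # In hardware the targets respond concurrently; the initiator waits for all acks.
    acks = [p.handle_shootdown_interrupt() for p in targets]
    return len(acks)

page_table = {5: 100}
cpus = [Processor(i) for i in range(3)]
for cpu in cpus[:2]:
    cpu.tlb[5] = page_table[5]    # CPUs 0 and 1 cache the old translation

interrupted = shootdown(cpus[0], cpus, page_table, 5, 200)
print(interrupted)                  # 1 (only CPU 1 needed an interrupt)
print([5 in c.tlb for c in cpus])   # [False, False, False]
```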

References

1. http://www.ibm.com/developerworks/linux/library/l-kernel-memory-access/index.html?ca=dgr-lnxw100LXUserSpacedth-LX

2. http://people.engr.ncsu.edu/efg/521/f02/common/lectures/notes/lec9.html

3. Fundamentals of Parallel Computer Architecture by Prof. Yan Solihin

4. Computer Design & Technology, lecture slides by Prof. Eric Rotenberg

5. "Translation Lookaside Buffer Consistency: A Software Approach". David L. Black, Richard F. Rashid, David B. Golub, Charles R. Hill, and Robert V. Baron. Carnegie Mellon University

6. http://faydoc.tripod.com/cpu/invlpg.htm