CSC/ECE 506 Fall 2007/wiki1 9 arubha: Difference between revisions

No edit summary |

No edit summary |

||

| (34 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

== Trends in vector processing and array processing == | |||

'''''Array Processing''''' is a computer architectural concept that was first put to use in the early 1960s. As scientific computing evolved, the need to process large amounts of data using a common algorithm became important. Computers with an array of processing elements (PEs), controlled by a common [http://en.wikipedia.org/wiki/Control_unit control unit] (CU) were built. The PEs were usually [http://en.wikipedia.org/wiki/Arithmetic_logic_unit ALUs], capable of performing simple mathematical operations. The [http://en.wikipedia.org/wiki/Central_processing_unit CPU] itself would perform the job of the CU. | '''''Array Processing''''' is a computer architectural concept that was first put to use in the early 1960s. As scientific computing evolved, the need to process large amounts of data using a common algorithm became important. Computers with an array of processing elements (PEs), controlled by a common [http://en.wikipedia.org/wiki/Control_unit control unit] (CU) were built. The PEs were usually [http://en.wikipedia.org/wiki/Arithmetic_logic_unit ALUs], capable of performing simple mathematical operations. The [http://en.wikipedia.org/wiki/Central_processing_unit CPU] itself would perform the job of the CU. | ||

As computer architectures evolved, a new concept called the '''''Vector processing''''' was developed during the 1970s. In ''vector processing'', a PE usually consists of a collection of functional units that operate on vectors of data. This greatly simplifies the interconnections and reduces data dependency, compared to ''array processing''. | As computer architectures evolved, a new concept called the '''''Vector processing''''' was developed during the 1970s. In ''vector processing'', a PE usually consists of a collection of functional units that operate on vectors of data. | ||

Since vector architectures provide instructions that perform the same operation on multiple sets of corresponding operands, they exploit [http://en.wikipedia.org/wiki/Instruction_level_parallelism Instruction-Level Parallelism] (ILP) to a large degree. This greatly simplifies the interconnections and reduces data dependency, compared to ''array processing''. | |||

The basic idea of vectorization is to realize that when performing an operation for the first iteration of the loop, we might as well do the same operation for a bunch of following iterations, too. When we get to the bottom of the loop, we'll have accomplished the work which would require multiple passes on a scalar architecture.The concept of vectorization is extensively used in MATLAB . For more details, refer to the following link : [http://web.cecs.pdx.edu/~gerry/MATLAB/programming/performance.html MATLAB_VECTORS] | |||

'''Advantages of Vector Processing:''' | |||

* Pipelining is obviously applicable to vector instructions , since all the elemental operations are independant | |||

* Once the compiler has done the job of converting loops into vector form, the hardware retains lots of flexibility in executing the elemental operations as data become available | |||

* Vectorized code posesses better readability as compared to software-pipelined VLIW code | |||

* Vectorized code is optimised in order to fully exploit large processor bandwidths and interleaved memory systems | |||

'''Pitfalls:''' | |||

* Not every software loop may be amenable to vectorization . ( However, the data dependancy requirements for vectorization are weaker than those for full paralleization. ) | |||

* Some of the code will still have to run the normal scalar instruction set. So a vector machine still has to have a high-performance scalar microarchitecture with speculation,branch prediction, pipelining, multiple issue , etc. | |||

[http://en.wikipedia.org/wiki/Vector_processor Vector processors] and array processors form the basic building blocks of some of the early and most successful supercomputers. Vector and array processing techniques are extensively used by applications like ocean mapping, [http://en.wikipedia.org/wiki/3D_modeling 3D modeling], molecular modeling, [http://en.wikipedia.org/wiki/Weather_forecasting weather forecasting], wind tunnel simulations. The Airbus [http://en.wikipedia.org/wiki/Airbus_A380 A380] project made use of the [http://www.nec.co.jp/hpc/sx-e/sx-world/no27/e10_11.pdf NEC SX-5], scalable vector processor architecture supercomputers, to run simulations and fine tune the design even before the aircraft's maiden flight. | [http://en.wikipedia.org/wiki/Vector_processor Vector processors] and array processors form the basic building blocks of some of the early and most successful supercomputers. Vector and array processing techniques are extensively used by applications like ocean mapping, [http://en.wikipedia.org/wiki/3D_modeling 3D modeling], molecular modeling, [http://en.wikipedia.org/wiki/Weather_forecasting weather forecasting], wind tunnel simulations. The Airbus [http://en.wikipedia.org/wiki/Airbus_A380 A380] project made use of the [http://www.nec.co.jp/hpc/sx-e/sx-world/no27/e10_11.pdf NEC SX-5], scalable vector processor architecture supercomputers, to run simulations and fine tune the design even before the aircraft's maiden flight. | ||

== Trends == | == Past Trends == | ||

The first implementation of a vector processing based | The earliest array processors were used to operate on matrix-like data. The CU would load all the ALUs with a common instruction. The ALUs would get data inputs from a array of memory locations, containing different values from the matrix. This concept of using separate ALUs for each data element, but performing the same operation, is classifed as the Single-Instruction-Multiple-Data ([http://en.wikipedia.org/wiki/SIMD SIMD]) under the [http://en.wikipedia.org/wiki/Flynn's_Taxonomy Flynn Taxonomy]. | ||

The first implementation of a vector processing based computer system was the [http://en.wikipedia.org/wiki/CDC_STAR-100 CDC STAR-100]. It was developed by the [http://en.wikipedia.org/wiki/Control_Data_Corporation Control Data Corporation] (CDC) in the early 1970s and was capable of performing 100 million floating point operations ([http://en.wikipedia.org/wiki/MFLOPS MFLOPS]). The CDC STAR-100 combined scalar and vector computations. Though it was able to achieve a peak performance of 20 MFLOPS, when fully loaded, it's performance for real-life data sets was much lower. | |||

The first system to fully exploit the vector processing architecture was the [http://en.wikipedia.org/wiki/Cray-1 Cray-1]. The Cray-1, again developed by CDC, was able to overcome some of the pitfalls encounterd during the STAR-100 project. The STAR-100 took a lot of time decoding vector instructions and also had to re-fetch data every time an instruction asked for it. The Cray-1 moved away from the memory-memory architecture and introduced a set of CPU registers which would not only pre-fetch frequently used data,but also successive instructions, thus introducing pipelining. This enabled Cray-1 to work on more flexible data-sets and also improved it's instruction fetch & decoding times. But the registers introduced a limit on the vector sizes and also made the system expensive. Even with the drawbacks, the Cray-1 managed to perform at 80 MFLOPS. | |||

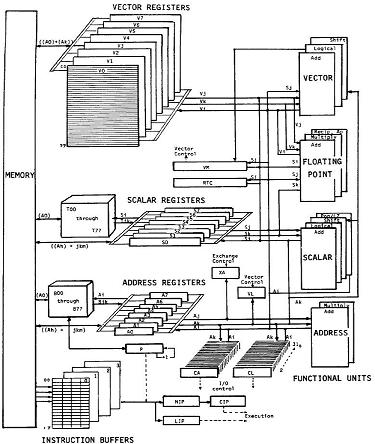

<br>[[Image:Cray1.JPG]]<br>Functional organization of Cray-1<br> | |||

Following the Cray-1, several supercomputers based on vector processing principles, followed. Companies like Fujitsu with [http://www.vaxman.de/historic_computers/fujitsu/vp100/vp100.html VP100] & VP200, Hitachi with S810 and NEC with [http://www.hpce.nec.com/47.0.html SX series], entered the fray. [http://en.wikipedia.org/wiki/Floating_Point_Systems Floating Point Systems] (FPS) came up with a minicomputer with floating point co-processors, the AP-120B. It consisted of array coprocessors exclusively performing floating point operations. It made the conventional minicomputer faster for floating point operations and also less expensive. | |||

Shared communication registers and reconfigurable vector registers are special features in these machines. Japanese | |||

computers are strong in high-speed hardware and interactive vectorizing compilers. | |||

Subsequent MEC supercomputers had the following architectural features: | |||

* four sets of vector pipelines per processor ( 2 add/shift and 2 multiply/ logic pipelines ) | |||

* a scalar unit employing RISC architecture | |||

* support for instruction re-ordering to acheive a higher degree of parallelism | |||

* interleaved main memory | |||

* a maximum of 4 configurable I/O processors to accommodate a 1GBps data transfer rate per I/O processor ( upto 256 channels provided for high-speed network, graphics and peripheral operations ) | |||

With advances in technology, the computers based on vector and array processing models, kept getting faster, owing to faster clock speeds and faster switching gates. As integration levels continue to increase, it becomes more feasible and attractive to put some processing power on the same die as memory circuits. The amount of bandwidth inside a memory chip is astounding, but very little of it makes it out to the pins. When processors are put on the same die as memory circuits, there'll be a premium put on die area (read: simple logic), heat dissipation (read: simple logic again), and bandwidth exploitation (read: ILP). Vectors are the natural solution . | |||

== Emerging Trends == | |||

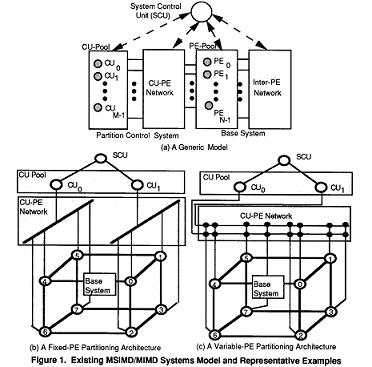

Unlike the past, vector architectures are now being targeted at environments other than supercomputing. Multiple Single-Instruction-Multiple-Data (MSIMD) is the emerging concept which makes use of both scalar and vector architectures. A MSIMD system consists of several PEs, like any other vector architecture, a collection of which may be collectively controlled by a single Control Unit. The number of PEs under a CU is dynamically arrived at and is called ''partioning''. Partioning is performed by the parallel program running on the system.<br>[[Image:MSIMD.JPG]]<br> MSIMD System Models<br> | |||

==== Cell Architecture ==== | |||

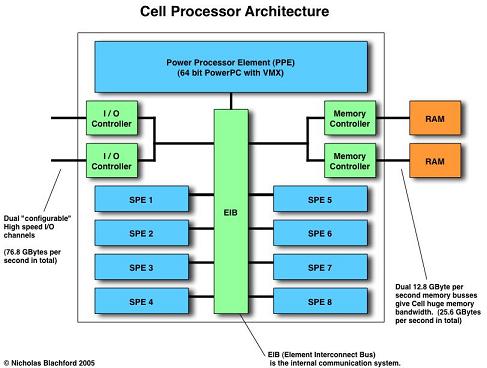

An example of a commercial system which uses the above architecure is the '''Cell''' architecture which was originally envisioned by Sony for the PlayStation 3 . The architecture as it exists today was the work of three companies: Sony, Toshiba and IBM. The Cell architecture is touted to be a relatively general-purpose and exhibits a marked departure from conventional commercial microprocessor designs in that its architecture is closer to multiprocessor vector supercomputers. | |||

Cell is an architecture for high performance distributed computing. It is comprised of hardware and software Cells, software Cells consist of data and programs, which are sent out to the hardware Cells where they are computed, the results are then returned.The cell hardware consists of a Power Processing Element (PPE ) and several Synergistic Processing Elements ( SPEs ). | |||

The PPE is a conventional microprocessor core which sets up tasks for the SPEs to do. In a Cell based system the PPE will run the operating system and most of the applications except all the computationally intensive tasks related to the OS and the applications are offloaded to the SPEs. | |||

An SPE is a self contained vector processor. Like the PPE the SPEs are in-order processors and have no Out-Of-Order capabilities ( i.e instruction re-ordering is not supported ). This means that as with the PPE the compiler is very important. The SPEs do however have 128 registers and this gives plenty of room for the compiler to unroll loops and | |||

perform other relevant optimizations.<br>[[Image:cellarch.JPG]]<br> Cell processor Architecture<br> | |||

'' Stream Processing " : A big difference in Cells from normal CPUs is the ability of the SPEs in a Cell to be chained together to act as a stream processor.A stream processor takes data and processes it in a series of steps. A Cell processor can be set-up to perform streaming operations in a sequence with one | |||

or more SPEs working on each step. In order to do stream processing an SPE reads data from an input into it's local store, performs the processing step then stores the result into it's local store. The second SPE reads the output from the first SPE's local store and processes it and stores it in it's output area. | |||

Streaming is useful in the context of vector processing because it is possible to stream vector results into vector operands and execute multiple dependant vector operations in parallel.Stream processing does not generally require large memory bandwidth but Cell has it anyway and in addition, the internal interconnect system allows multiple communication streams between SPEs simultaneously so they don’t hold each other up.The SPEs are dedicated high speed vector processors and with their own memory don't need to share anything other than the memory (and not even this much if the data can fit in the local stores). In addition , because there are multiple SPEs , the computational capabililty is potentially quite large. | |||

''[http://www.es.jamstec.go.jp/index.en.html Earth Simulator]'', till recently listed among the top 500 supercomputers[[http://www.top500.org]], is another example of a MSIMD implementation. | |||

MSIMD architecture introduces the concept of scalable vector lengths and hence makes any vector parallel processing system configurable based on the application requirements. This ushers in a new era of general purpose supercomputers which will be inexpensive and more versatile. It is demonstrated best by Sony Playstation 3 which is based on [http://en.wikipedia.org/wiki/Cell_microprocessor Cell processor] | |||

== Links == | == Links == | ||

* http://www.freepatentsonline.com/5991531.html | * http://www.freepatentsonline.com/5991531.html | ||

* http://www.freepatentsonline.com/4725973.html | * http://www.freepatentsonline.com/4725973.html | ||

* http://sim.sagepub.com/cgi/reprint/47/3/93 | |||

==Bibliography == | ==Bibliography == | ||

* Parallel computer architecture : a hardware/software approach / David E. Culler, Jaswinder Pal Singh, with Anoop Gupta. ISBN:1558603433 | * Parallel computer architecture : a hardware/software approach / David E. Culler, Jaswinder Pal Singh, with Anoop Gupta. ISBN:1558603433 | ||

* Lecture notes by Professor David A. Patterson http://www.cs.berkeley.edu/~pattrsn/252S98/Lec07-vector.pdf | * Computer architecture : a quantitative approach / John L. Hennessy, David A. Patterson ; with contributions by Andrea C. Arpaci-Dusseau. ISBN:0123704901 | ||

* Lecture notes by Professor David E. Culler http://www.cs.berkeley.edu/~culler/cs252-s02/slides/Lec20-vector.pdf | * Lecture notes by Professor David A. Patterson [http://www.cs.berkeley.edu/~pattrsn/252S98/Lec07-vector.pdf PDF] | ||

* Lecture notes by Professor David E. Culler [http://www.cs.berkeley.edu/~culler/cs252-s02/slides/Lec20-vector.pdf PDF] | |||

* Cray-1 architecture [http://en.wikipedia.org/wiki/Cray-1 Weblink] | |||

* Vector processors [http://en.wikipedia.org/wiki/Vector_processor Weblink] | |||

* 'Single processor-pool MSIMD/MIMD architectures' by Mohamed S. Baig, Tarik A. El-Ghazawi and Nikitas A. Alexandridis [http://ieeexplore.ieee.org/iel2/448/6240/00242709.pdf?tp=&isnumber=&arnumber=242709 PDF] | |||

* Cray-1 Computer System [http://delivery.acm.org/10.1145/360000/359336/p63-russell.pdf?key1=359336&key2=9292309811&coll=GUIDE&dl=GUIDE&CFID=34093939&CFTOKEN=44395131 PDF] | |||

* Cell Architecture [http://www.blachford.info/computer/Cell/Cell1_v2.html Weblink] | |||

* Advanced Computer Architecture - Parallelism, Scalability, Programmability / Kai Hwang ISBN:007053070 | |||

Latest revision as of 20:10, 10 September 2007

Trends in vector processing and array processing

Array Processing is a computer architectural concept that was first put to use in the early 1960s. As scientific computing evolved, the need to process large amounts of data using a common algorithm became important. Computers were built with an array of processing elements (PEs) controlled by a common control unit (CU). The PEs were usually ALUs, capable of performing simple mathematical operations. The CPU itself would perform the job of the CU.

As computer architectures evolved, a new concept called vector processing was developed during the 1970s. In vector processing, a PE usually consists of a collection of functional units that operate on vectors of data. Since vector architectures provide instructions that perform the same operation on multiple sets of corresponding operands, they exploit Instruction-Level Parallelism (ILP) to a large degree. This greatly simplifies the interconnections and reduces data dependency, compared to array processing.

The basic idea of vectorization is that when an operation is performed for the first iteration of a loop, the same operation might as well be performed for a batch of the following iterations too. By the time execution reaches the bottom of the loop, it has accomplished work that would require multiple passes on a scalar architecture. The concept of vectorization is used extensively in MATLAB; for more details, see http://web.cecs.pdx.edu/~gerry/MATLAB/programming/performance.html (MATLAB_VECTORS).
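The idea of doing several iterations per pass can be sketched in plain Python. This is only an illustration: the vector length `VLEN` and the function names are assumptions made for the example, and a Python slice merely stands in for a hardware vector instruction.

```python
VLEN = 4  # assumed vector length: elements handled by one "vector instruction"


def scalar_add(a, b):
    """Add two lists one element per loop pass, as a scalar machine would."""
    out, passes = [], 0
    for x, y in zip(a, b):
        out.append(x + y)
        passes += 1
    return out, passes


def vector_add(a, b, vlen=VLEN):
    """Add two lists up to `vlen` elements per loop pass."""
    out, passes = [], 0
    for i in range(0, len(a), vlen):
        # One pass applies the same add to a whole slice of operands.
        out.extend(x + y for x, y in zip(a[i:i + vlen], b[i:i + vlen]))
        passes += 1
    return out, passes


a = list(range(10))
b = [10 * x for x in a]
scalar_result, scalar_passes = scalar_add(a, b)
vector_result, vector_passes = vector_add(a, b)
```

Both versions produce the same sums, but the scalar loop needs 10 passes for 10 elements while the chunked version needs 3.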

Advantages of Vector Processing:

- Pipelining is obviously applicable to vector instructions, since all the elemental operations are independent

- Once the compiler has done the job of converting loops into vector form, the hardware retains a lot of flexibility in executing the elemental operations as data become available

- Vectorized code is more readable than software-pipelined VLIW code

- Vectorized code is optimised to fully exploit large processor bandwidths and interleaved memory systems

Pitfalls:

- Not every software loop is amenable to vectorization. (However, the data dependency requirements for vectorization are weaker than those for full parallelization.)

- Some of the code will still have to run on the normal scalar instruction set. So a vector machine still needs a high-performance scalar microarchitecture with speculation, branch prediction, pipelining, multiple issue, etc.
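The first pitfall can be made concrete with a small Python sketch. The function names and data are hypothetical, chosen only for illustration. A loop whose iterations are independent can be computed all at once. A loop with a recurrence, where each element depends on the one just computed, gives a different answer if every element is naively computed from the old values.

```python
def independent_loop(b, c):
    """a[i] = b[i] + c[i]: no iteration depends on another, so it vectorizes."""
    return [x + y for x, y in zip(b, c)]


def recurrence_scalar(a, b):
    """a[i] = a[i-1] + b[i], executed strictly in order (the intended result)."""
    a = list(a)
    for i in range(1, len(a)):
        a[i] = a[i - 1] + b[i]
    return a


def recurrence_naive_vector(a, b):
    """The same statement, but every element is computed from the OLD a at once."""
    old = list(a)
    return [old[0]] + [old[i - 1] + b[i] for i in range(1, len(old))]


a = [1, 0, 0, 0]
b = [0, 1, 1, 1]
in_order = recurrence_scalar(a, b)          # [1, 2, 3, 4]
all_at_once = recurrence_naive_vector(a, b)  # [1, 2, 1, 1]
```

The mismatch between the two results is why a vectorizing compiler must analyse dependences before converting a loop.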

Vector processors and array processors form the basic building blocks of some of the earliest and most successful supercomputers. Vector and array processing techniques are used extensively by applications such as ocean mapping, 3D modeling, molecular modeling, weather forecasting, and wind tunnel simulations. The Airbus A380 project used NEC SX-5 supercomputers, which are built on a scalable vector processor architecture, to run simulations and fine-tune the design even before the aircraft's maiden flight.

Past Trends

The earliest array processors were used to operate on matrix-like data. The CU would load all the ALUs with a common instruction. The ALUs would get their data inputs from an array of memory locations containing different values from the matrix. This concept of using a separate ALU for each data element, with all ALUs performing the same operation, is classified as Single-Instruction-Multiple-Data (SIMD) under Flynn's Taxonomy.
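The SIMD arrangement described above can be modelled in a few lines of Python. The class names `ProcessingElement` and `ControlUnit` are assumptions made for this sketch, and the loop in `broadcast` stands in for what real hardware would do in lockstep.

```python
import operator


class ProcessingElement:
    """One ALU holding its own pair of operands from the matrix."""

    def __init__(self, x, y):
        self.x, self.y = x, y
        self.result = None

    def execute(self, op):
        self.result = op(self.x, self.y)


class ControlUnit:
    """Issues a single instruction that every PE applies to its own data."""

    def __init__(self, pes):
        self.pes = pes

    def broadcast(self, op):
        # Conceptually simultaneous: same instruction, different data.
        for pe in self.pes:
            pe.execute(op)
        return [pe.result for pe in self.pes]


row_a = [1, 2, 3, 4]
row_b = [10, 20, 30, 40]
cu = ControlUnit([ProcessingElement(x, y) for x, y in zip(row_a, row_b)])
sums = cu.broadcast(operator.add)      # [11, 22, 33, 44]
products = cu.broadcast(operator.mul)  # [10, 40, 90, 160]
```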

The first implementation of a vector-processing-based computer system was the CDC STAR-100. It was developed by the Control Data Corporation (CDC) in the early 1970s and was designed for a peak rate of 100 million floating-point operations per second (MFLOPS). The CDC STAR-100 combined scalar and vector computations. Although it could approach its peak rate when fully loaded, its performance on real-life data sets was much lower.

The first system to fully exploit the vector processing architecture was the Cray-1. The Cray-1, developed by Cray Research (founded by Seymour Cray after he left CDC), was able to overcome some of the pitfalls encountered during the STAR-100 project. The STAR-100 took a lot of time decoding vector instructions and also had to re-fetch data from memory every time an instruction asked for it. The Cray-1 moved away from the memory-memory architecture and introduced a set of CPU registers that held frequently used data and buffered successive instructions, which kept its pipelined functional units supplied with work. This enabled the Cray-1 to work on more flexible data sets and also improved its instruction fetch and decode times. However, the registers introduced a limit on vector sizes and also made the system expensive. Even with these drawbacks, the Cray-1 managed to perform at 80 MFLOPS.

Figure: Functional organization of Cray-1
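The trade-off between the memory-memory and register-based designs can be illustrated with a rough word-traffic count for d = (a + b) * c. This is a simplified model under stated assumptions, not a description of either machine's actual behaviour: every operand access is counted as one word, and the 64-element register length follows the Cray-1's vector registers. The function names are hypothetical.

```python
VREG_LEN = 64  # elements per vector register (the Cray-1 value)


def memory_to_memory_traffic(n):
    """Words moved when each instruction reads and writes memory directly."""
    add = 2 * n + n  # read a and b, write a temporary
    mul = 2 * n + n  # re-read the temporary, read c, write d
    return add + mul


def register_traffic(n, vreg_len=VREG_LEN):
    """Words moved when intermediate results stay in vector registers.

    The vectors must be cut into strips no longer than one register,
    which is the limit on vector size mentioned above.
    """
    words = strips = 0
    for start in range(0, n, vreg_len):
        length = min(vreg_len, n - start)
        words += 3 * length  # load strips of a, b and c
        words += length      # store the strip of d
        strips += 1
    return words, strips


n = 1000
mm_words = memory_to_memory_traffic(n)
reg_words, reg_strips = register_traffic(n)
```

For 1000-element vectors the model counts 6000 words of memory traffic for the memory-memory style and 4000 for the register style, at the cost of splitting the work into 16 strips.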

Several supercomputers based on vector processing principles followed the Cray-1. Companies such as Fujitsu with the VP100 and VP200, Hitachi with the S810, and NEC with the SX series entered the fray. Floating Point Systems (FPS) came up with the AP-120B, an array coprocessor for minicomputers that exclusively performed floating-point operations. It made the conventional minicomputer faster for floating-point operations at a lower cost.

Shared communication registers and reconfigurable vector registers are special features of these vector supercomputers. Japanese computers are strong in high-speed hardware and interactive vectorizing compilers. Subsequent NEC supercomputers had the following architectural features:

- four sets of vector pipelines per processor (2 add/shift and 2 multiply/logic pipelines)

- a scalar unit employing RISC architecture

- support for instruction re-ordering to achieve a higher degree of parallelism

- interleaved main memory

- a maximum of 4 configurable I/O processors to accommodate a 1 GBps data transfer rate per I/O processor (up to 256 channels provided for high-speed network, graphics and peripheral operations)
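Interleaved main memory, one of the features listed above, spreads consecutive addresses across banks so that a vector load can keep many banks busy at once. A minimal sketch follows, assuming 8 banks and simple low-order interleaving. Both are assumptions for illustration and are not taken from any NEC specification.

```python
from collections import Counter

NUM_BANKS = 8  # assumed number of memory banks


def bank_of(address, num_banks=NUM_BANKS):
    """Low-order interleaving: consecutive addresses fall in consecutive banks."""
    return address % num_banks


def banks_touched(start, stride, count, num_banks=NUM_BANKS):
    """Count how many of `count` strided accesses land in each bank."""
    return Counter(bank_of(start + i * stride, num_banks) for i in range(count))


unit_stride = banks_touched(start=0, stride=1, count=16)
bad_stride = banks_touched(start=0, stride=8, count=16)
```

With unit stride the 16 accesses are spread evenly, two per bank. With a stride equal to the number of banks, all 16 accesses hit bank 0 and must be served one after another.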

With advances in technology, computers based on vector and array processing models kept getting faster, owing to faster clock speeds and faster switching gates. As integration levels continue to increase, it becomes more feasible and attractive to put some processing power on the same die as memory circuits. The amount of bandwidth inside a memory chip is astounding, but very little of it makes it out to the pins. When processors are put on the same die as memory circuits, there will be a premium on die area (which favors simple logic), heat dissipation (simple logic again), and bandwidth exploitation (which favors ILP). Vectors are the natural solution.

Emerging Trends

Unlike in the past, vector architectures are now being targeted at environments other than supercomputing. Multiple Single-Instruction-Multiple-Data (MSIMD) is an emerging concept that makes use of both scalar and vector architectures. An MSIMD system consists of several PEs, like any other vector architecture, groups of which may be collectively controlled by a single control unit. The number of PEs under each CU is decided dynamically; this assignment is called partitioning. Partitioning is performed by the parallel program running on the system.

Figure: MSIMD System Models
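One way to picture partitioning is a function that divides a pool of PEs among control units according to how much work each has. The proportional policy, the `partition` function and the CU names below are all hypothetical. The text says only that the parallel program decides the split dynamically, so this is one possible sketch rather than the scheme any real MSIMD system uses.

```python
def partition(num_pes, demands):
    """Split `num_pes` PEs among CUs in proportion to each CU's demand.

    `demands` maps a CU name to a positive amount of pending work.
    """
    total = sum(demands.values())
    shares = {cu: (num_pes * d) // total for cu, d in demands.items()}
    leftover = num_pes - sum(shares.values())
    # Hand any PEs lost to rounding to the CUs with the largest demand.
    for cu in sorted(demands, key=demands.get, reverse=True)[:leftover]:
        shares[cu] += 1
    return shares


uneven = partition(16, {"CU0": 3, "CU1": 1})
even = partition(16, {"CU0": 1, "CU1": 1, "CU2": 1})
```

Calling `partition` again with new demands re-divides the same PEs, which is the sense in which the configuration can change as the program runs.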

Cell Architecture

An example of a commercial system that uses the above architecture is the Cell architecture, which was originally envisioned by Sony for the PlayStation 3. The architecture as it exists today is the work of three companies: Sony, Toshiba and IBM. Cell is touted as relatively general-purpose, and it departs markedly from conventional commercial microprocessor designs in that its architecture is closer to that of multiprocessor vector supercomputers.

Cell is an architecture for high-performance distributed computing. It comprises hardware Cells and software Cells. Software Cells consist of data and programs; they are sent out to the hardware Cells, where they are computed, and the results are then returned. The Cell hardware consists of a Power Processing Element (PPE) and several Synergistic Processing Elements (SPEs). The PPE is a conventional microprocessor core that sets up tasks for the SPEs. In a Cell-based system, the PPE runs the operating system and most of the applications, but the computationally intensive parts of the OS and the applications are offloaded to the SPEs.

An SPE is a self-contained vector processor. Like the PPE, the SPEs are in-order processors with no out-of-order capabilities (i.e., instruction re-ordering is not supported). This means that, as with the PPE, the compiler is very important. The SPEs do, however, have 128 registers, which gives the compiler plenty of room to unroll loops and perform other relevant optimizations.

Figure: Cell processor architecture

Stream Processing: A big difference between Cell and normal CPUs is the ability of the SPEs in a Cell to be chained together to act as a stream processor. A stream processor takes data and processes it in a series of steps. A Cell processor can be set up to perform streaming operations in a sequence, with one or more SPEs working on each step. To do stream processing, an SPE reads data from an input into its local store, performs its processing step, and then stores the result back into its local store. The second SPE reads the output from the first SPE's local store, processes it, and stores the result in its own output area.

Streaming is useful in the context of vector processing because it is possible to stream vector results into vector operands and execute multiple dependent vector operations in parallel. Stream processing does not generally require large memory bandwidth, but Cell has it anyway. In addition, the internal interconnect system allows multiple communication streams between SPEs simultaneously, so they don't hold each other up. The SPEs are dedicated high-speed vector processors with their own memory, so they don't need to share anything other than main memory (and not even that if the data fits in the local stores). In addition, because there are multiple SPEs, the computational capability is potentially quite large.
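The chaining of SPEs described above can be sketched as a sequence of stages, each reading the previous stage's output into its own local store. The `SPE` class and `run_stream` function here are illustrative assumptions and not part of any Cell SDK. The sketch also runs the stages one after another, whereas real SPEs would work on different blocks at the same time.

```python
class SPE:
    """A stand-in for one SPE: a processing step plus a private local store."""

    def __init__(self, name, step):
        self.name = name
        self.step = step
        self.local_store = []

    def process(self, block):
        # Read the input block, apply this stage's step, keep the result locally.
        self.local_store = [self.step(x) for x in block]
        return self.local_store


def run_stream(spes, blocks):
    """Push each data block through the chain of SPEs in order."""
    outputs = []
    for block in blocks:
        data = block
        for spe in spes:
            data = spe.process(data)  # the next SPE reads the previous output
        outputs.append(data)
    return outputs


chain = [
    SPE("scale", lambda x: 2 * x),
    SPE("offset", lambda x: x + 1),
    SPE("square", lambda x: x * x),
]
results = run_stream(chain, [[1, 2], [3, 4]])  # [[9, 25], [49, 81]]
```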

The Earth Simulator (http://www.es.jamstec.go.jp/index.en.html), until recently listed among the top 500 supercomputers (http://www.top500.org), is another example of an MSIMD implementation.

The MSIMD architecture introduces the concept of scalable vector lengths and hence makes a vector parallel processing system configurable based on application requirements. This ushers in a new era of general-purpose supercomputers that will be inexpensive and more versatile. It is demonstrated best by the Sony PlayStation 3, which is based on the Cell processor.

Links

- http://www.freepatentsonline.com/5991531.html

- http://www.freepatentsonline.com/4725973.html

- http://sim.sagepub.com/cgi/reprint/47/3/93

Bibliography

- Parallel computer architecture : a hardware/software approach / David E. Culler, Jaswinder Pal Singh, with Anoop Gupta. ISBN:1558603433

- Computer architecture : a quantitative approach / John L. Hennessy, David A. Patterson ; with contributions by Andrea C. Arpaci-Dusseau. ISBN:0123704901

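- Lecture notes by Professor David A. Patterson: http://www.cs.berkeley.edu/~pattrsn/252S98/Lec07-vector.pdf

- Lecture notes by Professor David E. Culler: http://www.cs.berkeley.edu/~culler/cs252-s02/slides/Lec20-vector.pdf

- Cray-1 architecture: http://en.wikipedia.org/wiki/Cray-1

- Vector processors: http://en.wikipedia.org/wiki/Vector_processor

- 'Single processor-pool MSIMD/MIMD architectures' by Mohamed S. Baig, Tarik A. El-Ghazawi and Nikitas A. Alexandridis: http://ieeexplore.ieee.org/iel2/448/6240/00242709.pdf?tp=&isnumber=&arnumber=242709

- Cray-1 Computer System: http://delivery.acm.org/10.1145/360000/359336/p63-russell.pdf?key1=359336&key2=9292309811&coll=GUIDE&dl=GUIDE&CFID=34093939&CFTOKEN=44395131

- Cell Architecture: http://www.blachford.info/computer/Cell/Cell1_v2.html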

- Advanced Computer Architecture - Parallelism, Scalability, Programmability / Kai Hwang ISBN:007053070