CSC/ECE 506 Fall 2007/wiki1 5 1008: Difference between revisions

No edit summary |

|||

| (10 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

== Section 1.1.4 Supercomputers == | == Section 1.1.4 Supercomputers == | ||

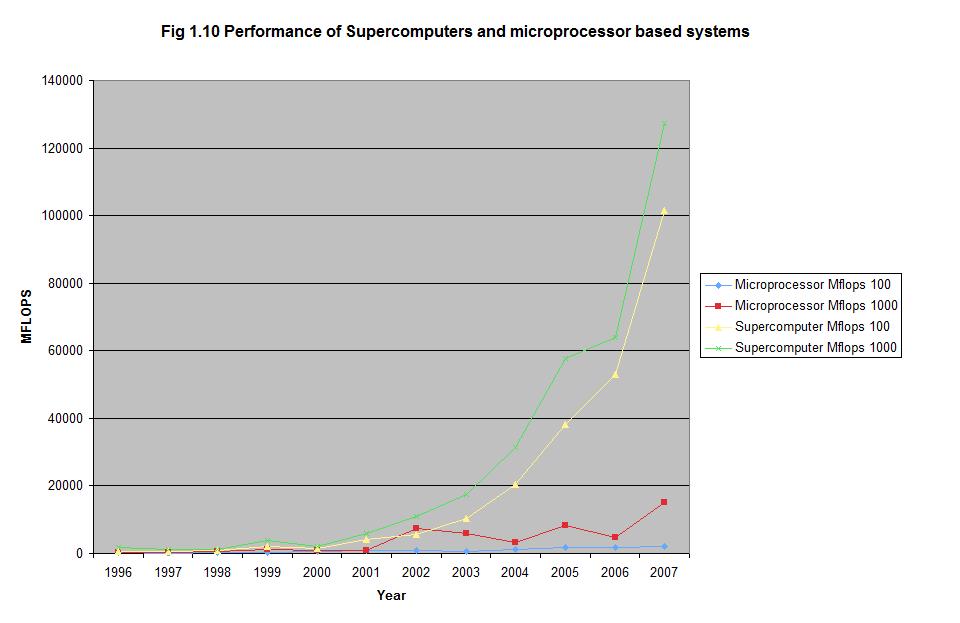

Supercomputing has become a very important part of many fields. These include industry, scientific, defense, and academia. Over the last 10 years there has been a noticeable shift from the vector processing supercomputers and the massively parallel processing (MPP) model | Supercomputing has become a very important part of many fields. These include industry, scientific, defense, and academia. Over the last 10 years, there has been a noticeable shift from the vector processing supercomputers and the massively parallel processing (MPP) model toward the cluster. The main reason for the shift is cost. A vector processor is a specialty piece of equipment, and companies must "tool up" in order to produce such a processor. With the MPP, a company uses a specialty processor that is produced in greater numbers but is still expensive. With the cluster, a developer can produce a very fast and reliable system with common off the shelf technology, or COTS for short. Over the last 10 years, the trend for the Top500 has shown that these cluster systems are becoming more popular and faster than their specialty counterparts. | ||

== Benchmarks == | == Benchmarks == | ||

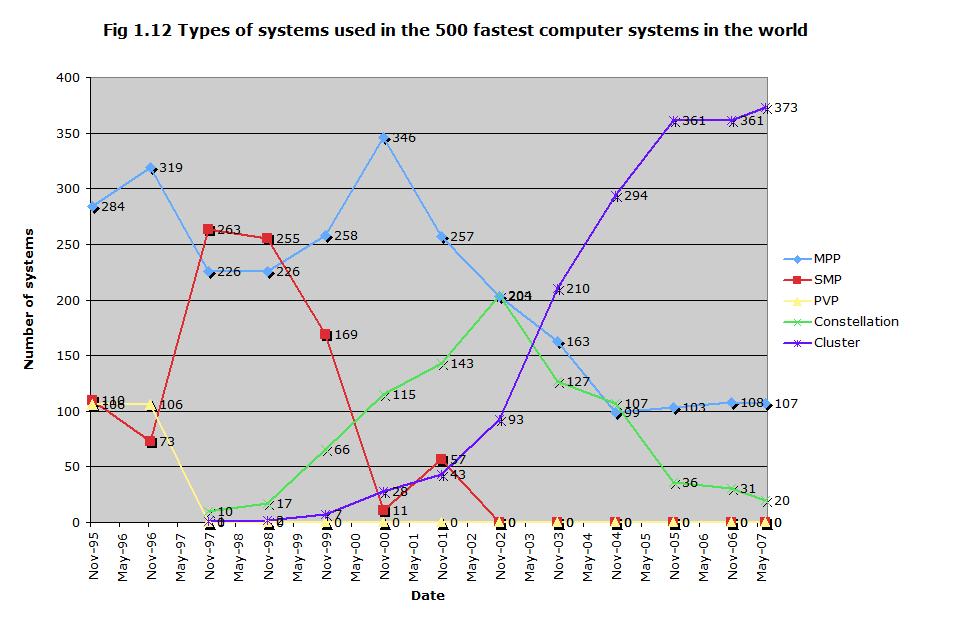

Up until recently the LINPACK benchmark has been the favored method of benchmarking a supercomputing system. This benchmark works by solving dense systems of linear equations (top500.org). This benchmark only tests one segment of the performance of the computing system, but since it has been around for so long it gives a good historical reference (Culler and Singh). The Linpack measures the amount of Floating point Operations Per Second or FLOPS a system can produce. It was written to perform best on vector processor based systems. In recent years the new LAPACK benchmark has gained favor. It is written to more efficiently run on today’s architectures (Wikipedia). This benchmark uses linear equations, least squares of linear systems, eigenvalue problems, and Housholder transforms to benchmark the system (Wikipedia). However, the LINPACK is still used to benchmark systems historical reference. The graph below shows a representative sample of supercomputers as compared to | Up until recently, the LINPACK benchmark has been the favored method of benchmarking a supercomputing system. This benchmark works by solving dense systems of linear equations (top500.org). This benchmark only tests one segment of the performance of the computing system, but since it has been around for so long, it gives a good historical reference (Culler and Singh). The Linpack measures the amount of Floating point Operations Per Second, or FLOPS, a system can produce. It was written to perform best on vector processor based systems. In recent years, the new LAPACK benchmark has gained favor. It is written to more efficiently run on today’s architectures (Wikipedia). This benchmark uses linear equations, least squares of linear systems, eigenvalue problems, and Housholder transforms to benchmark the system (Wikipedia). However, the LINPACK is still used to benchmark systems for historical reference. The graph below shows a representative sample of supercomputers as compared to microprocessors. 
The graph was constructed using information off of top500.org and netlib.org. | ||

'''LINPACK'''<br> | '''LINPACK'''<br> | ||

http://en.wikipedia.org/wiki/LAPACK<br> | http://en.wikipedia.org/wiki/LAPACK<br> | ||

http://www.netlib.org/linpack/<br> | |||

'''LAPACK'''<br> | '''LAPACK'''<br> | ||

http://en.wikipedia.org/wiki/LAPACK<br> | http://en.wikipedia.org/wiki/LAPACK<br> | ||

http://www.netlib.org/lapack/<br> | |||

Below the graph you find a list of systems used. | Below the graph you find a list of systems used. | ||

[[Image:Fig1.10.jpg]] | [[Image:Fig1.10.jpg]] | ||

'''NOTE:''' The Microprocessors listed are not generally the same as in the supercomputing system.<br> | <br>'''NOTE:''' The Microprocessors listed are not generally the same as in the supercomputing system. Below is the list of system and processors used in the sample.<br> | ||

'''year /Supercomputer /Microprocessor'''<br> | '''year /Supercomputer /Microprocessor'''<br> | ||

| Line 30: | Line 33: | ||

2007 /Cray Red Storm /NEC SC 811<br> | 2007 /Cray Red Storm /NEC SC 811<br> | ||

<br>The graph above shows the trend of supercomputing power measured against individual processing power. The processors and suercomputing systems are chosen at random and are used as a sample. Looking at the graph, you can see that both lines are trending upward. If you were to take the | <br>The graph above shows the trend of supercomputing power measured against individual processing power. The processors and suercomputing systems are chosen at random and are used as a sample. Looking at the graph, you can see that both lines are trending upward. If you were to take the individual processor out of a supercomputing system and do a comparison against one of the individual processors listed, you would see that the individual processor is actually more powerful. | ||

== Current Trend == | == Current Trend == | ||

The trend in supercomputing is going in the direction of the cluster. These can be broken up into two types with a very small difference. Clustered computing involves many small systems usually with 1 processor, which are linked together with some sort of high speed network interface. This is generally gigabit ethernet or infiniband. A good example of a cluster supercomputer would be the NCSA Dell PowerEdge 1955 cluster that was built. There is also the constellation. This involves taking smaller systems with 2 or more processors and linking them together with some sort of high speed interface. Again this can be either gigabit ethernet or infiniband. An example of the constellation system would be the Telecom Italia HP Superdome875 HyperPlex. The trend from 1997 until the current year on the Top500 has started to overwhelmingly favor the cluster. The reason for the preference is the cost involved. Figure 1.12 clearly shows the trend in the industry and is based on the current information from the Top500 site. http://www.top500.org/lists/2007/06 | |||

[[Image:Fig1.12 5 1008.jpg]] | [[Image:Fig1.12 5 1008.jpg]] | ||

== Cluster Computing == | == Cluster Computing == | ||

Cluster computing is very prominent in the current list of the Top500. The trend over the last ten years has moved away from a specialized compute system to a more off the shelf, cheaper, cluster system. These systems are very cost effective and give a lot more bang for the buck than a specialized vector processor or MPP based system. These systems come in several flavors all with slight differences. http://en.wikipedia.org/wiki/Computer_cluster<br> | |||

'''HA'''<br> | |||

A high availability (HA) cluster is used in cases where services need almost 100% uptime. Banking services would be a good candidate for an HA custer. HA's are not considered parallel systems.<br> | |||

'''Load Balanceing'''<br> | |||

A load balance system is used in cases when a service needs to balanced out over many systems. These systems are often known as a server farm. Web sites often use load balancing in order to handle the vast amount of traffic generated on the web.<br> | |||

'''HPC'''<br> | |||

A high performance computing (HPC) cluster does involve parallelism and could be considered in the class of supercomputers because of its nature. The HPC cluster often uses off the shelf systems running linux and the Beowulf clustering software. These systems are used to solve complex mathematics problems often found in physics and metorology. HPC clusters are often a favorite at universities because of the relatively low cost.<br> | |||

'''Grid'''<br> | |||

Grid computing does not fall into any class of parallel computing because each system on the grid often works on separate tasks independent of one another. The grid is often seen as a utility that many different users can use at the same time to accomplish different, mutually exclusive tasks.<br> | |||

==Credit== | |||

http://en.wikipedia.org/wiki/LAPACK<br> | http://en.wikipedia.org/wiki/LAPACK<br> | ||

http://en.wikipedia.org/wiki/LINPACK<br> | http://en.wikipedia.org/wiki/LINPACK<br> | ||

http://www.top500.org/project/linpack<br> | http://www.top500.org/project/linpack<br> | ||

http://en.wikipedia.org/wiki/Computer_cluster<br> | |||

http://www.topix.net/tech/hpc<br> | |||

http://www.netlib.org<br> | http://www.netlib.org<br> | ||

http://www.netlib.org/utk/people/JackDongarra/faq-linpack.html<br> | http://www.netlib.org/utk/people/JackDongarra/faq-linpack.html<br> | ||

David E. Culler, Jaswinder Pal Singh, and Anoop Gupta, "Parallel Computer Architecture" 1999 Morgan Kaufmann Publishers Inc. | David E. Culler, Jaswinder Pal Singh, and Anoop Gupta, "Parallel Computer Architecture" 1999 Morgan Kaufmann Publishers Inc. | ||

Latest revision as of 00:14, 10 September 2007

Section 1.1.4 Supercomputers

Supercomputing has become a very important part of many fields, including industry, science, defense, and academia. Over the last 10 years, there has been a noticeable shift away from vector processing supercomputers and the massively parallel processing (MPP) model toward the cluster. The main reason for the shift is cost. A vector processor is a specialty piece of equipment, and companies must "tool up" in order to produce one. An MPP uses a specialty processor that is produced in greater numbers but is still expensive. With a cluster, a developer can build a very fast and reliable system from commercial off-the-shelf (COTS) technology. Over the last 10 years, the Top500 lists have shown these cluster systems becoming more popular and faster than their specialty counterparts.

Benchmarks

Until recently, the LINPACK benchmark was the favored method of benchmarking a supercomputing system. The benchmark works by solving a dense system of linear equations (top500.org). It tests only one aspect of a system's performance, but because it has been around for so long, it gives a good historical reference (Culler and Singh). LINPACK measures the number of floating-point operations per second (FLOPS) a system can sustain. It was written to perform best on vector-processor-based systems. In recent years, the newer LAPACK library has gained favor. It is written to run more efficiently on today's architectures (Wikipedia). It covers systems of linear equations, linear least-squares problems, eigenvalue problems, and Householder transformations (Wikipedia). However, LINPACK is still used to benchmark systems for historical reference. The graph below shows a representative sample of supercomputers compared with microprocessors. The graph was constructed using information from top500.org and netlib.org.

LINPACK

http://en.wikipedia.org/wiki/LINPACK

http://www.netlib.org/linpack/

LAPACK

http://en.wikipedia.org/wiki/LAPACK

http://www.netlib.org/lapack/
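To make the measurement concrete, the following is a minimal sketch of a LINPACK-style timing run, not the actual benchmark code. It solves a random dense system with Gaussian elimination and partial pivoting, then divides the operation count conventionally credited to an n x n solve (2/3 n^3 + 2 n^2) by the elapsed time. The function names (`solve_dense`, `nominal_flops`, `measure_flops`) and the random test matrix are assumptions made for illustration, and pure Python will report rates far below what tuned numerical libraries achieve.

```python
import random
import time


def solve_dense(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on copies so the caller's data is left untouched.
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: move the largest entry of column k onto the diagonal.
        pivot = max(range(k, n), key=lambda i: abs(a[i][k]))
        if a[pivot][k] == 0.0:
            raise ValueError("matrix is singular")
        a[k], a[pivot] = a[pivot], a[k]
        b[k], b[pivot] = b[pivot], b[k]
        # Eliminate column k from every row below the pivot row.
        for i in range(k + 1, n):
            factor = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= factor * a[k][j]
            b[i] -= factor * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (b[i] - s) / a[i][i]
    return x


def nominal_flops(n):
    """Operation count conventionally credited to an n x n dense solve."""
    return (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2


def measure_flops(n, seed=0):
    """Time one random n x n solve; return (rate in FLOPS, max residual)."""
    rng = random.Random(seed)  # hypothetical test data, not a standard input set
    a = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = time.perf_counter() - start
    # The residual checks that the timed solve actually produced a solution.
    residual = max(
        abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n)
    )
    return nominal_flops(n) / elapsed, residual
```

Calling `measure_flops(200)` returns a rate and a residual. The rate depends entirely on the machine and interpreter, so only the residual is meaningful as a correctness check.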

[[Image:Fig1.10.jpg]]

NOTE: The microprocessors listed are generally not the ones used in the supercomputing systems. The systems and processors in the sample are listed below.

Year / Supercomputer / Microprocessor

1996 / Cray T94 / DEC 8200

1997 / Cray T3e900 / IBM Power2/990 71.5 MHz

1998 / Cray T3e1200 / MIPS R10000

1999 / ASCI Blue / IBM Power3

2000 / SP Power3 375 / Alpha EV5

2001 / Alpha Server SC45 / Fujitsu vpp800

2002 / MCR Linux Cluster / NEC

2003 / VT AppleG5 Cluster / Cray X1 800 MHz

2004 / Eserver Bladecenter Cluster / IBM Power4 1.5 GHz

2005 / Poweredge1850 Cluster / AMD x86_64

2006 / Nova Scale 5160 / Intel Xeon 3.8 GHz

2007 / Cray Red Storm / NEC SC 811

The graph above shows the trend of supercomputing power measured against the power of individual processors. The processors and supercomputing systems were chosen at random and serve as a sample. Looking at the graph, you can see that both lines trend upward. If you were to take a single processor out of one of the supercomputing systems and compare it against one of the standalone processors listed, you would see that the standalone processor is actually more powerful.

Current Trend

The trend in supercomputing is toward the cluster. Cluster-style systems can be divided into two types that differ only slightly. A cluster links many small systems, usually with one processor each, through a high-speed network interconnect, generally Gigabit Ethernet or InfiniBand. A good example is the NCSA Dell PowerEdge 1955 cluster. A constellation links smaller systems that each have two or more processors, again through Gigabit Ethernet or InfiniBand. An example is the Telecom Italia HP Superdome875 HyperPlex. From 1997 to the current year, the Top500 has come to overwhelmingly favor the cluster, mainly because of cost. Figure 1.12 shows this trend and is based on the current information from the Top500 site. http://www.top500.org/lists/2007/06

[[Image:Fig1.12 5 1008.jpg]]

Cluster Computing

Cluster computing is very prominent in the current Top500 list. Over the last ten years, the trend has moved away from specialized computing systems toward cheaper, off-the-shelf cluster systems. These systems are very cost effective and deliver far more performance per dollar than a specialized vector processor or MPP-based system. Clusters come in several flavors, each with slight differences. http://en.wikipedia.org/wiki/Computer_cluster

HA

A high availability (HA) cluster is used where services need almost 100% uptime. Banking services would be a good candidate for an HA cluster. HA clusters are not considered parallel systems.
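As a rough illustration of what "almost 100% uptime" means in practice, the sketch below converts an availability figure into a yearly downtime budget and shows how redundant nodes raise availability. The specific targets, the 365-day year, and the assumption that nodes fail independently are all illustrative choices, not figures from the sources cited here.

```python
HOURS_PER_YEAR = 365 * 24  # non-leap year, assumed for illustration


def downtime_hours_per_year(availability):
    """Hours of downtime per year allowed by an availability fraction."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * HOURS_PER_YEAR


def redundant_availability(node_availability, nodes):
    """Availability of a service that stays up while at least one of
    `nodes` redundant nodes is up, assuming independent failures."""
    if nodes < 1:
        raise ValueError("at least one node is required")
    return 1.0 - (1.0 - node_availability) ** nodes
```

Under these assumptions, a service that is available 99.9% of the time may be down about 8.76 hours per year, and pairing two nodes that are each 99% available yields roughly 99.99%.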

Load Balancing

A load-balancing system is used when a service needs to be balanced across many systems. Such a group of systems is often known as a server farm. Web sites often use load balancing to handle the vast amount of traffic generated on the web.
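One simple way to spread requests over a server farm is round-robin dispatch, sketched below. This is only one possible policy, chosen here for illustration; the class name `RoundRobinBalancer` and the server names in the usage note are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._servers = list(servers)
        self._rotation = cycle(self._servers)
        # Per-server request counts, kept to show how evenly load is spread.
        self.handled = {server: 0 for server in self._servers}

    def route(self, request):
        """Return the server chosen for this request."""
        server = next(self._rotation)
        self.handled[server] += 1
        return server
```

For example, a balancer built with `RoundRobinBalancer(["web1", "web2", "web3"])` sends successive requests to web1, web2, web3, and then back to web1. Round-robin ignores how busy each server is, so real server farms often weight the choice by load or health.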

HPC

A high performance computing (HPC) cluster does involve parallelism and, by its nature, could be considered a supercomputer. An HPC cluster often uses off-the-shelf systems running Linux and the Beowulf clustering software. These systems are used to solve complex mathematical problems often found in physics and meteorology. HPC clusters are a favorite at universities because of their relatively low cost.
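The kind of parallelism an HPC cluster exploits can be sketched by splitting one numerical problem into independent pieces and combining the partial results. The example below estimates pi by integrating 4 / (1 + x^2) over [0, 1] with the midpoint rule. Threads in a single process stand in for cluster nodes here, which is an assumption made to keep the sketch self-contained; a real cluster would distribute the pieces across machines with a message-passing layer, and Python threads show the decomposition rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(start, stop, steps):
    """Midpoint-rule contribution of slices start..stop-1 to the
    integral of 4 / (1 + x^2) over [0, 1], which equals pi."""
    width = 1.0 / steps
    total = 0.0
    for i in range(start, stop):
        x = (i + 0.5) * width
        total += 4.0 / (1.0 + x * x)
    return total * width


def estimate_pi(steps, workers):
    """Give each worker one contiguous block of slices and add the results."""
    # Block boundaries: worker w handles slices bounds[w] .. bounds[w + 1] - 1.
    bounds = [steps * w // workers for w in range(workers + 1)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(partial_sum, bounds[:-1], bounds[1:], [steps] * workers)
        return sum(parts)
```

Because the blocks share no data, the only communication is the final sum, which is why problems of this shape map well onto a cluster.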

Grid

Grid computing does not fall into any class of parallel computing because each system on the grid often works on a separate task, independent of the others. The grid is often seen as a utility that many different users can use at the same time to accomplish different, unrelated tasks.

Credit

http://en.wikipedia.org/wiki/LAPACK

http://en.wikipedia.org/wiki/LINPACK

http://www.top500.org/project/linpack

http://en.wikipedia.org/wiki/Computer_cluster

http://www.topix.net/tech/hpc

http://www.netlib.org

http://www.netlib.org/utk/people/JackDongarra/faq-linpack.html

David E. Culler, Jaswinder Pal Singh, and Anoop Gupta, "Parallel Computer Architecture," Morgan Kaufmann Publishers Inc., 1999.