CSC/ECE 506 Spring 2010/ch1 lm: Difference between revisions

| (42 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

A '''supercomputer''' is a computer that is most advanted computer of current processing capacity, particularly speed of calculation. Supercomputers were introduced in the 1960s and were designed primarily at Control Data Corporation (CDC), and led the market into the 1970s. | |||

Cray then took over the supercomputer market with his new designs, holding the top spot in supercomputing for five years (1985–1990). In the 1980s a large number of smaller competitors entered the market, in parallel to the creation of the minicomputer market a decade earlier, but many of these disappeared in the mid-1990s "supercomputer market crash." | |||

Today, supercomputers are typically one-of-a-kind custom designs produced by "traditional" companies such as | Today, supercomputers are typically one-of-a-kind custom designs produced by "traditional" companies such as Cray, IBM, and Hewlett-Packard, who purchased many of the 1980s companies to gain their experience. As of 7/2009, the Cray Jaguar is the fastest supercomputer in the world. | ||

Supercomputers are used for highly calculation-intensive tasks such as problems involving quantum physics, weather forecasting, climate research, computational chemistry|molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), physical simulations (such as simulation of airplanes in wind tunnels, simulation of the detonation of nuclear weapons, and research into nuclear fusion). | |||

==Timeline of supercomputers== | ==Timeline of supercomputers== | ||

This is a list of the record-holders for fastest general-purpose supercomputer in the world, and the year each one set the record. | This is a list of the record-holders for fastest general-purpose supercomputer in the world, and the year each one set the record. | ||

Here we just list entries after 1993. The list reflects the Top500 listing [http://www.top500.org/sublist Directory page for Top500 lists. Result for each list since June 1993], and the "Peak speed" is given as the "Rmax" rating. | |||

{| class="wikitable" border = "1" | {| class="wikitable" border = "1" | ||

! Year !! Supercomputer !! FLOPS|Peak speed<br>(Rmax) !! Location | ! Year !! Supercomputer !! FLOPS|Peak speed<br>(Rmax) !! Location | ||

|- | |- | ||

|rowspan="3"|1993 | |rowspan="3"|1993 | ||

| Line 190: | Line 75: | ||

|- | |- | ||

|rowspan="2" |2008 | |rowspan="2" |2008 | ||

|rowspan="2" |IBM | |rowspan="2" |IBM |Roadrunner | ||

|align=right|1.026 PFLOPS | |align=right|1.026 PFLOPS | ||

|rowspan="2" |Los Alamos National Laboratory|DoE-Los Alamos National Laboratory, New Mexico, United States|USA | |rowspan="2" |Los Alamos National Laboratory|DoE-Los Alamos National Laboratory, New Mexico, United States|USA | ||

| Line 206: | Line 91: | ||

---- | ---- | ||

==Architecture== | |||

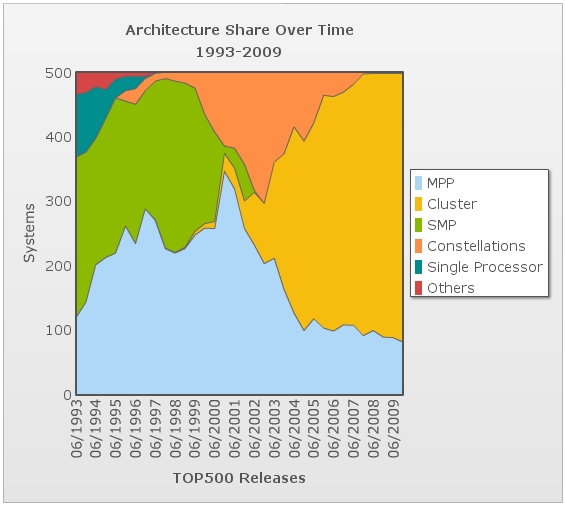

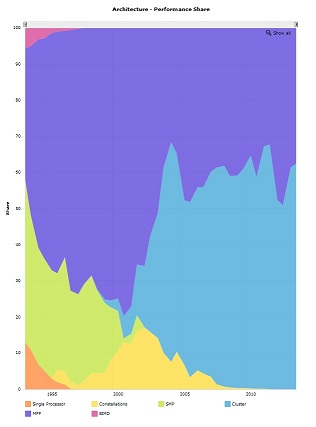

Over the years, we see the changes in supercomputer architecture. Various architectures were developed and abandoned, as computer technology progressed. | |||

In the early '90s, single processors were still common in the supercomputer arena. However, two other architectures played more important roles. One was Massive Parallel Processing (MPP), which is a computer system with many independent arithmetic units or entire microprocessors, that run in parallel. The other is Symmetric Multiprocessing (SMP), a good representative of the earliest styles of multiprocessor machine architectures. The existence of these two architectures met two of the supercomputer's key needs: parallelism and high performance. | |||

As time passed by, more units were available. In the early 2000s, constellation computing was widely used, and MPP reached its peak percentage. | |||

With the rise of cluster computing, the supercomputer world was transformed. In 2009, cluster computing accounted for 83.4% of the architectures in the Top 500. A cluster computer is a group of linked computers, working together closely so that in many respects they form a single computer. Compared to a single computer, clusters are deployed to improve performance and/or availability, while being more cost-effective than single computers of comparable speed or availability. Cluster computers offer a high-performance computing alternative over SMP and massively parallel computing systems. Using redundancy, cluster architectures also aim to provide reliability. | |||

From the analysis above, we can see that supercomputers are highly related to technological change, and actively motivated by it. | |||

[[Image:architecture_system.jpg]] [[Image:architecture_performance.jpg]] | |||

==Processors== | ==Processors== | ||

| Line 211: | Line 110: | ||

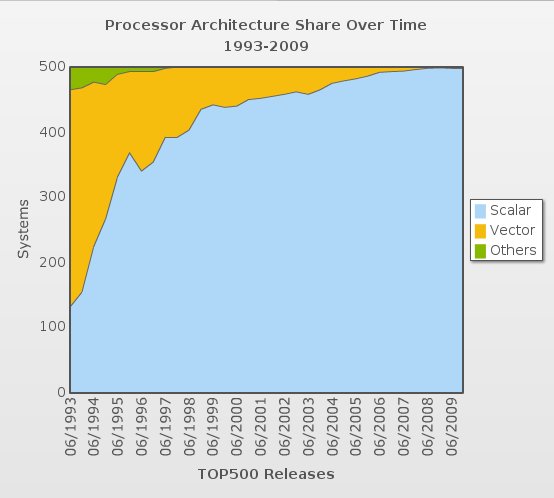

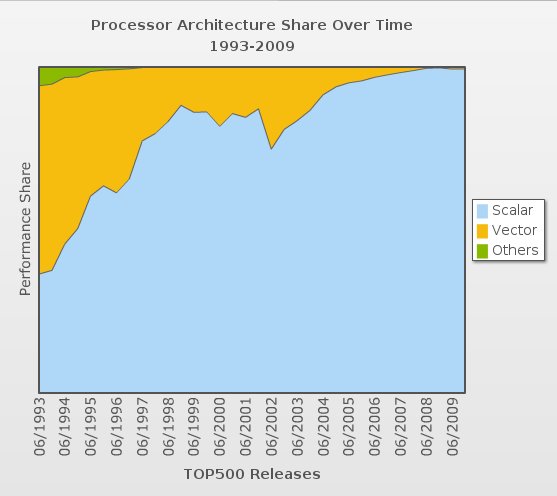

=== Processor Architecture === | === Processor Architecture === | ||

Looking at the following two figures from TOP500 | Looking at the following two figures from the TOP500 Web site, we can see an obvious trend toward scalar processor architecture encroaching on vector architecture. Processor architecture has moved from basic pipeline and RISC models, to vector processors, and then to superscalar processors. | ||

[[Image:Processor_Architecture.jpg]][[Image:Performance_Archi_Share.jpg]] | [[Image:Processor_Architecture.jpg]] [[Image:Performance_Archi_Share.jpg]] | ||

=== Processor Family === | === Processor Family === | ||

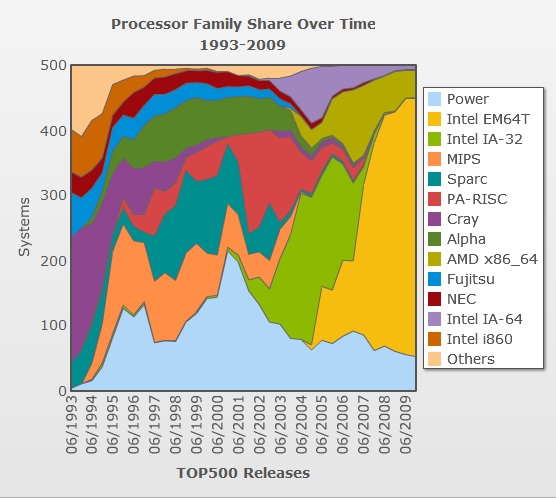

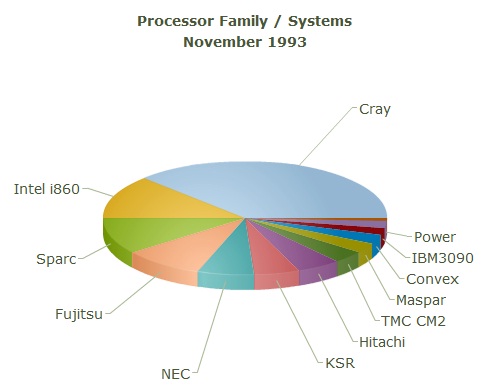

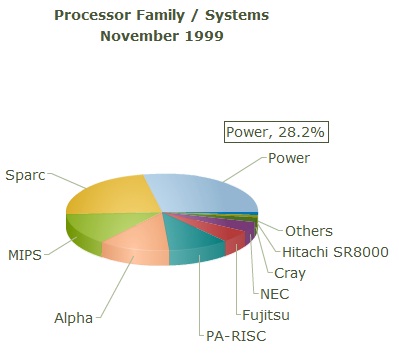

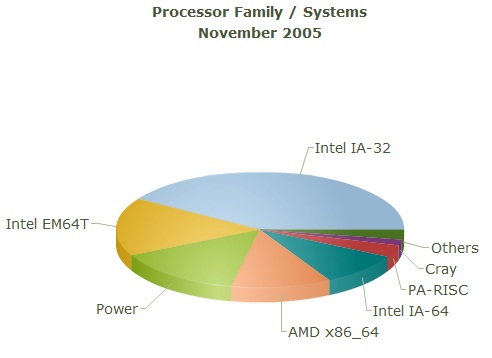

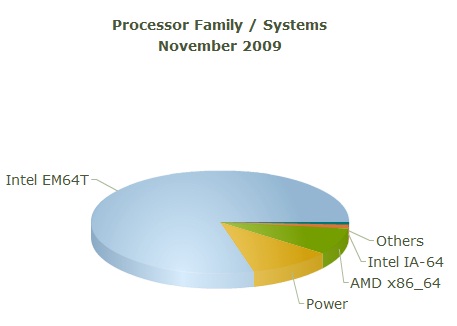

It is quite clear that supercomputers are evolving with the major changes in the commercial market. Time has seen processor upgrades as Intel/AMD release their new products. In 11/2009, Intel EM64T, AMD X86_64 and Power comprise 98% of all the processors used in the top 500 supercomputers. | |||

[[Image:Processor_Family.jpg]] | |||

<br>Here are four charts comparing the evolution from 1993 to 2009. First are 1993 and 1999 distributions:<br> | |||

[[Image:1993_PF.jpg]][[Image:1999_PF.jpg]] | |||

<br>Then come 2005 and 2009:<br> | |||

[[Image:2005_PF.jpg]][[Image:2009_PF.jpg]]<br> | |||

From these four charts, you can see that processor family depends heavily on the vendors and shipping in that time period. Processor families of supercomputers reflect technological progress in the past twenty years. Newly built supercomputers will always adopt new technology. As we have said before, supercomputers are a fluid field, and today's "supercomputer" turns out to be tomorrow's "minicomputer". | |||

=== Number of | === Number of processors === | ||

Through advances in Moore's Law, the frequency and number of processors grows exponentially, as both multiprocessing and cluster computing becomes cost-effective. Supercomputer trends are likely to yield a collection of computers with multiprocessing that are highly interconnected via a high-speed network or switching fabric. There will be a great increase in the number of processors. | |||

==Operating Systems== | ==Operating Systems== | ||

| Line 225: | Line 133: | ||

=== Operating Systems Family === | === Operating Systems Family === | ||

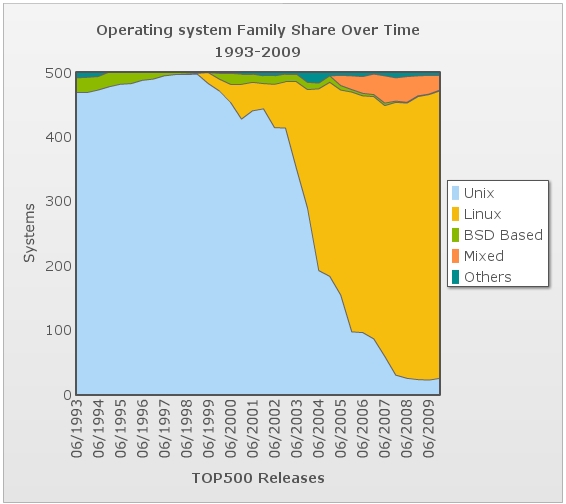

Supercomputer use various of operating systems. The operating system of | Supercomputer use various of operating systems. The operating system of s specific supercomputer depends on its vendor. Until the early-to-mid-1980s, supercomputers usually sacrificed instruction-set compatibility and code portability for performance (processing and memory access speed). For the most part, supercomputers at this time (unlike high-end mainframes) had vastly different operating systems. The Cray-1 alone had at least six different proprietary OSs largely unknown to the general computing community. In a similar manner, there existed different and incompatible vectorizing and parallelizing compilers for Fortran. This trend would have continued with the ETA-10 were it not for the initial instruction set compatibility between the Cray-1 and the Cray X-MP, and the adoption of computer systems such as Cray's Unicos, or Linux. | ||

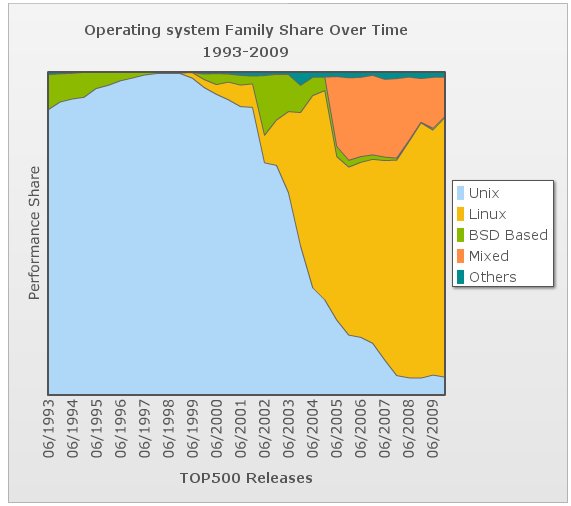

From the statistics | From the Top 500 statistics, before the 21st century almost all the OSs fell into the Unix family, while after year 2000 more and more Linux versions were adopted for supercomputers. In the 2009/11 list, 446 out of 500 supercomputers at the top were using their own distribution of Linux. When we list the OS for each of the top 20 supercomputers, the result for Linux is very impressive:<br><b>18 of the top 20 supercomputers in the world are running some form of Linux.</b><br> | ||

And if you just look at the top 10, | And if you just look at the top 10, **all** of them use Linux. Looking at the list, it becomes clear that prominent supercomputer vendors such as Cray, IBM and SGI have wholeheartedly embraced Linux. In a few cases Linux coexists with a lightweight kernel running on the compute nodes (the part of the supercomputer that performs the actual calculations), but often even these lightweight kernels are based on Linux. Cray, for example, has a modified version of Linux they call CNL (Compute Node Linux). | ||

====IBM AIX==== | ====IBM AIX==== | ||

| Line 235: | Line 143: | ||

====Linux Family==== | ====Linux Family==== | ||

SuSE Linux Enterprise Server Family<br> | ''SuSE Linux Enterprise Server Family''<br> | ||

SLES has been developed based on SUSE Linux. It was first released on 31 October 2000 as a version for IBM S/390 mainframe machines. In December 2000, the first enterprise client (Telia) was made public. In April 2001, the first SLES for x86 was released. SLES version 9 was released in August 2004; SUSE Linux Enterprise Server 10 was released in July 2006; SUSE Linux Enterprise Server 11 was released on March 24, 2009. All of them are supported by the major hardware vendors—IBM, HP, Sun Microsystems, Dell, SGI, Lenovo, and Fujitsu Siemens Computers.<br> | SLES has been developed based on SUSE Linux. It was first released on 31 October 2000 as a version for IBM S/390 mainframe machines. In December 2000, the first enterprise client (Telia) was made public. In April 2001, the first SLES for x86 was released. SLES version 9 was released in August 2004; SUSE Linux Enterprise Server 10 was released in July 2006; SUSE Linux Enterprise Server 11 was released on March 24, 2009. All of them are supported by the major hardware vendors—IBM, HP, Sun Microsystems, Dell, SGI, Lenovo, and Fujitsu Siemens Computers.<br> | ||

Redhat Enterprise/CentOS<br> | ''Redhat Enterprise/CentOS''<br> | ||

Redhat Enterprise along with CentOS are adopted in some vendors' platform. Red Hat Enterprise Linux (RHEL) is a Linux distribution produced by Red Hat and targeted toward the commercial market, including mainframes. CentOS is a community-supported, free and open source operating system based on Red Hat Enterprise Linux. | Redhat Enterprise along with CentOS are adopted in some vendors' platform. Red Hat Enterprise Linux (RHEL) is a Linux distribution produced by Red Hat and targeted toward the commercial market, including mainframes. CentOS is a community-supported, free and open source operating system based on Red Hat Enterprise Linux.<br> | ||

====UNICOS==== | ====UNICOS==== | ||

UNICOS is the name of a range of Unix-like operating system variants developed by Cray for its supercomputers. UNICOS is the successor of the Cray Operating System (COS). It provides network clustering and source code compatibility layers for some other Unixes. UNICOS was originally introduced in 1985 with the Cray-2 system and later ported to other Cray models. The original UNICOS was based on UNIX System V Release 2, and had numerous BSD features (e.g., networking and file system enhancements) added to it. | UNICOS is the name of a range of Unix-like operating system variants developed by Cray for its supercomputers. UNICOS is the successor of the Cray Operating System (COS). It provides network clustering and source code compatibility layers for some other Unixes. UNICOS was originally introduced in 1985 with the Cray-2 system and later ported to other Cray models. The original UNICOS was based on UNIX System V Release 2, and had numerous BSD features (e.g., networking and file system enhancements) added to it. | ||

UNICOS dominated on supercomputer in 1993 in the sense that 188 out of 500 supercomputers then were running UNICOS. Of course one of the reason is that Cray Inc. was the largest supercomputer vendor at that time(40% supercomputers were from Cray Inc.). As more and more other companies entered the market UNICOS's partition dropped with its share of hardware market. After 2000, Cray began to use linux and even Windows HPC to run on their machine and at the same time UNICOS is walking out of supercomputer. | |||

UNICOS dominated on supercomputer in 1993 in the sense that 188 out of 500 supercomputers then were running UNICOS. Of course one of the reason is that Cray Inc. was the largest supercomputer vendor at that time(40% supercomputers were from Cray Inc.). As more and more other companies | |||

====Solaris==== | ====Solaris==== | ||

Solaris appeared when Sun Microsystems began to ship their supercomputer to the market. Technically, Solaris is one of the most powerful operating sytems, sometimes much more secure and efficient than Linux distributions and unix systems. But | Solaris appeared when Sun Microsystems began to ship their supercomputer to the market. Technically, Solaris is one of the most powerful operating sytems, sometimes much more secure and efficient than Linux distributions and unix systems. But Solaris disappears as Sun Microsystems leaves the market now. | ||

====Windows HPC 2008==== | ====Windows HPC 2008==== | ||

Windows HPC Server 2008, released by Microsoft in September 2008, is the successor product to Windows Compute Cluster Server 2003. | Windows HPC Server 2008, released by Microsoft in September 2008, is the successor product to Windows Compute Cluster Server 2003. Windows HPC Server 2008 is designed for high-end applications that require high performance computing clusters. This version of the server is claimed to efficiently scale to thousands of cores. It includes features unique to HPC workloads: a new high-speed NetworkDirect RDMA, highly efficient and scalable cluster management tools, a service-oriented architecture (SOA) job scheduler, and cluster interoperability through standards such as the High Performance Computing Basic Profile (HPCBP) specification produced by the Open Grid Forum (OGF). | ||

Here is the distribution of OS on supercomputers from 1993 to 2009:<br> | |||

[[Image:os_system.jpg]] [[Image:os_performance.jpg]] | [[Image:os_system.jpg]] [[Image:os_performance.jpg]] | ||

=== Operating Systems | === Operating Systems Trends -- Why Linux?=== | ||

IBM | AIX was the operating system for IBM own mainframe, but IBM is a strong proponent for Linux for years now. When IBM started its Blue Gene series of supercomputers back in 2002 it chose Linux as its operating system. The following quote from Bill Pulleyblank of IBM Research nicely sums up why IBM and many other vendors have chosen Linux: | ||

<blockquote>We chose Linux because it’s open and because we believed it could be extended to run a computer the size of Blue Gene. We saw considerable advantage in using an operating system supported by the open-source community, so that we can get their input and feedback.</blockquote> | <blockquote>We chose Linux because it’s open and because we believed it could be extended to run a computer the size of Blue Gene. We saw considerable advantage in using an operating system supported by the open-source community, so that we can get their input and feedback.</blockquote> | ||

In short, it looks like Linux has conquered the supercomputer market almost completely. | In short, it looks like Linux has conquered the supercomputer market almost completely. Linux outguns popular Unix operating systems like AIX and Solaris from Sun Microsystems because those systems contain features that make them great for commercial users but add a lot of system overhead that ends up limiting overall performance. Here comes one example: a "virtualization" feature in AIX lets many applications share the same processor but just hammers performance. | ||

Linux outguns popular Unix operating systems like AIX and Solaris from Sun Microsystems because those systems contain features that make them great for commercial users but add a lot of system overhead that ends up limiting overall performance. | |||

Linus Torvalds says that Linux has caught on in part because while typical Unix versions run on only one or two hardware architectures, Linux runs on more than 20 different hardware architectures including machines based on Intel microprocessors as well as RISC-based computers from IBM and HP. Linux is easy to get, has no licensing costs, has all the infrastructure in place, and runs on pretty much every single relevant piece of hardware out there. | Linus Torvalds says that Linux has caught on in part because while typical Unix versions run on only one or two hardware architectures, Linux runs on more than 20 different hardware architectures including machines based on Intel microprocessors as well as RISC-based computers from IBM and HP. Linux is easy to get, has no licensing costs, has all the infrastructure in place, and runs on pretty much every single relevant piece of hardware out there. | ||

== | ==Segments== | ||

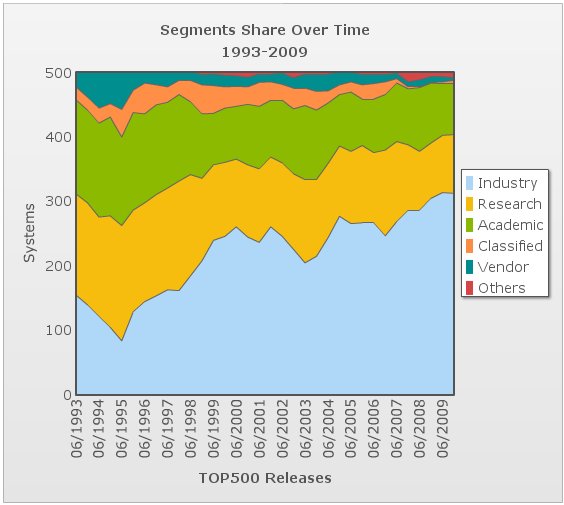

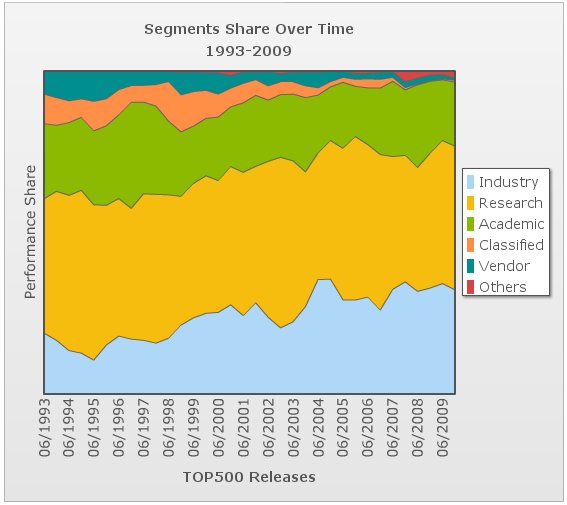

There is also something interesting if you have a look at the segments section of Top500 data. Through all these years, industry and research are all time the two main segment shares. They two end up two balanced performance shares while industry has a much larger system share. This implies research area does own the most high-end devices which is also a main source of technology development. | |||

[[Image:segments_system.jpg]] [[Image:segments_performance.jpg]] | |||

==References== | ==References== | ||

Latest revision as of 22:26, 2 February 2010

A supercomputer is a computer that is among the most advanced of its time in processing capacity, particularly speed of calculation. Supercomputers were introduced in the 1960s and were designed primarily by Seymour Cray at Control Data Corporation (CDC), which led the market into the 1970s.

Cray then took over the supercomputer market with his new designs, holding the top spot in supercomputing for five years (1985–1990). In the 1980s a large number of smaller competitors entered the market, in parallel to the creation of the minicomputer market a decade earlier, but many of these disappeared in the mid-1990s "supercomputer market crash."

Today, supercomputers are typically one-of-a-kind custom designs produced by "traditional" companies such as Cray, IBM, and Hewlett-Packard, which purchased many of the 1980s companies to gain their experience. As of November 2009, the Cray Jaguar is the fastest supercomputer in the world.

Supercomputers are used for highly calculation-intensive tasks such as problems involving quantum physics, weather forecasting, climate research, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulation of airplanes in wind tunnels, simulation of the detonation of nuclear weapons, and research into nuclear fusion).

Timeline of supercomputers

This is a list of the record-holders for fastest general-purpose supercomputer in the world, and the year each one set the record. Only entries from 1993 onward are listed. The list reflects the Top500 listing (see the directory page for Top500 lists at http://www.top500.org/sublist, which gives the result for each list since June 1993), and the "Peak speed" column gives the "Rmax" rating, that is, the maximal LINPACK performance achieved.

| Year | Supercomputer | Peak speed (Rmax) | Location |
|---|---|---|---|
| 1993 | CM-5/1024 | 59.7 GFLOPS | DoE-Los Alamos National Laboratory; National Security Agency |
| 1993 | Fujitsu Numerical Wind Tunnel | 124.50 GFLOPS | National Aerospace Laboratory, Tokyo, Japan |
| 1993 | Paragon XP/S 140 | 143.40 GFLOPS | DoE-Sandia National Laboratories, New Mexico, USA |
| 1994 | Fujitsu Numerical Wind Tunnel | 170.40 GFLOPS | National Aerospace Laboratory, Tokyo, Japan |
| 1996 | Hitachi SR2201/1024 | 220.4 GFLOPS | University of Tokyo, Japan |
| 1996 | Hitachi/Tsukuba CP-PACS/2048 | 368.2 GFLOPS | Center for Computational Physics, University of Tsukuba, Tsukuba, Japan |
| 1997 | Intel ASCI Red/9152 | 1.338 TFLOPS | DoE-Sandia National Laboratories, New Mexico, USA |
| 1999 | Intel ASCI Red/9632 | 2.3796 TFLOPS | DoE-Sandia National Laboratories, New Mexico, USA |
| 2000 | IBM ASCI White | 7.226 TFLOPS | DoE-Lawrence Livermore National Laboratory, California, USA |
| 2002 | NEC Earth Simulator | 35.86 TFLOPS | Earth Simulator Center, Yokohama, Japan |
| 2004 | IBM Blue Gene/L | 70.72 TFLOPS | DoE/IBM Rochester, Minnesota, USA |
| 2005 | IBM Blue Gene/L | 136.8 TFLOPS | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2005 | IBM Blue Gene/L | 280.6 TFLOPS | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2007 | IBM Blue Gene/L | 478.2 TFLOPS | DoE/U.S. National Nuclear Security Administration, Lawrence Livermore National Laboratory, California, USA |
| 2008 | IBM Roadrunner | 1.026 PFLOPS | DoE-Los Alamos National Laboratory, New Mexico, USA |
| 2008 | IBM Roadrunner | 1.105 PFLOPS | DoE-Los Alamos National Laboratory, New Mexico, USA |
| 2009 | Jaguar | 1.759 PFLOPS | DoE-Oak Ridge National Laboratory, Tennessee, USA |
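To put the timeline in perspective, the first and last Rmax values in the list (59.7 GFLOPS for the CM-5/1024 in 1993 and 1.759 PFLOPS for Jaguar in 2009) can be turned into an average growth rate. The following is only a rough sketch: it assumes smooth exponential growth between the two endpoints, which the real record progression does not follow exactly, and the function name `growth_summary` is made up for this illustration.

```python
import math

# Endpoints taken from the timeline: (year, Rmax expressed in GFLOPS).
first_year, first_gflops = 1993, 59.7      # CM-5/1024
last_year, last_gflops = 2009, 1.759e6     # Jaguar: 1.759 PFLOPS = 1.759e6 GFLOPS


def growth_summary(y0, r0, y1, r1):
    """Return (total factor, average annual factor, doubling time in years).

    Assumes a constant exponential growth rate between the two endpoints.
    """
    years = y1 - y0
    total = r1 / r0
    annual = total ** (1.0 / years)
    doubling = math.log(2) / math.log(annual)
    return total, annual, doubling


total, annual, doubling = growth_summary(
    first_year, first_gflops, last_year, last_gflops
)
print(f"total speedup: {total:,.0f}x")
print(f"average annual factor: {annual:.2f}x")
print(f"doubling time: {doubling:.2f} years")
```

Under that assumption, the record Rmax grew by a factor of roughly 29,000 over sixteen years, which works out to a little under a doubling every year.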

Architecture

Supercomputer architecture has changed considerably over the years. Various architectures were developed and abandoned as computer technology progressed.

In the early '90s, single-processor systems were still common in the supercomputer arena. However, two other architectures played more important roles. One was Massively Parallel Processing (MPP), in which a computer system has many independent arithmetic units or entire microprocessors that run in parallel. The other was Symmetric Multiprocessing (SMP), a good representative of the earliest styles of multiprocessor machine architecture. Together, these two architectures met two of the supercomputer's key needs: parallelism and high performance.

As time passed, more options became available. In the early 2000s, constellation computing was widely used, and MPP reached its peak share of the Top 500.

With the rise of cluster computing, the supercomputer world was transformed. In 2009, cluster computing accounted for 83.4% of the architectures in the Top 500. A cluster computer is a group of linked computers, working together closely so that in many respects they form a single computer. Clusters are deployed to improve performance and/or availability over that of a single computer, while typically being more cost-effective than single computers of comparable speed or availability. Cluster computers offer a high-performance computing alternative to SMP and massively parallel computing systems. By using redundancy, cluster architectures also aim to provide reliability.

From the analysis above, we can see that supercomputer architecture closely tracks technological change and is actively driven by it.

Processors

Processor Architecture

Looking at the following two figures from the TOP500 Web site, we can see an obvious trend toward scalar processor architecture encroaching on vector architecture. Processor architecture has moved from basic pipeline and RISC models, to vector processors, and then to superscalar processors.

Processor Family

It is quite clear that supercomputers evolve along with the major changes in the commercial market. Processors in supercomputers have been upgraded as Intel and AMD released new products. In the November 2009 list, Intel EM64T, AMD x86_64, and Power made up 98% of all the processors used in the top 500 supercomputers.

Here are four charts comparing the evolution from 1993 to 2009. First are 1993 and 1999 distributions:

Then come 2005 and 2009:

From these four charts, you can see that the processor family depends heavily on which vendors were shipping products in that time period. The processor families of supercomputers reflect technological progress over the past twenty years. Newly built supercomputers almost always adopt new technology. Supercomputing is a fluid field, and today's "supercomputer" turns out to be tomorrow's "minicomputer".

Number of processors

In line with Moore's Law, processor frequency and the number of processors have grown exponentially, as both multiprocessing and cluster computing have become cost-effective. Supercomputer trends are likely to yield collections of multiprocessing computers that are highly interconnected via a high-speed network or switching fabric. The number of processors can be expected to increase greatly.

Operating Systems

Operating Systems Family

Supercomputers use a variety of operating systems. The operating system of a specific supercomputer depends on its vendor. Until the early-to-mid-1980s, supercomputers usually sacrificed instruction-set compatibility and code portability for performance (processing and memory access speed). For the most part, supercomputers at this time (unlike high-end mainframes) had vastly different operating systems. The Cray-1 alone had at least six different proprietary OSs largely unknown to the general computing community. In a similar manner, there existed different and incompatible vectorizing and parallelizing compilers for Fortran. This trend would have continued with the ETA-10 were it not for the initial instruction set compatibility between the Cray-1 and the Cray X-MP, and the adoption of operating systems such as Cray's Unicos, or Linux.

According to the Top 500 statistics, before the 21st century almost all the OSs fell into the Unix family, while after 2000 more and more Linux versions were adopted for supercomputers. In the November 2009 list, 446 of the 500 listed supercomputers were running some distribution of Linux. When we list the OS for each of the top 20 supercomputers, the result for Linux is very impressive:

18 of the top 20 supercomputers in the world are running some form of Linux.

And if you just look at the top 10, **all** of them use Linux. Looking at the list, it becomes clear that prominent supercomputer vendors such as Cray, IBM and SGI have wholeheartedly embraced Linux. In a few cases Linux coexists with a lightweight kernel running on the compute nodes (the part of the supercomputer that performs the actual calculations), but often even these lightweight kernels are based on Linux. Cray, for example, has a modified version of Linux they call CNL (Compute Node Linux).
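The counts quoted above translate directly into percentage shares. The short sketch below uses only the figures given in this section (446 of 500, 18 of 20, and all of the top 10); the helper name `share` is an illustrative choice, not something taken from the Top 500 site.

```python
# Counts quoted in this section for the November 2009 Top 500 list.
linux_systems = 446   # systems in the full list running Linux
list_size = 500
linux_top20 = 18      # Linux systems among the top 20
linux_top10 = 10      # Linux systems among the top 10


def share(count, total):
    """Percentage share, rounded to one decimal place."""
    return round(100.0 * count / total, 1)


shares = {
    "top 500": share(linux_systems, list_size),
    "top 20": share(linux_top20, 20),
    "top 10": share(linux_top10, 10),
}
for scope, pct in shares.items():
    print(f"Linux share of the {scope}: {pct}%")
```

The share is close to 90% across the whole list and among the top 20, so Linux's dominance is not limited to either the largest or the smallest systems on the list.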

IBM AIX

AIX (Advanced Interactive eXecutive) is the name given to a series of proprietary operating systems sold by IBM for several of its computer system platforms, based on UNIX System V with 4.3BSD-compatible command and programming interface extensions. AIX runs on 32-bit or 64-bit IBM POWER or PowerPC CPUs (depending on version) and can address up to 32 terabytes (TB) of random access memory. The JFS2 file system—first introduced by IBM as part of AIX—allows computer files and partitions over 4 petabytes in size.

Linux Family

SuSE Linux Enterprise Server Family

SLES has been developed based on SUSE Linux. It was first released on 31 October 2000 as a version for IBM S/390 mainframe machines. In December 2000, the first enterprise client (Telia) was made public. In April 2001, the first SLES for x86 was released. SLES version 9 was released in August 2004; SUSE Linux Enterprise Server 10 was released in July 2006; SUSE Linux Enterprise Server 11 was released on March 24, 2009. All of them are supported by the major hardware vendors—IBM, HP, Sun Microsystems, Dell, SGI, Lenovo, and Fujitsu Siemens Computers.

Redhat Enterprise/CentOS

Red Hat Enterprise Linux and CentOS have been adopted on some vendors' platforms. Red Hat Enterprise Linux (RHEL) is a Linux distribution produced by Red Hat and targeted toward the commercial market, including mainframes. CentOS is a community-supported, free and open source operating system based on Red Hat Enterprise Linux.

UNICOS

UNICOS is the name of a range of Unix-like operating system variants developed by Cray for its supercomputers. UNICOS is the successor of the Cray Operating System (COS). It provides network clustering and source code compatibility layers for some other Unixes. UNICOS was originally introduced in 1985 with the Cray-2 system and later ported to other Cray models. The original UNICOS was based on UNIX System V Release 2, and had numerous BSD features (e.g., networking and file system enhancements) added to it.

UNICOS dominated supercomputing in 1993, in the sense that 188 of the 500 listed supercomputers were running it. One reason is that Cray was the largest supercomputer vendor at that time (40% of the listed supercomputers came from Cray). As more and more companies entered the market, UNICOS's share dropped along with Cray's share of the hardware market. After 2000, Cray began to run Linux and even Windows HPC on its machines, and UNICOS gradually disappeared from the supercomputer field.

Solaris

Solaris appeared on the list when Sun Microsystems began to ship its supercomputers to the market. Technically, Solaris is a very capable operating system, and in some respects it is regarded as more secure and efficient than Linux distributions and other Unix systems. However, Solaris has been disappearing from the list as Sun Microsystems leaves the market.

Windows HPC 2008

Windows HPC Server 2008, released by Microsoft in September 2008, is the successor product to Windows Compute Cluster Server 2003. Windows HPC Server 2008 is designed for high-end applications that require high performance computing clusters. This version of the server is claimed to efficiently scale to thousands of cores. It includes features unique to HPC workloads: a new high-speed NetworkDirect RDMA, highly efficient and scalable cluster management tools, a service-oriented architecture (SOA) job scheduler, and cluster interoperability through standards such as the High Performance Computing Basic Profile (HPCBP) specification produced by the Open Grid Forum (OGF).

Here is the distribution of OS on supercomputers from 1993 to 2009:

Operating Systems Trends -- Why Linux?

AIX was the operating system for IBM's own machines, but IBM has been a strong proponent of Linux for years. When IBM started its Blue Gene series of supercomputers back in 2002, it chose Linux as the operating system. The following quote from Bill Pulleyblank of IBM Research nicely sums up why IBM and many other vendors have chosen Linux:

We chose Linux because it’s open and because we believed it could be extended to run a computer the size of Blue Gene. We saw considerable advantage in using an operating system supported by the open-source community, so that we can get their input and feedback.

In short, it looks like Linux has conquered the supercomputer market almost completely.

Linux outguns popular Unix operating systems such as IBM's AIX and Sun Microsystems' Solaris because those systems contain features that make them great for commercial users but add a lot of system overhead that ends up limiting overall performance. For example, a "virtualization" feature in AIX lets many applications share the same processor but severely reduces performance.

Linus Torvalds says that Linux has caught on in part because while typical Unix versions run on only one or two hardware architectures, Linux runs on more than 20 different hardware architectures, including machines based on Intel microprocessors as well as RISC-based computers from IBM and HP. Linux is easy to get, has no licensing costs, has all the infrastructure in place, and runs on pretty much every single relevant piece of hardware out there.

Segments

The segments section of the Top500 data is also interesting. Throughout these years, industry and research have consistently been the two main segments. The two end up with roughly balanced performance shares, while industry has a much larger system share. This implies that the research segment owns most of the high-end machines, and it is also a main source of technology development.