CSC/ECE 517 Fall 2009/wiki1b 11 cc: Difference between revisions

No edit summary |

No edit summary |

||

| (44 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

== '''Static vs. | == '''Static vs. Dynamic Object Oriented Languages from the Perspective of Performance''' == | ||

Object-oriented languages can be analyzed | ===Introduction=== | ||

Object-oriented languages performance can be analyzed by their handling mechanism, in this case the dynamical and statical have significant differences. For example dynamical delays the type decision until run time which is known as '''Type Feedback''' or '''TF''', on the other hand the statical first determines and strongly restricts type usage in compile time which is called '''Type Inference''' or '''TI'''. This page is intended to describe the performance affections using each one of them and deliver performance results. | |||

One parameter to measure | One parameter to measure performance are calls, they tend to slow down the program execution and its performance through the calling overhead that might occur in some instances. In a program that has many calls has very little opportunities to optimize the code, this affects more the dynamically dispatch typed calls. The common way to write a program is by containing as more calls as possible at the source level instead of having them at procedural level since the programming style in object oriented encourages factorizing code into small pieces to obtain fine-grained reuse, this practice makes more difficult the optimization and thus affects the execution performance. | ||

Since the appearance of compilers up to today’s modern compilers are unable or very limited into remove dynamical calls; therefore dynamic object oriented programs usually exhibit a higher calling frequency than static procedural programs. The frequent calls, combined with the scarce opportunities for code optimizations, can lead to poor run-time performance. | |||

===Calls versus Re-factorization=== | |||

A good and common behavior when writing a program is to re-factorize the code in order to make it more modular and understandable by dividing the code in to subtasks or subroutines, this approach provides better organization, specialized subroutines and better documentation rather to have all the code application in a single function, however this leads to create more calls that affect the execution performance, specially if a call carries not only the parameters arguments but also the entire object or even worst the complete object environment (e.g. ruby) which will slow down the execution on each call. | |||

Besides that the task to eliminate dynamical calls or to reduce them up to an optimum allowed level by statically binding or in-lining them is not as easy as it might seen, even for the statically-typed languages, the programmer must have very deep knowledge in the code execution process and its application in order to optimize calls. | |||

For example the following C++ code : | |||

MathematicalObject* obj; | |||

... | ... | ||

obj-> | obj->Exponential(0, 0); | ||

One can think that this type declaration the a compiler can thus statically bind the “'''Exponential'''” call, however it cannot because the compiler does not know the object class and therefore it cannot know whether “'''Any_1::Exponential'''” or “'''Any_2::Exponential'''” will be invoked. | |||

The compiler must know, by explicit declarations in a statically typed language, the type of every object anywhere that the program execution will use. Henceforth the programmer has restrictions on what the compiler is able to provide, in the end it is only extra information, because the compiler does not known if the program is still safe to be executed, for example an infinite loop that might get the program stuck in an overflow call executions, the compiler is unable to know this in advance (just set a simple warning) leaving this cases out of the optimization opportunities. | |||

There are things that can be done in statically typed language and some other that can be done in dynamical typed language, the important trade off here will be from the performance point of view, for instance lets define each one of them and after that make the principal differences before jumping to the performance benchmarking. | |||

---- | ---- | ||

===Type Inference === | ===Type Inference === | ||

Static Object-Oriented Language '''Type Inference TI''' | |||

In Statically Typed languages require that all variables to be of a specific type, in this way the compiler can ensure that the variable contains only an object compatible with the specified type. In this way the compiler can ensure the calling method and this kind of errors cannot happen in run time. It introduces safety execution, reliable code and introduces efficiency of the results. | |||

However static type are unable to evolve in some circumstances, for example the upper classes restrict the inherited ones to evolve into various forms because the strict type binding concept. | |||

Examples of static languages are '''Eiffel''', '''Java''', '''C#''', '''C++''', '''Visual Basic''', ...... | |||

Eiffel , Java , C# , C++ , Visual Basic | |||

---- | ---- | ||

| Line 29: | Line 42: | ||

===Type Feedback === | ===Type Feedback === | ||

Dynamic Object-Oriented Language | Dynamic Object-Oriented Language '''Type Feedback TF''' | ||

The Dynamic Typed languages don't require variables to be of a specific type, in other words any variable can contain any value or object, this feature makes it more flexible and easy to change on run time, however the programmer must be more careful when a variable is expected to contain certain object, commonly happens that variables have objects other than it was expected, this causes uncertainty when calling a method and it can lead to error at run time. | |||

This kin of error can affect the understanding of messages when the objects are not the expected ones or even worse the messages can be interpreted in other different sense. | |||

Examples of dynamic languages are '''Smalltalk''', '''Ruby''', '''Python''', '''Perl''', ... | |||

Smalltalk , Ruby , Python , Perl | |||

===Principal Comparison Between Static and Dynamic=== | |||

Table 1 summarizes the principal differences between Dynamic and Static Object Oriented languages from the performance point of view taken from [http://www.jvoegele.com/software/langcomp.html Language Comparissons], below are the explanations of each '''Feature''' and its effect, refer to the same link read the complete study. | |||

Table | |||

{| class="wikitable" border="1" | {| class="wikitable" border="1" | ||

| Line 47: | Line 60: | ||

|- | |- | ||

| Generic Classes | | Generic Classes | ||

| No | |||

| Yes | | Yes | ||

|- | |- | ||

| Inheritance | | Inheritance | ||

| | | Both class and object inheritance | ||

| | | Only class inheritance | ||

|- | |- | ||

| Method Overloading | | Method Overloading | ||

| Line 59: | Line 72: | ||

|- | |- | ||

| Higher Order Functions & Lexical Closures | | Higher Order Functions & Lexical Closures | ||

| | | Both High Order and Lexical Closures | ||

| | | Only High Order | ||

|- | |- | ||

| Uniform Access | | Uniform Access | ||

| Line 67: | Line 80: | ||

|- | |- | ||

| Class Variables/Methods | | Class Variables/Methods | ||

| Yes | | Yes and very advanced | ||

| | | Yes, but limited | ||

|- | |- | ||

| Reflection | | Reflection | ||

| Line 75: | Line 88: | ||

|} | |} | ||

Table. Performance comparison of Static and Dynamic. | ''''Table 1. Performance comparison of Static and Dynamic.'''' | ||

====Generic Classes==== | |||

Generic classes refer to those classes that are able to assign proper parameters with specific data types. In static typed languages it permits to keep compile time safe and at the same time to be flexible like dynamically typed languages. For example Java does not have this feature, which is a severe disadvantage compared with other static languages, on the other hand Dynamic typed languages do not need parameterized types in order to support generic programming. In terms of performance the generic classes make the static languages to behave in some sense close to dynamic languages, this is a significant improvement. | |||

====Inheritance==== | |||

Inheritance is the ability to share methods to subclasses or an object can be defined as an extension of another class, most of object oriented languages support class based inheritance, but only a few can support class based and object based inheritance at run time by sharing their code characteristics to another classes or objects | |||

This feature is important; those object oriented languages that have both inheritance have more capability performance. | |||

====Method Overloading==== | |||

Method overloading is when two or more classes have methods with the same name, this is an advantage in some cases, for example a problem arises when calling these methods with the same number and type of arguments from different methods, the solution is to have a different name for each method or relate the method to the direct super classes inheritance. Therefore it is expected to object oriented languages to perform not very well if this kind of errors happens. | |||

====Higher Order Functions & Lexical Closures==== | |||

Higher order functions are those that can be used as data objects, it means that they can be bound to variables and also they can be passed as parameters just like variables, they can also be returned as the result of other functions. | |||

Lexical closures have extra capabilities in the sense that not only passes the functions as a parameter variable, but also it is able to carry the entire environment around with it. | |||

For example Smalltalk and Ruby have both higher order functions and lexical closures, which might be good for expanding their capabilities, however to carry the entire environment with it will certainly slow down the time execution if calls are frequent and it would consume processor resources, henceforth the performance would be affected. | |||

Java and C++ only have high order functions and not lexical closures, however they provide a similar partial support of lexical closures using function objects or "functors". | |||

====Uniform Access==== | |||

The principle behind uniform access is very well explained in [http://en.wikipedia.org/wiki/Object-Oriented_Software_Construction Bertrand Meyer's Object-Oriented Software Construction], it states ''''All services offered by a module should be available through a uniform notation, which does not betray whether they are implemented through storage or through computation.'''' It is expected to improve the performance since the calls would have uniform access. | |||

Java in one hand cannot support uniform access, for example the use of “ece.bar” object is different from using “ece.bar()” if it were a function, for instance if “.bar” is changed then any use of “ece” must also be changed. | |||

On the other hand Ruby supports uniform access principle directly. | |||

====Class Variables/Methods==== | |||

Class variable/method exists when there is only one copy of a class despite having many instances of the same class and it is shared by every instance of the class | |||

Smalltalk and Ruby support the most advanced notion of class variables and methods, because the classes are by themselves objects. | |||

Java and C++ provide "static" members that are basically the same as in dynamic, however they are limited because they cannot be inherited. | |||

====Reflection==== | |||

Reflection is the ability to determine the pieces of information about an object at run-time, the features to determine are the type of the object, its inheritance structure, containing methods, number and types of parameters and return types. | |||

Smalltalk, Ruby and Python are very good and flexible in their reflection mechanisms, on the contrary C++ does not provide reflection, Java supports it but it is not flexible. It is expected to perform better if the reflection information is available, but to know all this in advance might slowdown the execution time | |||

---- | |||

===Benchmarking Between Static and Dynamic=== | |||

So far only the definitions of static and dynamic typed languages and their differences have been given with a little guess on the performance, the truth is that performance depends on more than software structures and definitions, it depends of the framework, processor speed and more, to avoid this problem a benchmarking was conducted in a standard environment. | |||

The following is a study of performance of the same program in C, Java (Both static) and Python, Ruby (both dynamic) and run them on a 300MHz Pentium speed, to see the complete experiment follow the link [http://dan.corlan.net/bench.html Language Bench]. | |||

The standard program is to compute the same 100 polynomial terms 500,000 times by using same algorithm in all programs, the program is intended to tests the access to local vectors and be able to calculate simple arithmetic operations in loops, the advantage is that the program is independent of the different libraries and operating system calls of each object oriented language features. | |||

In this benchmarking the performance is taken as the direct cost in terms of the number of users to achieve a required response time, this cost is directly proportional to computation time, in other words the performance is measured as how quickly operations, loop, access to array elements and function calls last to be completed. | |||

The benchmarking is on C and Java versus Python and Ruby | |||

'''C [http://dan.corlan.net/bench.html#COPT See original code]''' | |||

#include <stdio.h> | |||

#include <stdlib.h> | |||

main(short argc, char **argv) { | |||

float mu = 10.0; | |||

float x,s; | |||

float pu = 0.0; | |||

int su, i, j, n; | |||

float pol[100]; | |||

register tm1; | |||

register int tp1; | |||

n = atol(argv[1]); | |||

x = atof(argv[2]); | |||

for(i=0; i<n; i++) { | |||

tp1=2; | |||

tm1=1/2.0; | |||

for (j=0; j<100; j++) { | |||

pol[j] = mu = (mu + tp1) * tm1; | |||

} | |||

s = 0.0; | |||

for (j=0; j<100; j++) { | |||

s = x*s + pol[j]; | |||

} | |||

pu += s; | |||

} | |||

printf("%f\n",pu); | |||

} | |||

'''Java [http://dan.corlan.net/bench.html#JAVA See original code]''' | |||

public class tpoly { | |||

static public void main(String argv[]) { | |||

float mu = (float)10.0; | |||

float x,s; | |||

float pu = (float)0.0; | |||

int su, i, j, n; | |||

float pol[] = new float[100]; | |||

n = 500000; | |||

x = (float)0.2; | |||

for(i=0; i<n; i++) { | |||

for (j=0; j<100; j++) { | |||

mu = (mu + (float)2.0) / (float)2.0; | |||

pol[j] = mu; | |||

} | |||

s = (float)0.0; | |||

for (j=0; j<100; j++) { | |||

s = x*s + pol[j]; | |||

} | |||

pu += s; | |||

} | |||

System.out.println(pu); | |||

} | |||

} | |||

'''Python [http://dan.corlan.net/bench.html#PYTHON See original code]''' | |||

n = 500000 | |||

x = 0.2 | |||

def t(x): | |||

mu = 10.0 | |||

pu = 0.0 | |||

pol =[0] * 100 | |||

r = range(0,100) | |||

for i in range(0,n): | |||

for j in r: | |||

pol[j] = mu = (mu + 2.0) / 2.0 | |||

su = 0.0 | |||

for j in r: | |||

su = x * su + pol[j] | |||

pu = pu + su | |||

print pu | |||

t(x) | |||

'''Ruby [http://dan.corlan.net/bench.html#RUBY See original code]''' | |||

n = 500000 | |||

x = 0.2 | |||

mu = 10 | |||

pu = 0 | |||

pol = [] | |||

n.times do | |||

0.upto(99) { |j| pol[j] = mu = (mu + 2) * 0.5 } | |||

s = 0 | |||

0.upto(99) { |j| s = x * s + pol[j] } | |||

pu += s | |||

end | |||

print pu,"\n" | |||

Results are listed below, as mentioned above the performance is measured in the seconds needed to perform the simple task. | |||

{| class="wikitable" border="1" | |||

|- | |||

! Language | |||

! Seconds to perform task | |||

|- | |||

| C, using gcc V2.95.4 | |||

| 2.73 | |||

|- | |||

| Java, gcj V3.0 | |||

| 3.03 | |||

|- | |||

| Python V2.1.2 | |||

| 515.04 | |||

|- | |||

| Ruby | |||

| 1074.52 | |||

|} | |||

'''Table 2. Benchmark in Seconds to Perform the Simple Task''' | |||

The conclusion is that the static typed languages have better performance, however the performance in terms on the seconds is disembogues, some may argue correctly that performance must be measured from the programmer point of view when assessing static versus dynamic, for this reason below is a benchmarking from the optimization point of view, but first it is required some rules that will apply for optimization | |||

===Optimization of Static and Dynamic Code and Benchmarking=== | |||

Another benchmarking was found in the attemp to optimize performance, the complete investigation was made and studied in [http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking], in order to accomplish this the programs were divided into two groups. | |||

• “Tiny” and “Small” are a set of very small integer programs, they are included as reference but do not contribute much for the performance point of view | |||

• | • “Large” is a set of big enough application programs that exhibit a variety of object-oriented programming styles. | ||

Table 3 shows the benchmarking and their description taken from [http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking] | |||

[[Image:Example.jpg|600px|]] | |||

'''Table 3. Benchmark Porgrams [http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking]''' | |||

Although tiny and small are good to measure performance they are unlike real application, instead the focus will be on the "Large" set of applications since they are closer and more realistic to the common applications. | |||

====Execution Time: ==== | |||

In this case also the performance was measured in terms of speed, which in the end is the ultimate goal of an optimizing compiler. Figure 1 shows the relative execution time of the benchmarks compiled with TF (dynamic) and TI (static) systems | |||

[[Image:bench_1.jpg|500px|]] | |||

[ | '''Figure 1. Execution Time Benchmarking [http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking]''' | ||

---- | ---- | ||

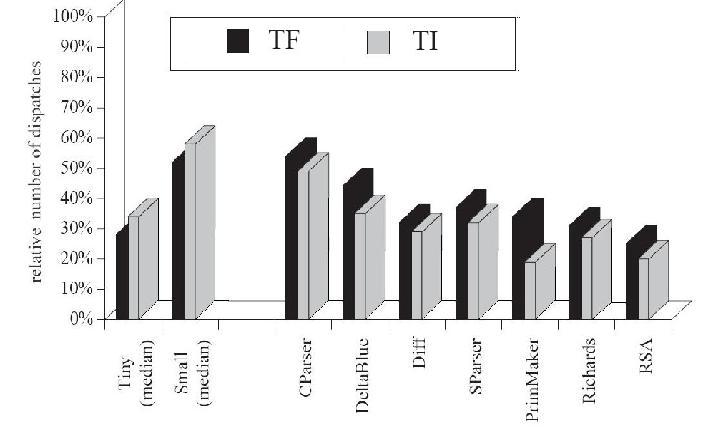

==Number of | ====Number of Dispatches:==== | ||

A dispatch is the proper selection of the correct piece of code to be executed, by measuring the number of dispatches that a program performs, it is possible to determine the performance by the number of creating mono-morphic calls. | |||

In this case Type Inference (TI) can determine the exact receiver class for many sends. | |||

Type | In contrast, Type Feedback (TF) requires a dispatch test because the receiver type always includes the unknown class. | ||

The benchmark results are shown in Figure 2 | |||

[[Image:bench_2.jpg|500px|]] | |||

[ | '''Figure 2. Number of Dispatches Benchmarking [http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking]''' | ||

---- | ---- | ||

References | ==Conclusions:== | ||

---- | 1. The best performance is all about the right tool for the job, neither of static nor dynamic is better 100% of the time, in fact the systems benchmarking presented here were created by man and therefore they have flaws, also the experience or background might change the perception of performance. | ||

Dynamic typing might perform better because it gets rid off of many constrains for a static programmer, but runtime errors can mess the performance expected or what was planned from the programmer. In fact dynamic typing may fix the errors, but it may drive a novice programmer crazy trying to find out the data type between a constant char and integer variable | |||

2. Modern Java can actually compiles down to an inherently dynamic intermediate language, therefore dynamic typing isn't the huge performance-killer that it seems to be, the reasons of moving a static language type to dynamic type is not execution time performance, it should be related as how programming making performance is. | |||

3. If the performance is measured in how the code is reusable and transportable then statically typed systems programming are not very good, the constrains of static makes code less reusable, versus the flexible feature of dynamic typed language. | |||

4. One undeniable advantage of static typed language is their earlier detection of programming mistakes, better documentation and more visible opportunities for optimization, which mainly consist of changing virtual calls by known compiled calls, this clearly increases runtime efficiency, in other words “''well-typed programs cannot go wrong''”, however the static type checking is a compile-time abstraction and hence it is incomplete, which means that execution can still go in a bad way due to the properties that are couldn’t not be tracked. | |||

5. The seeking of making a static typed language less partial and more complete causes the type systems to become very complicated and perhaps more robust than needed, it is true that the performance of static language is very good but it is very rigid, it is like trying to run a marathon with a ball and chain tied to your leg and triumphantly shouting that you nearly made it. | |||

Dynamic languages, on the contrary, are soft and this feature makes them very good for prototyping systems, because it deals with changing or unknown requirements or parameters, dynamic typed languages are indeed indispensable for truly dynamic program behavior like method interception, mobile code, runtime reflection, etc. one undeniable conclusion is that dynamic typed languages enable to the programmer to find errors as early in the development as possible and fix them in place, the interaction with other systems that change dynamically makes them perfect for integration where the static typed code cannot interact in the same way. | |||

==References== | |||

[http://www.jvoegele.com/software/langcomp.html Programming Language Comparison] | |||

[http://stackoverflow.com/questions/125367/dynamic-type-languages-versus-static-type-languages Dynamic type languages versus static type languages] | |||

[http://www.voidspace.org.uk/python/weblog/arch_d7_2008_09_06.shtml Static Typing, Dynamic Language Performance, V8 and Tracing JITs] | |||

[http://codebetter.com/blogs/matthew.podwysocki/archive/2008/05/28/static-versus-dynamic Static versus Dynamic Languages - Attack of the Clones] | |||

[http://eprints.kfupm.edu.sa/36296/1/36296.pdf Optimization Benchmarking] | |||

==See Also== | |||

[http://en.wikipedia.org/wiki/Programming_language Wikipedia.org: Programming language] | |||

[http://en.wikipedia.org/wiki/Type_system Wikipedia.org: Type system] | |||

[http://en.wikipedia.org/wiki/Just-in-time_compilation Wikipedia.org: Just-in-time compilation] | |||

[http://en.wikipedia.org/wiki/Java_performance Wikipedia.org: Java performance] | |||

[http://en.wikipedia.org/wiki/JRuby Wikipedia.org: Ruby on Java] | |||

[http://en.wikipedia.org/wiki/Common_Language_Runtime Wikipedia.org:Common Language Runtime] | |||

---- | ---- | ||

Latest revision as of 04:40, 28 September 2009

Static vs. Dynamic Object Oriented Languages from the Perspective of Performance

Introduction

Object-oriented languages performance can be analyzed by their handling mechanism, in this case the dynamical and statical have significant differences. For example dynamical delays the type decision until run time which is known as Type Feedback or TF, on the other hand the statical first determines and strongly restricts type usage in compile time which is called Type Inference or TI. This page is intended to describe the performance affections using each one of them and deliver performance results.

One parameter to measure performance are calls, they tend to slow down the program execution and its performance through the calling overhead that might occur in some instances. In a program that has many calls has very little opportunities to optimize the code, this affects more the dynamically dispatch typed calls. The common way to write a program is by containing as more calls as possible at the source level instead of having them at procedural level since the programming style in object oriented encourages factorizing code into small pieces to obtain fine-grained reuse, this practice makes more difficult the optimization and thus affects the execution performance.

Since the appearance of compilers up to today’s modern compilers are unable or very limited into remove dynamical calls; therefore dynamic object oriented programs usually exhibit a higher calling frequency than static procedural programs. The frequent calls, combined with the scarce opportunities for code optimizations, can lead to poor run-time performance.

Calls versus Re-factorization

A good and common behavior when writing a program is to re-factorize the code in order to make it more modular and understandable by dividing the code in to subtasks or subroutines, this approach provides better organization, specialized subroutines and better documentation rather to have all the code application in a single function, however this leads to create more calls that affect the execution performance, specially if a call carries not only the parameters arguments but also the entire object or even worst the complete object environment (e.g. ruby) which will slow down the execution on each call.

Besides that the task to eliminate dynamical calls or to reduce them up to an optimum allowed level by statically binding or in-lining them is not as easy as it might seen, even for the statically-typed languages, the programmer must have very deep knowledge in the code execution process and its application in order to optimize calls.

For example the following C++ code :

MathematicalObject* obj;

...

obj->Exponential(0, 0);

One can think that this type declaration the a compiler can thus statically bind the “Exponential” call, however it cannot because the compiler does not know the object class and therefore it cannot know whether “Any_1::Exponential” or “Any_2::Exponential” will be invoked.

The compiler must know, by explicit declarations in a statically typed language, the type of every object anywhere that the program execution will use. Henceforth the programmer has restrictions on what the compiler is able to provide, in the end it is only extra information, because the compiler does not known if the program is still safe to be executed, for example an infinite loop that might get the program stuck in an overflow call executions, the compiler is unable to know this in advance (just set a simple warning) leaving this cases out of the optimization opportunities.

There are things that can be done in statically typed language and some other that can be done in dynamical typed language, the important trade off here will be from the performance point of view, for instance lets define each one of them and after that make the principal differences before jumping to the performance benchmarking.

Type Inference

Static Object-Oriented Language Type Inference TI

In Statically Typed languages require that all variables to be of a specific type, in this way the compiler can ensure that the variable contains only an object compatible with the specified type. In this way the compiler can ensure the calling method and this kind of errors cannot happen in run time. It introduces safety execution, reliable code and introduces efficiency of the results. However static type are unable to evolve in some circumstances, for example the upper classes restrict the inherited ones to evolve into various forms because the strict type binding concept.

Examples of static languages are Eiffel, Java, C#, C++, Visual Basic, ......

Type Feedback

Dynamic Object-Oriented Language Type Feedback TF

The Dynamic Typed languages don't require variables to be of a specific type, in other words any variable can contain any value or object, this feature makes it more flexible and easy to change on run time, however the programmer must be more careful when a variable is expected to contain certain object, commonly happens that variables have objects other than it was expected, this causes uncertainty when calling a method and it can lead to error at run time. This kin of error can affect the understanding of messages when the objects are not the expected ones or even worse the messages can be interpreted in other different sense.

Examples of dynamic languages are Smalltalk, Ruby, Python, Perl, ...

Principal Comparison Between Static and Dynamic

Table 1 summarizes the principal differences between Dynamic and Static Object Oriented languages from the performance point of view taken from Language Comparissons, below are the explanations of each Feature and its effect, refer to the same link read the complete study.

| Feature | Dynamic | Static |

|---|---|---|

| Generic Classes | No | Yes |

| Inheritance | Both class and object inheritance | Only class inheritance |

| Method Overloading | Yes | No |

| Higher Order Functions & Lexical Closures | Both High Order and Lexical Closures | Only High Order |

| Uniform Access | Yes | No |

| Class Variables/Methods | Yes and very advanced | Yes, but limited |

| Reflection | Yes | No |

'Table 1. Performance comparison of Static and Dynamic.'

Generic Classes

Generic classes refer to those classes that are able to assign proper parameters with specific data types. In static typed languages it permits to keep compile time safe and at the same time to be flexible like dynamically typed languages. For example Java does not have this feature, which is a severe disadvantage compared with other static languages, on the other hand Dynamic typed languages do not need parameterized types in order to support generic programming. In terms of performance the generic classes make the static languages to behave in some sense close to dynamic languages, this is a significant improvement.

Inheritance

Inheritance is the ability to share methods to subclasses or an object can be defined as an extension of another class, most of object oriented languages support class based inheritance, but only a few can support class based and object based inheritance at run time by sharing their code characteristics to another classes or objects

This feature is important; those object oriented languages that have both inheritance have more capability performance.

Method Overloading

Method overloading is when two or more classes have methods with the same name, this is an advantage in some cases, for example a problem arises when calling these methods with the same number and type of arguments from different methods, the solution is to have a different name for each method or relate the method to the direct super classes inheritance. Therefore it is expected to object oriented languages to perform not very well if this kind of errors happens.

Higher Order Functions & Lexical Closures

Higher order functions are those that can be used as data objects, it means that they can be bound to variables and also they can be passed as parameters just like variables, they can also be returned as the result of other functions.

Lexical closures have extra capabilities in the sense that not only passes the functions as a parameter variable, but also it is able to carry the entire environment around with it.

For example Smalltalk and Ruby have both higher order functions and lexical closures, which might be good for expanding their capabilities, however to carry the entire environment with it will certainly slow down the time execution if calls are frequent and it would consume processor resources, henceforth the performance would be affected.

Java and C++ only have high order functions and not lexical closures, however they provide a similar partial support of lexical closures using function objects or "functors".

Uniform Access

The principle behind uniform access is very well explained in Bertrand Meyer's Object-Oriented Software Construction, it states 'All services offered by a module should be available through a uniform notation, which does not betray whether they are implemented through storage or through computation.' It is expected to improve the performance since the calls would have uniform access.

Java in one hand cannot support uniform access, for example the use of “ece.bar” object is different from using “ece.bar()” if it were a function, for instance if “.bar” is changed then any use of “ece” must also be changed.

On the other hand Ruby supports uniform access principle directly.

Class Variables/Methods

Class variable/method exists when there is only one copy of a class despite having many instances of the same class and it is shared by every instance of the class Smalltalk and Ruby support the most advanced notion of class variables and methods, because the classes are by themselves objects. Java and C++ provide "static" members that are basically the same as in dynamic, however they are limited because they cannot be inherited.

Reflection

Reflection is the ability to determine the pieces of information about an object at run-time, the features to determine are the type of the object, its inheritance structure, containing methods, number and types of parameters and return types. Smalltalk, Ruby and Python are very good and flexible in their reflection mechanisms, on the contrary C++ does not provide reflection, Java supports it but it is not flexible. It is expected to perform better if the reflection information is available, but to know all this in advance might slowdown the execution time

Benchmarking Between Static and Dynamic

So far only the definitions of static and dynamic typed languages and their differences have been given with a little guess on the performance, the truth is that performance depends on more than software structures and definitions, it depends of the framework, processor speed and more, to avoid this problem a benchmarking was conducted in a standard environment.

The following is a study of performance of the same program in C, Java (Both static) and Python, Ruby (both dynamic) and run them on a 300MHz Pentium speed, to see the complete experiment follow the link Language Bench.

The standard program is to compute the same 100 polynomial terms 500,000 times by using same algorithm in all programs, the program is intended to tests the access to local vectors and be able to calculate simple arithmetic operations in loops, the advantage is that the program is independent of the different libraries and operating system calls of each object oriented language features.

In this benchmarking the performance is taken as the direct cost in terms of the number of users to achieve a required response time, this cost is directly proportional to computation time, in other words the performance is measured as how quickly operations, loop, access to array elements and function calls last to be completed.

The benchmarking is on C and Java versus Python and Ruby

#include <stdio.h>

#include <stdlib.h>

main(short argc, char **argv) {

float mu = 10.0;

float x,s;

float pu = 0.0;

int su, i, j, n;

float pol[100];

register tm1;

register int tp1;

n = atol(argv[1]);

x = atof(argv[2]);

for(i=0; i<n; i++) {

tp1=2;

tm1=1/2.0;

for (j=0; j<100; j++) {

pol[j] = mu = (mu + tp1) * tm1;

}

s = 0.0;

for (j=0; j<100; j++) {

s = x*s + pol[j];

}

pu += s;

}

printf("%f\n",pu);

}

Java See original code

public class tpoly {

static public void main(String argv[]) {

float mu = (float)10.0;

float x,s;

float pu = (float)0.0;

int su, i, j, n;

float pol[] = new float[100];

n = 500000;

x = (float)0.2;

for(i=0; i<n; i++) {

for (j=0; j<100; j++) {

mu = (mu + (float)2.0) / (float)2.0;

pol[j] = mu;

}

s = (float)0.0;

for (j=0; j<100; j++) {

s = x*s + pol[j];

}

pu += s;

}

System.out.println(pu);

}

}

Python See original code

n = 500000

x = 0.2

def t(x):

mu = 10.0

pu = 0.0

pol =[0] * 100

r = range(0,100)

for i in range(0,n):

for j in r:

pol[j] = mu = (mu + 2.0) / 2.0

su = 0.0

for j in r:

su = x * su + pol[j]

pu = pu + su

print pu

t(x)

Ruby See original code

n = 500000

x = 0.2

mu = 10

pu = 0

pol = []

n.times do

0.upto(99) { |j| pol[j] = mu = (mu + 2) * 0.5 }

s = 0

0.upto(99) { |j| s = x * s + pol[j] }

pu += s

end

print pu,"\n"

Results are listed below, as mentioned above the performance is measured in the seconds needed to perform the simple task.

| Language | Seconds to perform task |

|---|---|

| C, using gcc V2.95.4 | 2.73 |

| Java, gcj V3.0 | 3.03 |

| Python V2.1.2 | 515.04 |

| Ruby | 1074.52 |

Table 2. Benchmark in Seconds to Perform the Simple Task

The conclusion is that the static typed languages have better performance, however the performance in terms on the seconds is disembogues, some may argue correctly that performance must be measured from the programmer point of view when assessing static versus dynamic, for this reason below is a benchmarking from the optimization point of view, but first it is required some rules that will apply for optimization

Optimization of Static and Dynamic Code and Benchmarking

Another benchmarking was found in the attemp to optimize performance, the complete investigation was made and studied in Optimization Benchmarking, in order to accomplish this the programs were divided into two groups.

• “Tiny” and “Small” are a set of very small integer programs, they are included as reference but do not contribute much for the performance point of view

• “Large” is a set of big enough application programs that exhibit a variety of object-oriented programming styles.

Table 3 shows the benchmarking and their description taken from Optimization Benchmarking

Table 3. Benchmark Porgrams Optimization Benchmarking

Although tiny and small are good to measure performance they are unlike real application, instead the focus will be on the "Large" set of applications since they are closer and more realistic to the common applications.

Execution Time:

In this case also the performance was measured in terms of speed, which in the end is the ultimate goal of an optimizing compiler. Figure 1 shows the relative execution time of the benchmarks compiled with TF (dynamic) and TI (static) systems

Figure 1. Execution Time Benchmarking Optimization Benchmarking

Number of Dispatches:

A dispatch is the proper selection of the correct piece of code to be executed, by measuring the number of dispatches that a program performs, it is possible to determine the performance by the number of creating mono-morphic calls.

In this case Type Inference (TI) can determine the exact receiver class for many sends.

In contrast, Type Feedback (TF) requires a dispatch test because the receiver type always includes the unknown class.

The benchmark results are shown in Figure 2

Figure 2. Number of Dispatches Benchmarking Optimization Benchmarking

Conclusions:

1. The best performance is all about the right tool for the job, neither of static nor dynamic is better 100% of the time, in fact the systems benchmarking presented here were created by man and therefore they have flaws, also the experience or background might change the perception of performance.

Dynamic typing might perform better because it gets rid off of many constrains for a static programmer, but runtime errors can mess the performance expected or what was planned from the programmer. In fact dynamic typing may fix the errors, but it may drive a novice programmer crazy trying to find out the data type between a constant char and integer variable

2. Modern Java can actually compiles down to an inherently dynamic intermediate language, therefore dynamic typing isn't the huge performance-killer that it seems to be, the reasons of moving a static language type to dynamic type is not execution time performance, it should be related as how programming making performance is.

3. If the performance is measured in how the code is reusable and transportable then statically typed systems programming are not very good, the constrains of static makes code less reusable, versus the flexible feature of dynamic typed language.

4. One undeniable advantage of static typed language is their earlier detection of programming mistakes, better documentation and more visible opportunities for optimization, which mainly consist of changing virtual calls by known compiled calls, this clearly increases runtime efficiency, in other words “well-typed programs cannot go wrong”, however the static type checking is a compile-time abstraction and hence it is incomplete, which means that execution can still go in a bad way due to the properties that are couldn’t not be tracked.

5. The seeking of making a static typed language less partial and more complete causes the type systems to become very complicated and perhaps more robust than needed, it is true that the performance of static language is very good but it is very rigid, it is like trying to run a marathon with a ball and chain tied to your leg and triumphantly shouting that you nearly made it.

Dynamic languages, on the contrary, are soft and this feature makes them very good for prototyping systems, because it deals with changing or unknown requirements or parameters, dynamic typed languages are indeed indispensable for truly dynamic program behavior like method interception, mobile code, runtime reflection, etc. one undeniable conclusion is that dynamic typed languages enable to the programmer to find errors as early in the development as possible and fix them in place, the interaction with other systems that change dynamically makes them perfect for integration where the static typed code cannot interact in the same way.

References

Programming Language Comparison

Dynamic type languages versus static type languages

Static Typing, Dynamic Language Performance, V8 and Tracing JITs

Static versus Dynamic Languages - Attack of the Clones

See Also

Wikipedia.org: Programming language

Wikipedia.org: Just-in-time compilation

Wikipedia.org: Java performance

Wikipedia.org:Common Language Runtime