CSC/ECE 517 Fall 2017/M1753 OffscreenCanvas

Latest revision as of 18:13, 12 December 2017

The HTML specification defines a <canvas> element that uses a 2D or 3D rendering context to draw graphics. The biggest limitation of <canvas> is that all of the JavaScript that manipulates it has to run on the main thread, so as the UI complexity of an application increases, developers inadvertently hit a performance wall. A new specification was recently developed that defines a canvas that can be used without being associated with an in-page canvas element, allowing for more efficient rendering. The OffscreenCanvas API enables pre-rendering: a separate off-screen canvas is used to render a temporary image, which is then rendered back onto a canvas on the main thread.<ref>https://www.html5rocks.com/en/tutorials/canvas/performance/</ref><ref>https://github.com/servo/servo/wiki/Offscreen-canvas-project</ref>

Introduction

The OffscreenCanvas API provides a way to use the same canvas APIs from a thread other than the main thread. This allows rendering to progress no matter what is going on in the main thread.

Background

Servo is a project to develop a new web browser engine that aims to take advantage of parallelism at many levels. Servo is written in Rust, a new language designed with Servo's requirements in mind. Rust is a systems programming language focused on three goals: safety, speed, and concurrency<ref>https://doc.rust-lang.org/book/first-edition/</ref>. Rust provides a task-parallel infrastructure and a strong type system that enforces memory safety and data-race freedom<ref>https://github.com/servo/servo/wiki/Design</ref>. Servo is now focused both on supporting a full web browser, through the purely HTML-based user interface Browser.html, and on creating a solid, embeddable engine.

The canvas element is part of HTML5 and allows for dynamic, scriptable rendering of 2D shapes and bitmap images. The canvas element should be provided with content that conveys essentially the same function or purpose as the canvas's bitmap. The canvas element has two attributes that control the size of the element's bitmap: width and height. When the canvas element represents embedded content, its intrinsic dimensions are equal to the dimensions of the element's bitmap<ref>https://html.spec.whatwg.org/multipage/canvas.html#the-offscreencanvas-interface</ref>. The biggest limitation of the canvas element is that all of the JavaScript that manipulates it has to run on the main thread. Rendering can sometimes take a long time and lock up the main JavaScript thread, causing the rest of the UI to become slow or unresponsive.

The OffscreenCanvas interface provides a canvas that can be pre-rendered off screen. It is available in both window and worker contexts. Pre-rendering means using a separate offscreen canvas on which to render temporary images, and then rendering the offscreen canvases back onto the visible one<ref>https://www.html5rocks.com/en/tutorials/canvas/performance/</ref>. This API is the first that allows a thread other than the main thread to change what is displayed to the user, so rendering can progress no matter what is going on in the main thread<ref>https://hacks.mozilla.org/2016/01/webgl-off-the-main-thread/</ref>. This API is currently implemented for WebGL1 and WebGL2 contexts only. Our project is to implement the OffscreenCanvas API for the 2D, WebGL, WebGL2, and BitmapRenderer contexts.

Project Overview and Scope

The OffscreenCanvas API project is composed of two primary implementations: OffscreenCanvas, which provides a canvas that can be rendered off screen, and OffscreenCanvasRenderingContext2D, a rendering context interface for drawing to the bitmap of an OffscreenCanvas object. Together they enable pre-rendering: a separate off-screen canvas renders a temporary image, which is then rendered back to the main thread.

Application Architecture

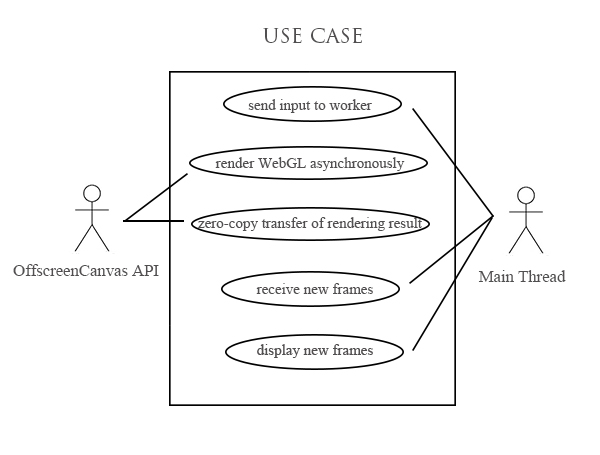

Use Case Description

Rendering heavy shaders in Servo should not slow down the main thread or the other UI components that run on it. The API needs to be able to render WebGL entirely asynchronously from a worker, displaying the results in a canvas owned by the main thread, without any synchronization with the main thread. In this mode, the entire application runs in the worker. The main thread only receives input events and sends them to the worker for processing.

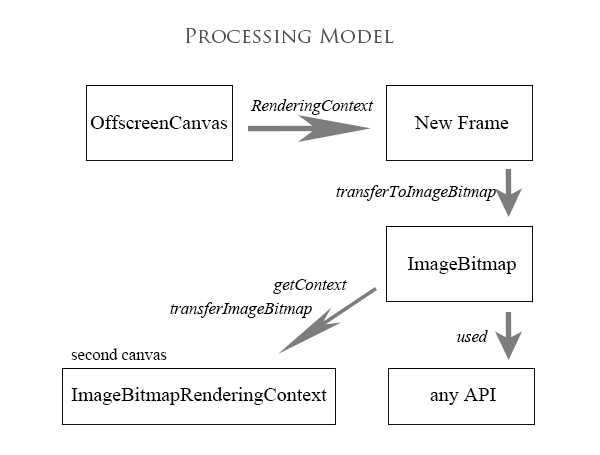
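The threading model in this use case can be sketched in plain Rust, using std::thread as a stand-in for a worker and std::sync::mpsc channels as a stand-in for message passing between threads. The InputEvent and Frame types and the run_worker function are hypothetical illustrations, not Servo or DOM APIs: the main thread only forwards input events, while the worker owns all application state, renders, and sends finished frames back.

```rust
use std::sync::mpsc::{Receiver, Sender};
use std::thread::{self, JoinHandle};

/// Hypothetical input event forwarded from the main thread to the worker.
#[derive(Debug, Clone, Copy)]
pub enum InputEvent {
    Click { x: u32, y: u32 },
    Quit,
}

/// Hypothetical rendered frame: a frame number and the last click position.
#[derive(Debug, Clone, PartialEq)]
pub struct Frame {
    pub number: u64,
    pub last_click: Option<(u32, u32)>,
}

/// Spawns the "worker". It owns all application state, renders one frame
/// per input event and sends each finished frame back to the main thread.
pub fn run_worker(events: Receiver<InputEvent>, frames: Sender<Frame>) -> JoinHandle<()> {
    thread::spawn(move || {
        let mut number = 0;
        let mut last_click = None;
        // The loop ends on Quit, or when the main thread drops its sender.
        for event in events {
            match event {
                InputEvent::Click { x, y } => last_click = Some((x, y)),
                InputEvent::Quit => break,
            }
            number += 1;
            // Heavy rendering would happen here, off the main thread.
            if frames.send(Frame { number, last_click }).is_err() {
                break; // the main thread has gone away
            }
        }
    })
}
```

On the main thread, one would create two channels with std::sync::mpsc::channel(), pass the event receiver and frame sender to run_worker, and then only send events and display whatever frames arrive. Because the worker communicates purely by message passing, the main thread never blocks on rendering.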

Processing Model

The processing model involves synchronous display of new frames produced by the OffscreenCanvas. The application generates new frames using the RenderingContext obtained from the OffscreenCanvas. When the application is finished rendering each new frame, it calls transferToImageBitmap to "tear off" the most recently rendered image from the OffscreenCanvas. The resulting ImageBitmap can then be used in any API receiving that data type; notably, it can be displayed in a second canvas without introducing a copy. An ImageBitmapRenderingContext is obtained from the second canvas by calling getContext("bitmaprenderer"). Each frame is displayed in the second canvas using the transferFromImageBitmap method on this rendering context.
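The "tear off" step maps naturally onto Rust ownership. In the hypothetical sketch below, Bitmap, OffscreenCanvasModel and BitmapRendererModel are illustrative stand-ins, not Servo types. transfer_to_image_bitmap moves the rendered pixels out and leaves a fresh, transparent black bitmap of the same size behind, and transfer_from_image_bitmap takes ownership of the bitmap, so the pixel buffer is moved rather than copied.

```rust
use std::mem;

/// Hypothetical RGBA bitmap: width * height * 4 bytes.
#[derive(Debug, Clone, PartialEq)]
pub struct Bitmap {
    pub width: usize,
    pub height: usize,
    pub pixels: Vec<u8>,
}

impl Bitmap {
    /// A bitmap whose pixels are all transparent black (every byte zero).
    pub fn transparent(width: usize, height: usize) -> Bitmap {
        Bitmap { width, height, pixels: vec![0; width * height * 4] }
    }
}

/// Stand-in for an OffscreenCanvas and its current bitmap.
pub struct OffscreenCanvasModel {
    bitmap: Bitmap,
}

impl OffscreenCanvasModel {
    pub fn new(width: usize, height: usize) -> OffscreenCanvasModel {
        OffscreenCanvasModel { bitmap: Bitmap::transparent(width, height) }
    }

    /// Stand-in for drawing through a rendering context: fills every pixel.
    pub fn fill(&mut self, rgba: [u8; 4]) {
        for px in self.bitmap.pixels.chunks_mut(4) {
            px.copy_from_slice(&rgba);
        }
    }

    /// Moves the rendered bitmap out and replaces it with a new transparent
    /// bitmap of the same dimensions.
    pub fn transfer_to_image_bitmap(&mut self) -> Bitmap {
        let fresh = Bitmap::transparent(self.bitmap.width, self.bitmap.height);
        mem::replace(&mut self.bitmap, fresh)
    }

    pub fn bitmap(&self) -> &Bitmap {
        &self.bitmap
    }
}

/// Stand-in for an ImageBitmapRenderingContext on the visible canvas.
#[derive(Default)]
pub struct BitmapRendererModel {
    pub displayed: Option<Bitmap>,
}

impl BitmapRendererModel {
    /// Takes ownership of the bitmap; its pixel Vec is moved, not copied.
    pub fn transfer_from_image_bitmap(&mut self, bitmap: Bitmap) {
        self.displayed = Some(bitmap);
    }
}
```

Using mem::replace keeps the canvas valid at every point: it always owns some bitmap, and the caller receives the old one by value.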

Implementation Steps

- Create the OffscreenCanvas and OffscreenCanvasRenderingContext2d interfaces.

- Hide the new interfaces by default

- Enable the existing automated tests for this feature

- Implement the OffscreenCanvas constructor that creates a new canvas

- Implement the OffscreenCanvas.getContext ignoring the WebGL requirements

- Extract all relevant canvas operations from CanvasRenderingContext2d

- Implement the convertToBlob API to allow testing the contents of the canvas

- Support offscreen webgl contexts in a similar fashion to the 2d context

Implementation

Step 1: Create Interfaces

Create the OffscreenCanvas and OffscreenCanvasRenderingContext2d interfaces with stub method implementations.

To create the interfaces, follow these steps:

- 1. Add the new IDL files components/script/dom/webidls/OffscreenCanvas.webidl and components/script/dom/webidls/OffscreenCanvasRenderingContext2D.webidl<ref>https://html.spec.whatwg.org/multipage/canvas.html#the-offscreencanvas-interface</ref><ref>https://html.spec.whatwg.org/multipage/canvas.html#the-offscreen-2d-rendering-context</ref>;

cd servo/components/script/dom/webidls

touch OffscreenCanvas.webidl

touch OffscreenCanvasRenderingContext2D.webidl

- 2. Create components/script/dom/offscreencanvas.rs and components/script/dom/offscreencanvasrenderingcontext2d.rs;

cd servo/components/script/dom

touch offscreencanvas.rs

touch offscreencanvasrenderingcontext2d.rs

- 3. List the new modules (offscreencanvas and offscreencanvasrenderingcontext2d) in components/script/dom/mod.rs;

- 4. Define the DOM struct OffscreenCanvas with a #[dom_struct] attribute, a superclass or Reflector member, and other members as appropriate;

- 5. Implement the dom::bindings::codegen::Bindings::OffscreenCanvasBinding::OffscreenCanvasMethods trait for OffscreenCanvas;

- 6. Add or update the match arm in create_element in components/script/dom/create.rs (only applicable to new types inheriting from HTMLElement).
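Steps 4 and 5 can be illustrated outside Servo with simplified stand-ins. In this hypothetical sketch, Reflector is an empty placeholder, the trait only approximates the generated OffscreenCanvasMethods trait (covering the width and height attributes), and the #[dom_struct] attribute is omitted because it only exists inside Servo. Cell is used because DOM methods take &self, so mutable fields need interior mutability.

```rust
use std::cell::Cell;

/// Hypothetical placeholder for Servo's Reflector (the link to the JS object).
pub struct Reflector;

/// Simplified stand-in for the generated OffscreenCanvasMethods trait.
/// Method names follow the IDL attributes width and height.
#[allow(non_snake_case)]
pub trait OffscreenCanvasMethods {
    fn Width(&self) -> u64;
    fn SetWidth(&self, value: u64);
    fn Height(&self) -> u64;
    fn SetHeight(&self, value: u64);
}

/// In Servo this struct would carry #[dom_struct]; here it is a plain struct.
pub struct OffscreenCanvas {
    #[allow(dead_code)]
    reflector_: Reflector,
    width: Cell<u64>,
    height: Cell<u64>,
}

impl OffscreenCanvas {
    pub fn new_inherited(width: u64, height: u64) -> OffscreenCanvas {
        OffscreenCanvas {
            reflector_: Reflector,
            width: Cell::new(width),
            height: Cell::new(height),
        }
    }
}

#[allow(non_snake_case)]
impl OffscreenCanvasMethods for OffscreenCanvas {
    fn Width(&self) -> u64 {
        self.width.get()
    }
    fn SetWidth(&self, value: u64) {
        self.width.set(value)
    }
    fn Height(&self) -> u64 {
        self.height.get()
    }
    fn SetHeight(&self, value: u64) {
        self.height.set(value)
    }
}
```

The u64 field type mirrors the unsigned long long width and height attributes of the OffscreenCanvas IDL.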

Step 2: Hide newly created interfaces by default

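- 1. Hide the new interfaces by default by adding a [Pref="dom.offscreen_canvas.enabled"] attribute to each one and adding a corresponding preference to resources/prefs.json.

- Add the [Pref="dom.offscreen_canvas.enabled"] attribute to the interface declarations in OffscreenCanvas.webidl and OffscreenCanvasRenderingContext2D.webidl.

- Add the corresponding preference, "dom.offscreen_canvas.enabled", to resources/prefs.json, set to false so that the interfaces stay hidden by default.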
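Conceptually, the preference decides whether the gated interfaces are exposed on the global object at all. The following hypothetical sketch models that decision with a HashMap in place of Servo's real preference store; the Prefs alias and the interface_exposed and exposed_interfaces functions are illustrative names only. In this model an absent preference counts as disabled, which is what keeps the interfaces hidden by default.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for the parsed contents of resources/prefs.json.
pub type Prefs = HashMap<String, bool>;

/// True only if the preference exists and is set to true.
pub fn interface_exposed(prefs: &Prefs, pref_name: &str) -> bool {
    prefs.get(pref_name).copied().unwrap_or(false)
}

/// Names of the gated interfaces that would be installed on the global.
pub fn exposed_interfaces(prefs: &Prefs) -> Vec<&'static str> {
    let gated = [
        ("OffscreenCanvas", "dom.offscreen_canvas.enabled"),
        ("OffscreenCanvasRenderingContext2D", "dom.offscreen_canvas.enabled"),
    ];
    gated
        .iter()
        .filter(|(_, pref)| interface_exposed(prefs, pref))
        .map(|(name, _)| *name)
        .collect()
}
```

Gating both interfaces on one preference means they are always enabled or disabled together, which matches the single dom.offscreen_canvas.enabled entry used above.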

Step 3: Enable Tests

- 1. Add an offscreen-canvas directory to the WPT test metadata folder.

cd servo/tests/wpt/metadata

mkdir offscreen-canvas

- 2. Enable the test preference for offscreen-canvas by adding a __dir__.ini file to the newly created directory.

cd servo/tests/wpt/metadata/offscreen-canvas

touch __dir__.ini

- 3. Run the tests and update the expected results<ref>https://github.com/servo/servo/blob/master/tests/wpt/README.md#updating-test-expectations</ref>.

Step 4: Implement OffscreenCanvas Constructor

Create a new OffscreenCanvas by implementing the following constructor:

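pub fn Constructor(
    global: &GlobalScope,
    width: u64,
    height: u64,
) -> Result<DomRoot<OffscreenCanvas>, Error> {
    // Construction itself cannot fail here, so the new canvas is wrapped in Ok.
    Ok(OffscreenCanvas::new(global, width, height))
}

// Returns the canvas dimensions as a Size2D<i32>.
// Note: the `as i32` casts truncate dimensions larger than i32::MAX.
pub fn get_size(&self) -> Size2D<i32> {
    Size2D::new(self.Width() as i32, self.Height() as i32)
}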
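The constructor logic can be exercised outside Servo with stand-in types. In this hypothetical sketch, Size2D replaces euclid's Size2D<i32>, Error is a one-variant placeholder for Servo's DOM error type, the global scope is dropped, and the canvas is returned by value instead of as DomRoot<OffscreenCanvas>. As a design variant, get_size uses i32::try_from and reports an error instead of letting an `as` cast truncate very large dimensions.

```rust
use std::convert::TryFrom;

/// Stand-in for euclid's Size2D<i32>.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Size2D {
    pub width: i32,
    pub height: i32,
}

/// Hypothetical placeholder for Servo's DOM Error type.
#[derive(Debug, PartialEq)]
pub enum Error {
    Range,
}

pub struct OffscreenCanvas {
    width: u64,
    height: u64,
}

impl OffscreenCanvas {
    fn new(width: u64, height: u64) -> OffscreenCanvas {
        OffscreenCanvas { width, height }
    }

    /// Mirrors `new OffscreenCanvas(width, height)` from script.
    #[allow(non_snake_case)]
    pub fn Constructor(width: u64, height: u64) -> Result<OffscreenCanvas, Error> {
        Ok(OffscreenCanvas::new(width, height))
    }

    /// Like get_size, but refuses dimensions that do not fit in an i32.
    pub fn get_size(&self) -> Result<Size2D, Error> {
        let width = i32::try_from(self.width).map_err(|_| Error::Range)?;
        let height = i32::try_from(self.height).map_err(|_| Error::Range)?;
        Ok(Size2D { width, height })
    }
}
```

With the `as i32` version, a width of 2^32 would silently become 0; the checked version surfaces that case instead.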

Step 5: Implement the getContext() method for OffscreenCanvas element

Implement the OffscreenCanvas.getContext ignoring the WebGL requirements:

#[allow(unsafe_code)]
// https://html.spec.whatwg.org/multipage/#dom-offscreencanvas-getcontext
unsafe fn GetContext(&self,
                     cx: *mut JSContext,
                     id: DOMString,
                     attributes: Vec<HandleValue>)
                     -> Option<OffscreenRenderingContext> {
    match &*id {
        "2d" => {
            self.get_or_init_2d_context()
                .map(OffscreenRenderingContext::OffscreenCanvasRenderingContext2D)
        }
        "webgl" | "experimental-webgl" => {
            self.get_or_init_webgl_context(cx, attributes.get(0).cloned())
                .map(OffscreenRenderingContext::WebGLRenderingContext)
        }
        _ => None
    }
}
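The get_or_init_* helpers imply that a canvas keeps at most one context: the first call creates it, later calls with the same id return the same object, and, as we understand the specification, a request for a different kind of context returns null. The following hypothetical sketch models that behaviour with a RefCell slot; Context2D, WebGLContext and the TwoD/WebGL variant names are illustrative stand-ins, and only "2d" and "webgl" are modelled.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Hypothetical context types standing in for Servo's DOM objects.
#[derive(Debug)]
pub struct Context2D;

#[derive(Debug)]
pub struct WebGLContext;

/// Mirrors the union of context types that getContext can return.
#[derive(Debug, Clone)]
pub enum OffscreenRenderingContext {
    TwoD(Rc<Context2D>),
    WebGL(Rc<WebGLContext>),
}

#[derive(Default)]
pub struct OffscreenCanvas {
    context: RefCell<Option<OffscreenRenderingContext>>,
}

impl OffscreenCanvas {
    /// Creates the context on first use, returns the same context for the
    /// same id afterwards, and returns None for a mismatched or unknown id.
    pub fn get_context(&self, id: &str) -> Option<OffscreenRenderingContext> {
        let mut slot = self.context.borrow_mut();
        if slot.is_none() {
            let created = match id {
                "2d" => OffscreenRenderingContext::TwoD(Rc::new(Context2D)),
                "webgl" => OffscreenRenderingContext::WebGL(Rc::new(WebGLContext)),
                _ => return None, // id not modelled in this sketch
            };
            *slot = Some(created);
        }
        let same_mode = match (id, slot.as_ref()) {
            ("2d", Some(OffscreenRenderingContext::TwoD(_))) => true,
            ("webgl", Some(OffscreenRenderingContext::WebGL(_))) => true,
            _ => false,
        };
        if same_mode { slot.clone() } else { None }
    }
}
```

Returning a cloned Rc hands out another handle to the same context object rather than a new context, which is the "get or init" behaviour the real method relies on.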

Step 6: Extract all relevant canvas operations from CanvasRenderingContext2d

Extract all relevant canvas operations from CanvasRenderingContext2d into an implementation shared with OffscreenCanvasRenderingContext2d. Create a trait that abstracts away any operation that currently uses self.canvas in the 2D canvas rendering context, since the offscreen canvas rendering context has no associated <canvas> element.

/// Trait to get the dimensions of the canvas that a rendering context draws to
pub trait OffscreenCanvasRendering {
    // Get the width of the offscreen-canvas element
    fn width(&self) -> u64;

    // Get the height of the offscreen-canvas element
    fn height(&self) -> u64;
}
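The benefit of the trait is that a shared operation can be written once and used by both rendering contexts. In this hypothetical sketch, HtmlCanvasElement and OffscreenCanvas are simplified stand-ins that both implement the trait, and clip_rect is an illustrative helper (not taken from Servo) that clips a rectangle to the canvas bounds using only width() and height(). It uses unsigned integers for brevity, whereas real canvas coordinates are floating point and may be negative.

```rust
/// Same shape as the trait above, with both methods required.
pub trait OffscreenCanvasRendering {
    fn width(&self) -> u64;
    fn height(&self) -> u64;
}

/// Hypothetical stand-ins for the two owners of a 2D context.
pub struct HtmlCanvasElement {
    pub width: u64,
    pub height: u64,
}

pub struct OffscreenCanvas {
    pub width: u64,
    pub height: u64,
}

impl OffscreenCanvasRendering for HtmlCanvasElement {
    fn width(&self) -> u64 { self.width }
    fn height(&self) -> u64 { self.height }
}

impl OffscreenCanvasRendering for OffscreenCanvas {
    fn width(&self) -> u64 { self.width }
    fn height(&self) -> u64 { self.height }
}

/// Shared operation written once against the trait: clips the rectangle
/// (x, y, w, h) to the canvas, returning None if nothing is left.
pub fn clip_rect<C: OffscreenCanvasRendering>(
    canvas: &C, x: u64, y: u64, w: u64, h: u64,
) -> Option<(u64, u64, u64, u64)> {
    let (cw, ch) = (canvas.width(), canvas.height());
    if x >= cw || y >= ch {
        return None;
    }
    let w = w.min(cw - x);
    let h = h.min(ch - y);
    if w == 0 || h == 0 { None } else { Some((x, y, w, h)) }
}
```

Because clip_rect is generic over the trait, neither context needs to know whether a <canvas> element exists behind it.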

Step 7: Implement the convertToBlob API

Implement the convertToBlob API to allow testing the contents of the canvas.
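As we read the WHATWG specification, convertToBlob rejects before any encoding happens in three cases: an InvalidStateError if the canvas has been detached, a SecurityError if the bitmap is not origin-clean, and an IndexSizeError if either dimension is zero; otherwise it encodes the bitmap, as image/png by default. The hypothetical sketch below models only these checks, synchronously and without promises. DomException, Blob and the encode parameter are illustrative stand-ins, and encode is the hook where a real PNG encoder would go.

```rust
/// Hypothetical subset of DOMException names used by convertToBlob.
#[derive(Debug, PartialEq)]
pub enum DomException {
    InvalidState,
    Security,
    IndexSize,
}

/// Hypothetical blob: encoded bytes plus a MIME type.
#[derive(Debug, PartialEq)]
pub struct Blob {
    pub bytes: Vec<u8>,
    pub mime_type: String,
}

pub struct OffscreenCanvas {
    pub width: u64,
    pub height: u64,
    pub detached: bool,
    pub origin_clean: bool,
    pub pixels: Vec<u8>,
}

impl OffscreenCanvas {
    /// Runs the rejection checks in order, then hands the pixels to `encode`.
    pub fn convert_to_blob(
        &self,
        encode: impl Fn(u64, u64, &[u8]) -> Vec<u8>,
    ) -> Result<Blob, DomException> {
        if self.detached {
            return Err(DomException::InvalidState);
        }
        if !self.origin_clean {
            return Err(DomException::Security);
        }
        if self.width == 0 || self.height == 0 {
            return Err(DomException::IndexSize);
        }
        Ok(Blob {
            bytes: encode(self.width, self.height, &self.pixels),
            mime_type: "image/png".to_string(), // the default type
        })
    }
}
```

Injecting the encoder keeps the checks testable on their own: a test can pass a trivial closure and compare the resulting bytes with the pixels it drew.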

Step 8: Support offscreen WebGL contexts in a similar fashion to the 2D context

Support offscreen WebGL contexts in a similar fashion to the 2D context, by sharing relevant code between OffscreenCanvasRenderingContext2d and WebGLRenderingContext.

#[inline]
fn request_image_from_cache(&self, url: ServoUrl) -> ImageResponse {
    self.base.request_image_from_cache(url)
}

pub fn origin_is_clean(&self) -> bool {
    self.base.origin_is_clean()
}
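The methods above delegate to a shared base object. The hypothetical sketch below shows that composition pattern with simplified stand-ins: CanvasBase, its taint method, ImageResponse, Offscreen2DContext and WebGLContext are illustrative names, URLs are plain strings instead of ServoUrl, and the image cache is just a set of known URLs. Each context owns a CanvasBase and forwards to it, so the shared behaviour is written only once.

```rust
use std::collections::HashSet;

/// Hypothetical stand-in for Servo's ImageResponse.
#[derive(Debug, PartialEq)]
pub enum ImageResponse {
    Loaded(String),
    NotAvailable,
}

/// Hypothetical state shared by both rendering contexts.
pub struct CanvasBase {
    origin_clean: bool,
    cached_urls: HashSet<String>,
}

impl CanvasBase {
    pub fn new(cached_urls: &[&str]) -> CanvasBase {
        CanvasBase {
            origin_clean: true,
            cached_urls: cached_urls.iter().map(|url| url.to_string()).collect(),
        }
    }

    pub fn request_image_from_cache(&self, url: &str) -> ImageResponse {
        if self.cached_urls.contains(url) {
            ImageResponse::Loaded(url.to_string())
        } else {
            ImageResponse::NotAvailable
        }
    }

    pub fn origin_is_clean(&self) -> bool {
        self.origin_clean
    }

    /// Called when cross-origin content is drawn; clears the flag.
    pub fn taint(&mut self) {
        self.origin_clean = false;
    }
}

pub struct Offscreen2DContext {
    pub base: CanvasBase,
}

pub struct WebGLContext {
    pub base: CanvasBase,
}

impl Offscreen2DContext {
    #[inline]
    pub fn request_image_from_cache(&self, url: &str) -> ImageResponse {
        self.base.request_image_from_cache(url)
    }
    pub fn origin_is_clean(&self) -> bool {
        self.base.origin_is_clean()
    }
}

impl WebGLContext {
    #[inline]
    pub fn request_image_from_cache(&self, url: &str) -> ImageResponse {
        self.base.request_image_from_cache(url)
    }
    pub fn origin_is_clean(&self) -> bool {
        self.base.origin_is_clean()
    }
}
```

Compared with the trait in Step 6, composition shares state as well as behaviour; the forwarding methods stay one line each.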

Testing Details

- 1. Building

Servo is built with the Rust package manager, Cargo.

The following commands build Servo in development mode; note that the resulting binary is very slow.

git clone https://github.com/jitendra29/servo.git

cd servo

./mach build --dev

./mach run tests/html/about-mozilla.html

- 2. Testing

Servo provides automated tests. To create an optimized build for running them, add the --release flag:

./mach build --release

./mach run --release tests/html/about-mozilla.html

Notes:

- We have not added any new tests to the test suite, because Servo follows TDD and tests for OffscreenCanvas had already been written. We adjusted some of the test expectations so that the tests pass with our implementation.

Design Pattern

Prototype Pattern

The Prototype pattern is used in this project, since the rendering-context functionality that the OffscreenCanvas API implements coincides with that of the canvas element and CanvasRenderingContext2D, both of which Servo already implements.

DRY Pattern

To avoid needless duplication, we extract all relevant canvas operations from CanvasRenderingContext2d into an implementation shared with OffscreenCanvasRenderingContext2d.

Pull Request

Here is our pull request. In the link you can see all code snippets changed due to implementing the above steps, as well as integration test progression information.

References

<references/>