CSC/ECE 506 Fall 2007/wiki4 7 jp07: Difference between revisions

No edit summary |

No edit summary |

||

| (5 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

== Helper Threads == | == Helper Threads == | ||

One of the problems when using parallel machines is that the machine is only | One of the problems when using parallel machines is that the machine is typically fed only sequential code that cannot be run in parallel. Parallel programming is rare, and programmers that are accustomed to programming for a single threaded environment are resitant to switch paradigms to a multi-thread environment. Therefore, much of the benefit of having the ability to run multiple threads simultaneously is lost. This is applicable to all of the multi-threading paradigms including Simultaneous Multithread Systems (SMTs) [http://en.wikipedia.org/wiki/Simultaneous_multithreading], Symmetric Multiprocessors (SMPs) [http://en.wikipedia.org/wiki/Symmetric_multiprocessing], and Chip Multiprocessors (CMPs) [http://en.wikipedia.org/wiki/Multi-core_%28computing%29]. | ||

A solution would be to rewrite or recompile the programs to make use of parallel execution. But, in some cases this may be too time consuming or even unfeasible due to the nature of the program. Also, as mentioned earlier programmers are resistant to writing parallel code. The other possibility is to allow the compiler to extract the parallelism from the program using automated techniques. These techniques work well for scientific code with regularly indexed loops. But for standard code, these techniques are ineffective and are unable to extract much parallelism. | |||

Helper threads run in parallel to the main thread, and do work for the main thread to improve it's performance [Olokuton]. Typically these threads will execute parts of the program "ahead" of the main thread, in an attempt to predict branches and/or values before the main thread completes. This is done to help shadow the penalty of long latency instructions. The figure below illustrates the basic concepts of helper thread execution. | In response to these two extremes, there exists a middle ground where the sequential program is not truly parallelized but the multithreading capabilities are utilized to improve execution time. By forking of threads to assist the main thread, these "helper threads" speed up the execution of the main thread. | ||

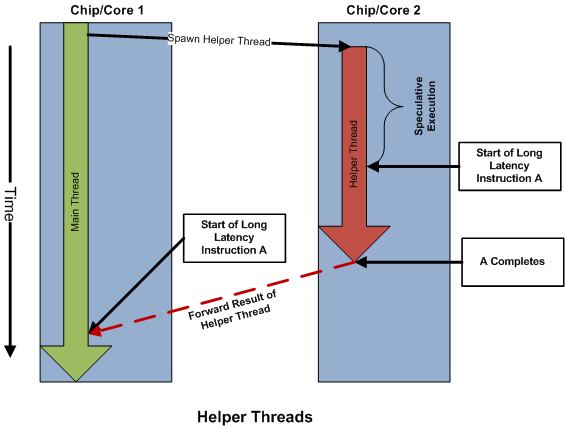

Helper threads run in parallel to the main thread, and do work for the main thread to improve it's performance [Olokuton Text]. Typically these threads will execute parts of the program "ahead" of the main thread, in an attempt to predict branches and/or values before the main thread completes. This is done to help shadow the penalty of long latency instructions. The figure below illustrates the basic concepts of helper thread execution. | |||

<div align="center">[[Image:Helper_threads.png]]</div> | <div align="center">[[Image:Helper_threads.png]]</div> | ||

Note that in a CMP the two contexts would be separate chips, or in an SMT they would be separate thread contexts. The main sequential program will have some knowledge from previous training or via the compiler that a potential long latency instruction such as a cache miss is upcoming. Through some history or prior knowledge the helper thread runs ahead of the main thread executing the instruction vital only to the long-latency instruction. This thread completes ahead of the main thread, so that when the main thread finally reaches the long latency instruction, the helper thread can forward the computed result. | Note that in a CMP the two contexts shown above would be separate chips, or in an SMT they would be separate thread contexts. The main sequential program will have some knowledge from previous training or via the compiler [http://www.google.com/url?sa=t&ct=res&cd=1&url=http%3A%2F%2Fpact05.ce.ucsc.edu%2Fdatacentric.pdf&ei=8SpOR_2oNJvMeMXjpY8N&usg=AFQjCNGKl9aloiJ_PrQHobjnHMmHvwzo0g&sig2=klWNxGwt7yrPpjciV9MpzQ] that a potential long latency instruction such as a cache miss is upcoming. Through some history or prior knowledge the helper thread runs ahead of the main thread executing the instruction vital only to the long-latency instruction. This thread completes ahead of the main thread, so that when the main thread finally reaches the long latency instruction, the helper thread can forward the computed result. | ||

== Applications of Helper Threads == | == Applications of Helper Threads == | ||

| Line 15: | Line 17: | ||

=== Speculative Branch Prediction === | === Speculative Branch Prediction === | ||

One class of helper thread applications is prediction helper threads | One class of helper thread applications is speculative branch prediction helper threads. [http://csdl2.computer.org/persagen/DLAbsToc.jsp?resourcePath=/dl/proceedings/&toc=comp/proceedings/isca/2002/1605/00/1605toc.xml&DOI=10.1109/ISCA.2002.1003588] The technique proposed uses Simultaneous Subordinate Multithreading (SSMT) to run helper threads called "microthreads". These threads consist of microcode, which is code written specifically for manipulating hardware structures within the processor. A SPAWN instruction within the program is used to indicate when a microthread should be initiated. For the branch prediction mechanism the scheme dynamically decides on likely mispredicted branches and constructs microthreads to predict these branches. | ||

Difficult to predict branches are determined using a path cache that stores information about previous branch mispredictions and tracks the difficulty of these branches to predict. Before a microthread is created a branch must go through a training interval where the difficulty is determined. | A microthread will consist of relavant instructions for the branch mispredict and execute them in parallel to the branch. This is of course completely speculative, but will be much more accurate than a traditional branch predictor as actual code is used to generate the prediction. | ||

Difficult to predict branches are determined using a path cache that stores information about previous branch mispredictions and tracks the difficulty of these branches to predict. Before a microthread is created a branch must go through a training interval where the difficulty to predict is determined. If a branch mispredicts this will increase the difficulty metric, whereas a correct prediction would indicate it is less difficult. | |||

The microthreads are created using a post retirement buffer (PRB). Upon retirement, instructions are inserted into the post retirement buffer along with dependence information. When a difficult branch retires a scanner scans the PRB for the recent dependent instructions that the branch depended on and creates a microthread. This microthread is stored and used when the microthread is spawned before the next branch occurs. This way the helper thread computes the branch target before the actual thread reaches the branch. | The microthreads are created using a post retirement buffer (PRB). Upon retirement, instructions are inserted into the post retirement buffer along with dependence information. When a difficult branch retires a scanner scans the PRB for the recent dependent instructions that the branch depended on and creates a microthread. This microthread is stored and used when the microthread is spawned before the next branch occurs. This way the helper thread computes the branch target before the actual thread reaches the branch. | ||

=== Speculative | === Speculative Prefetching === | ||

Another application of helper thread technology is the use of speculative prefetching. This attempts to mask the long latency of an L2 miss by handling it prior to the main thread execution.[http://www.google.com/url?sa=t&ct=res&cd=1&url=http%3A%2F%2Fwww.cs.cmu.edu%2F~luk%2Fluk_papers%2Fisca01.ps.gz&ei=ci5OR4GPGKiMeu3R2JIN&usg=AFQjCNFJr4YY6GLRhUe9kp4ynBdYtqRknw&sig2=oePO2l1PgzkQhI5agm6zlQ ] | |||

In these ideas, the techniques will use helper threads to follow pointer chains or procedure calls into the future to encounter memory accesses ahead of time. This way if there are L2 misses during this pre-execution, then the helper thread will have prefetched this data for the main thread. | |||

==== Potential Problems ==== | |||

In speculative prefetching, the key is that runahead execution can cause major cache thrashing. If the execution run-ahead prefetches data before the data is used in the main thread, then misses will occur that did not exist previously. Furthermore, if speculative execution is too aggressive, then multiple prefetches to the same cache line may occur and the prefetched data may be removed before the main thread ever uses it, thus causing cache misses and cache thrashing. | |||

Alternatively, if the thread is not aggressive enough, then there will be no benefit for the prefetching. The prefetch will not cover the L2 miss latency and thus the usefulness of the work is lost. It is possible that the prefetch start slightly ahead of the main thread and partially covers the L2 miss penalty but not fully. | |||

Therefore, the key area of research in this field is to balance the aggressiveness of speculation with the amount of thread synchronization. | |||

=== Slipstream Technology === | === Slipstream Technology === | ||

=== | The Slipstream processor [http://www.tinker.ncsu.edu/ericro/publications/conference_ASPLOS-9.pdf] uses helper threads to run two versions of a program simultaneously. An instruction removal predictor and confidence are used to generate an "A-stream" program that executes a subset of the instructions of the "R-stream" program (the full program execution). Both run simultenously alongside one another with the A-stream providing data values and branch predictions ahead of time to the R-stream. | ||

A delay buffer is placed between the two instruction streams to communicate the necessary information. This allows the A-stream to mask some of the latencies incurred by the R-stream execution. The name slipstream occurs because the A-stream is running slightly faster than the R-stream, and the residual trail is pulling the R-stream along, similar to how a car is pulled along when it is drafting another car at high speeds. | |||

This technology not only provides improvement in execution time for a single threaded program, but also provide fault tolerance. At high clock speeds and tighter transistor packing, the likelihood of transient faults are increasing. Therefore redundant execution can be used to assist in detecting transient faults. Since the A-stream and R-stream have overlap, the two can validate execution and detect transient faults. However, this will only work for instructions that exist in both versions of the program. | |||

== Conclusions == | |||

There has been even more work done in helper thread research; however, most of the work centers around using the thread to execute the relevant instructions for a long latency operation ahead of normal execution to get the result sooner. There are many challenges that are face with synchronization, determining the best point to spawn the helper thread, and the importance of having a helper thread for a particular instruction (priority management). However, what is clear from these techniques is that even for highly sequential code, the benefits of a multi-core system can be leveraged, and money, power, and area are not completely wasted. | |||

== Additional Links == | == Additional Links == | ||

| Line 36: | Line 60: | ||

* [http://csdl2.computer.org/persagen/DLAbsToc.jsp?resourcePath=/dl/proceedings/&toc=comp/proceedings/isca/2002/1605/00/1605toc.xml&DOI=10.1109/ISCA.2002.1003588 Difficult Path Branch Prediction using Helper Threads] | * [http://csdl2.computer.org/persagen/DLAbsToc.jsp?resourcePath=/dl/proceedings/&toc=comp/proceedings/isca/2002/1605/00/1605toc.xml&DOI=10.1109/ISCA.2002.1003588 Difficult Path Branch Prediction using Helper Threads] | ||

* [http://www.google.com/url?sa=t&ct=res&cd=1&url=http%3A%2F%2Fpact05.ce.ucsc.edu%2Fdatacentric.pdf&ei=8SpOR_2oNJvMeMXjpY8N&usg=AFQjCNGKl9aloiJ_PrQHobjnHMmHvwzo0g&sig2=klWNxGwt7yrPpjciV9MpzQ Compiler Support for Helper Threading] | * [http://www.google.com/url?sa=t&ct=res&cd=1&url=http%3A%2F%2Fpact05.ce.ucsc.edu%2Fdatacentric.pdf&ei=8SpOR_2oNJvMeMXjpY8N&usg=AFQjCNGKl9aloiJ_PrQHobjnHMmHvwzo0g&sig2=klWNxGwt7yrPpjciV9MpzQ Compiler Support for Helper Threading] | ||

* [http://www.google.com/url?sa=t&ct=res&cd=1&url=http%3A%2F%2Fwww.cs.cmu.edu%2F~luk%2Fluk_papers%2Fisca01.ps.gz&ei=ci5OR4GPGKiMeu3R2JIN&usg=AFQjCNFJr4YY6GLRhUe9kp4ynBdYtqRknw&sig2=oePO2l1PgzkQhI5agm6zlQ Tolerating Memory Latency through prefetching with helper threads] | |||

Latest revision as of 23:02, 3 December 2007

Helper Threads

One of the problems when using parallel machines is that the machine is typically fed only sequential code that cannot be run in parallel. Parallel programming is rare, and programmers that are accustomed to programming for a single threaded environment are resitant to switch paradigms to a multi-thread environment. Therefore, much of the benefit of having the ability to run multiple threads simultaneously is lost. This is applicable to all of the multi-threading paradigms including Simultaneous Multithread Systems (SMTs) [1], Symmetric Multiprocessors (SMPs) [2], and Chip Multiprocessors (CMPs) [3].

A solution would be to rewrite or recompile the programs to make use of parallel execution. But, in some cases this may be too time consuming or even unfeasible due to the nature of the program. Also, as mentioned earlier programmers are resistant to writing parallel code. The other possibility is to allow the compiler to extract the parallelism from the program using automated techniques. These techniques work well for scientific code with regularly indexed loops. But for standard code, these techniques are ineffective and are unable to extract much parallelism.

In response to these two extremes, there exists a middle ground where the sequential program is not truly parallelized but the multithreading capabilities are utilized to improve execution time. By forking of threads to assist the main thread, these "helper threads" speed up the execution of the main thread.

Helper threads run in parallel to the main thread, and do work for the main thread to improve it's performance [Olokuton Text]. Typically these threads will execute parts of the program "ahead" of the main thread, in an attempt to predict branches and/or values before the main thread completes. This is done to help shadow the penalty of long latency instructions. The figure below illustrates the basic concepts of helper thread execution.

Note that in a CMP the two contexts shown above would be separate chips, or in an SMT they would be separate thread contexts. The main sequential program will have some knowledge from previous training or via the compiler [4] that a potential long latency instruction such as a cache miss is upcoming. Through some history or prior knowledge the helper thread runs ahead of the main thread executing the instruction vital only to the long-latency instruction. This thread completes ahead of the main thread, so that when the main thread finally reaches the long latency instruction, the helper thread can forward the computed result.

Applications of Helper Threads

Speculative Branch Prediction

One class of helper thread applications is speculative branch prediction helper threads. [5] The technique proposed uses Simultaneous Subordinate Multithreading (SSMT) to run helper threads called "microthreads". These threads consist of microcode, which is code written specifically for manipulating hardware structures within the processor. A SPAWN instruction within the program is used to indicate when a microthread should be initiated. For the branch prediction mechanism the scheme dynamically decides on likely mispredicted branches and constructs microthreads to predict these branches.

A microthread will consist of relavant instructions for the branch mispredict and execute them in parallel to the branch. This is of course completely speculative, but will be much more accurate than a traditional branch predictor as actual code is used to generate the prediction.

Difficult to predict branches are determined using a path cache that stores information about previous branch mispredictions and tracks the difficulty of these branches to predict. Before a microthread is created a branch must go through a training interval where the difficulty to predict is determined. If a branch mispredicts this will increase the difficulty metric, whereas a correct prediction would indicate it is less difficult.

The microthreads are created using a post retirement buffer (PRB). Upon retirement, instructions are inserted into the post retirement buffer along with dependence information. When a difficult branch retires a scanner scans the PRB for the recent dependent instructions that the branch depended on and creates a microthread. This microthread is stored and used when the microthread is spawned before the next branch occurs. This way the helper thread computes the branch target before the actual thread reaches the branch.

Speculative Prefetching

Another application of helper thread technology is the use of speculative prefetching. This attempts to mask the long latency of an L2 miss by handling it prior to the main thread execution.[6]

In these ideas, the techniques will use helper threads to follow pointer chains or procedure calls into the future to encounter memory accesses ahead of time. This way if there are L2 misses during this pre-execution, then the helper thread will have prefetched this data for the main thread.

Potential Problems

In speculative prefetching, the key is that runahead execution can cause major cache thrashing. If the execution run-ahead prefetches data before the data is used in the main thread, then misses will occur that did not exist previously. Furthermore, if speculative execution is too aggressive, then multiple prefetches to the same cache line may occur and the prefetched data may be removed before the main thread ever uses it, thus causing cache misses and cache thrashing.

Alternatively, if the thread is not aggressive enough, then there will be no benefit for the prefetching. The prefetch will not cover the L2 miss latency and thus the usefulness of the work is lost. It is possible that the prefetch start slightly ahead of the main thread and partially covers the L2 miss penalty but not fully.

Therefore, the key area of research in this field is to balance the aggressiveness of speculation with the amount of thread synchronization.

Slipstream Technology

The Slipstream processor [7] uses helper threads to run two versions of a program simultaneously. An instruction removal predictor and confidence are used to generate an "A-stream" program that executes a subset of the instructions of the "R-stream" program (the full program execution). Both run simultenously alongside one another with the A-stream providing data values and branch predictions ahead of time to the R-stream.

A delay buffer is placed between the two instruction streams to communicate the necessary information. This allows the A-stream to mask some of the latencies incurred by the R-stream execution. The name slipstream occurs because the A-stream is running slightly faster than the R-stream, and the residual trail is pulling the R-stream along, similar to how a car is pulled along when it is drafting another car at high speeds.

This technology not only provides improvement in execution time for a single threaded program, but also provide fault tolerance. At high clock speeds and tighter transistor packing, the likelihood of transient faults are increasing. Therefore redundant execution can be used to assist in detecting transient faults. Since the A-stream and R-stream have overlap, the two can validate execution and detect transient faults. However, this will only work for instructions that exist in both versions of the program.

Conclusions

There has been even more work done in helper thread research; however, most of the work centers around using the thread to execute the relevant instructions for a long latency operation ahead of normal execution to get the result sooner. There are many challenges that are face with synchronization, determining the best point to spawn the helper thread, and the importance of having a helper thread for a particular instruction (priority management). However, what is clear from these techniques is that even for highly sequential code, the benefits of a multi-core system can be leveraged, and money, power, and area are not completely wasted.

Additional Links

- Dynamic Speculative Precomputation

- Slipstream Processors

- A Survey on Helper Threads

- HeapMON -- Helper Thread Bug Detection Scheme with contributions from NCSU

- Helper Threads via Virtual Multithreading

- Difficult Path Branch Prediction using Helper Threads

- Compiler Support for Helper Threading

- Tolerating Memory Latency through prefetching with helper threads