CSC/ECE 506 Fall 2007/wiki4 5 1008

| (13 intermediate revisions by the same user not shown) | |||

| Line 12: | Line 12: | ||

A technique used to run multiple threads at the same time. Care needs to be taken by the programmer to ensure multiple threads do not interfere with each other.<br> | A technique used to run multiple threads at the same time. Care needs to be taken by the programmer to ensure multiple threads do not interfere with each other.<br> | ||

'''Superscaler'''<br> | '''Superscaler'''<br> | ||

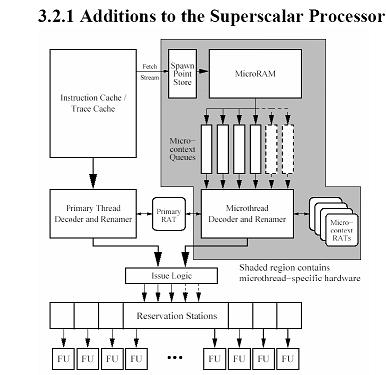

A processor architecture that executes more than one instruction during a single pipeline stage by pre-fetching multiple instructions and simultaneously dispatching them to redundant functional units on the processor(wikipedia).<br> | A processor architecture that executes more than one instruction during a single pipeline stage by pre-fetching multiple instructions and simultaneously dispatching them to redundant functional units on the processor(wikipedia). One of the drawbacks to using this type of processor is that it must be modified in order to use helper threads as illustrated below.<br> | ||

[[Image:Superscaler.jpg]] | |||

Picture credit: Studer | |||

==Types of Helper threads== | ==Types of Helper threads== | ||

| Line 20: | Line 22: | ||

A helper thread where an exact copy of the program is run as the “future thread” on the processor whenever the main thread runs out of its allocated set of registers, signifying limited instruction level parallelism(studer).<br> | A helper thread where an exact copy of the program is run as the “future thread” on the processor whenever the main thread runs out of its allocated set of registers, signifying limited instruction level parallelism(studer).<br> | ||

'''Slipstream Processing'''<br> | '''Slipstream Processing'''<br> | ||

1 dual processor CMP that runs redundant copies of the same program. A significant number of dynamic instructions are removed from one of the program copies, without sacrificing its ability to make correct forward progress | 1 dual processor CMP that runs redundant copies of the same program. A significant number of dynamic instructions are removed from one of the program copies, without sacrificing its ability to make correct forward progress(Ibrihim,Byrd,Rotenberg). These two copies are broken into an A-stream and an R-stream.<br> | ||

==Uses of helper threads== | ==Uses of helper threads== | ||

| Line 30: | Line 32: | ||

Up to this point only parallel helper threads have been discussed. There are also sequential helper threads. These threads work in a manner similar to slipstream processors, which will be discussed later, except instead of running a separate copy of the same program all of the time, they run what is know as a future thread. While executing, the future thread can resolve branches, prefetch data, and calculate values that will be used by the main thread(Studer). A future thread is triggered when the main thread has used up all of the physical registers that it can. When the helper is triggered the registers are allocated between the main thread and the helper dynamically(Studer). | Up to this point only parallel helper threads have been discussed. There are also sequential helper threads. These threads work in a manner similar to slipstream processors, which will be discussed later, except instead of running a separate copy of the same program all of the time, they run what is know as a future thread. While executing, the future thread can resolve branches, prefetch data, and calculate values that will be used by the main thread(Studer). A future thread is triggered when the main thread has used up all of the physical registers that it can. When the helper is triggered the registers are allocated between the main thread and the helper dynamically(Studer). | ||

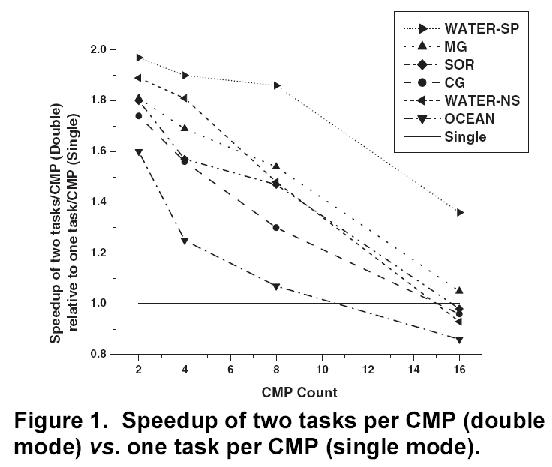

Slipstream processing uses a CMP to run two copies of the same program. On one core the full program is run. On the second core a reduced version of the same program is run. The reduced program is used to construct an accurate view of future memory accesses. The reason all of this is done is to improve overall performance. Two concurrent, parallel, copies of the same program are not run because at some point concurrency does not help. You will eventually hit a performance threshold(Ibrihim,Byrd,Rotenberg). In order to use slipstream mode on a CMP the programmer must use slipstream aware libraries. Slipstreaming is not just automatically turned on in a CMP. When the program is run it is divided into two streams an A-stream and an R-stream. The A-stream is a reduced copy of the program that will start to run ahead of the R-stream, which is a full copy of the program. When used for prefetching the A-stream will prefetch data in to the L2 cache for the R-Stream,which both streams share. | Slipstream processing uses a CMP to run two copies of the same program. On one core the full program is run. On the second core a reduced version of the same program is run. The reduced program is used to construct an accurate view of future memory accesses. The reason all of this is done is to improve overall performance. Two concurrent, parallel, copies of the same program are not run because at some point concurrency does not help. You will eventually hit a performance threshold(Ibrihim,Byrd,Rotenberg). In order to use slipstream mode on a CMP the programmer must use slipstream aware libraries. Slipstreaming is not just automatically turned on in a CMP. When the program is run it is divided into two streams an A-stream and an R-stream. The A-stream is a reduced copy of the program that will start to run ahead of the R-stream, which is a full copy of the program. 
When used for prefetching the A-stream will prefetch data in to the L2 cache for the R-Stream,which both streams share.<br> | ||

[[Image:figure1_slipstream2.jpg]] | |||

Picture credit: Ibrihim,Byrd,Rotenberg | |||

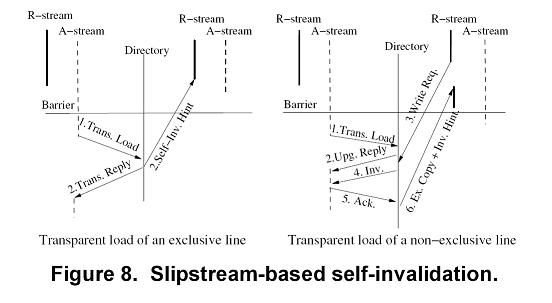

Slipstream processing can also be used to do Self Invalidation. Self invalidation can be used to optimize coherence in a system. Using self invalidation allows the processor to invalidate any of the lines in its local cache before a conflict can occur. By doing this latency due to coherence misses is reduced and overall system performance is increased. However, self invalidation would not be possible without the use of a transparent load. The transparent load is there to prevent premature data migration. Remember the A-stream is running ahead of the R-stream prefetching values. With out the transparent load it is entirely possible that a non coherent value culd be placed into memory and read by the R-stream. | Slipstream processing can also be used to do Self Invalidation. Self invalidation can be used to optimize coherence in a system. Using self invalidation allows the processor to invalidate any of the lines in its local cache before a conflict can occur. By doing this latency due to coherence misses is reduced and overall system performance is increased. However, self invalidation would not be possible without the use of a transparent load. The transparent load is there to prevent premature data migration. Remember the A-stream is running ahead of the R-stream prefetching values. With out the transparent load it is entirely possible that a non coherent value culd be placed into memory and read by the R-stream. | ||

[[Image:figure8_transparentload2.jpg]] | |||

Picture credit: Ibrihim,Byrd,Rotenberg | |||

==Issues using helper threads== | ==Issues using helper threads== | ||

Latest revision as of 17:38, 2 December 2007

Section 3.1 Olukotun book

Helper Threads

A helper thread is a thread that does some of the work in advance of the main thread. This is also called pseudo-parallelization. There are several types of helper threads, including parallel, sequential, and slipstream processing on CMPs. The slipstream method was developed here at NCSU. Depending on how the helper is used, a fair amount of speedup can be attained. However, there is a limit to this speedup, which is discussed in the section on slipstream processing.

Terms

To fully understand this wiki, a few terms need to be defined.

SMT

Simultaneous multithreading - a processor design that combines hardware multithreading and superscalar processor technology to allow multiple threads to issue instructions each cycle. Intel markets its implementation of this technology as Hyper-Threading. In an SMT processor the helper thread is executed using spare hardware contexts, which greatly reduces the complexity of using a helper thread (Studer).

CMP

Chip multiprocessor - two or more identical processors on the same die that work together.

Multithreading

A technique used to run multiple threads at the same time. The programmer must take care to ensure that the threads do not interfere with each other.

Superscalar

A processor architecture that executes more than one instruction per clock cycle by fetching multiple instructions and simultaneously dispatching them to redundant functional units on the processor (Wikipedia). One drawback of this type of processor is that it must be modified in order to use helper threads, as illustrated below.

Picture credit: Studer

Picture credit: Studer

Types of Helper threads

Parallel

A parallel helper thread runs the same code as the main thread. Because of this, parallel helpers are simple to construct; however, they add complexity because hardware must be added to stop them.

Sequential

A helper thread in which an exact copy of the program runs as a “future thread” on the processor whenever the main thread runs out of its allocated set of registers, a condition that signals limited instruction-level parallelism (Studer).

Slipstream Processing

A technique in which a dual-processor CMP runs redundant copies of the same program. A significant number of dynamic instructions are removed from one of the program copies without sacrificing its ability to make correct forward progress (Ibrahim, Byrd, Rotenberg). The two copies are called the A-stream and the R-stream.

Uses of helper threads

The main use of a helper thread is to speed up the execution of a parent thread's tasks. Helper threads can be used for branch prediction, exception handling, and loop execution. They can also be used to handle cache misses; this is especially relevant for sequential integer programs. In general, a helper thread begins execution before a problem instruction in the main thread, performs some work, and then stops (Studer).
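The lifecycle described above (spawn before a problem instruction, perform some work, then stop) can be illustrated with a minimal software sketch. It assumes a hypothetical `slow_load` function standing in for a long-latency operation such as a cache miss, and uses a Python dictionary as a stand-in for the cache that the helper warms. Real helper threads are usually supported by hardware or the compiler, so this only models the control flow, not the performance.

```python
import threading


def slow_load(addr):
    """Hypothetical stand-in for a long-latency operation such as a cache miss."""
    return addr * 2


def run_with_helper(addrs):
    """Main thread sums slow_load(a) over addrs while a helper thread works ahead."""
    cache = {}                  # stand-in for the cache the helper warms
    lock = threading.Lock()
    stop = threading.Event()

    def helper():
        # The helper walks the same addresses and performs the expensive work early.
        for a in addrs:
            if stop.is_set():
                return          # the main thread no longer needs this work
            value = slow_load(a)
            with lock:
                cache[a] = value

    worker = threading.Thread(target=helper)
    worker.start()              # spawn the helper before the problem loop

    total = 0
    hits = 0
    for a in addrs:
        with lock:
            value = cache.get(a)
        if value is None:
            value = slow_load(a)    # the helper has not reached this address yet
        else:
            hits += 1               # the helper's work was ready in time
        total += value

    stop.set()                  # stop the helper once its results are no longer useful
    worker.join()
    return total, hits


total, hits = run_with_helper(list(range(100)))
print(total, hits)  # total is always 9900; hits depends on thread scheduling
```

The main thread never depends on the helper for correctness: if the helper falls behind, the main thread simply does the work itself, which mirrors the idea that a late helper only wastes effort.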

Examples

The text describes two uses for helper threads: branch prediction and prefetching data into on-chip caches. Olukotun covers only briefly how these two operations are done.

Branch prediction is an essential part of today's computing systems. It allows the processor to execute instructions without waiting for a branch to be resolved (Wikipedia). Most current pipelined microprocessors perform some form of branch prediction, since a new instruction must start executing before the current instruction finishes. When a helper thread is used for branch prediction, profiling can be used to determine which branches it should handle; because profiling is done before the program runs, it adds no overhead at runtime. To streamline the helper thread, context is also taken into consideration: the helper is used only when a branch is truly hard to predict (Studer). A branch-prediction helper should be stopped once the results it returns are no longer useful. To judge their usefulness, a vector stores the helper's branch history; when the parent thread deviates from that history, the helper is terminated. Control flow is also used to decide whether to terminate a helper, although divergent control flow only becomes an issue if branches have been removed from the helper thread. This is not an issue on an SMT processor, which can use its normal branch predictor (Studer).

The second example in the text deals with cache prefetching. Cache-prefetching helper threads fetch data and place it in the cache for the main thread, which lets the main thread work more efficiently and improves speedup.
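The branch-history check described above can be sketched as a comparison between the outcomes the helper recorded and the outcomes the parent thread actually resolves. This is a minimal software model that assumes both histories are available as lists of taken/not-taken booleans; the function name `track_branch_helper` is hypothetical and does not describe any specific hardware mechanism.

```python
def track_branch_helper(helper_history, main_outcomes):
    """Compare a helper's precomputed branch history with the main thread's outcomes.

    helper_history: branch outcomes (True = taken) recorded by the helper thread
    main_outcomes: branch outcomes the main (parent) thread actually resolves
    Returns (useful, diverged_at): how many helper outcomes matched before the first
    deviation, and the index of that deviation (None if the histories never diverge).
    """
    useful = 0
    for i, (predicted, actual) in enumerate(zip(helper_history, main_outcomes)):
        if predicted != actual:
            return useful, i        # parent deviated: terminate the helper here
        useful += 1
    return useful, None


print(track_branch_helper([True, True, False, True], [True, True, True, False]))
# (2, 2): the first two outcomes were useful, then the parent deviated at index 2
```

Everything the helper computed after the divergence point is discarded, which is why terminating it immediately saves resources.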

Up to this point only parallel helper threads have been discussed, but there are also sequential helper threads. These work in a manner similar to slipstream processors, which are discussed below, except that instead of running a separate copy of the same program all of the time, they run what is known as a future thread. While executing, the future thread can resolve branches, prefetch data, and calculate values that will be used by the main thread (Studer). A future thread is triggered when the main thread has used up all of the physical registers available to it. Once the helper is triggered, the registers are allocated dynamically between the main thread and the helper (Studer).
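The trigger condition described above can be modeled with a toy register file. This is a hypothetical sketch, not Studer's hardware design: the class name `RegisterFile` and the policy of handing each freed register to whichever thread asks next are assumptions chosen only to show register exhaustion triggering the future thread.

```python
class RegisterFile:
    """Toy physical register file shared by a main thread and a future thread.

    Hypothetical model: the future thread is triggered the first time the main
    thread asks for a register and none is free. After that, freed registers go
    to whichever thread asks next, a simple form of dynamic allocation.
    """

    def __init__(self, size):
        self.free = size
        self.owned = {"main": 0, "future": 0}
        self.future_active = False

    def allocate(self, thread):
        """Try to give one register to `thread`; return True on success."""
        if thread == "future" and not self.future_active:
            raise RuntimeError("the future thread has not been triggered")
        if self.free == 0:
            if thread == "main":
                self.future_active = True   # main thread exhausted its registers
            return False
        self.free -= 1
        self.owned[thread] += 1
        return True

    def release(self, thread):
        """Return one register held by `thread` to the free pool."""
        if self.owned[thread] == 0:
            raise ValueError(f"{thread} holds no registers")
        self.owned[thread] -= 1
        self.free += 1


rf = RegisterFile(4)
print([rf.allocate("main") for _ in range(5)])  # [True, True, True, True, False]
print(rf.future_active)                          # True
rf.release("main")
print(rf.allocate("future"))                     # True
```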

Slipstream processing uses a CMP to run two copies of the same program. The full program runs on one core, and a reduced version of the same program runs on the second core. The reduced program is used to construct an accurate view of future memory accesses. The goal is to improve overall performance: instead of running two separate parallel tasks on the two cores, slipstream mode uses the second core to help the first, because at some point additional concurrency no longer helps and performance hits a threshold (Ibrahim, Byrd, Rotenberg). Slipstream mode is not turned on automatically in a CMP; to use it, the programmer must use slipstream-aware libraries. When the program runs, it is divided into two streams, an A-stream and an R-stream. The A-stream is a reduced copy of the program that runs ahead of the R-stream, which is the full copy. When used for prefetching, the A-stream prefetches data into the L2 cache, which both streams share, for the R-stream.

Picture credit: Ibrihim,Byrd,Rotenberg

Picture credit: Ibrihim,Byrd,Rotenberg
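The A-stream/R-stream prefetching described above can be illustrated with a small trace-driven simulation. It is a sketch under strong simplifying assumptions: the shared L2 is an unbounded set with no evictions, the A-stream stays a fixed number of loads (`lead`) ahead of the R-stream, and a hypothetical `removed` predicate marks which loads the reduced A-stream skips. None of these parameters come from Ibrahim, Byrd, and Rotenberg.

```python
def simulate_slipstream(trace, removed, lead):
    """Count how many R-stream loads hit in the shared L2 cache.

    trace: addresses loaded by the full program (the R-stream), in order
    removed: predicate on a load's index; True if the reduced A-stream skips it
    lead: how many loads the A-stream runs ahead of the R-stream
    The L2 is modeled as an unbounded set, so nothing is ever evicted.
    """
    l2 = set()

    def a_stream_issue(j):
        # The A-stream loads trace[j] into the shared L2 unless it was removed.
        if j < len(trace) and not removed(j):
            l2.add(trace[j])

    for j in range(lead):           # the A-stream starts with a head start
        a_stream_issue(j)

    hits = 0
    for i, addr in enumerate(trace):
        a_stream_issue(i + lead)    # the A-stream stays `lead` loads ahead
        if addr in l2:
            hits += 1               # prefetched (or already loaded): L2 hit
        else:
            l2.add(addr)            # miss: the R-stream fetches the line itself
    return hits


trace = [0, 64, 128, 192]
print(simulate_slipstream(trace, lambda j: j % 2 == 1, lead=1))  # 2
print(simulate_slipstream(trace, lambda j: False, lead=1))       # 4
```

Loads removed from the A-stream are exactly the ones the R-stream misses on, which shows the trade-off: a more reduced A-stream runs further ahead but prefetches less.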

Slipstream processing can also be used to perform self-invalidation, which optimizes coherence in a system. Self-invalidation allows a processor to invalidate lines in its local cache before a conflict can occur. This reduces latency due to coherence misses and increases overall system performance. However, self-invalidation would not be possible without the transparent load, which exists to prevent premature data migration. Remember that the A-stream runs ahead of the R-stream, prefetching values. Without the transparent load, it is entirely possible that a non-coherent value could be placed into memory and read by the R-stream.

Picture credit: Ibrihim,Byrd,Rotenberg

Picture credit: Ibrihim,Byrd,Rotenberg
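The difference between an ordinary load and a transparent load, described above, can be shown with a toy directory model. This is not the protocol from Ibrahim, Byrd, and Rotenberg; it is a hypothetical, greatly simplified sketch (the names `DirectoryEntry`, `ordinary_load`, `transparent_load`, and `self_invalidate` are illustrative) that only shows which state each operation changes.

```python
class DirectoryEntry:
    """Toy directory state for one cache line (hypothetical, greatly simplified)."""

    def __init__(self, value):
        self.value = value
        self.owner = None      # node holding the line exclusively (Modified), if any
        self.sharers = set()   # nodes registered as holding a Shared copy


def ordinary_load(entry, node):
    # A normal read from another node downgrades the exclusive owner, so the
    # line migrates toward the reader even if the owner is still writing it.
    if entry.owner == node:
        return entry.value             # the owner reads its own line
    if entry.owner is not None:
        entry.sharers.add(entry.owner)  # downgrade Modified -> Shared
        entry.owner = None
    entry.sharers.add(node)
    return entry.value


def transparent_load(entry, node):
    # A transparent load returns the data but leaves the owner and the sharer
    # list untouched, so the A-stream's early read causes no migration.
    # `node` is accepted only for symmetry with ordinary_load.
    return entry.value


def self_invalidate(entry, node):
    # The node drops its own copy before another node's request forces it to.
    # (This toy model assumes any dirty data is already in entry.value.)
    if entry.owner == node:
        entry.owner = None
    entry.sharers.discard(node)


line = DirectoryEntry(value=42)
line.owner = 1                   # node 1 is still writing the line
transparent_load(line, 2)        # A-stream peek: node 1 keeps exclusive ownership
print(line.owner, line.sharers)  # 1 set()
ordinary_load(line, 2)           # ordinary read: ownership is given up early
print(line.owner, line.sharers)  # None {1, 2}
```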

Issues using helper threads

One of the most critical issues concerning helper threads is timing. If the helper thread does not finish its branch prediction in time, its work becomes extra computation that the system performed for no reason, and the data is completely useless to the main thread. Another issue is processor complexity: extra hardware must be added to the processor so that the main thread can access the helper's results. With a sequential helper thread, anything the helper does to the cache directly affects the main thread. For this reason, the future (helper) thread cannot store any values in the cache or main memory.
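The timing problem can be stated as a simple rule: a helper result is useful only if it is ready no later than the main thread needs it. The sketch below applies that rule to lists of cycle numbers; the function name and the idea of tagging each result with a ready cycle and a needed cycle are assumptions for illustration.

```python
def classify_helper_results(ready_cycles, needed_cycles):
    """Split helper results into useful and wasted work.

    ready_cycles[i]: cycle at which the helper finishes result i
    needed_cycles[i]: cycle at which the main thread needs result i
    A result is useful only if it is ready no later than it is needed.
    """
    if len(ready_cycles) != len(needed_cycles):
        raise ValueError("each helper result needs a matching use time")
    useful = sum(1 for ready, needed in zip(ready_cycles, needed_cycles) if ready <= needed)
    return useful, len(ready_cycles) - useful


print(classify_helper_results([5, 12, 20], [10, 10, 25]))  # (2, 1)
```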

On an SMT processor, getting initial values into the registers for the helper thread is a problem, and a significant amount of thread-spawn overhead can be attributed to it. SMT processors also need hardware modifications in order to use helper threads. Communicating values to the helper thread can also be difficult. If hardware modifications are not used, programs must be altered in order to use helper threads. However, handling communication programmatically can degrade performance, because the helper requires a large number of values to be passed to it when it is spawned (Studer).
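The spawn-overhead problem can be made concrete with a simple linear cost model in which every value communicated to the helper adds a fixed copy cost on top of a fixed setup cost. The model, the function names, and the default cycle counts are assumptions for illustration, not figures from Studer.

```python
def spawn_cost(live_ins, base_cycles, cycles_per_value):
    """Toy spawn cost: a fixed setup cost plus one copy per value the helper needs."""
    return base_cycles + live_ins * cycles_per_value


def helper_pays_off(live_ins, cycles_saved, base_cycles=20, cycles_per_value=2):
    """True if the cycles the helper saves exceed the cost of spawning it.

    The default cycle counts are placeholders for illustration, not measurements.
    """
    return cycles_saved > spawn_cost(live_ins, base_cycles, cycles_per_value)


print(helper_pays_off(live_ins=10, cycles_saved=100))  # True: 100 > 40
print(helper_pays_off(live_ins=50, cycles_saved=100))  # False: 100 < 120
```

Under this model, the more values a helper needs at spawn time, the more work it must save to be worthwhile, which is why hardware support for copying register values matters.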

References

A Survey of Helper Threads and Their Implementations: Ahern Studer, http://www.ece.rochester.edu/~mihuang/TEACHING/OLD/ECE404_SPRING03/HTsurvey.pdf

Helper Threads via Virtual Multithreading On An Experimental Itanium 2 Processor-based Platform: Perry H. Wang, Jamison D. Collins, Hong Wang, Dongkeun Kim, Bill Greene, Kai-Ming Chan, Aamir B. Yunus, Terry Sych, Stephen F. Moore, and John P. Shen, http://delivery.acm.org/10.1145/1030000/1024411/p144-wang.pdfkey1=1024411&key2=1477195911&coll=GUIDE&dl=GUIDE&CFID=15151515&CFTOKEN=6184618

Slipstream Execution Mode for CMP-Based Multiprocessors: Khaled Z. Ibrahim, Gregory T. Byrd, and Eric Rotenberg, http://www.cs.ucr.edu/~gupta/hpca9/HPCA-PDFs/17-ibrahim.pdf

http://www.cs.washington.edu/research/smt/

http://en.wikipedia.org/wiki/Superscalar

http://en.wikipedia.org/wiki/Branch_prediction