CSC517 OffScreenCanvas Servo

About Servo:

Servo is a parallel web-engine project under Mozilla that aims to make better use of multiple processing units to parse and display web pages faster than conventional browser engines. Servo is implemented in the Rust programming language, which is similar to C and C++ in the low-level control it offers, but is designed for stronger memory safety and safer concurrency.

Servo speeds up web-page rendering through parallel layout, parallel styling, WebRender, and the constellation. In addition, when a thread or a script belonging to one section of a web page fails, the failure does not affect other tabs, the browser, or even other elements of the same page. In this sense, Rust lets the browser "fail better" than other browsers.

Why use Rust?

Algorithms that run multiple threads in parallel are inherently difficult to get right because of synchronization, data sharing, and the other issues that typically arise in parallel designs. C++ makes this even harder because the language offers no compile-time protection against data races or unsafe memory access. The idea behind Rust and Servo is therefore to create a safer replacement for C++ (Rust) and to use it to rewrite a browser engine.
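To make the data-sharing point concrete, here is a minimal sketch in plain Rust (standard library only, unrelated to Servo's code). The function name parallel_count is hypothetical. The shared counter must be wrapped in Arc and Mutex before it can be moved into several threads; handing the threads a plain mutable integer would be rejected by the compiler.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Spawns `workers` threads that each add 1 to a shared counter
/// `increments` times, then returns the final total.
fn parallel_count(workers: usize, increments: u64) -> u64 {
    // Arc shares ownership across threads; Mutex serializes access.
    let counter = Arc::new(Mutex::new(0u64));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..increments {
                    *counter.lock().unwrap() += 1;
                }
            })
        })
        .collect();

    // Wait for every thread before reading the result.
    for handle in handles {
        handle.join().unwrap();
    }

    let total = *counter.lock().unwrap();
    total
}
```

Calling parallel_count(4, 1000) runs four threads and returns the sum of all their increments without any lost updates.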

Before you get started

Off Screen Canvas:

Canvas is a popular way of drawing all kinds of graphics on the screen and an entry point to the world of WebGL. It can be used to draw shapes, images, run animations, or even display and process video content. It is often used to create beautiful user experiences in media-rich web applications and online games. Because canvas logic and rendering happen on the same thread as user interaction, the (sometimes heavy) computations involved in animations can harm the app’s real and perceived performance.

Until now, canvas drawing capabilities were tied to the <canvas> element, which meant they depended directly on the DOM. OffscreenCanvas, as the name suggests, decouples the Canvas API from the DOM by moving it off-screen. Because of this decoupling, rendering of an OffscreenCanvas is fully detached from the DOM and therefore offers some speed improvements over the regular canvas, as there is no synchronization between the two.

Web workers and Offscreen Canvas

Web Workers make it possible to run a script in a background thread, separate from the main execution thread of a web application. The advantage is that laborious processing can be performed in that separate thread, allowing the main (usually the UI) thread to run without being blocked or slowed down. JavaScript can therefore run in the background, independent of other scripts, without affecting the performance of the web page. However, workers are loaded from separate script files and run in their own scope, so they cannot access the window object, the document object, or the parent object. Hence, workers can only communicate with the rendering engine by passing messages.

How, then, is an OffscreenCanvas rendered using web workers? Suppose the task is to render an image in the canvas. The rendering engine creates a worker and sends it the image data that it needs to process. The worker does the required processing and returns the resulting image to the rendering engine. In this way, the image is rendered on a thread that is entirely independent of the web page's main thread.
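The message-passing flow described above can be sketched in plain Rust as an analogy. This is not the Web Worker API and not Servo code: a standard-library thread stands in for the worker, channels stand in for postMessage, and the names render_in_worker and invert_pixels are hypothetical.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for the heavy work a worker would do:
/// inverts every 8-bit channel value of an image buffer.
fn invert_pixels(pixels: Vec<u8>) -> Vec<u8> {
    pixels.into_iter().map(|p| 255 - p).collect()
}

/// Sends `pixels` to a background thread and waits for the processed result.
fn render_in_worker(pixels: Vec<u8>) -> Vec<u8> {
    let (to_worker, worker_inbox) = mpsc::channel::<Vec<u8>>();
    let (to_main, main_inbox) = mpsc::channel::<Vec<u8>>();

    let worker = thread::spawn(move || {
        // The worker shares no state with the main thread;
        // it only reacts to the messages it receives.
        for job in worker_inbox {
            to_main.send(invert_pixels(job)).expect("main thread hung up");
        }
    });

    // Main thread: hand over the data, then collect the result.
    to_worker.send(pixels).expect("worker hung up");
    let result = main_inbox.recv().expect("worker sent no result");

    // Closing the channel ends the worker's receive loop.
    drop(to_worker);
    worker.join().expect("worker panicked");
    result
}
```

The buffer is moved into the worker rather than shared, which mirrors how the image data is handed to the worker and the result is handed back.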

Web IDL

Web IDL is an interface definition language that can be used to describe interfaces that are intended to be implemented in web browsers. It is an IDL variant with:

- A number of features that allow one to more easily describe the behavior of common script objects in a web context.

- A mapping of how interfaces described with Web IDL correspond to language constructs within an ECMAScript execution environment.

Scope:

The scope of the project included:

- Creating the OffscreenCanvas and OffscreenCanvasRenderingContext2D interfaces with stub method implementations.

- Implementing the OffscreenCanvas Constructor [1] that creates a new canvas.

Getting Started

Building Servo

- Testing Servo requires building it locally or using a cloud service such as Janitor.

- Building Servo locally takes approximately 30 minutes to 1 hour, depending on your computer.

- For further steps regarding building servo locally, please visit this.

Verifying Build

- We can verify whether servo has been built by running the following command: ./mach run http://www.google.com

- This command will render the homepage of google in a new browser instance of servo. If this executes correctly, then the build is fine.

- Specific functionality can be tested by writing a custom HTML file and running it in a similar way.

- Note: the command above won't work if you're on Janitor.

What we did

Overview

- Our task was to implement the functionality of OffscreenCanvas.

- Now, since OffscreenCanvas objects are declared and used in JavaScript, but the rendering happens outside the domain of JavaScript, we would need two different objects, one to interact with JavaScript, and the other to handle the rendering, independent of JavaScript.

- The object to interact with JavaScript is OffscreenCanvas, whereas the object which handles rendering is OffscreenCanvasRenderingContext2D.

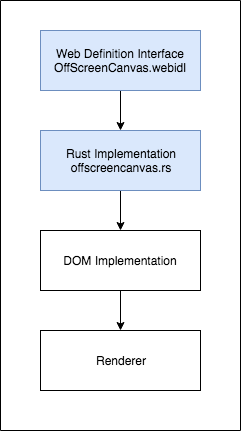

- To implement the functionality, we first created the WebIDL files, which contain the interfaces for the features we need to implement.

- After creating the interfaces, the next step was to implement them. The implementation of each WebIDL file is written in the Rust file (.rs) with the same name. (For example, OffscreenCanvas.webidl -> offscreencanvas.rs)

- The WebIDL files declare the constructor as well as all the methods that the Rust implementation must provide.

- Testing the implemented code is discussed in further sections.
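As a rough illustration of the WebIDL-to-Rust step, the sketch below shows how the width and height attributes of an interface could map onto a Rust trait and a struct that implements it. It is plain Rust with no Servo dependencies: the trait here is hand-written and simplified, whereas in Servo the corresponding trait is generated from the WebIDL file. Because the setters receive only &self, the fields use Cell for interior mutability.

```rust
use std::cell::Cell;

/// Simplified, hand-written stand-in for a trait derived from the
/// WebIDL attributes `width` and `height`.
#[allow(non_snake_case)]
trait OffscreenCanvasMethods {
    fn Width(&self) -> u64;
    fn SetWidth(&self, value: u64);
    fn Height(&self) -> u64;
    fn SetHeight(&self, value: u64);
}

/// Simplified stand-in for the struct defined in offscreencanvas.rs.
struct OffscreenCanvas {
    width: Cell<u64>,
    height: Cell<u64>,
}

impl OffscreenCanvas {
    fn new(width: u64, height: u64) -> Self {
        OffscreenCanvas {
            width: Cell::new(width),
            height: Cell::new(height),
        }
    }
}

#[allow(non_snake_case)]
impl OffscreenCanvasMethods for OffscreenCanvas {
    fn Width(&self) -> u64 {
        self.width.get()
    }

    fn SetWidth(&self, value: u64) {
        // Cell::set mutates through a shared reference.
        self.width.set(value);
    }

    fn Height(&self) -> u64 {
        self.height.get()
    }

    fn SetHeight(&self, value: u64) {
        self.height.set(value);
    }
}
```

With this shape, a call such as canvas.SetWidth(512) followed by canvas.Width() returns the updated value even though the canvas is never borrowed mutably.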

Code Flow

Under the hood

- OffscreenCanvas.webidl: This interface defines what the implementation of OffscreenCanvas must contain. Because it interacts directly with JavaScript, it declares a constructor with two arguments, width and height, mirroring the width and height attributes of a regular HTML canvas element. The code is shown in the Implementation section. It also declares the getContext method, whose argument specifies the type of context: 2d, webgl or webgl2.

- OffscreenCanvasRenderingContext2D.webidl: This is also an interface file; it defines what the implementation of OffscreenCanvasRenderingContext2D should look like. Because it does not interact directly with JavaScript but with the OffscreenCanvas object that we created, it contains a back-reference to that object. It also declares the functionality that its implementing class must provide.

- offscreencanvas.rs: This file implements OffscreenCanvas.webidl. Its constructor follows the requirements of the WebIDL and is shown in the Implementation section below. When JavaScript creates a new OffscreenCanvas, the Constructor method is called, which calls the new method defined in this file, which in turn calls new_inherited. The constructor arguments, namely height and width, together with an optional reference to a placeholder canvas, are stored in the newly created object. The object also contains a reflector, which links the Rust object to its JavaScript wrapper so that the JavaScript garbage collector can manage the object's lifetime correctly. The getContext() method returns a reference to the OffscreenCanvasRenderingContext2D, which is responsible for performing the rendering work in the backend.

- offscreencanvasrenderingcontext2d.rs: This file contains the implementation of OffscreenCanvasRenderingContext2D. Since it is not created directly from JavaScript, it has no JavaScript-visible constructor; objects are created only through the new method called from offscreencanvas.rs. The functionality declared in the interface for this file has to be implemented here, but that is beyond the scope of our current project. For now, this file contains only the code needed to build an OffscreenCanvas object successfully. The remaining functionality is likely to be implemented in our subsequent project.
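The relationship between the two objects (a canvas that lazily creates and caches its context, and a context that keeps a back-reference to its canvas) can be sketched in plain Rust as follows. This is an illustration only: Rc and Weak are stand-ins for Servo's garbage-collected DOM references, and the type names Canvas, Context2D and RenderingContext are hypothetical simplifications.

```rust
use std::cell::RefCell;
use std::rc::{Rc, Weak};

/// Stand-in for the 2D rendering context.
struct Context2D {
    // Back-reference to the owning canvas. Weak avoids a reference cycle
    // in this sketch; Servo relies on its garbage collector instead.
    canvas: Weak<Canvas>,
}

impl Context2D {
    fn canvas(&self) -> Option<Rc<Canvas>> {
        self.canvas.upgrade()
    }
}

enum RenderingContext {
    Context2D(Rc<Context2D>),
    #[allow(dead_code)]
    WebGL, // placeholder for a context type this sketch does not model
}

/// Stand-in for the canvas object that JavaScript interacts with.
struct Canvas {
    context: RefCell<Option<RenderingContext>>,
}

impl Canvas {
    fn new() -> Rc<Canvas> {
        Rc::new(Canvas { context: RefCell::new(None) })
    }

    /// Returns the cached 2D context, creating it on first use.
    /// Returns None if a different kind of context already exists.
    fn get_or_init_2d_context(self: &Rc<Self>) -> Option<Rc<Context2D>> {
        if let Some(existing) = self.context.borrow().as_ref() {
            return match existing {
                RenderingContext::Context2D(ctx) => Some(Rc::clone(ctx)),
                _ => None,
            };
        }
        let ctx = Rc::new(Context2D { canvas: Rc::downgrade(self) });
        *self.context.borrow_mut() = Some(RenderingContext::Context2D(Rc::clone(&ctx)));
        Some(ctx)
    }
}
```

Calling get_or_init_2d_context twice on the same canvas yields the same context object, and the context's canvas() method leads back to the canvas that created it.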

Implementation

NOTE

The elements of the code shown here are explained in detail in the section What we did -> Under the hood.

OffScreenCanvas.webidl

    typedef (OffscreenCanvasRenderingContext2D or WebGLRenderingContext or WebGL2RenderingContext) OffscreenRenderingContext;

    dictionary ImageEncodeOptions {
        DOMString type = "image/png";
        unrestricted double quality = 1.0;
    };

    enum OffscreenRenderingContextId { "2d", "webgl", "webgl2" };

    [Constructor([EnforceRange] unsigned long long width, [EnforceRange] unsigned long long height), Exposed=(Window,Worker), Transferable]
    interface OffscreenCanvas : EventTarget {
        attribute [EnforceRange] unsigned long long width;
        attribute [EnforceRange] unsigned long long height;

        OffscreenRenderingContext? getContext(OffscreenRenderingContextId contextId, optional any options = null);
        ImageBitmap transferToImageBitmap();
        Promise<Blob> convertToBlob(optional ImageEncodeOptions options);
    };
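The [EnforceRange] annotation on width and height means that out-of-range values are rejected instead of being wrapped or clamped. The sketch below illustrates that conversion for unsigned long long in plain Rust, as a reading of the Web IDL integer conversion rules and not Servo's generated binding code. The names enforce_range_u64 and TypeError are hypothetical.

```rust
/// Largest value accepted for `unsigned long long` under [EnforceRange]:
/// 2^53 - 1, the largest integer a JavaScript number represents exactly.
const MAX_SAFE_INTEGER: f64 = 9_007_199_254_740_991.0;

/// Hypothetical stand-in for the TypeError thrown to JavaScript.
#[derive(Debug, PartialEq)]
struct TypeError;

/// Converts a JavaScript number to u64, rejecting values that are not
/// finite or that fall outside 0..=2^53-1 after truncation.
fn enforce_range_u64(value: f64) -> Result<u64, TypeError> {
    // NaN and the infinities are never valid.
    if !value.is_finite() {
        return Err(TypeError);
    }
    // Drop the fractional part (round toward zero).
    let truncated = value.trunc();
    if truncated < 0.0 || truncated > MAX_SAFE_INTEGER {
        return Err(TypeError);
    }
    Ok(truncated as u64)
}
```

Under these rules, new OffscreenCanvas(256.9, 256) would produce a width of 256, while a negative width would raise an error before the constructor body runs.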

OffScreenCanvasRenderingContext2D.webidl

    [Exposed=Window]
    interface OffscreenCanvasRenderingContext2D {
        // back-reference to the canvas
        readonly attribute OffscreenCanvas canvas;
    };

    OffscreenCanvasRenderingContext2D implements CanvasState;
    OffscreenCanvasRenderingContext2D implements CanvasTransform;
    OffscreenCanvasRenderingContext2D implements CanvasCompositing;
    OffscreenCanvasRenderingContext2D implements CanvasImageSmoothing;
    OffscreenCanvasRenderingContext2D implements CanvasFillStrokeStyles;
    OffscreenCanvasRenderingContext2D implements CanvasShadowStyles;
    OffscreenCanvasRenderingContext2D implements CanvasFilters;
    OffscreenCanvasRenderingContext2D implements CanvasRect;
    OffscreenCanvasRenderingContext2D implements CanvasDrawPath;
    OffscreenCanvasRenderingContext2D implements CanvasUserInterface;
    OffscreenCanvasRenderingContext2D implements CanvasText;
    OffscreenCanvasRenderingContext2D implements CanvasDrawImage;
    OffscreenCanvasRenderingContext2D implements CanvasImageData;
    OffscreenCanvasRenderingContext2D implements CanvasPathDrawingStyles;
    OffscreenCanvasRenderingContext2D implements CanvasTextDrawingStyles;
    OffscreenCanvasRenderingContext2D implements CanvasPath;

offscreencanvasrenderingcontext2d.rs

    #[dom_struct]
    pub struct OffscreenCanvasRenderingContext2D {
        reflector_: Reflector,
        canvas: Option<Dom<OffscreenCanvas>>,
    }

    impl OffscreenCanvasRenderingContext2D {
        pub fn new_inherited(canvas: Option<&OffscreenCanvas>) -> OffscreenCanvasRenderingContext2D {
            OffscreenCanvasRenderingContext2D {
                reflector_: Reflector::new(),
                canvas: canvas.map(Dom::from_ref),
            }
        }

        pub fn new(canvas: Option<&OffscreenCanvas>) -> DomRoot<OffscreenCanvasRenderingContext2D> {
            reflect_dom_object(Box::new(OffscreenCanvasRenderingContext2D::new_inherited(canvas)), OffscreenCanvasRenderingContext2DWrap)
        }
    }

offscreencanvas.rs

    use std::cell::Cell;

    pub enum OffscreenRenderingContext {
        Context2D(Dom<OffscreenCanvasRenderingContext2D>),
        WebGL(Dom<WebGLRenderingContext>),
        WebGL2(Dom<WebGL2RenderingContext>),
    }

    #[dom_struct]
    pub struct OffscreenCanvas {
        reflector_: Reflector,
        // Cell provides interior mutability: the setters below only receive &self.
        height: Cell<u64>,
        width: Cell<u64>,
        context: DomRefCell<Option<OffscreenRenderingContext>>,
        placeholder: Option<Dom<HTMLCanvasElement>>,
    }

    impl OffscreenCanvas {
        pub fn new_inherited(height: u64, width: u64, placeholder: Option<&HTMLCanvasElement>) -> OffscreenCanvas {
            OffscreenCanvas {
                reflector_: Reflector::new(),
                height: Cell::new(height),
                width: Cell::new(width),
                context: DomRefCell::new(None),
                placeholder: placeholder.map(Dom::from_ref),
            }
        }

        pub fn new(global: &GlobalScope, height: u64, width: u64, placeholder: Option<&HTMLCanvasElement>) -> DomRoot<OffscreenCanvas> {
            reflect_dom_object(Box::new(OffscreenCanvas::new_inherited(height, width, placeholder)), global, OffscreenCanvasWrap)
        }

        // The arguments arrive in WebIDL order: width first, then height.
        pub fn Constructor(global: &GlobalScope, width: u64, height: u64) -> Fallible<DomRoot<OffscreenCanvas>> {
            // Step 1: create the object. new() stores height and width
            // and leaves both the placeholder and the context unset.
            let offscreencanvas = OffscreenCanvas::new(global, height, width, None);

            // Step 2: a freshly created canvas must not have a context yet.
            if offscreencanvas.context.borrow().is_some() {
                return Err(Error::InvalidState);
            }

            // Step 3: return the new canvas.
            Ok(offscreencanvas)
        }

        pub fn context(&self) -> Option<Ref<OffscreenRenderingContext>> {
            ref_filter_map::ref_filter_map(self.context.borrow(), |ctx| ctx.as_ref())
        }

        fn get_or_init_2d_context(&self) -> Option<DomRoot<OffscreenCanvasRenderingContext2D>> {
            // Reuse an existing 2D context; any other context type yields None.
            if let Some(ctx) = self.context() {
                return match *ctx {
                    OffscreenRenderingContext::Context2D(ref ctx) => Some(DomRoot::from_ref(ctx)),
                    _ => None,
                };
            }

            // First request: create the context with a back-reference to this canvas and cache it.
            let context = OffscreenCanvasRenderingContext2D::new(Some(self));
            *self.context.borrow_mut() = Some(OffscreenRenderingContext::Context2D(Dom::from_ref(&*context)));
            Some(context)
        }
    }

    impl OffscreenCanvasMethods for OffscreenCanvas {
        #[allow(unsafe_code)]
        unsafe fn GetContext(&self, _cx: *mut JSContext, contextID: DOMString, _options: HandleValue) -> Option<OffscreenCanvasRenderingContext2DOrWebGLRenderingContextOrWebGL2RenderingContext> {
            if contextID == "2d" {
                // Wrap the 2D context in the union type expected by the binding.
                return self.get_or_init_2d_context()
                    .map(OffscreenCanvasRenderingContext2DOrWebGLRenderingContextOrWebGL2RenderingContext::OffscreenCanvasRenderingContext2D);
            }
            // "webgl" and "webgl2" are not handled yet.
            None
        }

        fn Width(&self) -> u64 {
            self.width.get()
        }

        fn SetWidth(&self, value: u64) {
            self.width.set(value);
        }

        fn Height(&self) -> u64 {
            self.height.get()
        }

        fn SetHeight(&self, value: u64) {
            self.height.set(value);
        }
    }

Test Plan

The changes we introduced can be tested in two ways.

- The first and recommended approach is to enable the existing automated tests for the OffscreenCanvas feature. We enabled them with the following steps:

1. Added the offscreen-canvas directory to tests/wpt/include.ini

2. Added a __dir__.ini file to tests/wpt/metadata/offscreen-canvas which enables the new preference

Enabling the tests in this way and then updating the expected test results gave us the desired results.

- The second approach is to write your own test cases. Sample HTML code for testing a rendered OffscreenCanvas is given below:

    <canvas id="testCanvas"></canvas>
    <script>
      // The visible canvas only displays bitmaps that were produced elsewhere.
      var testCanvas = document.getElementById("testCanvas").getContext("bitmaprenderer");
      var offscreen = new OffscreenCanvas(256, 256);
      var ctx = offscreen.getContext("2d");
      // Any custom commands to draw something on the canvas go here (or leave it as it is for the sake of testing).
      var bitmapOne = offscreen.transferToImageBitmap();
      testCanvas.transferFromImageBitmap(bitmapOne);
    </script>

We followed the first approach: we test the rendering of OffscreenCanvas with the help of the existing test cases already provided to us.

Pull Request

The pull request used to incorporate our changes upstream is available here [2].

References:

1. https://research.mozilla.org/servo-engines/

2. https://research.mozilla.org/rust/

3. https://hacks.mozilla.org/2017/05/quantum-up-close-what-is-a-browser-engine/