CSC/ECE 517 Fall 2017/E17A7 Allow Reviewers to bid on what to review

This page describes project E17A7, one of several projects that enable Expertiza to support a conference. Specifically, it adds the ability for conference paper reviewers to bid on the papers they want to review. The members of this project are:

Leiyang Guo (lguo7@ncsu.edu)

Bikram Singh (bsingh8@ncsu.edu)

Navin Venugopal (nvenugo2@ncsu.edu)

Sathwick Goparapu (sgopara@ncsu.edu)

Introduction

Expertiza is an open source project built with Ruby on Rails. It is primarily used to create reusable learning objects through peer review, and it also supports team projects. Expertiza allows the creation of instructor and student accounts. Instructors can post projects (student learning objects), which students can view and work on; students can later peer review them.

Background of the project

Expertiza already supports bidding on topics, but only in one situation: (teams of) students bidding on project topics in a course. The instructor posts a list of project topics, and each student (or a team, if some students have already formed one) submits a preference list of the topics they want to work on. The bidding algorithm then assigns the topics with the following properties:

- Students who are not in a team and have similar preferences are placed in the same project team, and their common preference becomes the team's topic. For existing teams, the topic is assigned according to the team's common preference list

- Each team is assigned only one topic

- Each topic (if assigned) is assigned to a maximum of one team

Description of project

This project covers the bidding algorithm for a conference, which differs significantly from the project bidding algorithm explained above.

For the purposes of the project, we assume that a conference has several reviewers who review the papers proposed for presentation at the conference, and that the complete list of proposed papers is available.

The project then assumes the following workflow:

- Before the bidding deadline, reviewers submit a list of papers they wish to review.

- After the bidding deadline, the algorithm assigns papers to reviewers to review, such that:

- Each paper (if assigned) is assigned to a maximum of R reviewers (here R represents some constant)

- Each reviewer is assigned a maximum of P papers to review (here P represents some constant)

- Assignment of papers can be individual or team based

Project Requirements

In this section, we discuss the problem statement, then discuss the existing code and possible changes required.

Problem Statement

- To take the existing bidding code in Expertiza (which is meant for students bidding for project topics) and make it callable either for bidding for topics or bidding for submissions to review.

- The matching algorithm is currently not very sophisticated. Top trading cycles is implemented in the web service (though it is currently not used by bidding assignment) and could be adapted to this use.

In a subsequent discussion with the mentor, we concluded that the two bidding situations are very different, so we decided to keep them separate, at least initially. The second requirement was also modified: first implement bidding for a conference with any working algorithm and, if time permits, switch to a better algorithm such as top trading cycles.

Current Project Aims

- To develop code that both applications (bidding for project teams and bidding for conference paper reviews) can share

- To improve the algorithm for calculating the score for a particular project topic/conference paper review assigned to a project team/conference reviewer

- To ensure that the topic assignment algorithm assigns topics in a balanced manner in the case no reviewer has bid for any topic

- To add a database variable that distinguishes a project topic from a reviewer topic

We are not responsible for developing other conference features; in particular, we are not changing any UI. We rely primarily on the UI changes made by project E17A5.

Existing Algorithm

Problem

The existing algorithm solves the following problem:

To form teams of students of maximum size M and then assign topics (there are N topics) based on preference lists submitted either by individual students or by (complete or incomplete) teams. Each preference list contains between 0 and L topics. The topics are then allocated so that each team gets a unique topic and each topic is assigned to only one team.

Functionality

The topics are assigned to project teams in 2 steps:

- Making teams: This is done using k-means clustering and a weighting formula that favours increasing overall student satisfaction and adding members until the maximum allowable team size is reached.

- The basic cost function used in the algorithm is: D(i,j) = 1/N * ∑ [ M - U(i) ] where i varies from 1 to N

- The algorithm calculates weights for every user who is not in a team or is part of an incomplete team. It then forms teams using these weights by plotting a graph for each topic, as follows:

- Draw a graph for every topic j with the priority position i on the X-axis and the weights on the Y-axis.

- Use hierarchical k-means to select teams such that all students in a team are close to each other in the graph above, ideally towards the left of the graph (higher priorities), and each team has between 1 and M students.

- The weights are computed as follows:

For every topic j :

For every position i that topic j can be placed in (any) preference list:

Let U(i) = number of students who selected topic j at position i of their (individual) preference list

E(i) = 1/U(i)

Calculate D(i,j)

Weight(i,j) = E(i) / D(i,j)

- Assigning topics to teams: This is implemented as a single line of code that compares bid preferences to allot topics.

Design

In the following subsections, we discuss the problem, the proposed design and the code. Our aim is to combine the code so that it can be used both for project topic bidding and for conference paper review bidding.

The Combined Problem

The combined problem statement is as follows: given a list of people (students or reviewers) and a list of N items to bid on (project topics or conference paper reviews), we require the following:

- To form teams of maximum size M (M is a positive integer)

- Based on preference lists submitted by people/teams having a minimum of 0 items and a maximum of L items, to allot items to teams such that each team gets at most P items and each item (to be bid on) is assigned to at most R teams.

Points of Discussion

We note the differences:

- For bidding on assignment topics, M is generally greater than 1, but P = 1 and R = 1. The items to be bid on are project topics.

- For bidding on conference paper reviews, M >= 1, but P and R are generally greater than 1. The items to be bid on are conference paper reviews.

We note that a team of 1 person makes little sense, but we still support it so that the code is compatible with both applications.
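The two constraints of the combined problem can be made concrete with a small check. The following is a minimal Ruby sketch, not Expertiza code. It assumes an assignment is represented as an array of [team, item] pairs, and the method name valid_assignment? is hypothetical.

```ruby
# Hypothetical checker for the combined problem: every team gets at most
# max_items_per_team items (P) and every item goes to at most max_teams_per_item teams (R).
# An assignment is assumed to be an Array of [team, item] pairs.
def valid_assignment?(pairs, max_items_per_team, max_teams_per_item)
  items_per_team = Hash.new(0)
  teams_per_item = Hash.new(0)
  pairs.each do |team, item|
    items_per_team[team] += 1
    teams_per_item[item] += 1
  end
  items_per_team.values.all? { |n| n <= max_items_per_team } &&
    teams_per_item.values.all? { |n| n <= max_teams_per_item }
end

# Topic bidding: P = 1, R = 1
puts valid_assignment?([[1, :a], [2, :b]], 1, 1)          # true
puts valid_assignment?([[1, :a], [2, :a]], 1, 1)          # false: topic :a has two teams
# Conference review bidding: P = 2, R = 2
puts valid_assignment?([[1, :a], [1, :b], [2, :a]], 2, 2) # true
```

The same check covers both applications. Only the values of P and R change.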

Proposed Design

Keeping the existing algorithm and the project requirements in mind, we decided to divide the code into 2 parts:

- Part A: Make teams for people not in a team (and if applicable, complete incomplete teams)

- Part B: Assign topics to teams

We note that Part A has been implemented as a web service independent of Expertiza. The algorithm explained above applies only to Part A and is fairly sophisticated, so we keep the existing code for this part as is. We simply call the web service with the list of people, their bidding preferences and the max_team_size.

Part B is implemented as a single, fairly simple line of code, and it cannot be used for conference paper review assignment. Hence we replace this section completely and implement it with a new algorithm.

Changes in Part B of the Design

In the two-part design proposed above, the major changes are in Part B. These are discussed below:

Proposed Score Calculation Algorithm

```
Let N = number of topics
    B = base score
    t = total number of topics a user prefers

For every topic:
  for every user:
    t = number of topics in this user's preference list
    den = find_den(t)
    if (user has preferred no topic)
      score[topic][user] = 1 / B
    else if (user has preferred this topic)
      r = priority(topic)
      num = (N + 1 - r) * B * N
      score[topic][user] = 1 / (num/den + B)
    else (user has not preferred this topic)
      num = B * N
      score[topic][user] = 1 / (B - num/den)

where find_den(t) = ∑ [ N + 1 ] - ∑ [k], with k varying from 1 to t,
that is, find_den(t) = t(N + 1) - t(t + 1)/2
```

Meaning of the Scores

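- Each user gets a separate score for every topic available for bidding, derived from the user's submitted preference list. Lower scores mean stronger preferences: since num/den > 0, a preferred topic scores below 1/B, a user with no preferences scores exactly 1/B for every topic, and a topic the user did not prefer scores above 1/B.

- Two users get an identical set of scores only when their preference lists are identical. For example, even if user 2's list contains all of user 1's topics in the same order except the last one, every topic still receives different scores for the two users.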
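The score calculation can be sketched in plain Ruby. This is an illustration under stated assumptions, not the lottery controller code. A preference list is assumed to be an array of topic ids with the highest priority first, B is passed as a Float, and find_den and bid_score are hypothetical names. The sketch computes den per user because it depends on that user's t.

```ruby
# den = sum over k = 1..t of (N + 1 - k) = t(N + 1) - t(t + 1)/2
def find_den(n, t)
  (1..t).reduce(0) { |acc, k| acc + (n + 1 - k) }
end

# Score of one topic for one user (lower score = stronger preference).
# preferences: Array of topic ids, highest priority first (assumed format)
# n: number of topics (N); base: base score (B) as a Float
def bid_score(preferences, topic, n, base)
  t = preferences.length
  return 1.0 / base if t.zero? # user has preferred no topic

  den = find_den(n, t).to_f
  position = preferences.index(topic)
  if position
    r = position + 1 # priorities are 1-based
    num = (n + 1 - r) * base * n
    1.0 / (num / den + base)
  else
    num = base * n
    # when t == 1, den == n and base - num / den == 0.0,
    # so Float division yields Infinity for the user's other topics
    1.0 / (base - num / den)
  end
end

n = 3
base = 1.0
prefs = [2, 1] # topic 2 first, then topic 1
(1..n).each { |topic| puts "topic #{topic}: #{bid_score(prefs, topic, n, base)}" }
```

In this example, topic 2 gets the lowest score, topic 1 a slightly higher one, and the unpreferred topic 3 a score above 1/B. The formula divides by zero when a user lists exactly one topic. The sketch relies on Ruby's Float Infinity so that those topics still sort last.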

Assignment of Topics

- All scores are sorted in ascending order, so the strongest preferences come first.

- For the following values:

n1 = total number of teams

n2 = total number of topics

n3 = P

n4 = R

where the meanings of R and P are as above

- Then calculate m1 = floor (n1 * n3 / n2)

- Calculate m2 = floor (n2 * n4 / n1)

- The topics are assigned in this order of scores, with the restriction that a topic is assigned to at most R reviewers and each reviewer gets at most min(P, m1) topics, provided that the score of the bid is less than the base score

- The topics are assigned in this order of scores, with the restriction that a reviewer is assigned at most P topics and each topic gets at most min(R, m2) reviewers, provided that the score of the bid is less than the base score

- When the bid score is greater than the base score, the normal limits (P and R) apply

- This last modification balances the assignment of topics when no team has submitted a preference list. In that case, it tries to assign topics so that roughly the same number of reviewers review each topic.

Design Methodology

The design methodologies we will follow while writing the code are discussed here.

- We will implement this new algorithm such that the code is DRY

- Naming conventions: the new variable that differentiates between project topics and conference topics is named is_conference?

- Refactoring methods: the method we are working on is very long. We will refactor it into several smaller methods of around 20 lines each, so that each method fits on one screen

- No hard-coded data assignments

- Use of new database objects if required

- Understanding and using existing database columns, even those that are not used in the current situation. We apply this to the conference_slots entry

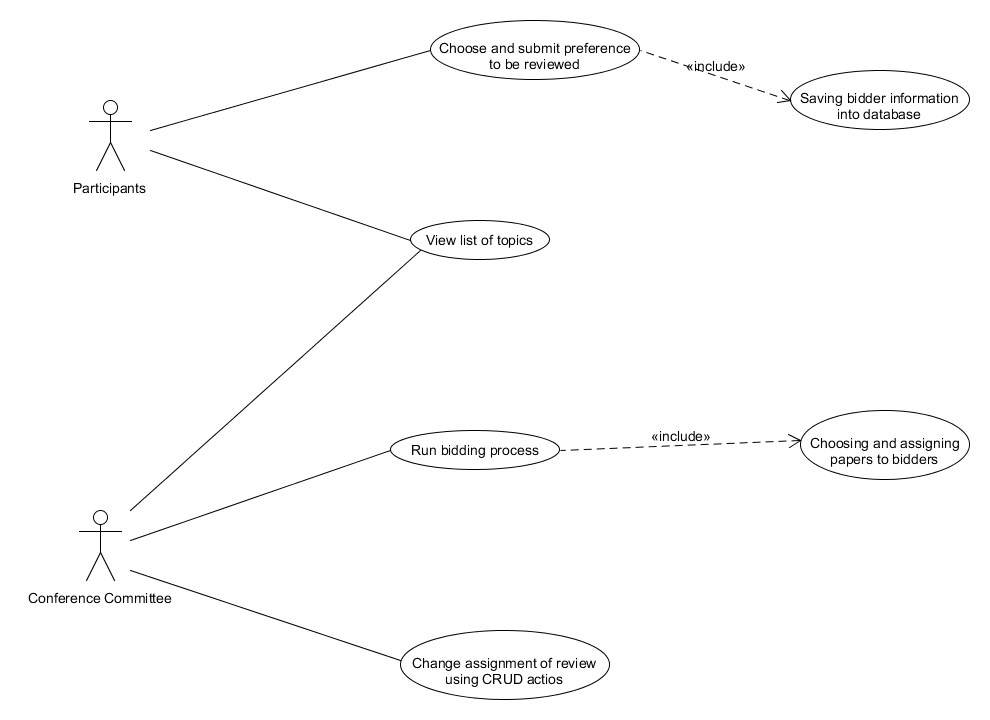

Use Case Diagram

Actors:

- Conference Reviewer: Submits a preference list of papers and reviews assigned papers

- Conference Administrator: Responsible for the assignment of topics to the reviewers

Scenario: Reviewers submit a list of preferred topics, and the administrator runs the assignment process. For our project, the main modifications concentrate on Use Cases 4 and 5.

Choose and submit preference to be reviewed

- Use Case Id: 1

- Use Case Description: Participants choose the preference for conference review topics and submit it

- Actors: Participants

- Pre Conditions: Conference papers are presented and submitted, and the participants are eligible for reviewing

- Post Conditions: Conference committee members can view participants' preferences and run the bidding algorithm on them

Saving topic and participant information into database

- Use Case Id: 2

- Use Case Description: Bidding preferences and related participant information are processed and saved to the database

- Actors: None

- Triggered by Use Case 1

- Pre Conditions: Participants' preferences have been submitted

- Post Conditions: The information can be retrieved and used by the bidding algorithm

View list of topics and bid

- Use Case Id: 3

- Use Case Description: Participants can view the list of conference paper topics available for bidding

- Actors: Participants

Run bidding Algorithm

- Use Case Id: 4

- Use Case Description: Committee members can run the bidding algorithm in the application to help assign conference paper topics to participants

- Actors: Conference Committee

- Pre Conditions: Preferences must have been submitted by participants

- Post Conditions: the bidding result can be used for paper assignment

Display assigned paper to bidders

- Use Case Id: 5

- Use Case Description: System assigns participants to conference paper topics according to bidding result

- Actors: None

- Triggered by Use Case 4

- Pre Conditions: bidding algorithm has run and result has been returned

- Post Conditions: Participants can view the topics assigned to them

Change assignment of review using CRUD actions

- Use Case Id: 6

- Use Case Description: Conference committee members can change assignment result manually

- Actors: Conference Committee

- Pre Conditions: topic assignment has been done

- Post Conditions: Changes to the bidding result are visible to participants and other committee members

Data Flow Diagram

Below is the Data Flow Diagram for the process flow of the project. It shows the bidding algorithm process that we propose to use for conference paper review assignment.

We explain it in terms of the two actors, Reviewer and Administrator:

- Before the deadline, all reviewers have to submit a preference list of papers. They can save and modify the list as many times as they want, but they can submit it only once

- This information is saved in the database

- Once the deadline has passed, stop accepting preference lists

- The administrator runs the paper assignment algorithm

- For every topic, calculate the score for each user

- Make a common score list that holds the score of every topic for every user. It must be possible to find out which topic and user each element of the score list belongs to

- Now start assigning topics in order of preference, i.e. in ascending order of score (a lower score means a stronger preference)

- Keep track of the number of topics assigned to each user and the number of users assigned to each topic

- If the number of topics assigned to one user reaches the maximum value, remove that user from consideration (remove all scores corresponding to that user from score list)

- If the number of users assigned to one topic reaches the maximum value, remove that topic from consideration (remove all scores corresponding to that topic from score list)

- Continue the above process until the score list is empty

- Save the bidding results to the database

- Inform users about the results of the bidding
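The assignment loop in the data flow can be sketched as a greedy pass over the score list. This is a minimal sketch, not the Expertiza implementation. It assumes the score list holds [score, user, topic] triples, that a lower score means a stronger preference (consistent with the ascending sort under Assignment of Topics), and that P and R are plain integers. The method name greedy_assign and the sample scores are made up for illustration.

```ruby
# Greedy assignment over a score list of [score, user, topic] triples.
# max_topics_per_user is P and max_users_per_topic is R (plain Integers, assumed).
def greedy_assign(scores, max_topics_per_user, max_users_per_topic)
  topics_per_user = Hash.new(0)
  users_per_topic = Hash.new(0)
  assignment = []
  # ascending score; ties keep their original order (first come, first served)
  ordered = scores.each_with_index.sort_by { |entry, index| [entry[0], index] }.map(&:first)
  ordered.each do |_score, user, topic|
    # skipping a saturated user or topic has the same effect as
    # removing all of its remaining scores from the list
    next if topics_per_user[user] >= max_topics_per_user
    next if users_per_topic[topic] >= max_users_per_topic

    assignment << [user, topic]
    topics_per_user[user] += 1
    users_per_topic[topic] += 1
  end
  assignment
end

# made-up scores for two reviewers and three papers
scores = [
  [0.36, :alice, :paper1], [0.45, :alice, :paper2], [2.5, :alice, :paper3],
  [0.36, :bob, :paper1], [2.5, :bob, :paper2], [0.45, :bob, :paper3]
]
p greedy_assign(scores, 1, 1) # [[:alice, :paper1], [:bob, :paper3]]
```

Skipping saturated entries avoids rebuilding the list after every assignment. The index tie-break keeps the order stable, because Ruby's sort_by makes no stability guarantee.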

Log: Key Changes

We extended the bidding code in the lottery controller and created a separate type of assignment in the database using a new column "is_conference?". In addition, we defined a new bid score model. We discuss these changes in the following subsections:

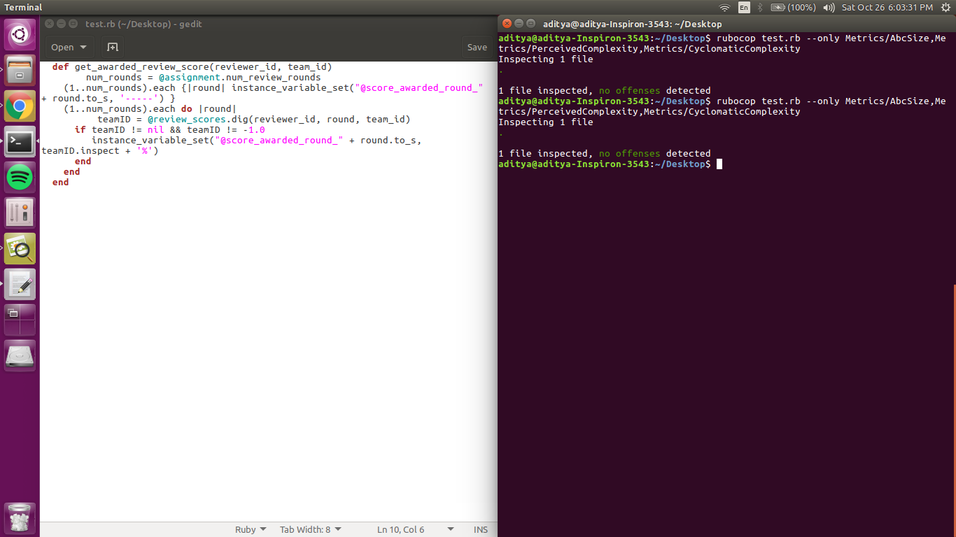

Method Refactoring

- Files Changed: lottery_controller.rb

- Description of Change: Previously, lottery_controller.rb consisted of a single 50-line method, which was hard to read. We divided it into several smaller methods

New Assignment Type

- The conference "assignment" must be compatible with the rest of Expertiza, yet distinguishable from a course assignment. To achieve this, we created a new column "is_conference?" in the assignments table

- This column is a boolean; if it is true, the assignment is a conference assignment

- We created a migration for it

- Code:

```ruby
class AddIsConferenceToAssginment < ActiveRecord::Migration
  def change
    # defaults to false, so existing course assignments are unaffected
    add_column :assignments, :is_conference?, :boolean, default: false
  end
end
```

New Bid Object Model

Following the design methodology, we defined a new model to store the score list of bids along with the topic and team ids.

- Files created: bid_score.rb

- Code:

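```ruby
# Plain Ruby model (no database table) holding one entry of the score list
class BidScore
  include ActiveModel::Validations
  include ActiveModel::Conversion
  extend ActiveModel::Naming

  attr_accessor :score, :team_id, :topic_id

  # every entry needs a score, a team and a topic
  validates_presence_of :score, :team_id, :topic_id

  def initialize(score, team_id, topic_id)
    @score = score
    @team_id = team_id
    @topic_id = topic_id
  end

  # BidScore objects are never saved to the database
  def persisted?
    false
  end
end
```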

Forming teams even for conference reviewers

- We decided to run Part A for conference reviewers as well, so reviewers can also form teams.

- Files Changed: lottery_controller.rb

- Code:

```ruby
def run_intelligent_assignment
  priority_info = []
  assignment = Assignment.find_by(id: params[:id])
  topics = assignment.sign_up_topics
  teams = assignment.teams
  teams.each do |team|
    # grab student id and list of bids
    bids = []
    topics.each do |topic|
      bid_record = Bid.find_by(team_id: team.id, topic_id: topic.id)
      # 0 means the team did not bid on this topic
      bids << (bid_record.nil? ? 0 : bid_record.priority || 0)
    end
    # skip teams that bid on nothing
    team.users.each { |user| priority_info << { pid: user.id, ranks: bids } if bids.uniq != [0] }
  end
  # Part A: the web service forms teams from the bids and max_team_size
  data = { users: priority_info, max_team_size: assignment.max_team_size }
  url = WEBSERVICE_CONFIG["topic_bidding_webservice_url"]
  response = RestClient.post url, data.to_json, content_type: :json, accept: :json
  teams = JSON.parse(response)["teams"]
  create_new_teams_for_bidding_response(teams, assignment)
  # Part B: assign topics with the algorithm for this assignment type
  if assignment.is_conference?
    run_conference_bid assignment
  else
    begin
      run_intelligent_bid(assignment)
    rescue => err
      flash[:error] = err.message
    end
  end
  redirect_to controller: 'tree_display', action: 'list'
end
```
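The request that run_intelligent_assignment sends to the web service can be reproduced without ActiveRecord. The following plain-Ruby sketch builds the same { users, max_team_size } payload shape as the controller. It assumes each team is a Hash with member ids and a topic_id => priority map, and the method name build_bidding_payload is hypothetical.

```ruby
require 'json'

# Hypothetical stand-in for the controller's payload construction.
# teams: Array of { user_ids: [...], bids: { topic_id => priority } } (assumed format)
def build_bidding_payload(teams, topic_ids, max_team_size)
  users = []
  teams.each do |team|
    # one rank per topic, in topic order; 0 means "no bid", as in the controller
    ranks = topic_ids.map { |topic_id| team[:bids].fetch(topic_id, 0) }
    next if ranks.uniq == [0] # teams that bid on nothing are not sent
    team[:user_ids].each { |uid| users << { pid: uid, ranks: ranks } }
  end
  { users: users, max_team_size: max_team_size }
end

teams = [
  { user_ids: [11, 12], bids: { 1 => 2, 3 => 1 } },
  { user_ids: [13], bids: {} }
]
puts build_bidding_payload(teams, [1, 2, 3], 3).to_json
```

Every member of a team carries the team's full rank vector. User 13 has no bids, so that user is not sent.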

Use of Conference_slot variable

Besides the variables we defined for the new functionality, we also use the existing conference_slots option to control the assignment of topics to reviewers.

- Code Changes: given in the next subsection

Balancing of Assignment of Topics to Reviewers

- This was discussed above

- Basically, when some or all of the teams have no preferences, these teams should be assigned topics such that each topic has roughly the same number of reviewers.

- Imbalance can arise in two cases: there are many more teams than topics, or many more topics than teams.

- File Changed: lottery_controller.rb

- Code Changes:

```ruby
# adjust the max limit of teams (topics per team) so that topic assignment is balanced
balanced_max_limit_of_teams = ((all_topics.length * assignment.max_reviews_per_submission) / teams.length.to_f).ceil
balanced_max_limit_of_teams = [balanced_max_limit_of_teams, assignment.max_reviews_per_submission].min

# assign topics to teams, walking the sorted score list
sorted_list.each do |s|
  # adjust the max limit of topics (teams per topic) so that topic assignment is balanced
  balanced_max_limit_of_topics = ((teams.length * max_limit_of_topics[s.topic_id]) / all_topics.length.to_f).ceil
  balanced_max_limit_of_topics = [balanced_max_limit_of_topics, max_limit_of_topics[s.topic_id]].min

  # bids scoring below base use the full limits; the others use the balanced limits
  topic_limit = s.score < base ? max_limit_of_topics[s.topic_id] : balanced_max_limit_of_topics
  team_limit = s.score < base ? assignment.max_reviews_per_submission : balanced_max_limit_of_teams

  if incomplete_topics[s.topic_id] < topic_limit && incomplete_teams[s.team_id] < team_limit
    SignedUpTeam.create(team_id: s.team_id, topic_id: s.topic_id)
    incomplete_teams[s.team_id] += 1
    incomplete_topics[s.topic_id] += 1
  end
end
```
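The limit arithmetic in the excerpt can be tried in isolation. Below is a plain-Ruby sketch of the same formulas, with counts as plain integers in place of the Rails objects. The helper names balanced_team_limit, balanced_topic_limit and effective_limit are hypothetical, not Expertiza methods.

```ruby
# ceil(topics * max_per_team / teams), capped at max_per_team
def balanced_team_limit(num_topics, num_teams, max_per_team)
  [((num_topics * max_per_team) / num_teams.to_f).ceil, max_per_team].min
end

# ceil(teams * max_per_topic / topics), capped at max_per_topic
def balanced_topic_limit(num_teams, num_topics, max_per_topic)
  [((num_teams * max_per_topic) / num_topics.to_f).ceil, max_per_topic].min
end

# bids scoring below base keep the full limit; the others get the balanced one
def effective_limit(score, base, full_limit, balanced_limit)
  score < base ? full_limit : balanced_limit
end

p balanced_team_limit(3, 10, 2)  # ceil(6 / 10.0) = 1
p balanced_topic_limit(10, 3, 5) # ceil(50 / 3.0) = 17, capped at 5
```

With 10 teams and only 3 topics, a team without preferences is limited to one topic. This spreads the topics across more teams instead of letting the first teams in the list take them all.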

Use of Latest Expertiza Code Base

In the latest revision, our project is based on the latest Expertiza code base.

Test Plan

Manual Testing

- UI testing of the implemented functionality is still to be done.

- No UI has been created for conference paper review bidding yet. Hence the assignment bidding controller is used to test the code manually on a local host to determine its feasibility.

- Log in as an instructor

- Go to an Assignment

- Go to the topics section and add a few topics for the assignment. Then check the enable bidding checkbox.

- Go to the add participants page and add a few students to the assignment.

- Now save the assignment.

- Log in as a student (who is a participant of the assignment).

- Go to the assignment page, then go to the signup sheet and bid for topics.

- Repeat the above step for the other participants.

- Log back in as the instructor, go to the manage assignments page and click the run intelligent assignment button.

- Go to the topics tab of the assignment, where you can view the topic assigned to each team.

Automated Test Cases

- TDD and feature test cases are still to be written. For instance, we will write RSpec tests for the following cases:

- When all the reviewers submit their preferences.

- When there are no preferences from any user.

- When some reviewers submit preferences and others don't.

Edge cases

- Case 1: No reviewer submits a list of preferred topics to review. In this case all topics receive the same bid score, and topics are assigned to users by "balanced assignment" on a first-come, first-served basis.

- Case 2: All reviewers submit exactly the same list of topics to review. Assignment is first-come, first-served.

- Case 3: The number of topics exceeds the number of teams. Some topics may not be reviewed, depending on the limits set by the conference. It is the administrator's responsibility to add more teams in such a situation.